Introduction: The AI Boom Needs Order

A few years ago, producing a decent AI-generated image was a struggle. Google expert Matilde shared how she had to sift through countless bad results during testing. At best, the outcomes looked like a "Francis Bacon painting and at worst, like scenes from John Carpenter movies." Today, high-quality images can be generated within seconds. This improvement shows how fast generative AI is advancing across industries. Companies are seizing the opportunity, using tools like chatbots and AI agents to innovate and work smarter.

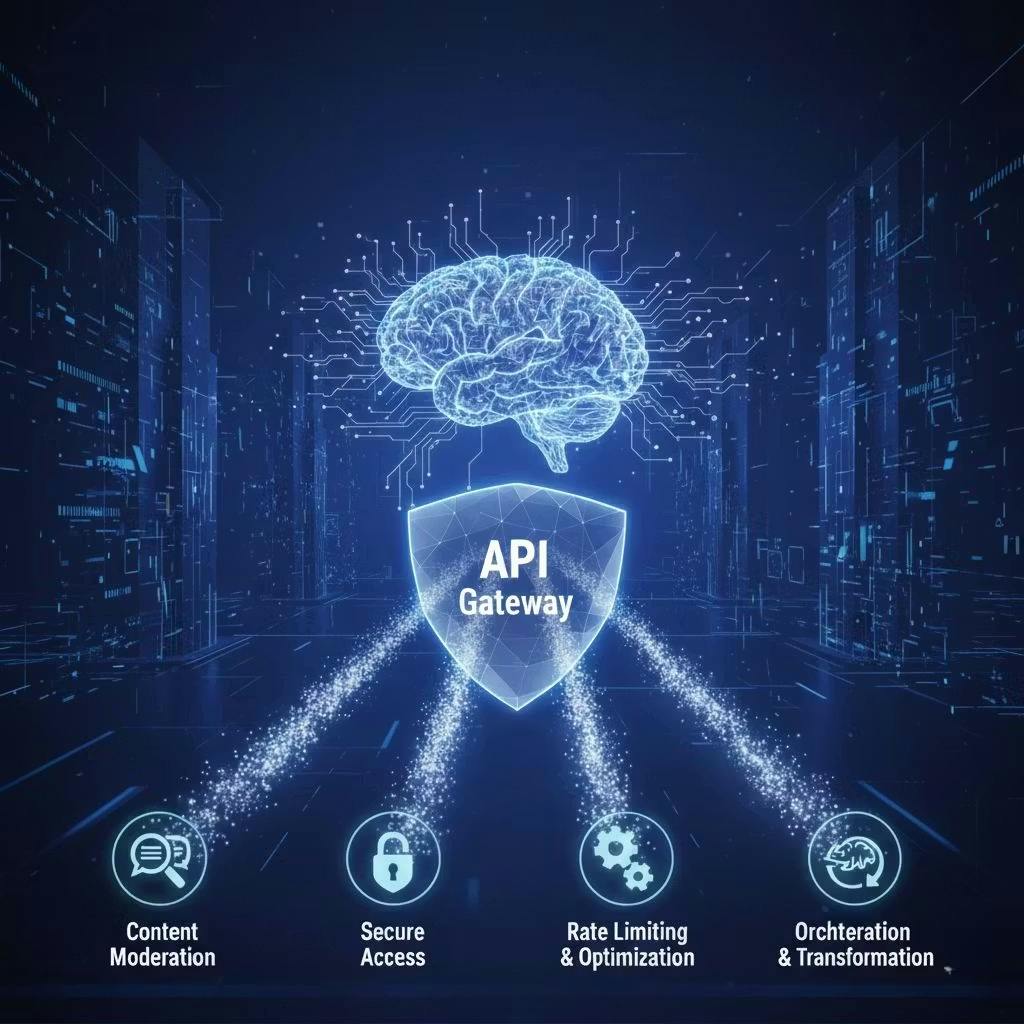

But the rapid adoption of these tools creates difficult problems. Every added AI agent brings its own security risks, unexpected expenses, and control problems. In this messy situation, companies need something to take charge. Fortunately, the key might already be in place. Many organizations already own a vital tool: the API gateway. This article explains four ways this gateway can serve as the main control system for managing your generative AI plans.

1. Treat Your API Gateway Like Your AI Security Guard

An API gateway gives you a single, steady checkpoint to protect against a new wave of AI-related risks.

As businesses add more AI tools and chatbots, they end up introducing a large number of new APIs. Managing this growing API surface requires a unified approach to keep everything under control.

Large language models are exposed as APIs, so the more LLMs you use, the more APIs you work with. As that number grows, you will need to organize all of it in one central system.

The rise of AI brings new and unique dangers, as highlighted by the OWASP Top 10 for LLMs, such as prompt injection and improper output handling. One striking example shows how this can happen. A chatbot denies an attacker direct database access at first. However, when the attacker asks the AI to create code that allows access, the AI does as requested. This is a clear case of prompt injection: the attacker manipulates the LLM's instructions, and the model produces improper output, such as harmful code, that defeats the original security measures. Trying to protect each chatbot individually is both inconsistent and ineffective. When you use an API gateway such as Apigee along with a tool like Model Armor, you set up one central point to apply security rules and protect all your AI apps from threats. The gateway serves as the main line of defense, like a guard watching over the boundary.
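The central checkpoint idea can be illustrated with a minimal sketch. It is not how Apigee or Model Armor work internally; the pattern list, `screen_prompt`, and `handle_request` are hypothetical names chosen for illustration, and a short regex list is far weaker than a dedicated screening service. The point is only that every chatbot's traffic passes through one function where the rules live.

```python
import re
from dataclasses import dataclass

# Hypothetical deny patterns for illustration only; a real deployment would
# call a dedicated screening service instead of a short regex list.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"(write|generate|create) (code|a script) .*(database|credentials)", re.IGNORECASE),
]


@dataclass
class Verdict:
    allowed: bool
    reason: str = ""


def screen_prompt(prompt: str) -> Verdict:
    """Check one prompt against the shared rules; runs once at the gateway."""
    for pattern in SUSPICIOUS_PATTERNS:
        if pattern.search(prompt):
            return Verdict(False, f"matched {pattern.pattern!r}")
    return Verdict(True)


def handle_request(prompt: str, call_model) -> str:
    """Screen the prompt, then forward it to whichever model callable is supplied."""
    verdict = screen_prompt(prompt)
    if not verdict.allowed:
        return "Request blocked by gateway policy."
    return call_model(prompt)
```

Because the rules sit in one place, tightening a pattern updates the protection for every application behind the gateway at once. The same kind of check could also be applied to model responses before they are returned.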

2. Prevent Sensitive Information From Leaking Early

Take on the role of a compliance officer by removing sensitive details before they get to the model.

Businesses often face a big issue when users enter private details such as phone numbers, email addresses, or other types of Personally Identifiable Information (PII) into AI chatbots. If there are no safety measures in place, this data might be stored, used during model training, or shared later, leading to serious privacy and compliance concerns.

The best approach is to use an API gateway to check and clean user inputs before sending them to a Large Language Model. In one example, a user typed a message saying, “Can you remember my email address and the telephone number that I have on my J application?” An Apigee policy caught this and masked the private details before passing the message along. This kind of data masking matters because it keeps both users and companies safe by stopping sensitive details from ever reaching AI systems in the backend. Along with blocking threats, the gateway also acts as a rule enforcer, making sure no sensitive information goes where it shouldn’t.
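As a rough illustration of masking inputs before they reach the model, the sketch below replaces email addresses and phone numbers with placeholders. It is not the Apigee policy from the example; `mask_pii` and both regular expressions are assumptions made for this sketch, and simple patterns like these will miss many real-world PII formats.

```python
import re

# Simplified patterns, assumed for illustration; production PII detection
# typically needs a dedicated service with far broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def mask_pii(text: str) -> str:
    """Replace emails and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text
```

In a gateway, a function like this would run on the request body before it is forwarded, so the backend model only ever sees the placeholders.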

3. Combine Several AI Models to Cut Costs and Work Better

Create a smart routing system that optimizes cost and performance on its own, without needing manual control.

An API gateway does more than handle security; it also plays a big part in efficient operations and cost management. One important approach is model routing, where the gateway decides which AI model to use based on set rules. For instance, a user might begin with a high-performing but pricey model such as Gemini Pro. After they hit a certain token limit, the gateway can switch them to a cheaper option like Gemini Flash.
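The token-limit rule described above could be sketched as follows. The class name `TokenBudgetRouter`, its methods, and the model labels are hypothetical; the labels are placeholders, not exact model identifiers, and a real gateway would persist the counters rather than hold them in memory.

```python
from collections import defaultdict

# Placeholder labels for illustration, not exact API model identifiers.
PREMIUM_MODEL = "gemini-pro"
ECONOMY_MODEL = "gemini-flash"


class TokenBudgetRouter:
    """Route each user to the premium model until a token budget is spent."""

    def __init__(self, premium_token_limit: int):
        self.premium_token_limit = premium_token_limit
        self.tokens_used = defaultdict(int)  # user_id -> tokens consumed so far

    def choose_model(self, user_id: str) -> str:
        if self.tokens_used[user_id] < self.premium_token_limit:
            return PREMIUM_MODEL
        return ECONOMY_MODEL

    def record_usage(self, user_id: str, tokens: int) -> None:
        self.tokens_used[user_id] += tokens
```

The caller asks `choose_model` before each request and reports the tokens consumed afterwards with `record_usage`, so the switch to the cheaper model happens without the user or the application changing anything.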

Another strong optimization is semantic caching. It is smarter than standard caching because it matches requests by meaning rather than by exact text. For example, it can tell that "How much does shipping to New York cost?" and "What are the NY delivery fees?" mean the same thing. It can then return a saved response and skip an expensive, unnecessary request to the LLM.
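One common way to build a semantic cache, sketched here under assumptions, is to compare embedding vectors of queries and reuse an answer when the similarity is high enough. The `SemanticCache` class, the `embed` callable, and the 0.9 threshold are all hypothetical; in practice `embed` would call an embedding model, and the linear scan would be replaced by a vector index.

```python
import math
from typing import Callable, List, Optional, Sequence, Tuple

Vector = Sequence[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class SemanticCache:
    """Return a stored answer when a new query is close enough in meaning."""

    def __init__(self, embed: Callable[[str], Vector], threshold: float = 0.9):
        self.embed = embed          # assumed to map text to a vector
        self.threshold = threshold  # minimum similarity counted as a hit
        self.entries: List[Tuple[Vector, str]] = []

    def get(self, query: str) -> Optional[str]:
        query_vec = self.embed(query)
        best_score, best_answer = 0.0, None
        for vec, answer in self.entries:
            score = cosine_similarity(query_vec, vec)
            if score > best_score:
                best_score, best_answer = score, answer
        return best_answer if best_score >= self.threshold else None

    def put(self, query: str, answer: str) -> None:
        self.entries.append((self.embed(query), answer))
```

The threshold is the main design choice: set it too low and users receive answers to questions they did not ask; set it too high and the cache rarely hits.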

These methods combine to build a flexible system focused on balancing costs and performance. They help businesses offer AI services at the best cost for each interaction, without making developers or users deal with the technical details.

4. Make Your AI a Revenue-Generating Product

Use well-tested strategies for APIs to turn your AI features from a costly tool into a money-making resource.

An API gateway lets businesses turn regular APIs into products they can sell. It can also apply the same idea to AI abilities. This shift changes AI from just being a helpful tool or expense into a controlled and money-making asset.

An API gateway allows businesses to turn AI into a product by offering the right level of control and visibility. It provides token count tracking, detailed analytics on which teams or individuals are using the AI, and rate limiting to balance workload. These tools create a strong base for important decisions, such as charging internal departments based on their AI use. The gateway also creates opportunities for external income by letting third parties access your unique AI agent through a developer portal that includes both documentation and usage analytics.
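To make the link between token tracking, rate limiting, and chargeback concrete, here is a minimal in-memory sketch. `UsageMeter`, its method names, and the flat price per 1,000 tokens are assumptions for illustration; a gateway product would expose these as configurable policies and analytics rather than application code.

```python
from collections import defaultdict
from typing import Dict


class UsageMeter:
    """Track requests and tokens per team for rate limiting and chargeback."""

    def __init__(self, price_per_1k_tokens: float, max_requests_per_window: int):
        self.price_per_1k_tokens = price_per_1k_tokens
        self.max_requests_per_window = max_requests_per_window
        self.requests = defaultdict(int)  # team -> requests in current window
        self.tokens = defaultdict(int)    # team -> total tokens consumed

    def allow_request(self, team: str) -> bool:
        """Count the request and report whether it fits within the quota."""
        if self.requests[team] >= self.max_requests_per_window:
            return False
        self.requests[team] += 1
        return True

    def record_tokens(self, team: str, tokens: int) -> None:
        self.tokens[team] += tokens

    def reset_window(self) -> None:
        """Called by a scheduler when a new rate-limit window starts."""
        self.requests.clear()

    def chargeback_report(self) -> Dict[str, float]:
        """Cost per team, derived from token counts and the flat price."""
        return {
            team: count / 1000 * self.price_per_1k_tokens
            for team, count in self.tokens.items()
        }
```

The same per-team counters that drive an internal chargeback report could feed the usage analytics shown to external developers.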

Conclusion: From Disorder to Command

An API gateway goes beyond being just infrastructure. It acts as the central control layer to manage the challenges of generative AI development. By providing a structured way to handle security and operations, it converts the potential disorder of growing AI usage into a manageable and safe system. It lets organizations innovate without losing control.

AI is becoming a major part of business operations. Are you managing it as carefully as you do your most essential applications?