Table of Links

- Method

- Experiments

- Performance Analysis

Supplementary Material

- Details of KITTI360Pose Dataset

- More Experiments on the Instance Query Extractor

- Text-Cell Embedding Space Analysis

- More Visualization Results

- Point Cloud Robustness Analysis

3 METHOD

3.1 Overview of Our Method

Following Text2Pos [21], our method adopts a coarse-to-fine strategy, as depicted in Fig. 2. In the coarse stage, our text-cell retrieval model processes point clouds and textual cues to identify relevant cells, as detailed in Section 3.2. In the fine stage, our position estimation model directly predicts the final coordinates of the target location from the textual hints and the retrieved cells, as described in Section 3.3. Notably, this approach does not require ground-truth instances as input and exploits spatial relations in both stages. The training objective is described in Section 3.4.
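The control flow of the two stages can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes cells are ranked by cosine similarity between precomputed embeddings, and the names `retrieve_top_k`, `localize`, and the `estimate_position` callable are hypothetical stand-ins for the models of Sections 3.2 and 3.3.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve_top_k(text_emb: Vector, cell_embs: Sequence[Vector], k: int) -> List[int]:
    """Coarse stage (assumed ranking rule): indices of the k most similar cells."""
    scores = [cosine(text_emb, cell) for cell in cell_embs]
    order = sorted(range(len(cell_embs)), key=lambda i: scores[i], reverse=True)
    return order[:k]


def localize(
    text_emb: Vector,
    cell_embs: Sequence[Vector],
    k: int,
    estimate_position: Callable[[int], Tuple[float, float]],
) -> List[Tuple[int, Tuple[float, float]]]:
    """Fine stage: run a (hypothetical) position estimator on each retrieved cell."""
    candidates = retrieve_top_k(text_emb, cell_embs, k)
    return [(idx, estimate_position(idx)) for idx in candidates]
```

Passing the estimator as a callable keeps the sketch independent of how the fine-stage model is built; only the ordering of the two stages is taken from the text.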

3.2 Coarse Text-cell Retrieval

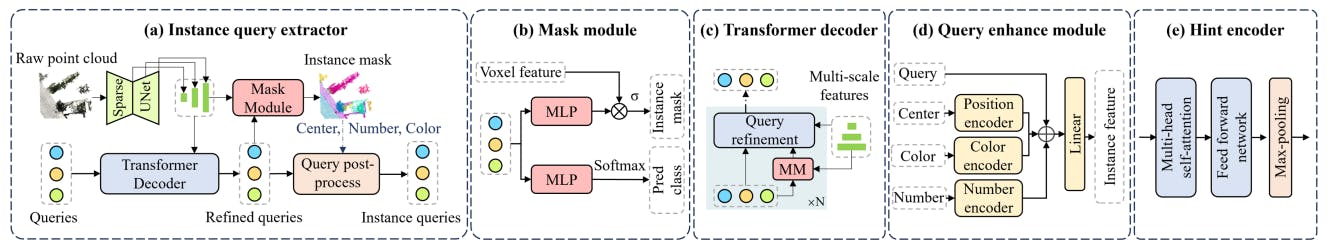

Similar to previous methods [21, 39, 42], we employ a dual-branch model to encode the raw point cloud 𝐶 and the text description 𝑇 into a shared embedding space, as shown in the left part of Fig. 2. To directly encode the point clouds of cells, our 3D cell branch consists of three main components: the instance query extractor, the query enhance module, and the row-column relative position-aware self-attention (RowColRPA) module with a max-pooling layer. The instance query extractor processes the initial queries and the raw point clouds to produce instance queries and instance masks. The query enhance module merges the semantic queries with the corresponding instance mask features to generate the instance features, as depicted in Fig. 3(d). The RowColRPA module with the max-pooling layer then fuses these instance features to generate the cell feature.
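The final fusion step, pooling a variable number of instance features into one cell feature, can be illustrated with a short sketch. It covers only the max-pooling layer and omits the RowColRPA attention that precedes it; the function name `max_pool_instances` and the list-of-lists feature layout are assumptions made for illustration.

```python
from typing import List, Sequence


def max_pool_instances(instance_features: Sequence[Sequence[float]]) -> List[float]:
    """Fuse per-instance features into one cell feature by channel-wise max.

    Each inner sequence is one instance's feature vector; all vectors are
    assumed to share the same length.
    """
    if not instance_features:
        raise ValueError("at least one instance feature is required")
    # zip(*...) groups the values of each channel across all instances.
    return [max(channel) for channel in zip(*instance_features)]
```

Because the maximum is taken per channel, the result does not depend on the order or the number of instances in a cell, which is why this kind of pooling suits a set of detected instances.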

In the notation of the instance query extractor, FFN(·) denotes the feed-forward network, MHSA(·) the multi-head self-attention, and MMHCA(·) the masked multi-head cross-attention. The mask module generates a binary mask for each query, as shown in Fig. 3(b). It first maps the instance queries into the same feature space as the backbone feature F0 using a Multi-Layer Perceptron (MLP). The similarity between the mapped instance queries and F0 is then computed through dot products, and the resulting scores are passed through a sigmoid function and thresholded at 0.5 to yield the binary instance masks. During query post-processing, instance queries are filtered by prediction confidence and combined with characteristics derived from the instance masks to form the final instance queries. Each final instance query is a tuple containing the original query, its center coordinates, its number of points, and its mean RGB color value. The overall architecture and the key modules of the instance query extractor are shown in Fig. 3(a).
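The mask computation and the mask-derived statistics described above can be sketched in a few lines. This is an illustrative sketch under stated assumptions: the queries are taken as already mapped by the MLP, the threshold comparison is strict (`> 0.5`), the center is computed as the mean of the masked points, and the names `binary_masks` and `describe_instance` are hypothetical.

```python
import math
from typing import Dict, List, Optional, Sequence


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def binary_masks(
    mapped_queries: Sequence[Sequence[float]],
    point_features: Sequence[Sequence[float]],
    threshold: float = 0.5,
) -> List[List[bool]]:
    """One mask per query: sigmoid(query . point_feature) > threshold."""
    masks = []
    for query in mapped_queries:
        scores = (sum(q * f for q, f in zip(query, feat)) for feat in point_features)
        masks.append([sigmoid(s) > threshold for s in scores])
    return masks


def describe_instance(
    mask: Sequence[bool],
    xyz: Sequence[Sequence[float]],
    rgb: Sequence[Sequence[float]],
) -> Optional[Dict[str, object]]:
    """Center (assumed: mean of masked points), point count, and mean RGB."""
    idx = [i for i, selected in enumerate(mask) if selected]
    n = len(idx)
    if n == 0:
        return None  # an empty mask yields no instance statistics
    center = tuple(sum(xyz[i][d] for i in idx) / n for d in range(3))
    mean_rgb = tuple(sum(rgb[i][d] for i in idx) / n for d in range(3))
    return {"center": center, "num_points": n, "mean_rgb": mean_rgb}
```

In this sketch a point whose score is exactly zero falls outside the mask, since its sigmoid value equals the threshold; whether the boundary is inclusive is not stated in the text.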

Authors:

(1) Lichao Wang, FNii, CUHKSZ (wanglichao1999@outlook.com);

(2) Zhihao Yuan, FNii and SSE, CUHKSZ (zhihaoyuan@link.cuhk.edu.cn);

(3) Jinke Ren, FNii and SSE, CUHKSZ (jinkeren@cuhk.edu.cn);

(4) Shuguang Cui, SSE and FNii, CUHKSZ (shuguangcui@cuhk.edu.cn);

(5) Zhen Li, SSE and FNii, CUHKSZ, corresponding author (lizhen@cuhk.edu.cn).

This paper is