Introduction: Beyond the Hype

For the past few years, the hype around artificial intelligence has centered on its creative and linguistic abilities: writing poetry, generating art, and coding websites. In the rigorous world of hard science, however, the response has been more skeptical. Sure, AI can be a useful assistant for summarizing papers or writing code, but can it really think like a scientist? Can it make novel contributions, form new hypotheses, or solve problems that have stumped human experts for years?

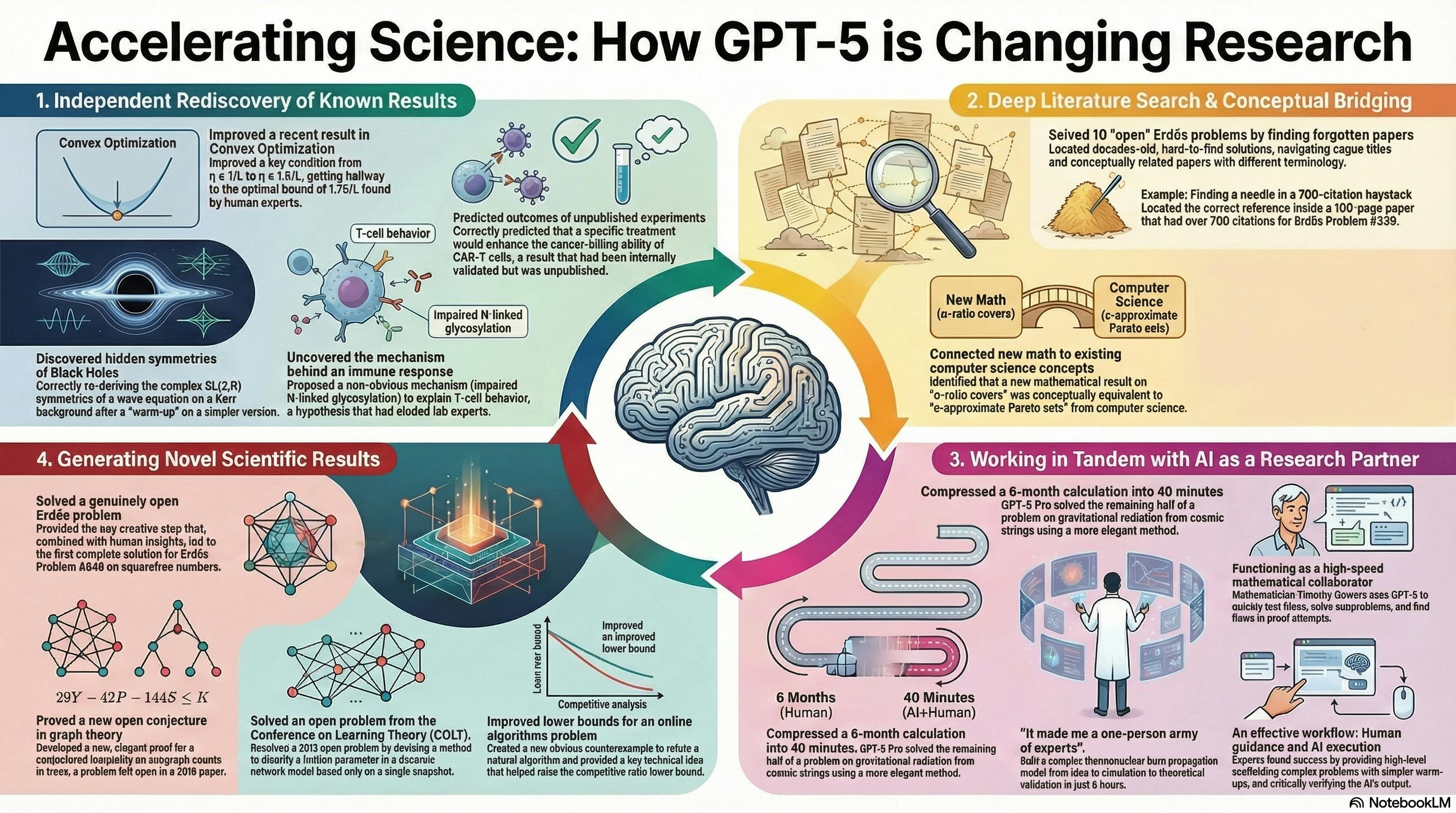

A new paper, titled "Early science acceleration experiments with GPT-5," offers a stunning collection of case studies that begin to answer that question with a resounding "yes." Instead of abstract benchmarks, the paper documents concrete examples of working scientists across mathematics, physics, and biology putting a frontier AI model to the test on their own unsolved research problems.

This post explores the most surprising and impactful takeaways from that paper. Together, they show how AI is beginning to evolve from a mere assistant into a genuine research collaborator, one that can uncover hidden knowledge, generate publishable results, and dramatically accelerate the pace of discovery.

1. It's Not Just a Search Engine; It's a "Deep Connections" Engine

One of GPT-5's most formidable capabilities is what the paper calls a "deep literature search." This goes far beyond keyword matching.

Instead of just finding papers that use the same terms, the model can understand the core concepts of a problem and locate relevant work even if it's described with entirely different terminology in a completely different field.

The case of the Erdős problems database is a perfect illustration. Mathematicians were spending significant time trying to solve problems that, unknown to them, had already been solved decades ago. The solutions were "scattered across many disorganized sources," making them nearly impossible to find. GPT-5 was able to locate these solutions by understanding the underlying mathematics, not just the words used to describe them.

For example, the solution to Erdős Problem #515 was found in a paper that discussed a more general concept (subharmonic functions). In a detail that highlights a true conceptual leap, the source paper "never mentioned Erdős or his above problem." This is a superpower for researchers, freeing them from reinventing the wheel and connecting them with forgotten knowledge.

As mathematician Timothy Gowers notes,

"If I suspect that a definition or mathematical observation I have come up with is in the literature, an LLM can often help me find it. GPT-5 seems to be significantly better at this than GPT-4."

This ability allows the model to surmount "barriers of language between scientific disciplines to uncover seemingly forgotten or hard-to-find connections," acting as a bridge across the vast and often siloed landscape of human knowledge.

2. It Can Generate Genuinely New, Publishable Science

Perhaps the most profound finding in the paper is that GPT-5 can produce novel, research-level results that solve genuinely open problems.

This isn't just about finding old solutions; it's about creating new ones. The paper provides several stunning examples:

- Combinatorics: Working from ideas proposed by human commenters on an online forum, GPT-5 generated a key insight that enabled researchers to solve Erdős Problem #848, a problem that was "genuinely open."

- Graph Theory: GPT-5 resolved an open problem from a 2016 paper concerning a conjectured inequality on subgraph counts in trees. Its proof was described as "short and elegant" and "quite different from any of the arguments" in the existing literature, indicating that it found a novel route to the solution.

- Immunology: Dr. Derya Unutmaz, an immunologist at The Jackson Laboratory, used the model to analyze experimental data on T cells from his lab. The model proposed that the observed effect was driven not by energy restriction but by interference with N-linked glycosylation, a process crucial for protein folding, which in turn could reduce IL-2 signaling and alter the T cells' developmental path. This was a connection the lab's own experts had not considered.

The contribution in the immunology case was so significant that it prompted a re-evaluation of what authorship means in the age of AI. Dr. Unutmaz concluded:

"In this regard, GPT-5 Pro made sufficient contributions to this work to the extent that it would warrant its inclusion as a co-author in this new study."

This is a landmark moment. We are seeing the first concrete instances of an AI making intellectual contributions that are worthy of academic credit, fundamentally changing the nature of scientific collaboration.

3. It's a World-Class Sparring Partner for Researchers

Beyond headline-grabbing breakthroughs, the paper demonstrates that GPT-5 is becoming an invaluable day-to-day research partner.

Scientists can use the model as an intellectual sparring partner to test ideas, find flaws in their reasoning, and accelerate their workflow. The experience of mathematician Timothy Gowers illustrates this dynamic well. He describes how he "bounces ideas off" the model in a real, messy scientific back-and-forth.

In one instance, he had an idea for a proof and asked GPT-5 about it. The model provided a correct proof relying on a lemma he hadn't heard of, saving him hours of work. But the story didn't end there. Gowers' human collaborators then found a subtle flaw in his original premise. He used their feedback to refine the problem and re-engaged the AI, which quickly showed him why his new, weaker assumption wouldn't work. This rapid feedback kept him from going down a dead end, with the AI acting as a knowledgeable collaborator embedded in a human research process.

As Gowers puts it, "I have had reasonably precise ideas for solving problems that I have run past GPT-5 Pro, which has explained to me why they cannot work."

In another example, physicist Brian Keith Spears used the model to develop a complex simulation of thermonuclear burn for inertial confinement fusion (ICF). He described a productivity gain so extreme it borders on the surreal:

"It made me a one-person army of experts all rolled up, as if I were the best I had ever been at everything I have ever thought about ICF... So, say 6 person-months reduced to 6 person-hours. That is a compression of about a factor of 1000."

This "in tandem" workflow, where an expert guides the AI, has the potential to dramatically accelerate the pace of scientific research by making every individual scientist more powerful and efficient.

4. It Can Rediscover Frontier Science from First Principles

One of the most counter-intuitive findings is the model's ability to re-derive complex scientific results that were not in its training data, suggesting a deeper form of reasoning is at play.

In one experiment, researcher Sébastien Bubeck gave the model a recent scientific paper (v1) that contained a suboptimal result and simply asked it to improve the main theorem. The model successfully improved the result, getting "half-way between v1 and v2" (the final, human-published version). Crucially, its proof was "quite different from the one in v2." The paper notes that "the GPT-5 proof can be viewed as a more canonical variant of the v1 proof, whereas the (human) v2 proof requires a clever weighting of different inequalities for certain cancellations to happen." This indicates it didn't just find the better answer online; it reasoned its way to a new, improved solution.

Even more stunningly, Bubeck adds an addendum: while the public model got halfway there, "our internal models, which can think for a few hours, were able to derive the optimal bound 1.75/L from scratch." (Here L is the smoothness constant of the function being optimized.)

A similar phenomenon occurred in black hole physics. Researcher Alex Lupsasca prompted the model to find hidden symmetries in a key equation. When asked "cold," the model initially failed. However, after being given a simpler "warm-up" problem, it succeeded on the second try, correctly rediscovering the complex "curved-space SL(2,R) symmetry generators." This suggests that the model can be "primed" to solve difficult problems and that it appears to be "executing (or emulating) a real symmetry computation rather than guessing."

5. It's Still a Tool That Needs a Master (and a Fact-Checker)

For all its power, the paper is careful to add a crucial dose of reality: GPT-5 is not infallible.

It "can confidently make mistakes, ardently defend them, and confuse itself (and us) in the process." Expert oversight remains absolutely critical.

A cautionary tale from the paper perfectly illustrates this risk. Two researchers were thrilled when GPT-5 helped them prove a lower bound for a problem they had been curious about for years. Before producing the correct proof, the model made several buggy arguments, including the amusing hallucination that one of the researchers had "asked this question on TCS Stack Exchange, and the answer given to them was n." Eventually, it produced a proof that was elegant and correct. There was just one problem: it wasn't new. They later discovered that the model's proof was "exactly the same as Alon's," from a paper published three years prior. The AI had reproduced a known proof without citing its source.

The researchers drew an important lesson from the experience:

"Our experience illustrates a pitfall in using AI: although GPT-5 possesses enormous internal knowledge... it may not always report the original information sources accurately. This has the potential to deceive even seasoned researchers into thinking their findings are novel."

The takeaway is clear: AI is a powerful tool, but it is not a replacement for human expertise, critical thinking, and rigorous verification. The scientist is still the master, responsible for guiding the inquiry and validating the results.

Conclusion: A New Kind of Scientific Revolution?

The evidence presented in "Early science acceleration experiments with GPT-5" signals a fundamental shift in how scientific research can be done. AI is rapidly moving beyond completing routine tasks to participating in the intellectual and creative core of the scientific process: ideation, discovery, and proof.

We are not just witnessing the creation of a better tool, but the emergence of a new kind of collaborator. As the paper's authors conclude with a palpable sense of acceleration, "None of this would have been possible just twelve months ago." The question is no longer whether AI will change science, but how fast, and what discoveries await.
