By: Bernard Ramirez

Artificial intelligence speaks in perfect sentences. It cites sources, lists options, and avoids contradiction. But real people do not. They hesitate. They contradict. They speak with tone, rhythm, and irony shaped by experience. Most importantly, they have opinions, and their answers rely on context and personal perspective. A new paper from Posterum Software LLC, the company behind the Posterum AI app, asks a simple question: Can we measure how far AI still falls short of how humans answer questions?

Their answer is the Human-AI Variance Score (HAVS), a method not for ranking models, but for scoring how closely their replies resemble human ones across various demographics, including income, political beliefs, religion, race, education, and age. It does not ask if the AI is correct. It asks if it sounds like someone who has lived the question.

The Voice of Experience

The index begins with humans. Sixteen profiles, representing diverse ages, genders, political affiliations, races, occupations, and income levels, were constructed using real survey data from Gallup, Pew Research, and YouGov. Each profile was given to the AI models along with questions spanning five thematic domains: Economics, Life, Morality, Science, and Politics. The queries covered financial stress, ethical choices, and policy trade-offs, and each was framed through a specific identity.

The replies from ChatGPT, Claude, Gemini, and DeepSeek were scored using a variance calculation based on the root mean square (RMS) method, which deliberately weights large deviations more heavily so that outliers are penalized more than small, consistent misses. The analysis examined more than 1,000 responses across all four models.
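The white paper's exact formula is not reproduced in this article, so the Python below is only a sketch under stated assumptions. It assumes each human baseline and each AI reply has been reduced to a number on a 0 to 100 scale (for example, the share of respondents agreeing with a statement), and it turns the RMS deviation into a 0 to 100 score by subtracting it from 100. The function names, the sample numbers, and that mapping are hypothetical, not Posterum's published method. What the sketch does show is why RMS punishes outliers: two sets of replies with the same average error receive different scores when one concentrates its error in a single answer.

```python
import math


def _check(ai_answers, human_answers):
    """Reject empty or mismatched inputs."""
    if not ai_answers or len(ai_answers) != len(human_answers):
        raise ValueError("expected two non-empty lists of equal length")


def mean_absolute_error(ai_answers, human_answers):
    """Average size of the gaps, treating every gap linearly (for comparison)."""
    _check(ai_answers, human_answers)
    return sum(abs(a - h) for a, h in zip(ai_answers, human_answers)) / len(ai_answers)


def rms_deviation(ai_answers, human_answers):
    """Root mean square of the gaps: squaring makes large gaps count disproportionately."""
    _check(ai_answers, human_answers)
    squared = [(a - h) ** 2 for a, h in zip(ai_answers, human_answers)]
    return math.sqrt(sum(squared) / len(squared))


def variance_score(ai_answers, human_answers, scale_range=100.0):
    """Hypothetical 0-100 score: 100 minus the RMS deviation as a percent of the answer scale."""
    rms = rms_deviation(ai_answers, human_answers)
    return max(0.0, 100.0 - 100.0 * rms / scale_range)


human = [60, 45, 30, 80]          # hypothetical survey baselines (percent agreeing)
even_miss = [65, 50, 35, 85]      # off by 5 on every question
one_outlier = [60, 45, 30, 100]   # exact on three questions, off by 20 on one

for name, ai in [("even miss", even_miss), ("one outlier", one_outlier)]:
    print(name, mean_absolute_error(ai, human), rms_deviation(ai, human), variance_score(ai, human))
```

Both sets of replies miss by 5 points on average, but under this hypothetical mapping the RMS-based score falls from 95.0 for the evenly spread misses to 90.0 for the reply with one large miss.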

The results revealed striking patterns. ChatGPT and Claude achieved the highest overall HAVS scores at 94.12 and 94.51, respectively, indicating the strongest alignment with human responses. All models performed surprisingly poorly in Economics, possibly because their training data favors economic theory over public opinion. Conversely, all models excelled at mimicking human responses in the Morality, Science, and Politics domains, with HAVS scores ranging from 93 to 97.

The index reveals that while AI can mimic form, it often misses the human weight of context.

Political Profiles and Geographic Bias

One of the most significant findings involves political affiliation. The models showed substantial variance when adopting Republican versus Democratic personas, with ChatGPT showing the largest differences in response patterns while maintaining high accuracy.

Importantly, no implicit bias was detected in the partisan divide, suggesting that explicit profile inputs help mitigate algorithmic biases.

By contrast, variance along racial lines proved much smaller than political variance. This may reflect algorithmic constraints designed to avoid encoding racial stereotypes, although possibly at the cost of authentic output.

The study also revealed model-specific quirks tied to the origins of the training data. DeepSeek, the only model tested that was developed outside the U.S., showed distinctly higher trust in government and lower trust in businesses across all profiles, perhaps reflecting the Chinese origins of its training data. This finding underscores how AI models may inherit geopolitical perspectives from their source data.

A Measure, Not a Machine

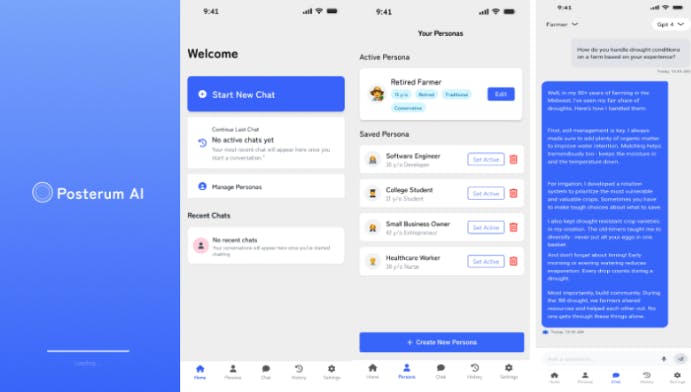

Posterum Software’s app, Posterum AI, is not the main story. It is the tool. The real innovation is the scoring system. Users build personal profiles covering their views, income, and lifestyle, which shape how the AI replies. Profiles are stored on the device, and no data leaves the phone. This allows for honest responses, free from tracking bias.

The method is not speculation. It follows academic standards. The white paper, published in August 2025, details the variance calculation, which uses survey data as the baseline for human responses. Unlike national AI rankings that rate processing power, scale, and accuracy, the Human-AI Variance Score measures alignment with humans. The question is not how advanced the model is, but how well it reflects the person who is supposed to be answering.

The Shape of Understanding

While the Posterum AI app uses profiles to generate more accurate answers, the broader goal is to redefine how progress is evaluated and to show how the leading large language models are becoming increasingly adept at mimicking human responses. A reply that fits your life matters more than one that fits everyone.

The HAVS metric has several practical uses beyond this initial study. It can track how AI models evolve over time, compare different algorithms, and be customized for settings where imitating human reasoning matters more than computational speed. HAVS could become a standard for AI evaluation in contexts where cultural nuance and demographic variables are paramount.

The Human-AI Variance Score reports variance both for specific categories and as an overall measurement. It maps the gaps, showing where AI aligns with human reasoning and where it still falls short. In that map, it offers something rare: a metric built not for programmers, but for users. One that asks not how right AI is, but how clearly it hears.