Table of Links

-

Related Works

2.3 Evaluation benchmarks for code LLMs and 2.4 Evaluation metrics

-

Methodology

-

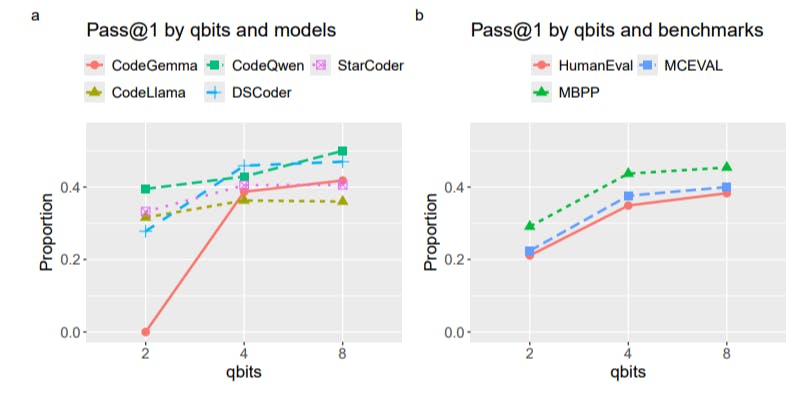

Evaluation

2 Related Works

2.1 Code LLMs

HuggingFace offers a comprehensive repository[1] of models that are free to use. The repository has become a go-to place for anyone interested in AI models including LLMs. As such, HuggingFace is an important resource toward the democratization of AI and LLMs particularly.

More relevant to this study is the Multilingual Code Models Evaluation leaderboard[2] hosted on the HuggingFace ecosystem. The leaderboard maintains a comprehensive list of open-source and permissively licensed code LLMs ranked according to their performance in well-established coding benchmark datasets. Based on this leaderboard, this section reviews some of the better-performing models that are not derivatives of each other.

The well-performing code LLMs (relative to other code LLMs) include CodeLlama [17], CodeQwen 1.5 [18], DeepSeek Coder [19], CodeGemma [20], and StarCoder2 [21]. These code LLMs are trained on large amounts of coding data available on the Internet with popular websites, such as Stack Overflow and GitHub, being one of the primary sources.

vailable on the Internet with popular websites, such as Stack Overflow and GitHub, being one of the primary sources. Many of these code LLMs are available with different parameters. For example, CodeLlama is offered with 7, 13, and 34 billion parameters. While the larger number of parameters increases the model’s performance, it also increases computational demand. For example, CodeLlama 34B may require somewhere between 60-70GB of memory, while CodeLlama 7B may need about 13GB of memory.

All five models listed here are free to use and have either open-source or permissive licenses making them especially suitable for individual users. These are also multilingual code LLMs meaning that the models can generate code in more than one programming language. For example, StarCoder2 supports 17 programming languages while CodeQwen 1.5 claims to support 92 programming languages.

The programming tasks can be roughly divided into three categories [22]. The first is code generation given a natural language prompt. The second is code completion where a snippet of code is provided as a prompt and the model is expected to fill in the missing part. Finally, code understanding is the ability of a model to understand and explain a snippet of code. This study is only concerned with code generation, and the other two categories are ignored. The ranking on the Multilingual Code Models Evaluation leaderboard is also based on the code generation tasks.

2.2 Quantization

Quantization is a compression of a Large Language Model to reduce its size with the least impact on its performance [16]. There are several benefits of applying quantization to LLMs including reduced computational demands and greater accessibility of LLMs. There are two main methods of quantization, Post-Training Quantization (PTQ) and Quantization-Aware Training (QAT). While QAT methods apply quantization during the training process, PTQ methods are applied after the training phase. Because of the computational demand for QAT methods, PTQ methods are more prevalent [23]. This study is therefore concerned with PTQ only.

PTQ methods can compress weights only or both weights and activations in LLMs. Training of LLMs usually happens at full precision, which uses a 32-bit floating point (FP32) to represent each weight and activation. After the training, LLMs can be deployed at half-precision (FP16) to improve their efficiency without a significant reduction in performance. In a quantized model, either weights or both weights and activations are represented with integers. For example, INT4/INT8 is a notation indicating that weights and activations were quantized to 4-bit and 8-bit integers respectively. Having integer bits also makes it more feasible to run a model on a CPU. However, quantizing activations can severely affect performance. Hence, it is common to quantize weights only and leave activations at full or half precision [16].

Zhu, Li, Liu, et al. [23] provides a comprehensive review of PTQ methods both weights-only and weight-activation combined. Among these methods, GPTQ [24] and AWQ [25] are of particular interest since they are the primary methods with which the models on HuggingFace were quantized[3]. Both of these methods apply weights-only quantization.

Several studies evaluate quantized LLMs. Lee, Park, Kwon, et al. [26] evaluated quantized models on 13 benchmark datasets assessing among others common sense knowledge, algorithmic reasoning, language knowledge, instruction following, mathematical reasoning, and conversational skills. The quantized models included Vicuna, Gemma, Llama 2, and Llama 3.1 with parameters ranging from 2B to 405B. The models were quantized with GPTQ, AWQ, and SmoothQuant algorithms to 4- and 8-bit integers. Jin, Du, Huang, et al. [16] used 10 benchmark datasets on mathematical reasoning, language understanding, summarization, instruction following, etc to evaluate quantized Qwen 1.5 models with 7B - 72B parameters. The models were quantized to 2-, 3-, 4-, and 8-bit integers using the GPTQ, LLM.int8(), and SpQR algorithms. Li, Ning, Wang, et al. [27] similarly evaluated 11 quantized models on language understanding, multi-step reasoning, instruction-following, dialogue, and other tasks. Overall, 19 benchmark datasets were used to test OPT, LLaMA2, Falcon, Bloomz, Mistral, ChatGLM, Vicuna, LongChat, StableLM, Gemma, and Mamba, with parameters ranging from 125M to 180B.

In conclusion of this section, there is a particular lack of attention toward quantized code LLMs. Furthermore, in the above-mentioned studies, even quantized general-purpose LLMs such as Qwen 1.5 and Llama 3.1 are not tested against well-known benchmarks with programming tasks. This gap is addressed in the current study.

Author:

(1) Enkhbold Nyamsuren, School of Computer Science and IT University College Cork Cork, Ireland, T12 XF62 ([email protected]).

This paper is available on arxiv under CC BY-SA 4.0 license.

[1] https://huggingface.co/models

[2] https://huggingface.co/spaces/bigcode/bigcode-models-leaderboard