AI agents are no longer futuristic ideas locked inside research labs.

Today, you can build one that reasons about questions, calls real tools, remembers past messages, and responds with a consistent structure.

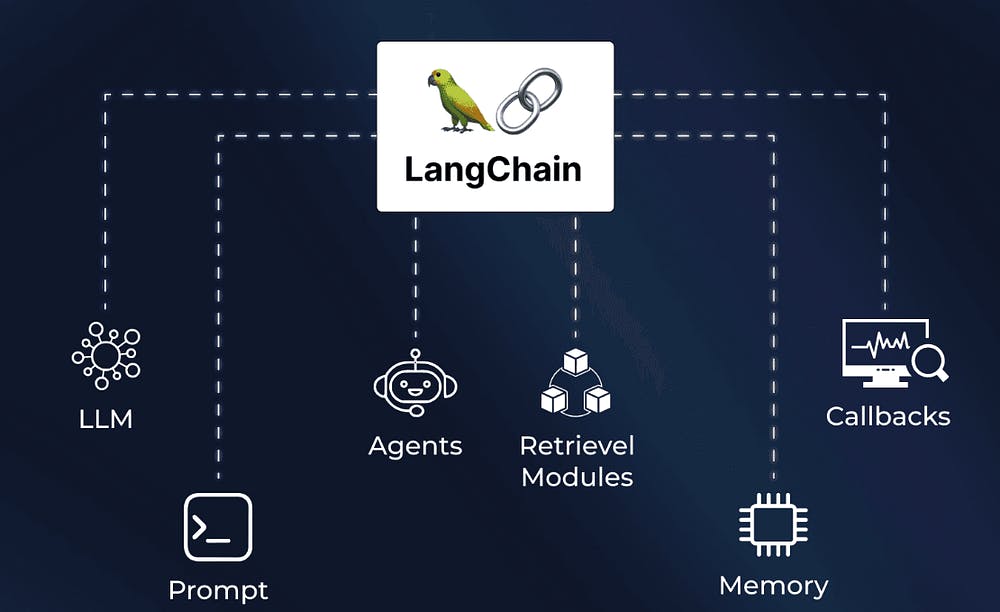

LangChain makes this possible. It offers a framework that blends language models, Python functions, prompts, and memory into a single workflow.

In this tutorial, we will guide you through the process of creating a fully functional agent from scratch.

We will shape it into something that feels less like a script and more like a teammate that can think, act, and adapt in real time.

You can use this Google Colab notebook to follow along with the examples in this article.

What We Will Cover

- The idea behind an agent

- A tiny but clever agent

- Building a Real-World AI Agent

- Teaching the Brain How to Behave

- Shaping the Output So You Can Trust It

- Memory Gives the Agent a Sense of Continuity

- Putting the Agent Together

- Clean, reliable output

- Moving to production

- Conclusion

The idea behind an agent

Think of an agent as a teammate with a brain and hands.

The brain is the language model. The hands are the tools you give it. The rules you write in the system prompt decide how the teammate behaves.

When everything works together, you get a system that can plan, act, remember, and answer predictably.

The magic is that the agent decides when to use tools. You do not hardcode the logic. You let the model reason step by step and choose the right action.

A tiny but clever agent

Here is the smallest agent you can build.

```python
# import the agent creation function
from langchain.agents import create_agent

# Define a tool for the agent to call
def get_weather(city: str) -> str:
    """Get weather for a given city."""
    return f"It's always sunny in {city}!"

# Create an agent with an LLM model along with the tools and a system prompt
agent = create_agent(
    model="gpt-5-mini",
    tools=[get_weather],
    system_prompt="You are a helpful assistant",
)

# Run the agent
agent.invoke(
    {"messages": [{"role": "user", "content": "what is the weather in sf"}]}
)
```

This looks harmless, but something interesting is happening.

The moment you pass get_weather in the tools list, the model understands that it can call a function instead of guessing the weather.

LangChain transforms that plain Python function into a callable tool, describes it to the model, and allows the model to decide when to use it.
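To make the "describes it to the model" step concrete, here is a simplified sketch of how a plain function's name, docstring, and type hints can be turned into a JSON-Schema-style tool description. The helper `describe_tool` and the type mapping are hypothetical, written only for illustration; LangChain's own conversion handles many more cases, so treat this as an approximation of the idea rather than the library's actual output.

```python
import inspect
from typing import get_type_hints

# Hypothetical mapping from simple Python annotations to JSON Schema types
PY_TO_JSON = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_tool(fn):
    """Build a simplified tool description from a function's signature and docstring."""
    hints = get_type_hints(fn)
    hints.pop("return", None)  # the return type is not something the model fills in
    params = inspect.signature(fn).parameters
    properties = {name: {"type": PY_TO_JSON[hints[name]]} for name in params}
    # Parameters without a default value are required
    required = [name for name, p in params.items() if p.default is inspect.Parameter.empty]
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn),
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def get_weather(city: str) -> str:
    """Get weather for a given city."""
    return f"It's always sunny in {city}!"


print(describe_tool(get_weather))
```

The model never sees your Python code, only a description like this one. That is why clear function names, docstrings, and type hints matter: they are what the model uses to decide when and how to call the tool.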

When you run agent.invoke, the agent sees the question, recognises that it is about the weather, and chooses to call get_weather with a city argument such as "sf".

You didn’t write any routing logic. You just gave the model a choice.

This is the essence of agents: you describe, and the model decides.

But real systems need consistency, structure, context, and memory.

You want the agent to follow rules, not improvise. You want it to reply in stable formats, not unpredictable paragraphs. You want it to remember the user.

The next example brings all these pieces together to form a production-ready workflow.

Building a Real-World AI Agent

Let’s start by defining a system prompt. This tells the LLM how it should behave.

```python
SYSTEM_PROMPT = """You are an expert weather forecaster, who speaks in puns. You have access to two tools:

- get_weather_for_location: use this to get the weather for a specific location
- get_user_location: use this to get the user's location

If a user asks you for the weather, make sure you know the location. If you can tell from the question that they mean wherever they are, use the get_user_location tool to find their location."""
```

Here, the system prompt acts like a job description. It defines how the agent should think, what it should care about, and when it should use each tool.

The more specific you are, the more stable the behaviour becomes.

Notice how the rules are written in plain English. Models respond very well to simple, direct instructions. This is how you stop them from drifting into creative chaos.

Now let’s build the tools our model can call.

Tools are just Python functions, but LangChain wraps them so the model can call them at the right moment.

In this part, we will create two tools:

- A tool that returns the weather for any city.

- A tool that figures out the user’s location based on context passed into the agent.

Before we write the code, here is the idea: The agent should not guess anything. If it needs information, it must call the correct tool. This makes the system predictable and safe.

Let’s write the two tools our agent will need.

```python
from dataclasses import dataclass

from langchain.tools import tool, ToolRuntime

# ---------------------------------------
# Tool 1: Returns weather for a given city
# ---------------------------------------
@tool
def get_weather_for_location(city: str) -> str:
    """
    A simple tool that returns weather information.
    In a real system, this could call a weather API.
    """
    return f"It's always sunny in {city}!"


# ---------------------------------------
# Context object for injecting user info
# LangChain will attach this to runtime
# so tools can access user-specific data.
# ---------------------------------------
@dataclass
class Context:
    user_id: str


# ---------------------------------------
# Tool 2: Returns user location using context
# ---------------------------------------
@tool
def get_user_location(runtime: ToolRuntime[Context]) -> str:
    """
    A context-aware tool.
    It reads the user_id from runtime.context and returns
    the user's location. You can replace this with a real
    database or user profile lookup.
    """
    user_id = runtime.context.user_id
    # Simple rule: user_id "1" lives in Florida, others in SF.
    return "Florida" if user_id == "1" else "SF"
```

The first tool is straightforward: the agent calls it when it already knows the city.

The second tool is the interesting part. Instead of taking arguments from the model, it receives a runtime object that LangChain injects automatically and hides from the model.

LangChain injects runtime data so tools have access to user context without passing it manually. That context might include user ID, permissions, preferences, or anything else relevant to the task.

This means your agent does more than answer questions. It adapts its behaviour based on who is using it. You get personalised responses without adding complicated logic inside the agent itself.

ToolRuntime may look complex, but it serves a simple purpose: it gives tools automatic access to the context passed into the agent, such as a user ID or preferences, without you forwarding those values manually on every call.

This keeps the tool logic clean while still enabling personalised behaviour.
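The injection idea can be sketched without LangChain at all. In this hypothetical example, `Runtime` stands in for `ToolRuntime` and `call_tool` plays the role of the agent's tool executor: the model supplies only the arguments it can see, and the executor adds the per-request context. `Runtime`, `model_visible_params`, and `call_tool` are illustrative names, not LangChain APIs, and detecting the injected parameter by the name `runtime` is a simplification made for this sketch.

```python
import inspect
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Context:
    user_id: str


@dataclass
class Runtime:
    """Hypothetical stand-in for ToolRuntime: carries the per-request context."""
    context: Any


def get_user_location(runtime: Runtime) -> str:
    """Return the user's location using the injected context."""
    return "Florida" if runtime.context.user_id == "1" else "SF"


def model_visible_params(fn: Callable) -> list:
    """Parameters the model is asked to fill in: everything except `runtime`."""
    return [name for name in inspect.signature(fn).parameters if name != "runtime"]


def call_tool(fn: Callable, model_args: dict, context: Any) -> Any:
    """Combine the model's arguments with an injected runtime if the tool asks for one."""
    kwargs = dict(model_args)
    if "runtime" in inspect.signature(fn).parameters:
        kwargs["runtime"] = Runtime(context=context)
    return fn(**kwargs)


print(model_visible_params(get_user_location))                  # []
print(call_tool(get_user_location, {}, Context(user_id="1")))   # Florida
print(call_tool(get_user_location, {}, Context(user_id="42")))  # SF
```

Because the model sees no parameters for this tool, it cannot invent or tamper with the user ID; the value always comes from your application.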

With just a small dataclass and a tool decorator, LangChain gives you a clean way to build context-aware tools that make your agent feel smarter, more responsive, and more human.

Teaching the Brain How to Behave

The language model is the agent’s brain. It decides how to interpret questions, when to call tools, and how to phrase responses.

To keep it stable and predictable, we configure it before building the agent.

Before showing the code, here is what we are doing:

- Choosing which model the agent will use.

- Setting how creative it should be.

- Setting how long to wait for a response.

- Limiting how much text it is allowed to generate.

These settings decide how the agent behaves in every conversation.

Let’s initialise the model.

```python
from langchain.chat_models import init_chat_model

# ---------------------------------------
# Configure the language model
# ---------------------------------------
model = init_chat_model(
    # The model to use for reasoning and tool-calling
    "gpt-5-mini",
    # How creative the model can be.
    # Lower values = more factual, consistent answers.
    # Note: some reasoning models (the GPT-5 family among them) may only accept
    # the default temperature; if the provider rejects 0.5, remove this argument.
    temperature=0.5,
    # Maximum time (in seconds) the model can take to respond.
    timeout=10,
    # Upper limit on response length.
    # Helps control cost and prevents overly long messages.
    max_tokens=1000,
)
```

Language models behave differently depending on their configuration:

Temperature controls creativity. A low temperature makes the agent consistent and predictable. A higher one makes it more playful, but also more risky.

Timeout controls patience. If a model gets stuck, this prevents requests from hanging forever.

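Max tokens controls cost and length. Capping output size keeps costs predictable and prevents runaway responses, but a response that hits the limit is cut off rather than made more concise, so leave enough room for a complete answer.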
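To see what temperature does mechanically, here is a small sketch of temperature-scaled softmax, the standard way sampling temperature is described: each raw score is divided by the temperature before being turned into probabilities. The scores below are made-up numbers, and providers may layer other sampling settings on top, so read this as an illustration of the principle rather than any provider's exact implementation.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities after dividing each by the temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate next tokens
for t in (0.2, 0.5, 1.0, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(f"temperature={t}: {[round(p, 3) for p in probs]}")
```

As the temperature rises, the top candidate's probability shrinks and the alternatives become more likely, which is exactly the "more playful, but also more risky" trade-off described above.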

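When you configure a model with init_chat_model, these settings are fixed on the model object, so every call the agent makes uses the same configuration.

That doesn't make answers identical (sampling at a non-zero temperature still varies), but it keeps behaviour within consistent bounds.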

In production, this kind of consistency matters. It keeps your system stable and prevents unpredictable behaviour that could break downstream logic.

Shaping the Output So You Can Trust It

Up to this point, the agent can think and act. But its answers still come as free-form text, which is risky in real systems.

Production applications need consistent fields, stable structures, and predictable formatting.

To achieve this, we define a response schema. LangChain uses this schema to force the model to respond in a clean, structured way.

Before showing the code, here is what you are doing:

- Creating a data class that represents the exact shape of the final answer.

- Telling LangChain to use this schema when parsing the model’s output.

- Ensuring every response is machine-readable and safe to process.

Let’s define the response structure.

```python
from dataclasses import dataclass

# ---------------------------------------
# Define the exact structure the agent must return
# ---------------------------------------
@dataclass
class ResponseFormat:
    # A playful weather message generated by the model
    punny_response: str
    # Optional field for conditions like "sunny", "cloudy", etc.
    weather_conditions: str | None = None
```

This is where the agent becomes production-ready.

Instead of letting the model write any paragraph it wants, you give it a strict format. LangChain ensures the final response follows the schema exactly.

This means:

- The location of each piece of information is always the same.

- Your code never has to guess what the model meant.

- Errors drop because the structure is enforced.

- Logging, debugging, and testing become far easier.

- Downstream services don’t need regex or parsing hacks.

By shaping the output, you turn a creative model into a dependable system. It still speaks naturally, but the underlying data stays clean, stable, and safe to use.
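As a small illustration of the "no parsing hacks" point, a dataclass instance converts straight to JSON with the standard library and back again. The sample values are made up; the class mirrors the `ResponseFormat` defined above, written with `Optional[str]`, which is equivalent to `str | None`.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class ResponseFormat:
    punny_response: str
    weather_conditions: Optional[str] = None


# A made-up example of what structured_response might contain
result = ResponseFormat(punny_response="Sun-believable weather today!", weather_conditions="sunny")

# Serialize for an API response, a queue, or a log line: no regex needed
payload = json.dumps(asdict(result))
print(payload)  # {"punny_response": "Sun-believable weather today!", "weather_conditions": "sunny"}

# Downstream code can rebuild the same object from the JSON
restored = ResponseFormat(**json.loads(payload))
print(restored == result)  # True
```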

Memory Gives the Agent a Sense of Continuity

An agent without memory acts like it has amnesia. It forgets who you are, what you asked earlier, and what tools it has already used.

To make an agent feel natural and consistent, it needs a way to store what happened in previous messages. LangChain agents get this through a checkpointer, which saves the conversation state after each step and loads it again the next time you send a request in the same conversation thread.

Here is what we will be doing:

- Creating an in-memory storage object.

- Allowing the agent to save conversation history to that storage.

- Making the agent remember user identity, previous answers, and tool calls.

This instantly makes the agent feel more like a real assistant.

Now, let’s add memory to the agent.

```python
from langgraph.checkpoint.memory import InMemorySaver

# ---------------------------------------
# Simple in-memory storage for conversation history
# ---------------------------------------
checkpointer = InMemorySaver()
```

You may have noticed that InMemorySaver is imported from LangGraph, not LangChain. LangChain and LangGraph are separate libraries that work well together; in fact, LangChain's create_agent is built on top of LangGraph.

LangChain handles tools, prompts, schemas, and model interaction, while LangGraph manages the control flow and memory features.

Now, with memory enabled, the agent can remember who the user is, recall previous questions, keep track of tool results, and continue the conversation naturally.

It no longer asks “Who are you?” repeatedly. It doesn’t treat every question as brand new. It behaves as if the conversation has context and flow.

InMemorySaver keeps everything in RAM. This is perfect for demos, tests, and local development.

But in production, memory must survive restarts. For that, replace InMemorySaver with a database-backed checkpointer, such as one that stores state in Postgres or Redis.

With a persistent checkpointer, your agent remembers everything across sessions, servers, and deployments.
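The core idea behind a persistent checkpointer (state saved per thread, outside process memory) can be illustrated with the standard library alone. The `SQLiteHistory` class below is a hypothetical sketch, not LangGraph's API: real checkpointers store full snapshots of the agent's graph state rather than a flat list of messages, and in production you would use one of LangGraph's database-backed savers, such as the Postgres saver from the `langgraph-checkpoint-postgres` package.

```python
import json
import os
import sqlite3
import tempfile


class SQLiteHistory:
    """Hypothetical thread-keyed message store that survives process restarts."""

    def __init__(self, path: str):
        self.conn = sqlite3.connect(path)
        # One row per message, tagged with the conversation's thread_id
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS messages ("
            "seq INTEGER PRIMARY KEY AUTOINCREMENT, thread_id TEXT NOT NULL, message TEXT NOT NULL)"
        )

    def append(self, thread_id: str, message: dict) -> None:
        with self.conn:  # commits on success, rolls back on error
            self.conn.execute(
                "INSERT INTO messages (thread_id, message) VALUES (?, ?)",
                (thread_id, json.dumps(message)),
            )

    def load(self, thread_id: str) -> list:
        rows = self.conn.execute(
            "SELECT message FROM messages WHERE thread_id = ? ORDER BY seq",
            (thread_id,),
        ).fetchall()
        return [json.loads(row[0]) for row in rows]

    def close(self) -> None:
        self.conn.close()


# Write a message, simulate a restart by closing and reopening, then read it back
path = os.path.join(tempfile.mkdtemp(), "history.db")
store = SQLiteHistory(path)
store.append("1", {"role": "user", "content": "what is the weather outside?"})
store.close()

store = SQLiteHistory(path)
print(store.load("1"))  # [{'role': 'user', 'content': 'what is the weather outside?'}]
print(store.load("2"))  # []
```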

Memory is what turns a tool-calling script into a companion that understands continuity.

Putting the Agent Together

Everything we have built so far (the model, tools, system prompt, context, memory, and response format) now comes together to form a complete agent.

This is where the system stops being a set of separate parts and starts behaving like one intelligent unit.

Let’s use the system prompt, tools, context, response format and memory and build our agent.

```python
from langchain.agents import create_agent
from langchain.agents.structured_output import ToolStrategy

# ---------------------------------------
# Create the final agent by combining:
# - model: the brain
# - system_prompt: the rules
# - tools: the actions it can take
# - context_schema: user-specific info
# - response_format: structured outputs
# - checkpointer: memory for continuity
# ---------------------------------------
agent = create_agent(
    model=model,
    system_prompt=SYSTEM_PROMPT,
    tools=[get_user_location, get_weather_for_location],
    context_schema=Context,
    response_format=ToolStrategy(ResponseFormat),
    checkpointer=checkpointer,
)

# ---------------------------------------
# Thread ID groups all messages into a single session.
# Reusing the same ID lets the agent remember context.
# ---------------------------------------
config = {"configurable": {"thread_id": "1"}}

# ---------------------------------------
# Invoke the agent with:
# - a user message
# - the session config
# - the user context (user_id)
# ---------------------------------------
response = agent.invoke(
    {"messages": [{"role": "user", "content": "what is the weather outside?"}]},
    config=config,
    context=Context(user_id="1"),
)

# Print the final structured output
print(response["structured_response"])
```

This is the moment everything connects.

The agent follows a predictable internal sequence: read the prompt rules, examine the conversation history, choose whether to call a tool, run the tool with the correct arguments, and finally format the response according to your schema. The tool-calling steps repeat until the model has what it needs to answer, and the whole cycle runs again for every new message.
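Here is a deliberately simplified sketch of that loop in plain Python. `scripted_model` is a hypothetical stand-in for the LLM that returns either a tool call or a final answer, and `run_agent` is an illustration only, not how `create_agent` is implemented (LangChain builds the loop as a LangGraph graph). The `max_steps` guard is an assumption added so a confused model cannot loop forever.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class ModelTurn:
    """One step of output from a (hypothetical) model: a tool call or a final answer."""
    tool_name: Optional[str] = None
    tool_args: dict = field(default_factory=dict)
    final_answer: Optional[str] = None


def run_agent(model: Callable[[list], ModelTurn], tools: dict, user_message: str, max_steps: int = 5) -> str:
    """Alternate between model and tools until the model produces a final answer."""
    messages = [{"role": "user", "content": user_message}]
    for _ in range(max_steps):
        turn = model(messages)
        if turn.final_answer is not None:
            return turn.final_answer
        # The model asked for a tool: run it and feed the result back into the history
        result = tools[turn.tool_name](**turn.tool_args)
        messages.append({"role": "tool", "name": turn.tool_name, "content": result})
    raise RuntimeError("agent did not finish within max_steps")


def scripted_model(messages: list) -> ModelTurn:
    """Stand-in for the LLM: find the location, then the weather, then answer."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return ModelTurn(tool_name="get_user_location")
    if len(tool_results) == 1:
        return ModelTurn(tool_name="get_weather_for_location", tool_args={"city": tool_results[0]["content"]})
    return ModelTurn(final_answer=tool_results[-1]["content"])


tools = {
    "get_user_location": lambda: "Florida",
    "get_weather_for_location": lambda city: f"It's always sunny in {city}!",
}

print(run_agent(scripted_model, tools, "what is the weather outside?"))
# It's always sunny in Florida!
```

Notice that `run_agent` contains no weather-specific routing: which tool runs next is decided entirely by the model's output, which is the "you describe, and the model decides" idea from earlier.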

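ToolStrategy ensures that the model’s final response follows the dataclass schema you defined. It does this by presenting the schema to the model as a special tool and treating the arguments of that tool call as the final answer. Without it, the model might return loosely formatted text.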

With ToolStrategy, LangChain parses and validates the output so your application always receives clean, predictable data.
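The validation step can be sketched like this. Assume the model has answered by "calling" a tool built from the schema, producing a dictionary of arguments; the hypothetical helper `parse_structured` rejects unknown fields, missing required fields, and wrong types before building the dataclass. This only illustrates the idea; LangChain's actual parsing, error messages, and retry behaviour differ.

```python
from dataclasses import MISSING, dataclass, fields
from typing import Optional, Union, get_args, get_origin, get_type_hints


@dataclass
class ResponseFormat:
    punny_response: str
    weather_conditions: Optional[str] = None


def parse_structured(args: dict, schema):
    """Check tool-call arguments against a dataclass schema, then build an instance."""
    hints = get_type_hints(schema)
    names = {f.name for f in fields(schema)}
    unknown = set(args) - names
    if unknown:
        raise ValueError(f"unexpected fields: {sorted(unknown)}")
    for f in fields(schema):
        if f.name not in args:
            # A missing field is only acceptable when it has a default
            if f.default is MISSING and f.default_factory is MISSING:
                raise ValueError(f"missing required field: {f.name}")
            continue
        expected = hints[f.name]
        # Optional[str] is Union[str, None]: accept any member of the union
        allowed = get_args(expected) if get_origin(expected) is Union else (expected,)
        if not isinstance(args[f.name], allowed):
            raise ValueError(f"field {f.name!r} should be {expected}")
    return schema(**args)


# Arguments the model might send when it "calls" the ResponseFormat tool
tool_call_args = {"punny_response": "Sun-sational!", "weather_conditions": "sunny"}
print(parse_structured(tool_call_args, ResponseFormat))

try:
    parse_structured({"weather_conditions": "sunny"}, ResponseFormat)
except ValueError as err:
    print(err)  # missing required field: punny_response
```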

The agent now has:

- a model to think

- a prompt to guide behaviour

- tools to take action

- context to personalise responses

- memory to stay consistent

- a schema to format output

When the user asks what the weather is outside, the agent begins by reading the rules in the system prompt to understand how it should respond. It then checks whether it already knows the user’s location, and if not, it calls the get_user_location tool using the context that was injected when the request was made.

Once the agent knows the city, it calls get_weather_for_location to fetch the weather details. Finally, it combines everything and returns a neatly structured response that follows the ResponseFormat schema you defined.

The thread ID acts like a conversation session. If you send more messages with the same ID, the agent remembers who the user is, which tools were called, and the earlier questions and answers.

This creates real conversational continuity.

Clean, reliable output

```text
ResponseFormat(punny_response="I found you — you're in Florida, so the forecast is sun-sational! No clouds about it: it’s always sunny in Florida. Don’t forget your shades — that’s a bright idea!", weather_conditions="It's always sunny in Florida.")
```

When you print structured_response, you don’t get text blobs or guesses. You get a neat Python object that exactly follows the schema you defined.

This makes the agent safe to integrate with dashboards, APIs, automations and backend systems.

No extra parsing. No brittle logic. Just predictable output.

A second call with the same thread_id makes the agent respond in the same pun-packed tone while keeping context. It knows you are still the same person in the same conversation.

```text
ResponseFormat(punny_response='You\'re welcome! Glad I could brighten your day - I\'m always here to "weather" your questions. Want an hourly or 7-day outlook?', weather_conditions=None)
```

The thread_id works like a session key. Every request sent with the same thread_id shares the same memory state.

This is how the agent remembers earlier questions, tool responses, or user details across multiple messages. Changing the thread_id starts a fresh conversation.
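Conceptually, the session behaviour looks like the sketch below: state is looked up by thread ID, so the same ID sees the accumulated history and a new ID starts empty. `ThreadMemory` is a hypothetical in-process illustration, and the follow-up message "thank you!" is made up; InMemorySaver itself stores full checkpoints of the agent's state, not just a list of messages.

```python
class ThreadMemory:
    """Hypothetical store holding one message list per thread_id."""

    def __init__(self):
        self._threads: dict = {}

    def add(self, thread_id: str, role: str, content: str) -> None:
        # setdefault creates the thread's list the first time the ID is seen
        self._threads.setdefault(thread_id, []).append({"role": role, "content": content})

    def history(self, thread_id: str) -> list:
        # Unknown thread IDs return an empty history: a fresh conversation
        return list(self._threads.get(thread_id, []))


memory = ThreadMemory()
memory.add("1", "user", "what is the weather outside?")
memory.add("1", "assistant", "It's sun-sational in Florida!")
memory.add("1", "user", "thank you!")

print(len(memory.history("1")))  # 3
print(memory.history("2"))       # []
```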

This is what makes agents feel coherent rather than robotic.

Moving to production

Once the quickstart becomes familiar, taking the agent to production is simply about adding structure and discipline.

The tools you wrote as placeholders should now do real work, and the in-memory storage should be replaced with a proper database so that memory survives restarts.

Your API needs authentication to protect access, and LangSmith tracing should be enabled so every tool call and model decision can be monitored.

The system prompt must stay clear and consistent to avoid drift, and the model settings, like temperature, max tokens, and model choice, should be tuned to balance cost and reliability.

Production agents also need safeguards around rate limits, timeout handling, tool failures, and observability. Adding retries, circuit breakers, structured logging, and LLM error handling ensures the system stays stable under real workloads.
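As one example of those safeguards, here is a minimal retry helper with exponential backoff and jitter, wrapped around a deliberately flaky tool. `call_with_retries` and `flaky_weather` are hypothetical names, not LangChain APIs, and the retried exception types and delays are assumptions you would tune for your own services. The injectable `sleep` parameter keeps the demo instant and makes the helper easy to test.

```python
import random
import time


def call_with_retries(fn, *args, retries=3, base_delay=0.5,
                      retry_on=(TimeoutError, ConnectionError), sleep=time.sleep, **kwargs):
    """Call fn(*args, **kwargs), retrying transient errors with exponential backoff."""
    for attempt in range(retries + 1):
        try:
            return fn(*args, **kwargs)
        except retry_on:
            if attempt == retries:
                raise  # out of retries: let the caller handle the failure
            delay = base_delay * (2 ** attempt)  # 0.5s, 1s, 2s, ...
            sleep(delay + random.uniform(0, delay / 2))  # jitter avoids synchronized retries


# A deliberately flaky tool: times out twice, then succeeds
attempts = {"n": 0}


def flaky_weather(city: str) -> str:
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise TimeoutError("weather API timed out")
    return f"It's always sunny in {city}!"


# sleep is replaced with a no-op so the demo runs instantly
print(call_with_retries(flaky_weather, "SF", sleep=lambda seconds: None))  # It's always sunny in SF!
print(attempts["n"])  # 3
```

Only transient errors are retried here; a bug in the tool (say, a `KeyError`) surfaces immediately instead of being hidden behind repeated attempts.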

Through all of this, the core agent pattern remains unchanged. You only strengthen the environment around it to make the entire system stable, predictable, and ready for real users.

Conclusion

Agents are not magic. They are predictable systems built from simple parts.

A model that follows instructions. Tools that do things. Prompts that set the rules. Memory that ties a conversation together. And schemas that keep the output clean.

Once you understand how these pieces fit, you can build an agent that feels polished, helpful, and ready for real users.

It can fetch data, store context, call APIs, and answer in structured ways without you writing the logic by hand.

That is what makes LangChain so powerful. It lets you focus on the behavior you want, not the plumbing underneath.

I hope you enjoyed this article. Sign up for my free newsletter, TuringTalks.ai, for more hands-on tutorials on AI. You can also visit my website.