Table of Links

-

Prior Work and 2.1 Educational Objectives of Learning Activities

-

3.1 Multiscale Design Environment

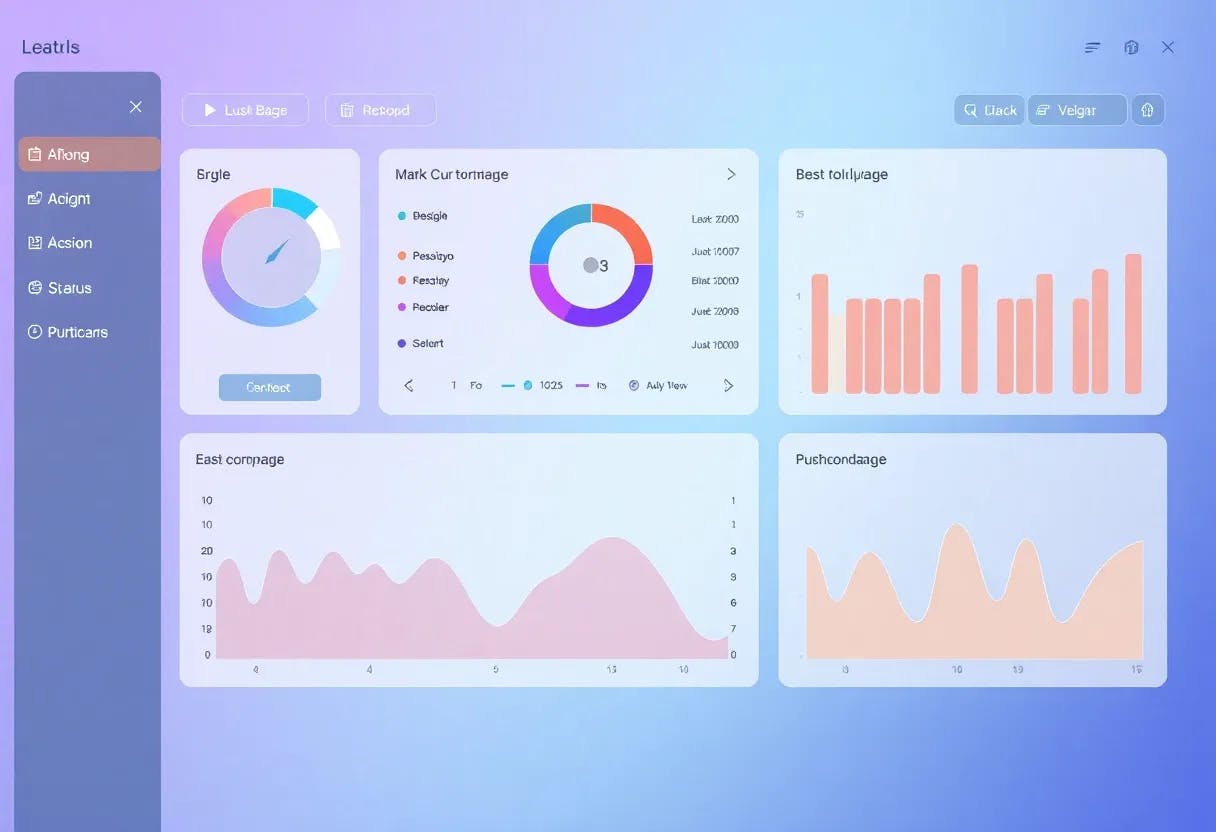

3.2 Integrating a Design Analytics Dashboard with the Multiscale Design Environment

-

5.1 Gaining Insights and Informing Pedagogical Action

5.2 Support for Exploration, Understanding, and Validation of Analytics

5.3 Using Analytics for Assessment and Feedback

5.4 Analytics as a Potential Source of Self-Reflection for Students

-

Discussion + Implications: Contextualizing: Analytics to Support Design Education

6.1 Indexicality: Demonstrating Design Analytics by Linking to Instances

6.2 Supporting Assessment and Feedback in Design Courses through Multiscale Design Analytics

7 CONCLUSION

We took a Research through Design approach and created a research artifact to understand the implications of AI-based multiscale design analytics in practice. Our study demonstrates the potential of multiscale design analytics to give instructors insights into student design work and thereby support their assessment efforts. We focused on supporting users engaged in creative design tasks. Underlying our investigation was our understanding of how multiscale design contributes to teaching and performing these tasks.

We develop multiscale design theory to focus on how people assemble information elements in order to convey meanings. The tasks that students perform in the assignments cross fields. Multiscale design tasks are exploratory search tasks, which involve looking up, learning, and investigating [54]. They are information-based ideation tasks, which involve finding and curating information elements in order to generate and develop new ideas as part of creativity and innovation [41, 44]. They are visual design thinking tasks, which involve forming combinations through sketching and the reverse, sketching to generate images of forms in the mind [34]. They are constructivist learning tasks, in which making serves as a fundamental basis for learning by doing [15, 42, 83]. On the whole, multiscale design has roots in diverse fields and, as we see from our initial study, applications in diverse fields. The scopes of intellectual merit and potential broad impact are wide.

The present research contributes an approach to conveying the meaning of multiscale design analytics derived using AI: linking the dashboard presentation of design analytics with the actual design work that they measure and characterize. Making AI results understandable by humans is fundamental to building their trust in using systems supported by AI [67]. In our study, when the interface presents what is being measured by AI, it allows users to agree or disagree. Specifically, our integration of the dashboard presentation with the actual design environment allowed instructors to independently validate the particular sets of design element assemblages that the AI determined as nested clusters. This makes the interface to the AI-based analytics visible, or as Bellotti and Edwards said, intelligible and accountable [11]. The importance of making AI decisions visible has been noted in the healthcare [18, 80] and criminal justice [26] domains. Likewise, in education, supporting users’ understanding of AI-based analytics is vital, as the measures can directly impact outcomes for an individual. Analytics that do not connect with students’ design work would have little meaning, if any, for instructors. Students, if provided with such analytics, would fail to understand and address the shortcomings that they indicate.

The present investigation, which examined particular multiscale design analytics in particular situated course contexts, has significant implications for future research. We need further investigation of how these, as well as new, multiscale design analytics affect other design education contexts and design in industry. Such research can investigate the extent to which different analytics and visualization techniques (e.g., indexical representation and animation) are beneficial in specific contexts. Actionable insights on design work can prove vital in improving learners’ creative strategies and abilities, which in turn can stimulate economic growth and innovation [56]. Continued efforts toward simultaneously satisfying the dual goals of AI performance and visibility of decisions, across a range of contexts, have the potential to create broad impacts by providing inroads to addressing complex sociotechnical challenges, such as ensuring reliability and trust [67] in the use of AI systems.

REFERENCES

[1] Amina Adadi and Mohammed Berrada. 2018. Peeking inside the black-box: A survey on Explainable Artificial Intelligence (XAI). IEEE Access 6 (2018), 52138–52160.

[2] Nancy E Adams. 2015. Bloom’s taxonomy of cognitive learning objectives. Journal of the Medical Library Association: JMLA 103, 3 (2015), 152.

[3] Robin S Adams, Tiago Forin, Mel Chua, and David Radcliffe. 2016. Characterizing the work of coaching during design reviews. Design Studies 45 (2016), 30–67.

[4] Christopher Alexander. 1964. Notes on the Synthesis of Form. Vol. 5. Harvard University Press.

[5] Patricia Armstrong. 2016. Bloom’s taxonomy. Vanderbilt University Center for Teaching (2016).

[6] Kimberly E Arnold and Matthew D Pistilli. 2012. Course signals at Purdue: Using learning analytics to increase student success. In Proceedings of the 2nd international conference on learning analytics and knowledge. ACM, 267–270.

[7] Yaneer Bar-Yam. 2006. Engineering complex systems: multiscale analysis and evolutionary engineering. In Complex engineered systems. Springer, 22–39.

[8] Evan Barba. 2019. Cognitive Point of View in Recursive Design. She Ji: The Journal of Design, Economics, and Innovation 5, 2 (2019), 147–162.

[9] Benjamin B Bederson. 2011. The promise of zoomable user interfaces. Behaviour & Information Technology 30, 6 (2011), 853–866.

[10] Benjamin B Bederson and Angela Boltman. 1999. Does animation help users build mental maps of spatial information?. In Proceedings 1999 IEEE Symposium on Information Visualization (InfoVis’ 99). IEEE, 28–35.

[11] Victoria Bellotti and Keith Edwards. 2001. Intelligibility and accountability: human considerations in context-aware systems. Human–Computer Interaction 16, 2-4 (2001), 193–212.

[12] Melanie Birks and Jane Mills. 2015. Grounded theory: A practical guide. Sage.

[13] Paulo Blikstein. 2011. Using learning analytics to assess students’ behavior in open-ended programming tasks. In Proceedings of the 1st international conference on learning analytics and knowledge. ACM, 110–116.

[14] Benjamin S Bloom et al. 1956. Taxonomy of educational objectives. Vol. 1: Cognitive domain. New York: McKay 20 (1956), 24.

[15] Phyllis C Blumenfeld, Elliot Soloway, Ronald W Marx, Joseph S Krajcik, Mark Guzdial, and Annemarie Palincsar. 1991. Motivating project-based learning: Sustaining the doing, supporting the learning. Educational psychologist 26, 3-4 (1991), 369–398.

[16] Gabriel Britain, Ajit Jain, Nic Lupfer, Andruid Kerne, Aaron Perrine, Jinsil Seo, and Annie Sungkajun. 2020. Design is (A)live: An Environment Integrating Ideation and Assessment. In CHI Late-Breaking Work. ACM, 1–8.

[17] Ann L Brown. 1992. Design experiments: Theoretical and methodological challenges in creating complex interventions in classroom settings. The journal of the learning sciences 2, 2 (1992), 141–178.

[18] Adrian Bussone, Simone Stumpf, and Dympna O’Sullivan. 2015. The role of explanations on trust and reliance in clinical decision support systems. In 2015 International Conference on Healthcare Informatics. IEEE, 160–169.

[19] Kathy Charmaz. 2014. Constructing grounded theory. Sage.

[20] Bo T Christensen and Linden J Ball. 2016. Dimensions of creative evaluation: Distinct design and reasoning strategies for aesthetic, functional and originality judgments. Design Studies 45 (2016), 116–136.

[21] Andy Cockburn, Amy Karlson, and Benjamin B Bederson. 2009. A review of overview+ detail, zooming, and focus+ context interfaces. ACM Computing Surveys (CSUR) 41, 1 (2009), 1–31.

[22] Deanna P Dannels, Amy L Housley Gaffney, and Kelly Norris Martin. 2011. Students’ talk about the climate of feedback interventions in the critique. Communication Education 60, 1 (2011), 95–114.

[23] John Davies, Erik de Graaff, and Anette Kolmos. 2011. PBL across the disciplines: research into best practice. In The 3rd International Research Symposium on PBL. Aalborg: Aalborg Universitetsforlag.

[24] Shane Dawson, Leah Macfadyen, Evan F Risko, Tom Foulsham, and Alan Kingstone. 2012. Using technology to encourage self-directed learning: The Collaborative Lecture Annotation System (CLAS). In Australasian Society for Computers in Learning in Tertiary Education. 246–255.

[25] Barbara De La Harpe, J Fiona Peterson, Noel Frankham, Robert Zehner, Douglas Neale, Elizabeth Musgrave, and Ruth McDermott. 2009. Assessment focus in studio: What is most prominent in architecture, art and design? International Journal of Art & Design Education 28, 1 (2009), 37–51.

[26] Ashley Deeks. 2019. The Judicial Demand for Explainable Artificial Intelligence. Columbia Law Review 119, 7 (2019), 1829–1850.

[27] Erik Duval. 2011. Attention please!: learning analytics for visualization and recommendation. In Proceedings of the 1st international conference on learning analytics and knowledge. ACM, 9–17.

[28] Clive L. Dym, Alice M. Agogino, Ozgur Eris, Daniel D. Frey, and Larry J. Leifer. 2005. Engineering Design Thinking, Teaching, and Learning. Journal of Engineering Education 94, 1 (jan 2005), 103–120. https://doi.org/10.1002/j.2168-9830.2005.tb00832.x

[29] Charles Eames and Ray Eames. 1968. Powers of ten. Pyramid Films (1968).

[30] Vladimir Estivill-Castro and Ickjai Lee. 2002. Multi-level clustering and its visualization for exploratory spatial analysis. GeoInformatica 6, 2 (2002), 123–152.

[31] William Gaver. 2012. What should we expect from research through design?. In Proceedings of the SIGCHI conference on human factors in computing systems. 937–946.

[32] Dedre Gentner and Albert L Stevens. 2014. Mental models. Psychology Press.

[33] John S Gero and Mary Lou Maher. 1993. Modeling creativity and knowledge-based creative design. Psychology Press.

[34] Gabriela Goldschmidt. 1994. On visual design thinking: the vis kids of architecture. Design studies 15, 2 (1994), 158–174.

[35] William A Hamilton, Nic Lupfer, Nicolas Botello, Tyler Tesch, Alex Stacy, Jeremy Merrill, Blake Williford, Frank R Bentley, and Andruid Kerne. 2018. Collaborative Live Media Curation: Shared Context for Participation in Online Learning. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems. ACM, 1–14.

[36] Meng-Leong How, Sin-Mei Cheah, Yong-Jiet Chan, Aik Cheow Khor, and Eunice Mei Ping Say. 2020. Artificial intelligence-enhanced decision support for informing global sustainable development: A human-centric AI-thinking approach. Information 11, 1 (2020), 39.

[37] Hilary Hutchinson, Wendy Mackay, Bo Westerlund, Benjamin B Bederson, Allison Druin, Catherine Plaisant, Michel Beaudouin-Lafon, Stéphane Conversy, Helen Evans, Heiko Hansen, and Others. 2003. Technology probes: inspiring design for and with families. In Proceedings of the SIGCHI conference on Human factors in computing systems. ACM, 17–24.

[38] Ajit Jain. 2017. Measuring Creativity: Multi-Scale Visual and Conceptual Design Analysis. In Proceedings of the 2017 ACM SIGCHI Conference on Creativity and Cognition. 490–495.

[39] Ajit Jain. 2021. How to Support Situated Design Education through AI-Based Analytics. Ph. D. Dissertation.

[40] Ajit Jain, Andruid Kerne, Nic Lupfer, Gabriel Britain, Aaron Perrine, Yoonsuck Choe, John Keyser, and Ruihong Huang. 2021. Recognizing creative visual design: multiscale design characteristics in free-form web curation documents. In Proceedings of the 21st ACM Symposium on Document Engineering. 1–10.

[41] Ajit Jain, Nic Lupfer, Yin Qu, Rhema Linder, Andruid Kerne, and Steven M. Smith. 2015. Evaluating tweetbubble with ideation metrics of exploratory browsing. In Proceedings of the 2015 ACM SIGCHI Conference on Creativity and Cognition. 53–62.

[42] David H Jonassen. 1994. Thinking technology: Toward a constructivist design model. Educational technology 34, 4 (1994), 34–37.

[43] Andruid Kerne, Nic Lupfer, Rhema Linder, Yin Qu, Alyssa Valdez, Ajit Jain, Kade Keith, Matthew Carrasco, Jorge Vanegas, and Andrew Billingsley. 2017. Strategies of Free-Form Web Curation: Processes of Creative Engagement with Prior Work. In Proceedings of the 2017 ACM SIGCHI Conference on Creativity and Cognition. 380–392.

[44] Andruid Kerne, Andrew M Webb, Steven M Smith, Rhema Linder, Nic Lupfer, Yin Qu, Jon Moeller, and Sashikanth Damaraju. 2014. Using metrics of curation to evaluate information-based ideation. ACM Transactions on Computer-Human Interaction (TOCHI) 21, 3 (2014), 1–48.

[45] Aniket Kittur, Lixiu Yu, Tom Hope, Joel Chan, Hila Lifshitz-Assaf, Karni Gilon, Felicia Ng, Robert E Kraut, and Dafna Shahaf. 2019. Scaling up analogical innovation with crowds and AI. Proceedings of the National Academy of Sciences 116, 6 (2019), 1870–1877.

[46] David R Krathwohl. 2002. A revision of Bloom’s taxonomy: An overview. Theory into practice 41, 4 (2002), 212–218.

[47] Markus Krause, Tom Garncarz, JiaoJiao Song, Elizabeth M Gerber, Brian P Bailey, and Steven P Dow. 2017. Critique style guide: Improving crowdsourced design feedback with a natural language model. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems. 4627–4639.

[48] Hajin Lim. 2018. Design for Computer-Mediated Multilingual Communication with AI Support. In Companion of the 2018 ACM Conference on Computer Supported Cooperative Work and Social Computing. 93–96.

[49] Lisa-Angelique Lim, Sheridan Gentili, Abelardo Pardo, Vitomir Kovanović, Alexander Whitelock-Wainwright, Dragan Gašević, and Shane Dawson. 2019. What changes, and for whom? A study of the impact of learning analytics-based process feedback in a large course. Learning and Instruction (2019), 101202.

[50] Lori Lockyer, Elizabeth Heathcote, and Shane Dawson. 2013. Informing pedagogical action: Aligning learning analytics with learning design. American Behavioral Scientist 57, 10 (2013), 1439–1459.

[51] Nic Lupfer, Hannah Fowler, Alyssa Valdez, Andrew Webb, Jeremy Merrill, Galen Newman, and Andruid Kerne. 2018. Multiscale Design Strategies in a Landscape Architecture Classroom. In Proceedings of the 2018 on Designing Interactive Systems Conference 2018. ACM, 1081–1093.

[52] Nic Lupfer, Andruid Kerne, Rhema Linder, Hannah Fowler, Vijay Rajanna, Matthew Carrasco, and Alyssa Valdez. 2019. Multiscale Design Curation: Supporting Computer Science Students’ Iterative and Reflective Creative Processes. In Proceedings of the 2019 on Creativity and Cognition. ACM, 233–245.

[53] N Lupfer, A Kerne, AM Webb, and R Linder. 2016. Patterns of free-form curation: Visual thinking with web content. Proceedings of the 2016 ACM on Multimedia Conference. http://dl.acm.org/citation.cfm?id=2964303

[54] Gary Marchionini. 2006. Exploratory search: from finding to understanding. Commun. ACM 49, 4 (2006), 41–46.

[55] Richard E Mayer and Roxana Moreno. 2002. Animation as an aid to multimedia learning. Educational psychology review 14, 1 (2002), 87–99.

[56] National Academy of Engineering. 2010. Rising Above the Gathering Storm, Revisited: Rapidly Approaching Category 5. The National Academies Press.

[57] Yeonjoo Oh, Suguru Ishizaki, Mark D Gross, and Ellen Yi-Luen Do. 2013. A theoretical framework of design critiquing in architecture studios. Design Studies 34, 3 (2013), 302–325.

[58] Jane Osmond and Michael Tovey. 2015. The Threshold of Uncertainty in Teaching Design. Design and Technology Education 20, 2 (2015), 50–57.

[59] Antti Oulasvirta, Samuli De Pascale, Janin Koch, Thomas Langerak, Jussi Jokinen, Kashyap Todi, Markku Laine, Manoj Kristhombuge, Yuxi Zhu, Aliaksei Miniukovich, and Others. 2018. Aalto Interface Metrics (AIM) A Service and Codebase for Computational GUI Evaluation. In The 31st Annual ACM Symposium on User Interface Software and Technology Adjunct Proceedings. 16–19.

[60] Abelardo Pardo, Jelena Jovanovic, Shane Dawson, Dragan Gašević, and Negin Mirriahi. 2019. Using learning analytics to scale the provision of personalised feedback. British Journal of Educational Technology 50, 1 (2019), 128–138.

[61] Ken Perlin and David Fox. 1993. Pad: an alternative approach to the computer interface. In Proceedings of the 20th annual conference on Computer graphics and interactive techniques. 57–64.

[62] Yin Qu, Andruid Kerne, Nic Lupfer, Rhema Linder, and Ajit Jain. 2014. Metadata type system: Integrate presentation, data models and extraction to enable exploratory browsing interfaces. In Proc. EICS. ACM, 107–116.

[63] Juan Rebanal, Jordan Combitsis, Yuqi Tang, and Xiang ‘Anthony’ Chen. 2021. XAlgo: a Design Probe of Explaining Algorithms’ Internal States via Question-Answering. In 26th International Conference on Intelligent User Interfaces. 329–339.

[64] Katharina Reinecke, Tom Yeh, Luke Miratrix, Rahmatri Mardiko, Yuechen Zhao, Jenny Liu, and Krzysztof Z Gajos. 2013. Predicting users’ first impressions of website aesthetics with a quantification of perceived visual complexity and colorfulness. In Proc. CHI. ACM, 2049–2058.

[65] Wojciech Samek, Thomas Wiegand, and Klaus-Robert Müller. 2017. Explainable artificial intelligence: Understanding, visualizing and interpreting deep learning models. arXiv preprint arXiv:1708.08296 (2017).

[66] Elizabeth B-N Sanders and Pieter Jan Stappers. 2008. Co-creation and the new landscapes of design. Co-design 4, 1 (2008), 5–18.

[67] Ben Shneiderman. 2020. Human-Centered Artificial Intelligence: Reliable, Safe & Trustworthy. International Journal of Human–Computer Interaction (2020), 1–10.

[68] Simon Buckingham Shum and Ruth Deakin Crick. 2012. Learning dispositions and transferable competencies: pedagogy, modelling and learning analytics. In Proceedings of the 2nd international conference on learning analytics and knowledge. 92–101.

[69] Katrina Sin and Loganathan Muthu. 2015. Application of Big Data in Education Data Mining and Learning Analytics–A Literature Review. ICTACT journal on soft computing 5, 4 (2015).

[70] Steven M Smith, Thomas B Ward, and Ronald A Finke. 1995. The creative cognition approach. MIT press.

[71] Lucy A Suchman. 1987. Plans and situated actions: The problem of human-machine communication. Cambridge university press.

[72] Joshua D Summers and Jami J Shah. 2010. Mechanical engineering design complexity metrics: size, coupling, and solvability. Journal of Mechanical Design 132, 2 (2010).

[73] Edward R Tufte, Nora Hillman Goeler, and Richard Benson. 1990. Envisioning information. Vol. 126. Graphics press Cheshire, CT.

[74] David Turnbull and Helen Watson. 1993. Maps Are Territories Science is an Atlas: A Portfolio of Exhibits. University of Chicago Press.

[75] Barbara Tversky, Julie Bauer Morrison, and Mireille Betrancourt. 2002. Animation: can it facilitate? International journal of human-computer studies 57, 4 (2002), 247–262.

[76] Vladimir L Uskov, Jeffrey P Bakken, Ashok Shah, Nicholas Hancher, Cade McPartlin, and Kaustubh Gayke. 2019. Innovative InterLabs system for smart learning analytics in engineering education. In 2019 IEEE Global Engineering Education Conference (EDUCON). IEEE, 1363–1369.

[77] Katrien Verbert, Erik Duval, Joris Klerkx, Sten Govaerts, and José Luis Santos. 2013. Learning analytics dashboard applications. American Behavioral Scientist 57, 10 (2013), 1500–1509.

[78] Katrien Verbert, Sten Govaerts, Erik Duval, Jose Luis Santos, Frans Assche, Gonzalo Parra, and Joris Klerkx. 2014. Learning dashboards: an overview and future research opportunities. Personal and Ubiquitous Computing 18, 6 (2014), 1499–1514.

[79] Johan Wagemans, James H Elder, Michael Kubovy, Stephen E Palmer, Mary A Peterson, Manish Singh, and Rüdiger von der Heydt. 2012. A century of Gestalt psychology in visual perception: I. Perceptual grouping and figure–ground organization. Psychological bulletin 138, 6 (2012), 1172.

[80] Danding Wang, Qian Yang, Ashraf Abdul, and Brian Y Lim. 2019. Designing theory-driven user-centric explainable AI. In Proceedings of the 2019 CHI conference on human factors in computing systems. 1–15.

[81] Alyssa Friend Wise. 2014. Designing pedagogical interventions to support student use of learning analytics. In Proceedings of the fourth international conference on learning analytics and knowledge. 203–211.

[82] Anbang Xu, Huaming Rao, Steven P Dow, and Brian P Bailey. 2015. A classroom study of using crowd feedback in the iterative design process. In Proceedings of the 18th ACM conference on computer supported cooperative work & social computing. 1637–1648.

[83] Robert E Yager. 1991. The constructivist learning model. The science teacher 58, 6 (1991), 52.

[84] John Zimmerman, Jodi Forlizzi, and Shelley Evenson. 2007. Research through design as a method for interaction design research in HCI. In Proceedings of the ACM CHI. ACM, 493–502.

[85] John Zimmerman, Erik Stolterman, and Jodi Forlizzi. 2010. An analysis and critique of Research through Design: towards a formalization of a research approach. In proceedings of the 8th ACM conference on designing interactive systems. 310–319.

A INTERVIEW QUESTIONS

We used the following questions to guide our semi-structured interviews:

• Please briefly describe your experiences with the courses dashboard.

• Do you think the class would be different with and without the dashboard? If so, how?

• How does how you use the courses dashboard compare with other learning management systems and environments? What is similar? Is anything different?

• Has using the dashboard shown you anything new or unexpected about your students’ learning? If yes, what?

• What do you understand about the analytics presented on the dashboard with submissions?

• Do you utilize analytics? If so, do they support in monitoring and intervening? Assessment and feedback? How?

• If the answer to ‘Do you utilize analytics’ is ‘No’: Do you think these analytics have the potential to become a part of the assessment and feedback that you provide to the students? If so, how?

• What do you think about showing these analytics to students on-demand?

• Did you click on ‘Scales’ analytics? How did seeing its relationship with the actual design work affect your utilization (or potential utilization) for assessment and feedback?

• Did you click on ‘Clusters’ analytics? How did seeing its relationship with the actual design work affect your utilization (or potential utilization) for assessment and feedback?

• Has using the dashboard to follow and track student design work changed how you teach or interact with the students? If so, how?

• What would you do different, if anything, next time you teach the class?

• What are your suggestions for making the dashboard more suited for your teaching and assessment practices? Or for design education in general?

Authors:

(1) Ajit Jain, Texas A&M University, USA; Current affiliation: Audigent;

(2) Andruid Kerne, Texas A&M University, USA; Current affiliation: University of Illinois Chicago;

(3) Nic Lupfer, Texas A&M University, USA; Current affiliation: Mapware;

(4) Gabriel Britain, Texas A&M University, USA; Current affiliation: Microsoft;

(5) Aaron Perrine, Texas A&M University, USA;

(6) Yoonsuck Choe, Texas A&M University, USA;

(7) John Keyser, Texas A&M University, USA;

(8) Ruihong Huang, Texas A&M University, USA;

(9) Jinsil Seo, Texas A&M University, USA;

(10) Annie Sungkajun, Illinois State University, USA;

(11) Robert Lightfoot, Texas A&M University, USA;

(12) Timothy McGuire, Texas A&M University, USA.
