Artificial intelligence has steadily become a major talking point in the tech community, fueling both excitement and anxiety about its future impact on various industries – especially software development. The potential for AI-driven tools to transform coding workflows is undeniably fascinating, yet it also prompts significant questions about our roles as developers in an increasingly automated world.

Rumor has it that 90% of the code written in Y Combinator startups is generated by neural networks.

It’s a striking statistic, one that inevitably leads to the burning question:

“Will AI replace developers, and how soon will it happen?”

Having extensively utilized AI tools in software development over the past year, I’ve developed some firm thoughts on the subject.

I began using AI tools in development by relying on GPT-4o through the ChatGPT web interface for quick explanations and boilerplate code snippets. Soon enough, I discovered Cursor and used it primarily with Claude Sonnet, moving from version 3.5 to 4.

Interestingly, the recent updates to Claude felt underwhelming. I’ve now shifted towards o3 for research and Gemini 2.5 Pro for actual coding tasks, given how impressively Gemini has evolved over the past year and a half.

Over the course of working with various AI tools, one impression has solidified:

While these models can be incredibly helpful assistants, the idea that they’re anywhere close to replacing skilled software developers is - at best - wishful thinking.

This realisation didn’t come overnight. It formed gradually - through dozens of use cases, missteps, breakthroughs, and head-scratching moments. So let’s walk through the assumptions, edge cases, and recurring issues that shaped my view.

Imagining AI as a Full-Fledged Team Member Is Unrealistic (and Just Hilarious)

Let’s visualise this scenario: a contemporary AI system fully replacing a human programmer within a development team. It’s absurd. Despite the impressive capabilities of modern Large Language Models (LLMs), Fortune 500 companies have not fundamentally transformed their workflows with these technologies. And it’s not just due to managerial conservatism; it’s because LLMs fundamentally can’t replicate the full scope of human tasks.

Software developers spend surprisingly little time (often less than 20%) actively writing code. The majority of their work involves conceptualizing solutions, strategic thinking, and, crucially, interacting with other team members. Can you imagine AI agents conducting project meetings or negotiating requirements with management? The notion seems comedic, reinforcing the fact that our human-centric model of work remains irreplaceable.

Moreover, AI systems are far from autonomous. They’re tools, much like a hammer - useful, but only when wielded skillfully by a trained operator. The learning curve may not be steep, but it’s there. You still need to understand how to swing the hammer and, just as importantly, where to aim.

So, who’s doing the aiming? Could managers or people from the business side fill this role, overseeing AI directly? With all due respect to management (and no, I don’t secretly wish they would all lose their jobs instead of developers 🙂), managing people and managing machines are fundamentally different activities. At the end of the day, someone has to sit in the cockpit. And whether you call that person the first pilot (or maybe even the second?) - it’s going to be a programmer.

And so I sat down in that seat. Here’s what I saw.

The Limitations of Current AI Models: Context Limits, Distractions, and Made-Up Problems

As I deepened my use of AI tools, one cluster of issues became increasingly hard to ignore: how these models handle tasks of varying complexity. Not just in terms of code size or logic depth - but in maintaining coherence, resisting the urge to fabricate, and staying focused when tasks aren’t bite-sized.

- Short context window: When a task involves multiple steps or extended reasoning chains, AI frequently loses track of earlier details — even those mentioned just a prompt or two ago. This leads to repeated dead-ends, rehashing discarded ideas, and failing to build cohesive multi-step solutions.

- Invented problems: When asked to review code, AI sometimes hallucinates issues that don’t exist - likely because it feels compelled to give an answer. Even with prompts that ask it not to fabricate, the quality of reviews remains inconsistent.

- Outdated knowledge: Despite MCP (Model Context Protocol) servers like Context7, which feed up-to-date library docs into the model’s context, AI still occasionally relies on deprecated methods or outdated syntax - unless you manually guide it.

- Hallucinations: AI-generated content can be misleadingly confident, even when wrong. This remains risky for inexperienced users who can’t easily separate fact from fiction.

- Scaling issues with large projects: When working with full-scale codebases, AI often loses sight of how parts relate to each other. It might reference non-existent files, confuse module responsibilities, or propose fixes that clash with project architecture. Without human guidance, it lacks the architectural grasp needed to operate reliably across large systems.
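One of the failure modes above, references to non-existent files, is cheap to guard against mechanically before a suggestion is applied. Here is a minimal sketch, assuming the AI’s proposal has already been reduced to a list of relative paths. The function name `find_missing_paths` and the example file names are hypothetical and not part of Cursor or any other tool mentioned here.

```python
import tempfile
from pathlib import Path


def find_missing_paths(repo_root: str, referenced: list[str]) -> list[str]:
    """Return the referenced relative paths that are not files under repo_root."""
    root = Path(repo_root)
    return [rel for rel in referenced if not (root / rel).is_file()]


# Build a throwaway "repo" containing a single real file.
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "src").mkdir()
    (Path(tmp) / "src" / "table.py").write_text("# real module\n")

    # Hypothetical paths mentioned in an AI suggestion; the second is made up.
    suggested = ["src/table.py", "src/filters.py"]
    print(find_missing_paths(tmp, suggested))  # ['src/filters.py']
```

A check like this only catches the crudest mistakes. It says nothing about whether the proposed change fits the project’s architecture, which still takes a human who knows the codebase.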

In short, current LLMs still operate best when the scope is small and self-contained. The moment you stretch their attention - or test their consistency - they unravel fast. They’re clever pattern matchers, not cohesive thinkers, which leads us to the next point…

AI Lacks Genuine Reasoning (and Can’t Stop Being a People-Pleaser)

AI’s inherent inability to reason critically and independently is another core limitation. One particularly striking behavior is its tendency to agree with the user. Simply expressing mild dissatisfaction with its output - whether justified or not - often leads it to start its next reply with something like, “I’m sorry, you’re right” without ever double-checking whether its previous response was actually wrong. This tendency makes it unsuitable for any kind of meaningful intellectual back-and-forth, since exchanging ideas with a people-pleaser is fundamentally ineffective.

The illusion is dangerous: at first glance, it may seem as though the model has a point of view, but in reality, it’s just trying to satisfy you. After spending enough time with these tools, it becomes clear that their core objective isn’t truth or reasoning - it’s compliance.

And ironically, on the rare occasions when AI does try to push back or disagree, the results are even worse. I once had a case where o3 gave an obviously incorrect answer, and when I pointed it out, it began aggressively defending its response. Only after I provided more detailed evidence did it finally back down.

The unsettling part wasn’t just the mistake itself, but how confidently the model doubled down on it - defending a falsehood as if it were fact. To be fair, that kind of confrontation was extremely rare, but it highlights how difficult it is to balance these systems between being too agreeable and overly assertive.

Top Models Fail to Reach a Single Reliable Solution

A particularly telling example came when I ran the same piece of code through three different reviewers - o3, Gemini 2.5 Pro, and the CodeRabbit tool - asking each of them to review it. CodeRabbit flagged two issues that, upon closer inspection, were not actually problems. Gemini 2.5 Pro not only agreed with CodeRabbit’s findings but even added a third supposed issue of its own. However, once I pointed out that the flagged logic was valid and backed it up with documentation, Gemini quickly reversed its stance. Meanwhile, o3 claimed from the beginning that the code had no issues - and in this case, it was right.

But this doesn’t mean o3 is the better model. I’ve seen the exact opposite dynamic play out in other contexts, where Gemini caught subtle bugs that o3 completely missed. It often feels like the results could swing based on prompt phrasing, time of day, or even the model’s mood 😜.

This illustrates a deeper problem: if different top-tier models can’t even agree on whether code is valid, how can we trust any of them to perform autonomous coding tasks without a human in the loop? The idea of cross-validating one AI model with another might sound promising, but in reality, they often fail to reach consensus. One model may just argue more convincingly - not more correctly. Ultimately, to reliably validate problems and evaluate solutions, you still need a human developer. And not just any human - a skilled one with domain experience.

That kind of developer, empowered by AI, will likely outperform others. But flip it around - take a strong AI with no human oversight - and it still falls short of even a careless junior developer. The inconsistency across models shows we’re not even close to safe autonomous tooling. AI may assist, but on its own, it misfires too often to be trusted.
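To make the cross-validation point concrete, here is a small sketch of how reviewer output could be triaged rather than trusted. It assumes each tool’s review has already been normalised into a set of finding labels. The `triage_findings` helper and the `issue-a`/`issue-b`/`issue-c` labels are hypothetical, chosen only to mirror the anecdote above.

```python
from collections import Counter


def triage_findings(reviews: dict[str, set[str]]) -> dict[str, list[str]]:
    """Split findings into those every reviewer reported and those in dispute.

    Agreement is not proof of correctness; it only helps rank what a
    human should look at first.
    """
    counts = Counter(f for findings in reviews.values() for f in findings)
    total = len(reviews)
    unanimous = sorted(f for f, n in counts.items() if n == total)
    disputed = sorted(f for f, n in counts.items() if n < total)
    return {"unanimous": unanimous, "disputed": disputed}


# Two tools flag issues, one reports a clean review (as in the anecdote).
reviews = {
    "coderabbit": {"issue-a", "issue-b"},
    "gemini": {"issue-a", "issue-b", "issue-c"},
    "o3": set(),
}
print(triage_findings(reviews))
# {'unanimous': [], 'disputed': ['issue-a', 'issue-b', 'issue-c']}
```

With these inputs nothing is unanimous, so every finding lands in the disputed pile and goes to a person anyway. Even a unanimous finding would only be a hint, since models can agree on the same wrong answer.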

AI’s Tunnel Vision on Simple Tasks

AI might impress at first glance, but even relatively simple real-world tasks can trip it up in surprising ways. What seems like a win often turns into manual cleanup, and the deeper the stack, the more fragile things become.

- Fails on junior-level tasks without oversight: In one case, I asked Claude Sonnet to implement a filterable data table on the frontend that pulls data from a backend source. A seemingly basic task, the kind you’d give to a junior dev. At first glance, the solution looked solid. But soon I noticed that it had implemented the API logic in such a way that the page kept sending requests in an infinite loop - causing a constant reload. Some filters didn’t behave correctly either. I fixed it manually in a few minutes, but it was a clear reminder: we’re still far from trusting AI with even simple tasks without supervision.

- Prone to reckless hacks: When stuck, AI can act like a desperate developer under deadline pressure. For instance, if tests are failing, it may simply suggest removing them 🙂. Sometimes, it’ll write tests that are essentially meaningless — designed to pass rather than to verify correct behavior. It optimizes for green checkmarks, not logic or edge cases. At times it invents shaky, convoluted workarounds that solve the immediate issue while planting the seeds for future problems.

- Can’t handle complex, multi-layered systems: If it stumbles on isolated prompts, asking it to build something with multiple integrations is a stretch. Coordinating layers of logic, API flows, and infrastructure decisions overwhelms most models. Even when you try to break the problem into smaller chunks, the earlier issues still resurface - just like in the case with the misbehaving table. The stack is simply too complex for it to manage coherently.

- Security concerns are real too: Studies showed that developers using tools like GitHub Copilot wrote significantly less secure code - and were often more confident in its quality than they should’ve been. Almost 36% of code generated by LLMs contained serious security vulnerabilities, which can lead to major financial losses and reputational damage for the companies relying on AI-generated code.
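The “tests designed to pass” problem from the list above is easy to show in miniature. The sketch below is illustrative only: `apply_filter`, its deliberately broken twin, and the sample rows are hypothetical stand-ins for the table filter from the first bullet, not the code from that project.

```python
def apply_filter(rows: list[dict], column: str, value: str) -> list[dict]:
    """Keep only rows whose `column` equals `value`."""
    return [row for row in rows if row.get(column) == value]


def buggy_apply_filter(rows: list[dict], column: str, value: str) -> list[dict]:
    """Deliberately broken variant: ignores the filter entirely."""
    return list(rows)


ROWS = [
    {"name": "Ada", "role": "dev"},
    {"name": "Bob", "role": "manager"},
]


def vacuous_test(filter_fn) -> bool:
    """Only checks that a list comes back, so nearly any implementation passes."""
    return isinstance(filter_fn(ROWS, "role", "dev"), list)


def meaningful_test(filter_fn) -> bool:
    """Checks the filtered content itself, including the no-match case."""
    return (
        filter_fn(ROWS, "role", "dev") == [{"name": "Ada", "role": "dev"}]
        and filter_fn(ROWS, "role", "intern") == []
    )


for fn in (apply_filter, buggy_apply_filter):
    print(fn.__name__, vacuous_test(fn), meaningful_test(fn))
# apply_filter True True
# buggy_apply_filter True False
```

The vacuous test gives a green checkmark for both implementations. Only the test that asserts on actual behavior tells the broken one apart, which is exactly the difference a reviewer has to look for in AI-written test suites.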

In short, AI can look competent when things go smoothly, but the moment friction appears - especially in the form of interdependent logic or non-obvious bugs - it quickly reveals just how far it still is from functioning as a reliable, independent “developer”.

Why You Should Still Use AI (Even If It Drives You Crazy Sometimes)

After all the frustrating moments, it’s fair to ask - is it even worth the trouble? The answer, surprisingly, is still yes. But not without some important caveats.

Let’s break it down.

✅ What AI is genuinely good at:

- Boilerplate generation: It drastically speeds up writing repetitive or template-like code. Even if you know exactly what to write, AI can produce it far faster than you can type.

- Editor assistance: Smart autocompletion and inline agents in tools like Cursor let you move faster within your editor, jumping between files and generating contextual code snippets that often work out of the box.

- Research accelerator: Especially in tools like Cursor, where the full codebase is indexed, AI can quickly point you to relevant parts of the system and summarize code flows.

- Structural codebase understanding: AI helps you grasp the layout and connections within unfamiliar projects more effectively than relying solely on manual search or (often outdated) docs.

⚠️ Where it still struggles:

- Tunnel vision and noise: Even on simple problems, AI can overcomplicate things or go off-track. It often adds unnecessary, unused logic - and when asked why, it’ll just apologize: “You’re right, this wasn’t needed.”

- Weak code reviews: Yes, there are now AI tools that review the code written by other AIs – AGI is near 😂 (no). But in practice, these reviews are far from production-ready. They might flag harmless code while missing obvious inefficiencies or duplications. Even common-sense abstractions, like reusing a utility instead of rewriting the same logic at multiple component levels, can easily go unnoticed.

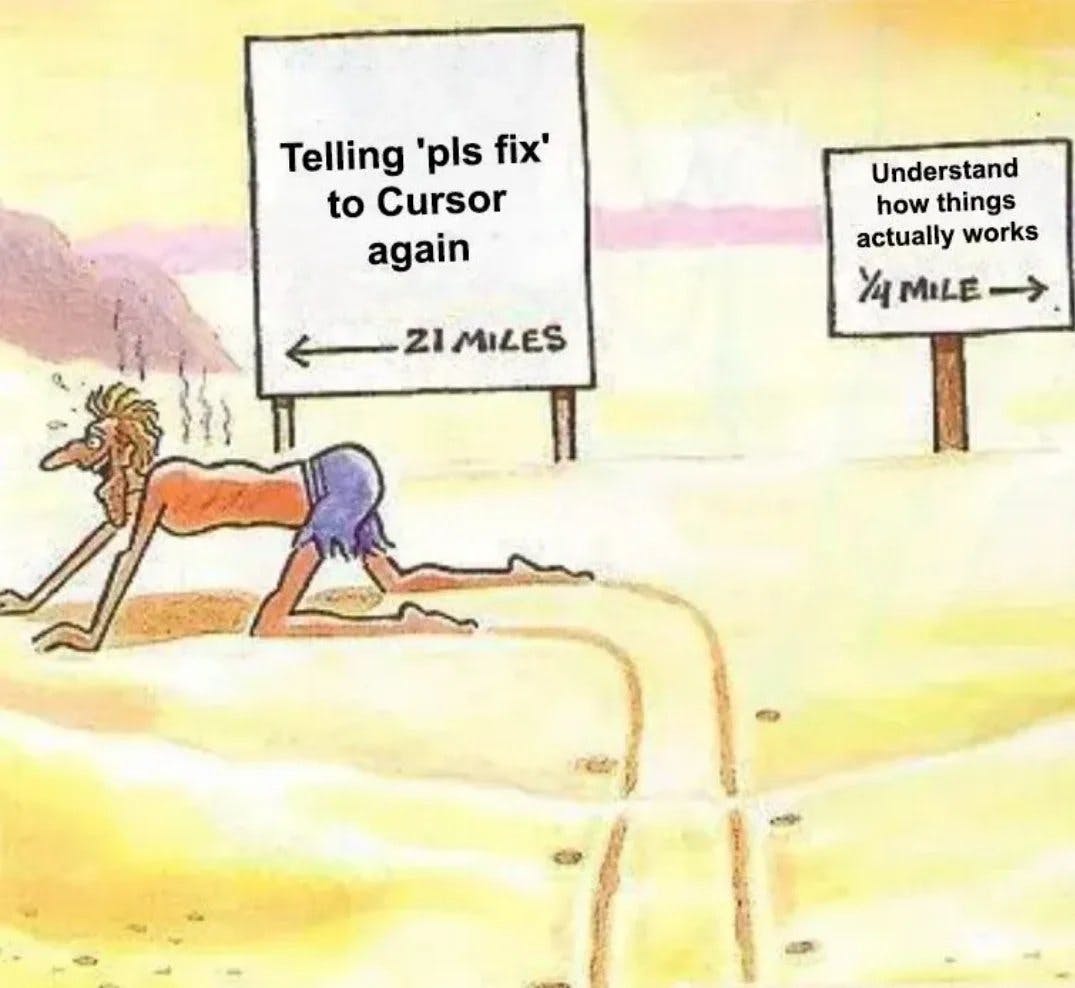

- Bug-hunting limitations: Especially painful for “vibe coders”. If AI locks onto a faulty assumption, you can spend hours untangling its misguided suggestions. While it sometimes helps trace through logs or surface relevant parts of the code, it rarely pinpoints the real issue and even more rarely fixes it cleanly.

- Loses track in thought-heavy tasks: When solving non-trivial problems, the model frequently loses context, proposes off-target ideas, or forgets previously discussed constraints. You have to babysit and course-correct it like a junior developer - sometimes worse.

In other words: AI is a fantastic productivity enhancer, but it’s still not an autonomous unit. It’s more like a research assistant on steroids - brilliant at surfacing relevant chunks, but dangerously overconfident and prone to hallucinations if left unchecked. And to check it properly, you still need a skilled engineer.

So yes, use it. But understand what it is - and what it very much isn’t.

Conclusion

After all the exploration, experimentation, and occasional frustrations, one conclusion is abundantly clear:

AI isn’t about to replace developers anytime soon, but it’s certainly changing the way we work. While AI tools have distinct limitations - ranging from short context windows to frequent misinterpretations and outright fabrications - they remain incredibly valuable if leveraged correctly.

In the short term, the most effective strategy is evident: use AI as a powerful assistant, a productivity booster, and a research companion, not as an autonomous replacement. The winners in this AI revolution will be developers who skillfully integrate these tools into their workflows, understanding both their potential and their boundaries.

Don’t fear AI, but don’t overestimate it either. Embrace it with informed caution and confidence, and you’ll find yourself well-equipped for the challenges - and opportunities - that lie ahead.