Table of Links

-

Experiments

6. Experiments

We conduct extensive experiments on benchmark datasets to evaluate the effectiveness of the proposed LSEnet.[2] Furthermore, we compare with the classic formulation, evaluate the parameter sensitivity, and visualize the hyperbolic portioning tree. Additional results are shown in Appendix D.

6.1. Graph Clustering

Metric & Configuration. Both Normalized Mutual Information (NMI) and Adjusted Rand Index (ARI) (Hassani & Ahmadi, 2020; Li et al., 2022) are employed as the evaluation metric for the clustering results. In LSEnet, we work in the Lorentz model with curvature κ = −1. The MLP of Lorentz assigner has 3 layers. The dimension of structural entropy is a hyperparameter. The parameters are optimized by Riemannian Adam (Becigneul & Ganea, 2019) with a 0.003 learning rate. For all the methods, the cluster number K is set as the number of ground-truth classes, and we report the mean value with standard deviations of 10 independent runs. Further details are in Appendix C.4.

Comparison with State-of-the-art. Clustering results on Cora, Citeseer, AMAP and Computer datasets are collected in Table 1. Concretely, self-supervised GNNs do not generate node clusters themselves, and we apply K-means to obtain the results. In LSEnet, node features are projected to Lorentz model via the exponential map (Eq. 60) with

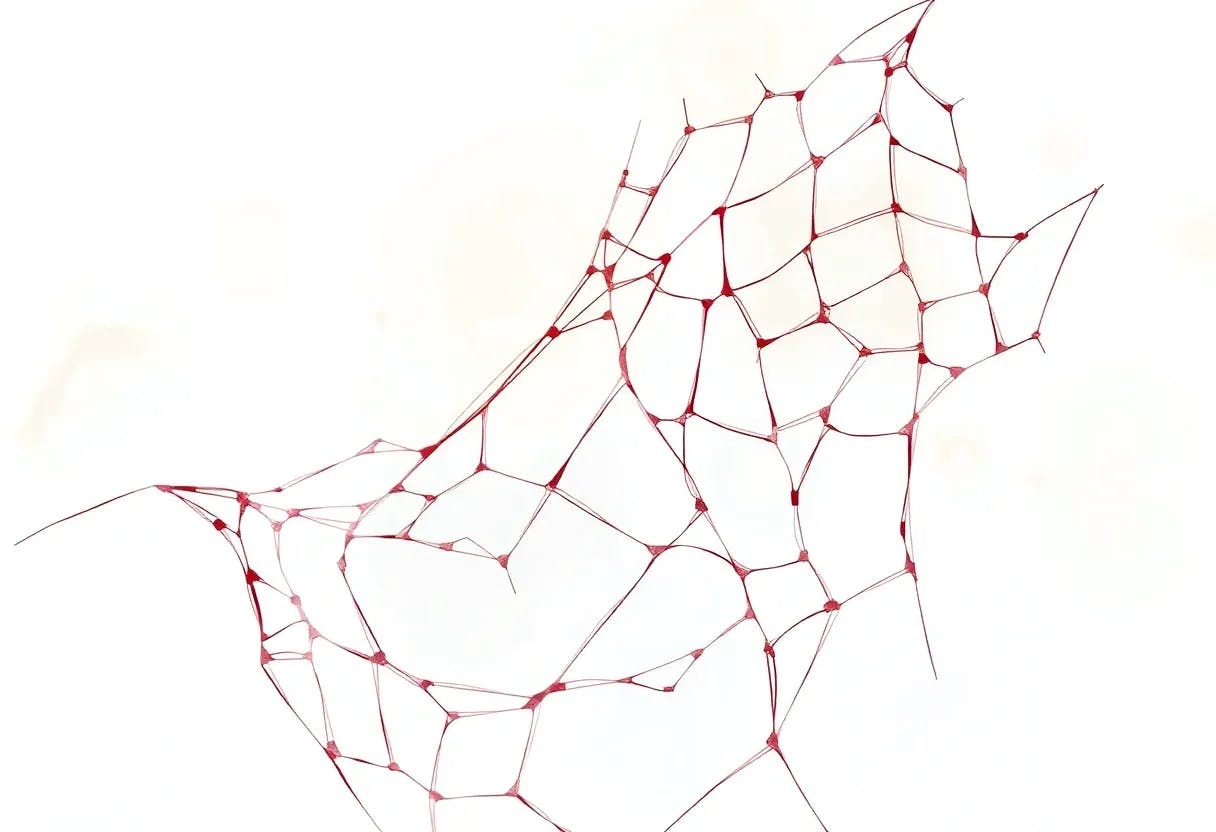

respect to the origin point. LSEnet learns the hyperbolic partitioning tree, and node clusters are given in a divisive manner with hyperbolic distance, detailed as Algorithm 3 in Appendix C.2. As shown in Table 1, even though all the baselines except RGC have the cluster number K as input parameter, the proposed LSEnet achieves the best performance except on AMAP dataset and has more consistent performance, e.g., DinkNet does well on AMAP but worse on Computer and Citeseer. Without K, RGC automatically seeks K via reinforcement learning, while we present another idea of learning a partitioning tree and have better results, e.g., over 20% NMI gain on AMAP dataset. LSEnet takes advantage of structural entropy, encoding the self-organization of graphs, and hyperbolic geometry further benefits graph clustering, as shown in the next Sec.

Authors:

(1) Li Sun, North China Electric Power University, Beijing 102206, China (ccesunli@ncepu.edu);

(2) Zhenhao Huang, North China Electric Power University, Beijing 102206, China;

(3) Hao Peng, Beihang University, Beijing 100191, China;

(4) Yujie Wang, North China Electric Power University, Beijing 102206, China;

(5) Chunyang Liu, Didi Chuxing, Beijing, China;

(6) Philip S. Yu, University of Illinois at Chicago, IL, USA.

This paper is

[2] Datasets and codes of LSEnet are available at the anonymous link https://anonymous.4open.science/ r/LSEnet-3EF2