Retrieval-Augmented Generation (RAG) improves LLM responses by retrieving relevant document snippets and supplying them to the model. With rlama, you can build a fully local, offline RAG system: no cloud services, no external dependencies beyond Ollama, and complete data privacy. While rlama supports both large and small LLMs, it is especially optimized for smaller models without sacrificing flexibility for larger ones.

Introduction to RAG and rlama

In RAG, a knowledge store is queried to retrieve pertinent documents, which are then added to the LLM prompt. This helps ground the model’s output in factual, up-to-date data. Traditional RAG setups require multiple components (document loaders, text splitters, vector databases, etc.), but rlama streamlines the entire process into a single CLI tool.

It handles:

- Document ingestion and chunking.

- Embedding generation via local models (using Ollama).

- Storage in a hybrid vector store that supports both semantic and textual queries.

- Querying to retrieve context and generate answers.

This local-first approach ensures privacy, speed, and ease of management.
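
To make the grounding step concrete, here is a minimal sketch of how retrieved snippets might be placed in front of a question before it reaches the model. The function name build_prompt and the prompt wording are assumptions for illustration; rlama's real prompt template is not described in this article.

```python
def build_prompt(question: str, snippets: list[str]) -> str:
    """Place retrieved snippets ahead of the question so the model can ground its answer."""
    # Number each snippet so the answer can refer back to it.
    context = "\n\n".join(
        f"[{i}] {s.strip()}" for i, s in enumerate(snippets, start=1)
    )
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )
```

The key idea is only that retrieval output becomes part of the prompt; everything else about the layout is a free design choice.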

Step-by-Step Guide to Implementing RAG with rlama

1. Installation

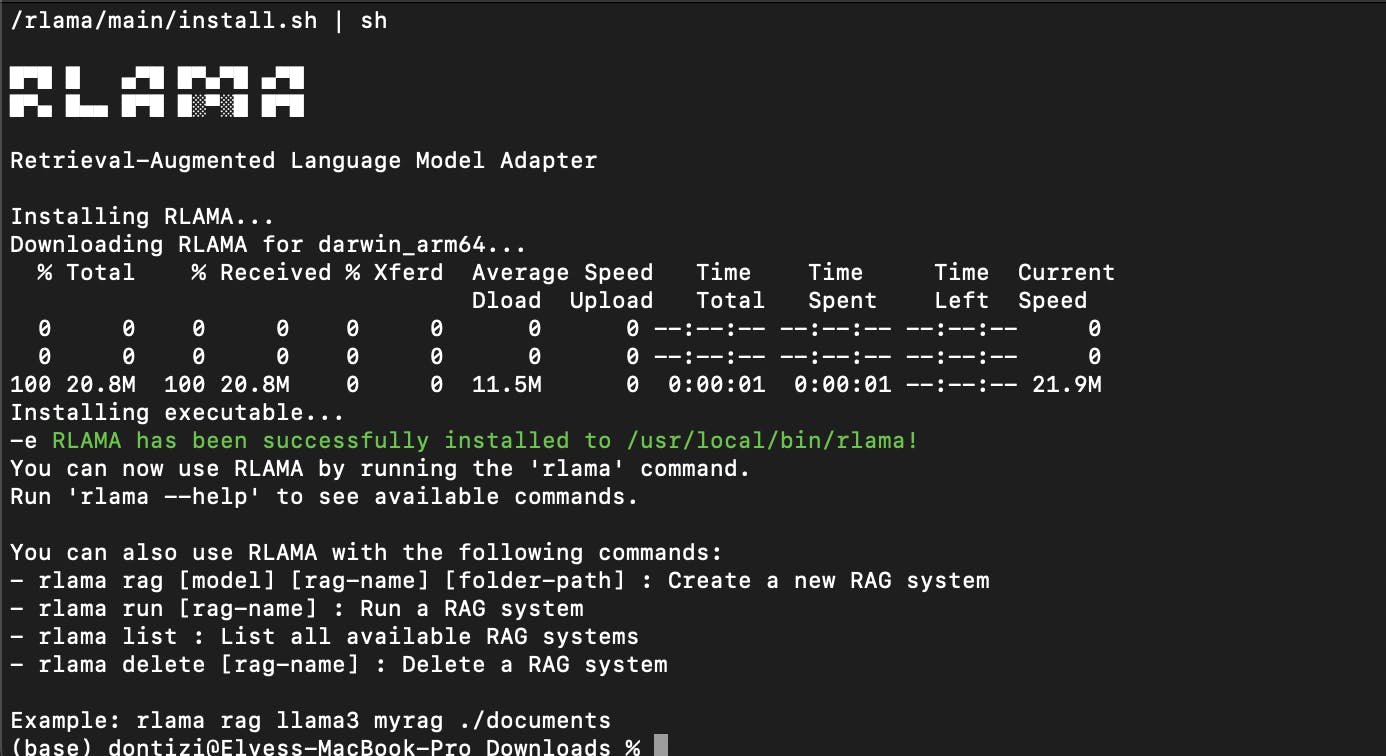

Ensure you have Ollama installed. Then, run:

curl -fsSL https://raw.githubusercontent.com/dontizi/rlama/main/install.sh | sh

Verify the installation:

rlama --version
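
If you script your setup, a small pre-flight check can confirm that both binaries are reachable before you start indexing. This is a sketch using only the Python standard library; the helper name missing_tools is hypothetical.

```python
import shutil


def missing_tools(tools=("ollama", "rlama")):
    """Return the names of tools that cannot be found on PATH."""
    return [t for t in tools if shutil.which(t) is None]
```

An empty list means both tools were found; anything else tells you which install step to revisit.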

2. Creating a RAG System

Index your documents by creating a RAG store (hybrid vector store):

rlama rag <model> <rag-name> <folder-path>

For example, using a model like deepseek-r1:8b:

rlama rag deepseek-r1:8b mydocs ./docs

This command:

- Scans your specified folder (recursively) for supported files.

- Converts documents to plain text and splits them into chunks (default: moderate size with overlap).

- Generates embeddings for each chunk using the specified model.

- Stores chunks and metadata in a local hybrid vector store (in ~/.rlama/mydocs).
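
The recursive scan in the first step can be pictured with the sketch below. The extension set is an assumption made for the example (rlama's actual list of supported formats may differ), and find_documents is a hypothetical name.

```python
from pathlib import Path

# Hypothetical extension set; rlama's real list of supported formats may differ.
SUPPORTED = {".txt", ".md", ".pdf"}


def find_documents(folder, supported=SUPPORTED, exclude=()):
    """Recursively collect files whose extension is supported and not excluded."""
    root = Path(folder)
    return sorted(
        p
        for p in root.rglob("*")
        if p.is_file()
        and p.suffix.lower() in supported
        and p.suffix.lower() not in exclude
    )
```

Calling find_documents("./docs") would return the candidate files in a stable order, which makes it easy to see what an indexing run is about to pick up.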

3. Managing Documents

Keep your index updated:

- Add Documents:

rlama add-docs mydocs ./new_docs --exclude-ext=.log

- List Documents:

rlama list-docs mydocs

- Inspect Chunks:

rlama list-chunks mydocs --document=filename

- Update Model:

rlama update-model mydocs <new-model>
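
If you refresh the index on a schedule, the same commands can be driven from a script. The sketch below only reuses the add-docs and list-docs invocations shown above; the helper names are hypothetical, and nothing here assumes any extra rlama flags.

```python
import subprocess


def maintenance_commands(rag_name, new_docs_dir):
    """Build argument lists for the commands shown above (nothing is executed here)."""
    return [
        ["rlama", "add-docs", rag_name, new_docs_dir, "--exclude-ext=.log"],
        ["rlama", "list-docs", rag_name],
    ]


def run_all(commands):
    """Run each command in order; check=True stops at the first failure."""
    for argv in commands:
        subprocess.run(argv, check=True)
```

Keeping command construction separate from execution makes the script easy to dry-run: print the lists first, then pass them to run_all.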

4. Configuring Chunking and Retrieval

- Chunk Size & Overlap: Chunks are pieces of text (e.g. ~300-500 tokens) that enable precise retrieval. Smaller chunks yield higher precision; larger ones preserve context. Overlapping (about 10-20% of chunk size) ensures continuity.

- Context Size: The --context-size flag controls how many chunks are retrieved per query (default is 20). For concise queries, 5-10 chunks might be sufficient, while broader questions might require 30 or more. Ensure the total token count (chunks + query) stays within your LLM’s limit.

- Hybrid Retrieval: While rlama primarily uses dense vector search, it stores the original text to support textual queries. This means you get both semantic matching and the ability to reference specific text snippets.
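
To see what chunk size and overlap mean in practice, here is a minimal sketch of a sliding-window splitter. It counts whitespace-separated words as a rough stand-in for tokens, and the defaults (400 words with a 60-word overlap, i.e. 15%) are picked from the ranges above for illustration; this is not rlama's actual chunker.

```python
def chunk_words(text, chunk_size=400, overlap=60):
    """Split text into word windows of chunk_size that share `overlap` words with the previous one."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reached the end of the text
    return chunks
```

With a chunk size of 4 and an overlap of 1, the ten words "0 1 ... 9" become three chunks, each starting with the last word of the previous one.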

5. Running Queries

Launch an interactive session:

rlama run mydocs --context-size=20

In the session, type your question:

> How do I install the project?

rlama:

- Converts your question into an embedding.

- Retrieves the top matching chunks from the hybrid store.

- Uses the local LLM (via Ollama) to generate an answer using the retrieved context.
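
The retrieval step in the middle can be sketched as a cosine-similarity ranking over stored embeddings. This is a simplified illustration with plain Python lists, assuming the question has already been embedded; the names cosine and top_k are hypothetical, and rlama's internal scoring may differ.

```python
import math


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec, chunks, k=20):
    """chunks: list of (text, embedding) pairs. Return the k texts most similar to the query."""
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, c[1]), reverse=True)
    return [text for text, _ in ranked[:k]]
```

Here k plays the role of the context size: it caps how many chunks are handed to the LLM.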

You can exit the session by typing exit.

6. Using the rlama API

Start the API server for programmatic access:

rlama api --port 11249

Send HTTP queries:

curl -X POST http://localhost:11249/rag \
-H "Content-Type: application/json" \
-d '{
"rag_name": "mydocs",
"prompt": "How do I install the project?",
"context_size": 20
}'

The API returns a JSON response with the generated answer and diagnostic details.
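
The same request can be sent from Python with the standard library alone. The endpoint, port, and JSON fields below are taken from the curl example; the helper names are hypothetical, and because the response schema is not spelled out here, the sketch simply returns the decoded JSON as a dict.

```python
import json
import urllib.request


def build_rag_request(prompt, rag_name="mydocs", context_size=20,
                      base_url="http://localhost:11249"):
    """Build the POST request for the /rag endpoint without sending it."""
    body = json.dumps(
        {"rag_name": rag_name, "prompt": prompt, "context_size": context_size}
    ).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/rag",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, **kwargs):
    """Send the request to a running `rlama api` server and return the decoded JSON."""
    request = build_rag_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=120) as resp:
        return json.load(resp)
```

Calling ask("How do I install the project?") requires the API server from the previous step to be running; local generation can be slow, hence the generous timeout.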

Recent Enhancements and Tests

EnhancedHybridStore

- Improved Document Management: Replaces the traditional vector store.

- Hybrid Searches: Supports both vector embeddings and textual queries.

- Simplified Retrieval: Quickly finds relevant documents based on user input.
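
As a rough mental model of a store that answers both kinds of query, consider the toy class below. It is an illustrative sketch only: the class name, methods, and in-memory layout are assumptions and do not mirror the EnhancedHybridStore implementation.

```python
import math
from dataclasses import dataclass, field


@dataclass
class HybridStore:
    """Toy store that keeps the original text next to its embedding."""
    entries: list = field(default_factory=list)  # (text, embedding) pairs

    def add(self, text, embedding):
        self.entries.append((text, embedding))

    def text_search(self, needle):
        """Textual query: case-insensitive substring match on the stored text."""
        return [t for t, _ in self.entries if needle.lower() in t.lower()]

    def vector_search(self, query, k=3):
        """Semantic query: rank stored embeddings by cosine similarity."""
        def cos(a, b):
            dot = sum(x * y for x, y in zip(a, b))
            norm = math.hypot(*a) * math.hypot(*b)
            return dot / norm if norm else 0.0

        ranked = sorted(self.entries, key=lambda e: cos(query, e[1]), reverse=True)
        return [t for t, _ in ranked[:k]]
```

Because the text is stored alongside the vector, an exact identifier can be found by text search even when its embedding is not the nearest neighbour.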

Document Struct Update

- Metadata Field: Now each document chunk includes a Metadata field for extra context, enhancing retrieval accuracy.
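
One way metadata can help retrieval is by narrowing the candidate set before ranking. The sketch below assumes a chunk shaped as text plus a free-form dictionary; the Chunk class, the "source" key, and filter_by_metadata are all hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass, field


@dataclass
class Chunk:
    content: str
    # Hypothetical keys; the real Metadata field may hold different information.
    metadata: dict = field(default_factory=dict)


def filter_by_metadata(chunks, **wanted):
    """Keep chunks whose metadata matches every key/value pair in `wanted`."""
    return [
        c for c in chunks
        if all(c.metadata.get(k) == v for k, v in wanted.items())
    ]
```

For example, filter_by_metadata(chunks, source="src/routes/sgaRouter.ts") would keep only the chunks that came from that file.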

RagSystem Upgrade

- Hybrid Store Integration: All documents are now fully indexed and retrievable, resolving previous limitations.

Router Retrieval Testing

I compared the new version with v0.1.25 using deepseek-r1:8b with the prompt:

"list me all the routers in the code" (as simple and general as possible, to verify accurate retrieval)

- Published Version on GitHub: Answer: The code contains at least one router, CoursRouter, which is responsible for course-related routes. Additional routers for authentication and other functionalities may also exist. (Source: src/routes/coursRouter.ts)

- New Version: Answer: There are four routers: sgaRouter, coursRouter, questionsRouter, and devoirsRouter. (Source: src/routes/sgaRouter.ts)
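
A comparison like this can be turned into a number by checking how many expected names appear in each answer. The sketch below assumes that the four routers named by the new version are the complete set, which this article does not confirm independently; recall is a hypothetical helper.

```python
def recall(answer, expected):
    """Fraction of expected names that appear in the answer, ignoring case."""
    text = answer.lower()
    found = [name for name in expected if name.lower() in text]
    return len(found) / len(expected)


# Assumption: the four routers named by the new version are the complete set.
expected = ["sgaRouter", "coursRouter", "questionsRouter", "devoirsRouter"]
old_answer = ("The code contains at least one router, CoursRouter, "
              "which is responsible for course-related routes.")
new_answer = ("There are four routers: sgaRouter, coursRouter, "
              "questionsRouter, and devoirsRouter.")

print(recall(old_answer, expected))  # 0.25
print(recall(new_answer, expected))  # 1.0
```

Under that assumption the published version surfaces one router out of four and the new version all four.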

Optimizations and Performance Tuning

Retrieval Speed:

- Adjust context_size to balance speed and accuracy.

- Use smaller models for faster embedding, or a dedicated embedding model if needed.

- Exclude irrelevant files during indexing to keep the index lean.
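
When picking a context size, a quick back-of-the-envelope check helps confirm that the retrieved chunks plus the query still fit in the model's context window. The sketch below is an estimate under assumed numbers: the average chunk length, the space reserved for the answer, and the 8192-token limit in the usage note are all illustrative, so substitute your model's real values.

```python
def fits_context(context_size, avg_chunk_tokens, query_tokens, model_limit,
                 answer_reserve=512):
    """Rough check that chunks + query + room for the answer fit the context window."""
    needed = context_size * avg_chunk_tokens + query_tokens + answer_reserve
    return needed <= model_limit


def max_context_size(avg_chunk_tokens, query_tokens, model_limit,
                     answer_reserve=512):
    """Largest number of chunks that still fits under the same assumptions."""
    available = model_limit - query_tokens - answer_reserve
    return max(0, available // avg_chunk_tokens)
```

With 400-token chunks, a 50-token query, and a hypothetical 8192-token limit, 20 chunks would need 8562 tokens and overflow, while max_context_size reports 19 as the ceiling.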

Retrieval Accuracy:

- Fine-tune chunk size and overlap. Moderate sizes (300-500 tokens) with 10-20% overlap work well.

- Use the best-suited model for your data; switch models easily with rlama update-model.

- Experiment with prompt tweaks if the LLM occasionally produces off-topic answers.

Local Performance:

- Ensure your hardware (RAM/CPU/GPU) is sufficient for the chosen model.

- Leverage SSDs for faster storage and multithreading for improved inference.

- For batch queries, use the persistent API mode rather than restarting CLI sessions.
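
For the batch case, a loop over the running API server avoids paying the startup cost of a CLI session for every question. This sketch reuses the /rag endpoint and JSON fields from the API section; the function names are hypothetical, and the send parameter exists only so the loop can be exercised without a live server.

```python
import json
import urllib.request


def ask(prompt, rag_name="mydocs", context_size=20,
        url="http://localhost:11249/rag"):
    """POST one prompt to the running rlama API server and return the decoded JSON."""
    body = json.dumps(
        {"rag_name": rag_name, "prompt": prompt, "context_size": context_size}
    ).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(request, timeout=120) as resp:
        return json.load(resp)


def ask_many(prompts, send=ask):
    """Send each prompt in order to the already-running server and collect the responses."""
    return [send(p) for p in prompts]
```

The server started with rlama api --port 11249 must already be running before ask_many is called with the default sender.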

Next Steps

- Optimize Chunking: Focus on improving the chunking process so that retrieval stays effective even with small models.

- Monitor Performance: Continue testing with different models and configurations to find the best balance for your data and hardware.

- Explore Future Features: Stay tuned for upcoming hybrid retrieval enhancements and adaptive chunking features.

Conclusion

rlama simplifies building local RAG systems with a focus on confidentiality, performance, and ease of use. Whether you’re using a small LLM for quick responses or a larger one for in-depth analysis, rlama offers a powerful, flexible solution. With its enhanced hybrid store, improved document metadata, and upgraded RagSystem, it’s now even better at retrieving and presenting accurate answers from your data. Happy indexing and querying!

- GitHub repo: https://github.com/DonTizi/rlama

- Website: https://rlama.dev/

- X: https://x.com/LeDonTizi/status/1898233014213136591