Your users don’t care why a quote failed; they care that the number doesn’t flash 0.00. Here’s a pragmatic, production-ready pipeline that returns the best price it can get within a strict time budget, exposes provenance, and never silently lies.

Audience: engineers shipping finance dashboards, brokers, or portfolio tools.

Deliverables: architecture, TypeScript code (fetch with timeout + backoff), and a reproducible test harness you can run locally.

The failure modes we must beat

- Single-provider coupling. One upstream hiccup → you show `0.00` or stale data.

- Unbounded waits. A slow API call stalls the UI or burns your edge budget.

- Silent staleness. You cache yesterday’s price and don’t tell the user.

- Inconsistent math. Different providers round/adjust differently, breaking P/L.

We’ll design for bounded latency, graceful degradation, and auditability.

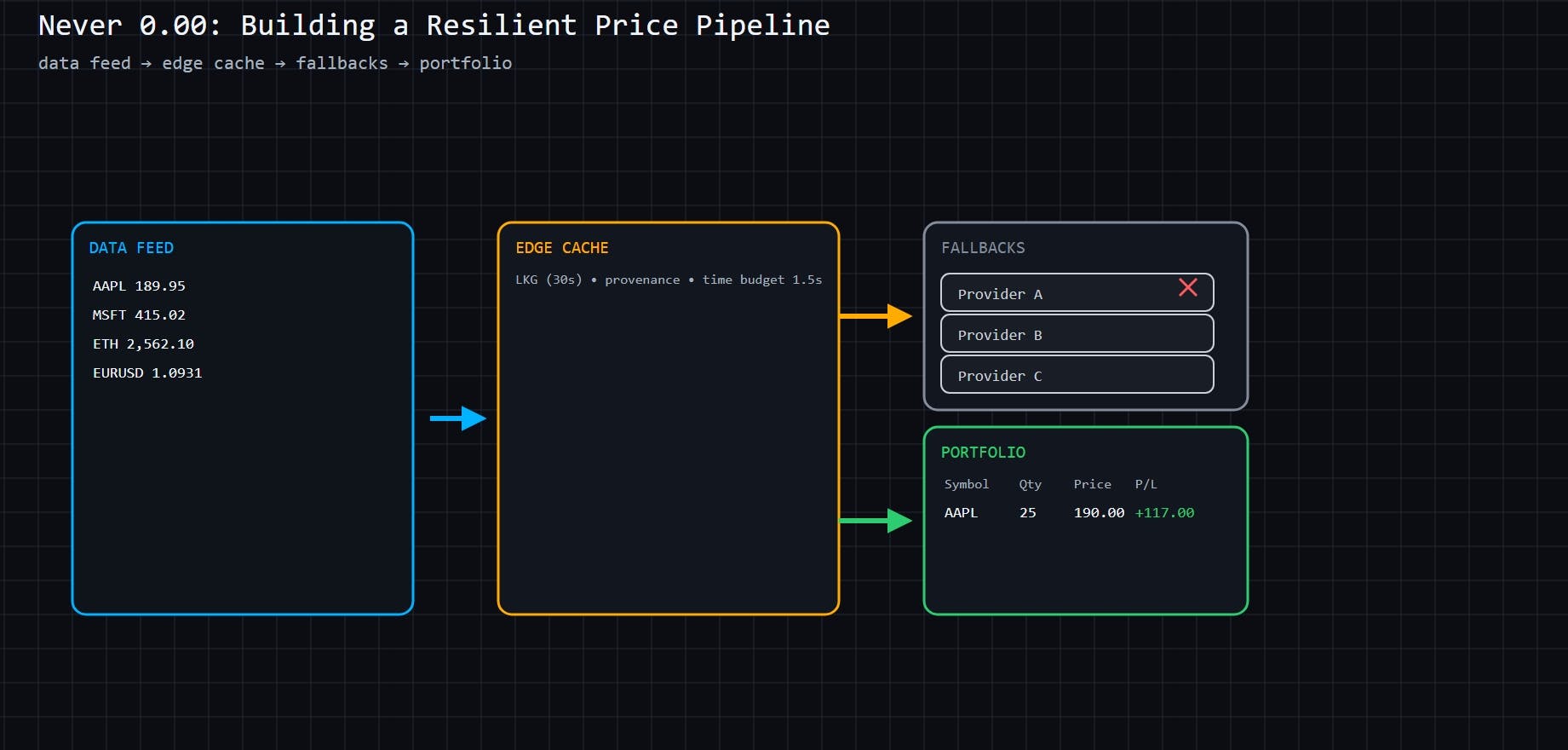

Architecture: time-boxed fan-out + LKG + provenance

Imagine the following diagram (textual description):

```text
[ Client ] --(fetch / 1.5s budget)--> [ Edge Worker ]
                                         |-> Provider A (Yahoo)           [score 0]
                                         |-> Provider B (NBBO)            [score +1]
                                         |-> Provider C (CoinGecko/OHLC)  [score +2]
                                         |
                                 <best valid within budget>
                                         |
                                 [ LKG cache (30s TTL) ]
                                         |
                          returns {price, ts, source, stale}
```

- The edge queries all providers in parallel within a strict total budget (e.g., 1500 ms).

- The first valid response wins. Each provider also carries a simple health score (every failure adds a point; lower is healthier), which sets launch order and gives you a signal for demoting flaky sources.

- If nothing lands in time, we return the LKG (last-known-good) quote marked `stale: true`.

- The client renders a provenance chip: `Yahoo • 12s • Live` or `Cache • 45s • Stale`. No black boxes.
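The diagram's LKG cache (30s TTL) has no code of its own in this post. A minimal in-memory sketch, assuming a single edge instance (no shared store) and a hypothetical `LkgCache` class: it stores only fresh, finite quotes, keys them by symbol plus an optional venue, and forgets entries older than the TTL.

```typescript
// lkg/cache.ts (hypothetical module, not part of the original code)
type CachedQuote = { symbol: string; price: number; ts: number; source: string };

export class LkgCache {
  private entries = new Map<string, CachedQuote>();

  constructor(
    private maxAgeMs = 30_000, // assumption: mirrors the 30 s TTL in the diagram
    private now: () => number = Date.now // injectable clock so tests stay deterministic
  ) {}

  // Key by symbol, plus venue when the same symbol trades in several places.
  private key(symbol: string, venue?: string): string {
    return venue ? `${symbol}@${venue}` : symbol;
  }

  // Store only fresh, finite prices; never cache the sentinel or an LKG echo.
  set(q: CachedQuote & { stale?: boolean }, venue?: string): void {
    if (q.stale || !Number.isFinite(q.price)) return;
    this.entries.set(this.key(q.symbol, venue), {
      symbol: q.symbol,
      price: q.price,
      ts: q.ts,
      source: q.source,
    });
  }

  // Returns the last-known-good quote, or undefined once it is older than maxAgeMs.
  get(symbol: string, venue?: string): CachedQuote | undefined {
    const k = this.key(symbol, venue);
    const q = this.entries.get(k);
    if (!q) return undefined;
    if (this.now() - q.ts > this.maxAgeMs) {
      this.entries.delete(k); // expired: drop it so it can't be served again
      return undefined;
    }
    return q;
  }
}
```

Wiring it in would mean passing `lkg: cache.get(symbol) ?? null` to `getQuoteWithBudget` and calling `cache.set(result)` afterwards. Because `set` ignores anything marked `stale`, neither an LKG fallback nor the `unavailable` sentinel can overwrite a real price.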

Core TypeScript: bounded fetch with backoff + jitter

We’ll start with a few helpers.

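```ts
// utils/timeout.ts
// Aborts the request if it hasn't produced a response within `ms`.
// Note: the timer stops once headers arrive; body reads (res.json()) are not covered.
export async function fetchWithTimeout(
  url: string,
  ms: number,
  init: RequestInit = {}
): Promise<Response> {
  const ctrl = new AbortController();
  const timer = setTimeout(() => ctrl.abort("time_budget_exceeded"), ms);
  try {
    // Any caller-supplied init.signal is replaced by this controller's signal.
    return await fetch(url, { ...init, signal: ctrl.signal });
  } finally {
    clearTimeout(timer);
  }
}
```

```ts
// utils/backoff.ts
// Exponential backoff with full jitter: a random delay in [0, min(cap, base * 2^attempt)).
export function backoffDelay(attempt: number, base = 150, cap = 1000): number {
  const exp = Math.min(cap, base * 2 ** attempt);
  return Math.floor(Math.random() * exp);
}
```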
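With the defaults, the delay ceiling for attempt n is min(1000, 150 · 2^n) ms, so it reaches the 1000 ms cap at attempt 3. A small sketch that injects the random source so the schedule can be checked deterministically; `backoffDelayWith` and `ceilings` are hypothetical names, and the formula is the same one `backoffDelay` uses:

```typescript
// Same formula as backoffDelay, with the random source injected for testing.
export function backoffDelayWith(
  attempt: number,
  rand: () => number = Math.random, // must return a value in [0, 1)
  base = 150,
  cap = 1000
): number {
  const ceiling = Math.min(cap, base * 2 ** attempt); // exponential growth, capped
  return Math.floor(rand() * ceiling); // full jitter: uniform in [0, ceiling)
}

// Ceilings for attempts 0..4 with the defaults (ms).
export const ceilings = [0, 1, 2, 3, 4].map((a) => Math.min(1000, 150 * 2 ** a));
```

Full jitter spreads retries from many clients across the whole window instead of having them all fire at the same exponential step.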

Provider adapters (thin, stateless functions)

Each adapter returns a normalized quote or throws.
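"Normalized" is left open here. One possible contract, sketched under assumptions that are not in the original (a shared 4-decimal precision, a 5 s clock-skew allowance, instruments that cannot trade at or below zero, and the hypothetical name `normalizeQuote`): reject unusable prices, round every provider the same way, and refuse timestamps from the future. Rounding in one place is what keeps providers from disagreeing in P/L math.

```typescript
type RawQuote = { symbol: string; price: unknown; ts: number; source: string };
type Quote = { symbol: string; price: number; ts: number; source: string };

const DECIMALS = 4; // assumption: one shared precision for every provider
const MAX_SKEW_MS = 5_000; // assumption: tolerated provider clock skew

export function normalizeQuote(raw: RawQuote, now: number = Date.now()): Quote {
  const price = Number(raw.price);
  // Assumes instruments that cannot trade at or below zero.
  if (!Number.isFinite(price) || price <= 0) {
    throw new Error(`${raw.source}:invalid_price`);
  }
  if (raw.ts > now + MAX_SKEW_MS) {
    throw new Error(`${raw.source}:future_ts`);
  }
  const factor = 10 ** DECIMALS;
  return {
    symbol: raw.symbol,
    price: Math.round(price * factor) / factor, // identical rounding for all sources
    ts: raw.ts,
    source: raw.source,
  };
}
```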

```ts
// providers/types.ts
export type Quote = {
  symbol: string;
  price: number;
  ts: number; // epoch millis
  source: string; // "yahoo", "nbbo", etc.
};

export type Provider = (s: string) => Promise<Quote>;
```

```ts
// providers/yahoo.ts
import { fetchWithTimeout } from "../utils/timeout";
import type { Provider } from "./types";

// api.example is a placeholder; substitute your real provider endpoint.
export const yahoo: Provider = async (symbol) => {
  const url = `https://api.example/yahoo?symbol=${encodeURIComponent(symbol)}`;
  const r = await fetchWithTimeout(url, 700); // per-call timeout for this provider
  if (!r.ok) throw new Error(`yahoo:${r.status}`);
  const j = await r.json();
  const price = Number(j?.price);
  if (!Number.isFinite(price)) throw new Error("yahoo:invalid");
  // ts is the receive time; prefer the provider's own timestamp if it sends one.
  return { symbol, price, ts: Date.now(), source: "yahoo" };
};
```

Repeat the pattern for the other sources (NBBO, CoinGecko, etc.), each with its own per-call timeout.
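One gap in `fetchWithTimeout`: its `finally` clears the timer as soon as response headers arrive, so an adapter's `r.json()` can still stall on a slow body. A minimal variant that keeps the abort timer running until the JSON is parsed; `fetchJsonWithTimeout` is a hypothetical name, and it relies on the standard fetch behavior that aborting the signal mid-body rejects the pending body read:

```typescript
// Covers connect + headers + body: the timer is cleared only after parsing finishes.
export async function fetchJsonWithTimeout<T = unknown>(
  url: string,
  ms: number,
  init: RequestInit = {}
): Promise<T> {
  const ctrl = new AbortController();
  const timer = setTimeout(() => ctrl.abort("time_budget_exceeded"), ms);
  try {
    // Any caller-supplied init.signal is replaced by this controller's signal.
    const res = await fetch(url, { ...init, signal: ctrl.signal });
    if (!res.ok) throw new Error(`http:${res.status}`);
    return (await res.json()) as T; // an abort during the body read rejects here
  } finally {
    clearTimeout(timer);
  }
}
```

An adapter would then validate the parsed object exactly as before, with one timeout bounding the whole round trip.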

Health scoring (a lightweight circuit-breaker stand-in)

Each failure adds a penalty point to that provider's health score (a simple, sticky heuristic; lower is healthier).
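On its own, a score that only grows never lets a provider recover, and every provider is still launched on every call. A minimal sketch of turning the same `Health` map into a crude circuit breaker, assuming a hypothetical `threshold` of 3 and a halving decay you run on a timer (neither exists in the engine):

```typescript
type Provider = (symbol: string) => Promise<{ symbol: string; price: number; ts: number; source: string }>;
type Health = Record<string, number>; // failure score per provider; lower is healthier

// A provider's breaker is "open" (skipped) once its score reaches the threshold.
export function healthyProviders(
  providers: Record<string, Provider>,
  health: Health,
  threshold = 3
): Record<string, Provider> {
  const allowed = Object.entries(providers).filter(([name]) => (health[name] ?? 0) < threshold);
  // If every breaker is open, try everyone rather than no one.
  return allowed.length > 0 ? Object.fromEntries(allowed) : providers;
}

// Halve every score so a provider that stops failing is eventually retried.
export function decayHealth(health: Health): void {
  for (const [name, score] of Object.entries(health)) {
    health[name] = Math.floor(score / 2);
  }
}
```

Usage would look like `getQuoteWithBudget(symbol, healthyProviders(all, health), { health })`, with something like `setInterval(() => decayHealth(health), 30_000)` running alongside; the interval is an assumption to tune.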

```ts
// engine/quotes.ts
import type { Provider, Quote } from "../providers/types";
import { backoffDelay } from "../utils/backoff";

type Health = Record<string, number>; // failure score per provider; lower is healthier

export type BudgetedOptions = {
  budgetMs?: number; // total end-to-end time budget
  attempts?: number; // attempts per provider (1 = no retries)
  lkg?: Quote | null; // last-known-good quote
  health?: Health; // provider health map (mutated in place)
};

const sleep = (ms: number) => new Promise<void>((r) => setTimeout(r, ms));

export async function getQuoteWithBudget(
  symbol: string,
  providers: Record<string, Provider>,
  opts: BudgetedOptions = {}
): Promise<Quote & { stale: boolean }> {
  const budgetMs = opts.budgetMs ?? 1500;
  const attempts = opts.attempts ?? 1;
  const health = opts.health ?? {};
  const start = Date.now();

  // Launch in health order (lowest score first); providers still run in parallel.
  const entries = Object.entries(providers).sort(
    ([a], [b]) => (health[a] ?? 0) - (health[b] ?? 0)
  );

  // Settles with the first valid quote, or null once every provider is exhausted.
  const firstValid = Promise.any(
    entries.map(async ([name, p]): Promise<Quote> => {
      for (let i = 0; i < attempts; i++) {
        if (Date.now() - start >= budgetMs) throw new Error("time_budget_exceeded");
        try {
          const q = await p(symbol); // each p applies its own per-call timeout
          if (!Number.isFinite(q.price)) throw new Error("invalid");
          return q; // first valid wins
        } catch {
          health[name] = (health[name] ?? 0) + 1; // penalize on failure
          if (i < attempts - 1) await sleep(backoffDelay(i)); // no sleep after the last attempt
        }
      }
      throw new Error(`${name}_exhausted`);
    })
  ).catch(() => null);

  // Hard deadline: Promise.any alone would keep waiting on slow providers past the budget.
  let timer: ReturnType<typeof setTimeout> | undefined;
  const deadline = new Promise<null>((resolve) => {
    timer = setTimeout(() => resolve(null), budgetMs);
  });
  const winner = await Promise.race([firstValid, deadline]);
  clearTimeout(timer);

  // If nothing landed, fall back to LKG
  const now = Date.now();
  if (!winner && opts.lkg) {
    return { ...opts.lkg, source: `${opts.lkg.source}+lkg`, stale: true };
  }
  if (!winner) {
    // Honest sentinel; caller should render a skeleton or an "unavailable" state
    return { symbol, price: NaN, ts: now, source: "unavailable", stale: true };
  }

  // Mark staleness based on age (e.g., 30 s)
  const stale = now - winner.ts > 30_000;
  return { ...winner, stale };
}
```

Why this shape works: total latency is bounded by the budget, the first valid result wins, the health map carries provider reliability across calls, and a clean `stale` flag keeps the UI honest.

Client UX: provenance chip (text-described diagram)

Imagine a small pill next to the price:

```text
[ Yahoo • 12s • Live ]    // green background
[ Cache • 47s • Stale ]   // amber background
```

When a user clicks it, show a popover with source, requestId, and the time budget used—handy for bug reports and audits.

```tsx
// ui/ProvenanceBadge.tsx
export function ProvenanceBadge({ source, ts, stale }: { source: string; ts: number; stale: boolean }) {
  // Age is computed at render time; re-render on each refresh to keep it current.
  const ageSec = Math.max(0, Math.round((Date.now() - ts) / 1000));
  const label = `${source} • ${ageSec}s • ${stale ? "Stale" : "Live"}`;
  const cls = `px-2 py-1 rounded-full text-xs ${
    stale ? "bg-yellow-100 text-yellow-800" : "bg-green-100 text-green-800"
  }`;
  return (
    <span className={cls} aria-live="polite">
      {label}
    </span>
  );
}
```
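The popover described above needs fields the `Quote` type doesn't carry. A minimal sketch of a provenance record assembled at the edge, assuming hypothetical field names (`requestId`, `budgetMs`, `elapsedMs`) and that the caller supplies the request ID, for example from `crypto.randomUUID()` in runtimes that expose Web Crypto:

```typescript
type QuoteResult = { symbol: string; price: number; ts: number; source: string; stale: boolean };

export type Provenance = {
  requestId: string; // correlates a user report with edge logs
  source: string;
  ts: number; // when the price was observed (epoch ms)
  stale: boolean;
  budgetMs: number; // budget the edge was given
  elapsedMs: number; // how much of it was actually spent
};

export function buildProvenance(
  q: QuoteResult,
  requestId: string,
  startedAt: number,
  budgetMs: number,
  now: number = Date.now()
): Provenance {
  return {
    requestId,
    source: q.source,
    ts: q.ts,
    stale: q.stale,
    budgetMs,
    elapsedMs: Math.max(0, now - startedAt), // guard against clock jitter
  };
}
```

Returning it alongside the quote (for instance as a `provenance` field in the JSON response) gives the popover everything it shows without a second request.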

Reproducible test harness

We’ll simulate providers with deterministic latency/failure. You can paste this into a Node/TS project (no network required).

```ts
// harness/sim.ts
import type { Provider, Quote } from "../providers/types";
import { getQuoteWithBudget } from "../engine/quotes";

function fakeProvider(name: string, opts: { latency: number; fail?: boolean; price?: number }): Provider {
  return async (symbol: string) => {
    await new Promise((r) => setTimeout(r, opts.latency));
    if (opts.fail) throw new Error(`${name}_fail`);
    const price = opts.price ?? 100 + Math.random();
    return { symbol, price, ts: Date.now(), source: name } as Quote;
  };
}

async function main() {
  const providers = {
    yahoo: fakeProvider("yahoo", { latency: 180, price: 189.97 }),
    nbbo: fakeProvider("nbbo", { latency: 420, price: 189.95 }),
    alt: fakeProvider("alt", { latency: 1100, fail: true }),
  };
  const out = await getQuoteWithBudget("AAPL", providers, { budgetMs: 600, attempts: 1, lkg: null, health: {} });
  console.log(out);
}

main().catch(console.error);
```

Expected behavior: with a 600 ms budget, yahoo wins (180 ms). If you increase yahoo latency above 600, nbbo becomes the winner. If everything fails, the function returns { price: NaN, source: "unavailable", stale: true }—which your UI should render as a skeleton or an explicit “unavailable” state.
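Rendering the sentinel honestly can be as small as a formatter that refuses to print a number it doesn't have. A minimal sketch, assuming USD prices and an `en-US` locale; `formatPrice` and the "Unavailable" label are illustrative, not from the original:

```typescript
const usd = new Intl.NumberFormat("en-US", { style: "currency", currency: "USD" });

// Never turns NaN (the "unavailable" sentinel) or Infinity into $0.00.
export function formatPrice(price: number): string {
  if (!Number.isFinite(price)) return "Unavailable";
  return usd.format(price);
}
```

Staleness stays with the provenance chip; the formatter only guarantees that a missing price never renders as a plausible-looking number.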

Unit tests (Vitest)

```ts
// harness/sim.test.ts
import { describe, it, expect } from "vitest";
import { getQuoteWithBudget } from "../engine/quotes";
import type { Provider } from "../providers/types";

function p(name: string, latency: number, ok = true, price = 101): Provider {
  return async (s) => {
    await new Promise((r) => setTimeout(r, latency));
    if (!ok) throw new Error(name);
    return { symbol: s, price, ts: Date.now(), source: name };
  };
}

describe("getQuoteWithBudget", () => {
  it("returns first valid within budget", async () => {
    const out = await getQuoteWithBudget(
      "AAPL",
      { slow: p("slow", 500), fast: p("fast", 80, true, 190.12) },
      { budgetMs: 300 }
    );
    expect(out.source).toBe("fast");
    expect(out.stale).toBe(false);
    expect(Number.isFinite(out.price)).toBe(true);
  });

  it("falls back to LKG when none arrive in time", async () => {
    const lkg = { symbol: "AAPL", price: 188.5, ts: Date.now() - 40_000, source: "cache" };
    const out = await getQuoteWithBudget("AAPL", { slow: p("slow", 800) }, { budgetMs: 200, lkg });
    expect(out.source).toContain("lkg");
    expect(out.stale).toBe(true);
    expect(out.price).toBe(188.5);
  });

  it("returns sentinel when all fail & no LKG", async () => {
    const out = await getQuoteWithBudget(
      "AAPL",
      { a: p("a", 50, false), b: p("b", 60, false) },
      { budgetMs: 150 }
    );
    expect(out.source).toBe("unavailable");
    expect(isNaN(out.price)).toBe(true);
  });
});
```

Run it

```bash
npm i -D vitest typescript tsx
npx vitest run          # unit tests
npx tsx harness/sim.ts  # simulation harness
```

Production notes (learned the hard way)

- Time budgets must be end-to-end (edge → providers → marshaling). Don’t just set per-call timeouts; total elapsed matters.

- Never coerce NaN to 0.00. Return a sentinel and render it honestly.

- Record provenance (`source`, `ts`, `requestId`) for every price; it turns user bug reports into reproducible tickets.

- Cache with intent. The LKG cache should be short-lived (e.g., 30–60 s) and keyed by `symbol + venue` where applicable.

- Score providers. A sticky health score gives you a cheap signal for demoting flaky sources without a full breaker library.

- UI skeletons + chips > spinners. Show skeleton rows during refresh and clear “Stale” chips when using LKG.
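One way to make the budget end-to-end rather than per-call is to derive every child timeout from a single deadline, so a retry late in the window only gets the time that is actually left. A minimal sketch; `createDeadline` and `childTimeout` are hypothetical names, and the injectable clock exists only to make it testable:

```typescript
export type Deadline = {
  remaining(): number; // ms left in the overall budget (never negative)
  expired(): boolean;
  childTimeout(maxMs: number): number; // per-call timeout clipped to what's left
};

export function createDeadline(budgetMs: number, now: () => number = Date.now): Deadline {
  const end = now() + budgetMs;
  const remaining = () => Math.max(0, end - now());
  return {
    remaining,
    expired: () => remaining() === 0,
    childTimeout: (maxMs) => Math.min(maxMs, remaining()),
  };
}
```

An adapter would then call `fetchWithTimeout(url, deadline.childTimeout(700))` instead of a fixed 700 ms.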

What this buys you

- Predictable UX: Users get a number (or a truthful “stale/unavailable”) within your SLA.

- Operational safety: Slow or failing providers don’t stall the entire pipeline.

- Auditability: Every number is explainable—source + age + freshness.

Next steps you can ship today

- Drop `getQuoteWithBudget` into your edge worker.

- Add the provenance chip.

- Wire a 30–60 s LKG cache and emit “stale” when using it.

- Expand the test harness with your real providers behind a feature flag.

If you want a code drop-in adapted to your stack (Vercel Edge, Cloudflare Workers, or Node), ping me your environment and symbols—you’ll get a tailored snippet.

Want the companion “OpenBrokerCSV v0.1” spec + validator to make imports boringly reliable? I’ve got a minimal template and test fixtures—DM me your broker and I’ll spin up a mapping PR.