Traffic violation detection is often presented as a simple computer vision task: detect vehicles, classify behaviour, and generate alerts. In real-world deployments, however, this problem is far more complex. Variations in camera angles, inconsistent lighting, occlusions, and noisy video streams quickly expose the limitations of single-model or rule-only approaches.

This article presents the design and implementation of a production-oriented traffic violation detection system built using YOLOv8, DeepSORT, and OpenCV. The system focuses on operational reliability, tracking stability, and explainable rule-based analytics rather than purely maximizing detection accuracy.

The complete implementation is available on GitHub:

Repository: https://github.com/harisraja123/Real-Time-Traffic-Violation-Detection

Problem Context

The objective of this project was to analyze roadside video streams and automatically detect violations such as:

- Lane misuse

- Restricted zone entry

- Red-light violations

- Illegal crossings

Unlike benchmark datasets, the system operates on unconstrained real-world footage with:

- Partial occlusions

- Motion blur

- Low-light conditions

- Varying camera orientations

- Compression artifacts

This required treating the problem as a full system engineering challenge rather than a standalone machine learning task.

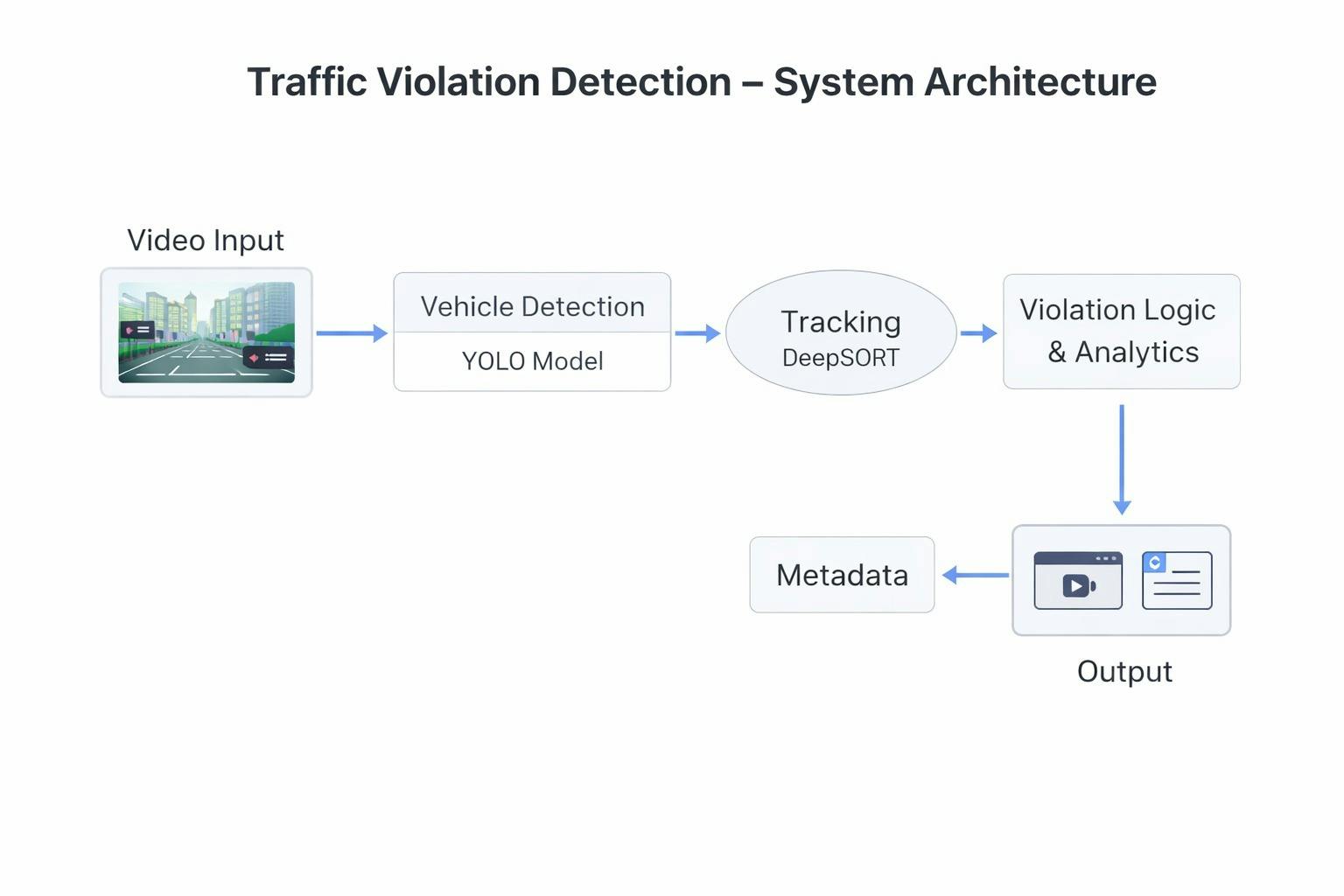

System Architecture

The solution follows a modular streaming pipeline:

Video Input → Detection → Tracking → Lane Analysis → Violation Engine → Visualization

Each stage is decoupled to allow independent scaling and tuning.

| Component | File |
|---|---|
| Video ingestion | main.py |
| Detection | main.py / utils.py |
| Tracking & violations | track_and_flag.py |
| Lane extraction | lane_main.py |
| Overlays | video_overlay.py |
| API interface | app.py |

The core pipeline is orchestrated in main.py, which connects all modules into a unified processing loop.

Video Ingestion

Video input is handled using OpenCV:

    import cv2

    cap = cv2.VideoCapture(video_path)
    while cap.isOpened():
        ret, frame = cap.read()
        if not ret:
            break  # end of stream or read failure
        # ... per-frame processing ...
    cap.release()

The system supports:

- File-based batch processing

- Uploaded video streams via Flask

- Frame resizing for performance control

Frame resolution is dynamically adjusted to balance accuracy and latency.
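
One way to implement the resizing step is to cap the longer side of each frame while preserving its aspect ratio. The following is a minimal sketch; `scaled_size` and its `max_side` parameter are illustrative names, not taken from the repository.

```python
from typing import Tuple


def scaled_size(width: int, height: int, max_side: int = 960) -> Tuple[int, int]:
    """Return (width, height) scaled so the longer side is at most max_side.

    Hypothetical helper: the aspect ratio is preserved and frames that are
    already small enough are returned unchanged.
    """
    longer = max(width, height)
    if longer <= max_side:
        return width, height
    scale = max_side / longer
    # Round to the nearest pixel and never return a zero-sized dimension.
    return max(1, round(width * scale)), max(1, round(height * scale))
```

The result can be passed as the target size to `cv2.resize(frame, scaled_size(w, h))`. Lowering `max_side` trades detection accuracy on small or distant vehicles for lower latency.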

Vehicle Detection with YOLOv8

Model Setup

The system uses YOLOv8 with fine-tuned weights:

    from ultralytics import YOLO

    model = YOLO("best_traffic_med_yolo_v8.pt")

Custom-trained weights are provided in the repository.

Inference Pipeline

From main.py:

    results = model(frame, conf=0.4)
    detections = []
    for r in results:
        for box in r.boxes:
            x1, y1, x2, y2 = map(int, box.xyxy[0])
            conf = float(box.conf[0])
            cls = int(box.cls[0])
            detections.append([x1, y1, x2, y2, conf, cls])

Key design choices:

- Confidence threshold: 0.4

- Vehicle-focused class filtering

- Bounding box normalization

The system prioritizes recall to reduce missed detections that could break downstream tracking.
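
The inference loop above appends every detected box, so the class filtering listed among the design choices has to happen in a separate step. A minimal sketch of one way to do it follows, assuming detections in the `[x1, y1, x2, y2, conf, cls]` layout built above. The class IDs in `VEHICLE_CLASS_IDS` are placeholders and must be replaced with the indices used by the fine-tuned model.

```python
# Placeholder class indices: substitute the vehicle classes of your own model.
VEHICLE_CLASS_IDS = {0, 1, 2}


def filter_vehicles(detections, allowed=VEHICLE_CLASS_IDS, min_conf=0.4):
    """Keep detections whose class is allowed and whose confidence is high enough.

    Each detection is [x1, y1, x2, y2, conf, cls], as in the inference loop.
    """
    return [d for d in detections if d[5] in allowed and d[4] >= min_conf]
```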

Lane Detection and Road Geometry Extraction

Lane detection is implemented in lane_main.py using classical computer vision techniques.

Preprocessing

    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    blur = cv2.GaussianBlur(gray, (5, 5), 0)
    edges = cv2.Canny(blur, 50, 150)

Line Detection

    lines = cv2.HoughLinesP(
        edges,
        rho=1,
        theta=np.pi / 180,
        threshold=100,
        minLineLength=100,
        maxLineGap=50,
    )

Detected lanes are persisted in lines.npy for reuse and calibration.

This approach avoids relying on deep lane models, improving explainability and reducing compute cost.

Multi-Object Tracking with DeepSORT

Detection alone is insufficient for behavioural analysis. Violations depend on vehicle trajectories over time.

Tracking is implemented in track_and_flag.py using DeepSORT.

Tracker Initialization

    tracker = DeepSort(
        max_age=30,
        n_init=3,
        max_iou_distance=0.7,
    )

Parameters were tuned empirically:

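| Parameter | Value | Purpose |
|---|---|---|
| max_age | 30 | Occlusion recovery (missed frames tolerated before a track is dropped) |
| n_init | 3 | ID confirmation (consecutive detections required before a track is confirmed) |
| max_iou_distance | 0.7 | Association tolerance (IoU-distance gate for matching detections to tracks) |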

Update Step

    tracks = tracker.update_tracks(detections, frame=frame)
    for track in tracks:
        if not track.is_confirmed():
            continue
        track_id = track.track_id
        bbox = track.to_ltrb()

This produces stable vehicle identities across frames, which is essential for reliable violation logic.
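
The `DeepSort` constructor and `update_tracks` call above match the API of the deep-sort-realtime package. If that is the library in use (an assumption here, since the article does not name the package), each detection is expected as a `([left, top, width, height], confidence, class)` tuple rather than the `[x1, y1, x2, y2, conf, cls]` lists built in the inference step. A minimal conversion sketch, with `to_deepsort_format` as a hypothetical helper name:

```python
def to_deepsort_format(detections):
    """Convert [x1, y1, x2, y2, conf, cls] rows to ([l, t, w, h], conf, cls).

    Assumes corner-format pixel boxes as input; boxes with no area are dropped.
    """
    converted = []
    for x1, y1, x2, y2, conf, cls in detections:
        width, height = x2 - x1, y2 - y1
        if width <= 0 or height <= 0:
            continue  # skip degenerate boxes
        converted.append(([x1, y1, width, height], conf, cls))
    return converted
```

Under that assumption, the update step would become `tracker.update_tracks(to_deepsort_format(detections), frame=frame)`.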

Trajectory Management

Each tracked vehicle maintains a history buffer:

    from collections import defaultdict, deque

    history = defaultdict(lambda: deque(maxlen=50))

Centers are appended every frame:

    center = ((x1 + x2) // 2, (y1 + y2) // 2)
    history[track_id].append(center)

This rolling window enables temporal reasoning without excessive memory usage.
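
The `deque` bounds the memory used per vehicle, but the `history` dictionary itself gains an entry for every track ID ever seen. On long-running streams it may be worth dropping entries for tracks that are no longer active. A sketch of that idea, where `prune_history` and `active_ids` are illustrative names:

```python
from collections import defaultdict, deque

history = defaultdict(lambda: deque(maxlen=50))


def prune_history(history, active_ids):
    """Remove trajectories whose track ID is not in active_ids.

    Returns the list of IDs that were removed.
    """
    stale = [track_id for track_id in history if track_id not in active_ids]
    for track_id in stale:
        del history[track_id]
    return stale
```

Here `active_ids` could be built from the track IDs the tracker returns for the current frame, and the pruning could run every few hundred frames rather than on every iteration.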

Violation Detection Engine

Violation logic is implemented in track_and_flag.py and uses region-based and temporal rules.

Zone Definition

Zones are defined as polygons:

    restricted_zone = np.array([
        [420, 180],
        [820, 180],
        [900, 520],
        [350, 520],
    ], dtype=np.int32)  # pointPolygonTest expects an int32 or float32 contour

Point-in-Polygon Test

    def inside_zone(point, polygon):
        # With measureDist=False the result is +1 inside, 0 on the edge, -1 outside
        return cv2.pointPolygonTest(polygon, point, False) >= 0

Temporal Filtering

    def detect_violation(track_id):
        points = history[track_id]
        inside = sum(
            inside_zone(p, restricted_zone)
            for p in points
        )
        return inside > 35

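A violation is triggered only if more than 35 of a vehicle's last 50 tracked positions fall inside the restricted region, that is, more than 70% of a full history buffer. A track with fewer than 36 recorded positions can therefore never trigger one.

This suppresses false positives caused by reflections, partial occlusions, and brief misdetections.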
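
The fixed count of 35 ties the rule to a buffer of exactly 50 points. A ratio-based variant with a minimum sample count is sketched below. To keep the example free of dependencies it uses a plain ray-casting test in place of `cv2.pointPolygonTest`. The names `point_in_polygon`, `dwell_ratio`, `is_violation`, and `min_points` are hypothetical, and the thresholds are illustrative.

```python
def point_in_polygon(point, polygon):
    """Ray-casting test: True if point lies inside polygon.

    polygon is a sequence of (x, y) vertices. Points exactly on an edge
    are not guaranteed to count as inside.
    """
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does a horizontal ray cast to the right of the point cross this edge?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def dwell_ratio(points, polygon):
    """Fraction of trajectory points that fall inside polygon."""
    if not points:
        return 0.0
    return sum(point_in_polygon(p, polygon) for p in points) / len(points)


def is_violation(points, polygon, min_points=20, ratio=0.7):
    """Flag only when enough points exist and most of them are inside."""
    return len(points) >= min_points and dwell_ratio(points, polygon) > ratio
```

Expressing the rule as a ratio keeps it meaningful if the buffer length changes, while `min_points` prevents a track with only a handful of observations from being flagged.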

Red Light and Lane Violations

Traffic light orientation is handled using geometric calibration (TRAFFIC_LIGHT_ORIENTATION.md).

Lane misuse is detected by comparing vehicle trajectories with extracted lane boundaries:

    if center_x < left_lane or center_x > right_lane:
        flag_lane_violation(track_id)

This hybrid approach combines learned perception with deterministic rules, improving reliability.
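
The lane check above needs `left_lane` and `right_lane` as x-coordinates at the vehicle's position. Since Hough segments are given as `(x1, y1, x2, y2)` endpoints, one plausible way to obtain them is to interpolate each boundary segment at the vehicle's y-coordinate. The sketch below assumes one segment per boundary has already been selected; `x_at_y` and `outside_lane` are hypothetical helpers, not functions from lane_main.py.

```python
def x_at_y(segment, y):
    """x-coordinate of the line through segment at height y.

    segment is (x1, y1, x2, y2). Returns None for horizontal segments,
    which cannot act as a left or right boundary.
    """
    x1, y1, x2, y2 = segment
    if y1 == y2:
        return None
    return x1 + (y - y1) * (x2 - x1) / (y2 - y1)


def outside_lane(center, left_segment, right_segment):
    """True if center lies left of the left boundary or right of the right one."""
    cx, cy = center
    left_x = x_at_y(left_segment, cy)
    right_x = x_at_y(right_segment, cy)
    if left_x is None or right_x is None:
        return False  # boundaries unusable: do not flag
    return cx < left_x or cx > right_x
```

Interpolating at the vehicle's own height accounts for perspective, where lane boundaries converge toward the top of the frame and a single fixed x-threshold would be wrong for most rows.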

Visualization and Output Generation

Overlays are generated using video_overlay.py.

Rendering IDs and Trajectories

    cv2.putText(
        frame,
        f"ID:{track_id}",
        (x1, y1 - 10),
        cv2.FONT_HERSHEY_SIMPLEX,
        0.6,
        (0, 255, 0),
        2,
    )

Trajectory paths:

    for i in range(1, len(points)):
        cv2.line(frame, points[i - 1], points[i], (255, 0, 0), 2)

Outputs include:

- Annotated MP4 videos

- Structured metadata

- Violation logs

Pairing annotated video with structured records supports both visual debugging and downstream analytics.
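
The article does not specify the log format. As an illustration, violation records could be written as JSON Lines, one event per line. The `ViolationEvent` fields below are assumptions chosen for the example, not the repository's schema.

```python
import io
import json
from dataclasses import asdict, dataclass


@dataclass
class ViolationEvent:
    """Illustrative record; the field names are assumptions."""
    track_id: int
    violation_type: str
    frame_index: int
    center: tuple


def write_events(events, stream):
    """Write one JSON object per line to an open text stream."""
    for event in events:
        stream.write(json.dumps(asdict(event)) + "\n")


# Usage: write to an in-memory buffer; a file opened in text mode works the same way.
buffer = io.StringIO()
write_events([ViolationEvent(7, "restricted_zone", 120, (610, 340))], buffer)
```

A line-oriented format can be appended to while the video is still being processed and is easy to load into analytics tools afterwards.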

Performance Evaluation

Experiments were conducted on:

- GPU: RTX 3060

- CPU: Intel i7

- RAM: 32GB

- Resolution: 1080p

| Stage | FPS | Latency |
|---|---|---|
| Detection | 22 | 45 ms |
| Tracking | 60 | 6 ms |
| Full Pipeline | 18 | 55 ms |

Optimizations included:

- Frame skipping under load

- Batched inference

- Resolution scaling

Tracking stability was prioritized over peak throughput.
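
Frame skipping under load can be driven by measured latency: if processing one frame takes longer than the source's frame interval, intermediate frames are dropped so that output keeps pace with the input. A minimal sketch of such a policy, with `frames_to_skip` as a hypothetical helper:

```python
import math


def frames_to_skip(processing_ms: float, source_fps: float) -> int:
    """Number of source frames to drop after each processed frame.

    Hypothetical policy: process every k-th frame, where k is the smallest
    integer such that k frame intervals cover the processing time.
    """
    frame_interval_ms = 1000.0 / source_fps
    stride = max(1, math.ceil(processing_ms / frame_interval_ms))
    return stride - 1
```

With the 55 ms full-pipeline latency reported above and a 30 FPS source, this policy processes every second frame. Skipping more aggressively lowers load further but gives the tracker larger displacements between updates, which is the trade-off behind prioritizing tracking stability.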

Deployment Architecture

The system supports both local and service-based deployment.

Flask API

app.py exposes endpoints:

    @app.route('/upload', methods=['POST'])
    def upload_video():
        file = request.files['video']
        file.save(path)
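
The handler excerpt above saves whatever file it receives. A production endpoint would typically validate the filename before writing to disk. The sketch below shows a dependency-free check; `ALLOWED_EXTENSIONS` and `is_allowed_video` are hypothetical and not part of app.py.

```python
import os

# Assumed set of accepted container formats; adjust to what the pipeline can decode.
ALLOWED_EXTENSIONS = {".mp4", ".avi", ".mov"}


def is_allowed_video(filename: str) -> bool:
    """Accept only plain filenames with an allowed extension."""
    if not filename or os.path.basename(filename) != filename:
        return False  # reject empty names and anything containing a path
    _, ext = os.path.splitext(filename)
    return ext.lower() in ALLOWED_EXTENSIONS
```

The handler could call this on `file.filename` and return an HTTP 400 response when the check fails.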

Environment Setup

traffic_environment.yml defines dependencies:

    dependencies:
      - python=3.9
      - pytorch
      - opencv
      - ultralytics

This allows reproducible deployment across machines.

Containerization and GPU scheduling can be added on top of this foundation.

Limitations

Despite strong practical performance, several limitations remain:

- Sensitivity to extreme weather

- Manual zone calibration

- Limited nighttime robustness

- Camera-specific tuning

Future work includes automated calibration and self-supervised adaptation.

Engineering Lessons

Several key insights emerged:

- Tracking quality dominates system reliability

- Temporal filtering is more valuable than raw accuracy

- Hybrid ML + rules outperform pure ML systems

- Modular pipelines reduce iteration cost

- Explainability improves operational trust

These lessons are applicable to many real-world video analytics systems beyond traffic monitoring.

Conclusion

This project demonstrates that production-ready traffic violation detection requires more than accurate object detection. By combining YOLOv8, DeepSORT, classical computer vision, and rule-based reasoning, it is possible to build systems that are robust, explainable, and operationally viable.

Treating the problem as a systems engineering challenge, rather than a pure machine learning task, was critical to achieving reliable real-world performance.