Meetup is the most popular platform to organize community events. With more than 52 million members and over 300,000 Meetup groups, the site facilitates over 100,000 meetups per week around the world.

The COVID-19 pandemic has shifted these in-person community gatherings to virtual events. With this shift, I have noticed that groups I personally attend have become less engaged over time.

For example, in North America, the AWS meetup groups had at least one meetup in the past 12 months, while only 36% of groups have had a meetup in the past 3 months. This data suggests that "Zoom fatigue" is growing as events have stayed virtual.

As a freshly minted Developer Advocate, I wanted to gather data on the best way to re-engage with the meetup groups. Before anything gets done at AWS, data is needed. To collect meetup data, I developed a mechanism to capture relevant data and built a dashboard with Streamlit to display insights. Some highlights include:

- Tracking active meetups: The team now has visibility into which user groups have been active in the past 12, 6, or 3 months.

- Visualizing where members are: The team now has a map to see where our groups are located and how big their footprint is.

- Keeping up with events: The team now has a table ordered by last event timestamp to see which groups recently had an event.

In this post, I will describe how I built this solution and how I leveraged cloud resources.

Overview of the solution

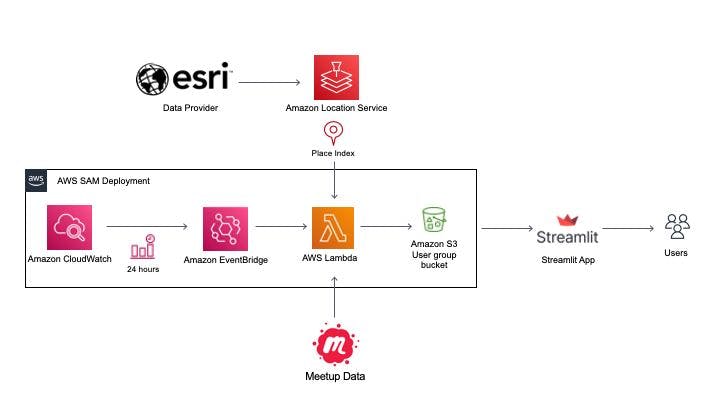

While this solution could be deployed on a cloud provider of your choosing, I will focus on how I used AWS. First, I developed Python code to scrape meetup data. Second, I used Amazon Location Service to geocode the meetup cities into longitude and latitude coordinates. The final data is then stored in an Amazon S3 bucket. I used Amazon EventBridge to set up a daily job that triggers an AWS Lambda function to run my Python code. Then, I used Streamlit to create a dashboard to display the data. Finally, the entire pipeline is deployed using the AWS Serverless Application Model (SAM). The diagram above illustrates this architecture.

Collecting Meetup data

I used BeautifulSoup and the requests library to scrape the content from the AWS user group website. The script first gets the meetup URL for each user group through the get_user_group_data function. Based on the presence of certain div attributes, it stores the relevant meetup URL and name in a list to be scraped. Next, the get_meetup_info function iterates through the list and parses the information on each individual meetup page, such as the meetup name, number of members, and meetup location. The raw data is saved as a CSV for further processing.

The following shows a sample of the script:

    import requests
    from bs4 import BeautifulSoup

    # meetup_url is the URL of a single meetup group page
    meetup_json = {}
    page = requests.get(meetup_url)
    usergroup_html = page.text
    soup = BeautifulSoup(usergroup_html, "html.parser")

    # Get Meetup Name
    meetup_name = soup.find_all("a", {"class": "groupHomeHeader-groupNameLink"})[0].text

    # Meetup location
    meetup_location = soup.find_all("a", {"class": "groupHomeHeaderInfo-cityLink"})[
        0
    ].text

    # Number of members: take the leading number and drop thousands separators
    meetup_members = (
        soup.find_all("a", {"class": "groupHomeHeaderInfo-memberLink"})[0]
        .text.split(" ")[0]
        .replace(",", "")
    )

    # Past events: the count appears in parentheses after "Past events"
    past_events = (
        soup.find_all("h3", {"class": "text--sectionTitle text--bold padding--bottom"})[
            0
        ]
        .text.split("Past events ")[1]
        .replace("(", "")
        .replace(")", "")
    )
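The scraped values are still strings at this point, for example a member count with a thousands separator. The following is a minimal sketch, not the original script, of how such values might be normalized and written to CSV using only the standard library. The function names `parse_count` and `save_rows_to_csv` and the column names are assumptions made for illustration.

```python
import csv
import re


def parse_count(text: str) -> int:
    """Return the first integer found in text, ignoring thousands separators."""
    match = re.search(r"\d[\d,]*", text)
    if match is None:
        raise ValueError(f"no number found in {text!r}")
    return int(match.group(0).replace(",", ""))


def save_rows_to_csv(rows, path):
    """Write one CSV row per meetup group (column names are illustrative)."""
    fieldnames = ["meetup_name", "meetup_location", "meetup_members", "past_events"]
    with open(path, "w", newline="", encoding="utf-8") as csv_file:
        writer = csv.DictWriter(csv_file, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(rows)
```

Using a regular expression instead of chained `split` and `replace` calls means the same helper could handle both the member link text and the "Past events" heading, and it fails loudly if the page layout changes and no number is present.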

Geocoding user groups

To plot each meetup group on a map, we need the longitude and latitude of each group's city. I used Amazon Location Service to geocode each city name into longitude and latitude coordinates using a place index. For more information about creating a place index, see the Amazon Location Service Developer Guide. Here is an example of a block of Python code that uses a place index for geocoding:

    import boto3


    def get_location_data(location: str):
        """
        Purpose:
            get location data from name
        Args:
            location - name of location
        Returns:
            lat, lng - latitude and longitude of location
        """
        client = boto3.client("location")
        response = client.search_place_index_for_text(
            IndexName="my_place_index", Text=location
        )
        print(response)

        geo_data = response["Results"][0]["Place"]["Geometry"]["Point"]
        # Example output for Arlington, VA: 'Results': [{'Place': {'Country': 'USA', 'Geometry': {'Point': [-77.08628999999996, 38.89050000000003]}, 'Label': 'Arlington, VA, USA', 'Municipality': 'Arlington', 'Region': 'Virginia', 'SubRegion': 'Arlington County'}}

        # The point is returned as [longitude, latitude]
        lat = geo_data[1]
        lng = geo_data[0]
        print(f"{lat},{lng}")

        return lat, lng
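Many user groups share a city, and some city names may not resolve at all. As a hedged sketch of one way to handle both cases, the helper below looks up each distinct city once and records `None` when the place index returns no results. The function name `geocode_cities` is hypothetical, and `client` is assumed to be a boto3 Amazon Location Service client created with `boto3.client("location")`.

```python
def geocode_cities(client, cities, index_name="my_place_index"):
    """Geocode each distinct city once and return {city: (lat, lng)}.

    `client` is assumed to be boto3.client("location").
    Cities with no match are mapped to None.
    """
    coordinates = {}
    for city in cities:
        if city in coordinates:
            continue  # already looked up; avoid a duplicate API call
        response = client.search_place_index_for_text(
            IndexName=index_name, Text=city, MaxResults=1
        )
        results = response.get("Results", [])
        if not results:
            coordinates[city] = None
            continue
        # Amazon Location Service returns points as [longitude, latitude]
        lng, lat = results[0]["Place"]["Geometry"]["Point"]
        coordinates[city] = (lat, lng)
    return coordinates
```

Passing the client in as an argument, rather than creating it inside the function, keeps the helper easy to exercise with a stub object during local testing.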

Using SAM to Orchestrate the Deployment

After testing the script locally, the next step was to create a mechanism to run the script daily and store the results in S3. I used SAM to create a serverless application that does this.

- From a terminal window, initialize a new application:

      sam init

- Change directory:

      cd ./sam-meetup

- Update dependencies in my_app/requirements.txt:

      requests
      pandas
      bs4

- Add your code to the example my_app/app.py:

      import json
      import logging

      import get_meetup_data


      def lambda_handler(event, context):
          logging.info("Getting meetup data")
          try:
              get_meetup_data.main()
          except Exception as error:
              logging.error(error)
              raise error
          return {
              "statusCode": 200,
              "body": json.dumps(
                  {
                      "message": "meetup data collected",
                  }
              ),
          }

- Update template.yml:

      Globals:
        Function:
          Timeout: 600

      Resources:
        S3Bucket:
          Type: 'AWS::S3::Bucket'
          Properties:
            BucketName: MY_BUCKET_NAME
        GetMeetupDataFunction:
          Type: AWS::Serverless::Function
          Properties:
            CodeUri: my_app/
            Handler: app.lambda_handler
            Policies:
              - S3WritePolicy:
                  BucketName: MY_BUCKET_NAME
            Runtime: python3.9
            Architectures:
              - x86_64
            Events:
              GetMeetupData:
                Type: Schedule
                Properties:
                  Schedule: 'rate(24 hours)'
                  Name: MeetupData
                  Description: getMeetupData
                  Enabled: True

- Run the build:

      sam build

- Deploy the application to AWS:

      sam deploy --guided

For more detailed information on developing SAM applications, visit Getting started with AWS SAM.
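The post does not show how the Lambda function writes its results to the bucket. The following is a minimal sketch of one possible approach, assuming the rows are held in memory as a non-empty list of dictionaries. The function name `upload_rows_as_csv` and the object key are hypothetical, and `s3_client` is assumed to be created with `boto3.client("s3")`.

```python
import csv
import io


def upload_rows_as_csv(s3_client, bucket, key, rows):
    """Serialize rows (a non-empty list of dicts) to CSV and upload to S3.

    `s3_client` is assumed to be boto3.client("s3").
    """
    buffer = io.StringIO()
    # Use the keys of the first row as the CSV header
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    s3_client.put_object(
        Bucket=bucket,
        Key=key,
        Body=buffer.getvalue().encode("utf-8"),
        ContentType="text/csv",
    )
```

Building the CSV in memory avoids writing to the function's local file system. A call such as `upload_rows_as_csv(boto3.client("s3"), "MY_BUCKET_NAME", "meetup_data.csv", rows)` would rely on the write permission that the template grants on that bucket.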

Visualizing data with Streamlit

To gain insights from the meetup data, I chose to use Streamlit to host our dashboard. Streamlit is an open-source Python library that allows you to build beautiful visualizations with ease. Streamlit works well on your laptop or in the cloud, making it great for building sharable dashboards. The following are example screenshots from the dashboard.

Here is a snippet of code used to render the map from Streamlit using streamlit-folium:

    import streamlit as st
    from streamlit_folium import folium_static
    import folium


    def show_map(df):
        """
        Purpose:
            Show map
        Args:
            df - raw data
        Returns:
            N/A
        """
        # center of US
        m = folium.Map(location=[38.500000, -98.000000], zoom_start=4)

        for index, row in df.iterrows():
            # add marker for meetup group
            meetup_name = row["meetup_name"]
            meetup_members = row["meetup_members"]
            lat = row["latitude"]
            lng = row["longitude"]
            is_meetup_active_6_months = row["is_meetup_active_6_months"]

            popup_html = f"<ul><li>Meetup Name:{meetup_name}</li><li>Meetup Members:{meetup_members} </li><li>is_meetup_active_6_months: {is_meetup_active_6_months} </li></ul>"

            # blue for groups active in the past 6 months, red otherwise
            curr_color = ""
            if is_meetup_active_6_months:
                curr_color = "blue"
            else:
                curr_color = "red"

            folium.Circle(
                [lat, lng],
                popup=popup_html,
                tooltip=meetup_name,
                radius=float(meetup_members) * 20,
                color=curr_color,
                fill=True,
                fill_color=curr_color,
            ).add_to(m)

        # call to render Folium map in Streamlit
        folium_static(m)
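The map relies on an `is_meetup_active_6_months` column, and the dashboard also tracks 12- and 3-month windows and a table ordered by last event timestamp. How those values are computed is not shown, so the following is only a sketch under stated assumptions: a month is approximated as 30 days, the 12- and 3-month flag names mirror the 6-month one, and the `last_event` key and both function names are hypothetical.

```python
from datetime import datetime, timedelta


def activity_flags(last_event, now):
    """Return activity flags for one group, treating a month as 30 days."""
    age = now - last_event
    return {
        f"is_meetup_active_{months}_months": age <= timedelta(days=30 * months)
        for months in (12, 6, 3)
    }


def sort_by_last_event(groups):
    """Order groups so the most recent event comes first."""
    return sorted(groups, key=lambda group: group["last_event"], reverse=True)
```

Passing `now` explicitly, instead of calling `datetime.now()` inside the function, keeps the flags reproducible for a given daily run.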

Conclusion

In this post, I described how I built a serverless data pipeline to analyze meetup data with Streamlit. I highlighted how to:

- Geocode addresses using Amazon Location Service

- Use Amazon EventBridge and AWS Lambda to transform and load the data daily to S3

- Visualize and share the stored data using Streamlit

- Automate and orchestrate the entire solution using SAM

My team plans on using this solution to strategize on how best to engage our meetup community. If you are interested in participating in your local AWS community, check out the list of meetups located here: https://aws.amazon.com/developer/community/usergroups/

Disclaimer

I currently work for AWS as a Senior Developer Advocate. The opinions expressed in this blog are mine.