Authors:

(1) Smi Hinterreiter;

(2) Martin Wessel;

(3) Fabian Schliski;

(4) Isao Echizen;

(5) Marc Erich Latoschik;

(6) Timo Spinde.

Table of Links

- Abstract and Introduction

- Related Work

- Feedback Mechanisms

- The NewsUnfold Platform

- Results

- Discussion

- Conclusion

- Acknowledgments and References

A. Feedback Mechanism Study Texts

B. Detailed UX Survey Results for NewsUnfold

C. Material Bias and Demographics of Feedback Mechanism Study

Abstract

Media bias is a multifaceted problem, leading to one-sided views and impacting decision-making. A way to address digital media bias is to detect and indicate it automatically through machine-learning methods. However, such detection is limited due to the difficulty of obtaining reliable training data. Human-in-the-loop-based feedback mechanisms have proven an effective way to facilitate the data-gathering process. Therefore, we introduce and test feedback mechanisms for the media bias domain, which we then implement on NewsUnfold, a news-reading web application to collect reader feedback on machine-generated bias highlights within online news articles. Our approach augments dataset quality by significantly increasing inter-annotator agreement by 26.31% and improving classifier performance by 2.49%. As the first human-in-the-loop application for media bias, the feedback mechanism shows that a user-centric approach to media bias data collection can return reliable data while being scalable and evaluated as easy to use. NewsUnfold demonstrates that feedback mechanisms are a promising strategy to reduce data collection expenses and continuously update datasets to changes in context.

1 Introduction

Media bias, slanted or one-sided media content, impacts public opinion and decision-making processes, especially on web platforms and social media (Ardevol-Abreu and Z ` u´niga ˜ 2017; Eberl, Boomgaarden, and Wagner 2017; Spinde et al. 2023). News consumers are frequently unaware of the extent and influence of bias (Kause, Townsend, and Gaissmaier 2019; Spinde et al. 2020; Ribeiro et al. 2018), leading to limited awareness of specific issues and narrow, one-sided points of view (Ardevol-Abreu and Z ` u´niga 2017; Eberl, ˜ Boomgaarden, and Wagner 2017). As promoting media bias awareness has beneficial effects (Park et al. 2009; Spinde et al. 2022), emphasis on the need for methods that automatically detect media bias is growing (Wessel et al. 2023). Such methods potentially impact user behavior, as they facilitate the development of systems that analyze various subtypes of bias comprehensively and in real-time (Spinde et al. 2021a).

Several approaches have been developed for automated media bias classification (Wessel et al. 2023; Spinde et al. 2024; Liu et al. 2021; Hube and Fetahu 2019; Vraga and Tully 2015). However, they share a challenge: While datasets are vital for training machine-learning models, the intricate and subjective nature of media bias makes the manual creation of these datasets time-consuming and expensive (Spinde et al. 2021b). Crowdsourcing is cost-effective but can yield unreliable annotations with low annotator agreement (Recasens, Danescu-Niculescu-Mizil, and Jurafsky 2013). In contrast, expert raters ensure consistency but

lead to substantial costs (Spinde et al. 2021b),1 making scaling data collection challenging (Spinde et al. 2021b). Consequently, the media bias domain lacks reliable datasets for effective training of automatic detection systems (Wessel et al. 2023). Successful Human-in-the-loop (HITL) approaches addressing similar challenges (Mosqueira-Rey et al. 2022; Karmakharm, Aletras, and Bontcheva 2019) remain untested for media bias, particularly visual methods (Karmakharm, Aletras, and Bontcheva 2019).

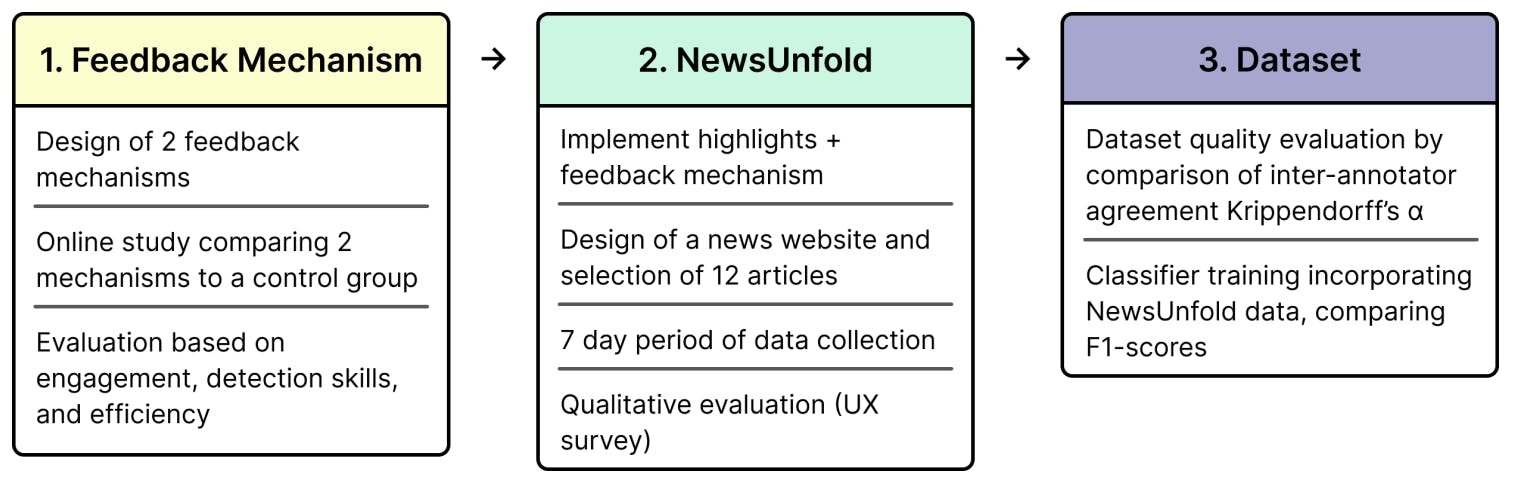

We propose a HITL ffedback mechanim showcased on NewsUnfold, a news-reading platform that visually indicates linguistic bias to readers and collects user input to improve dataset quality. NewsUnfold is the first approach employing feedback collection to gather a media bias dataset. In the first of three phases (Figure 1), since visual HITL (Human-in-the-Loop) methods for media bias annotation have not previously been tested, we conducted a study comparing two feedback mechanisms (Section 3). Second, we implement a feedback mechanism on NewsUnfold (Section 4). Third, we use NewsUnfold with 12 articles to curate the NewsUnfold Dataset (NUDA), comprising approximately 2000 annotations (Section 4). Notably, the collected feedback annotations exhibit a 90.97% agreement with expert annotations and a 26.31% higher inter-annotator agreement (IAA) than the baseline, the expert-annotated BABE dataset (Spinde et al. 2021b).[2] This increase is also visible when the dataset is used in classifier training, resulting in an F1-score of .824, an increase of 2.49% compared to the baseline BABE performance. While the platform’s design is adaptable to diverse subtypes of bias, we facilitate our evaluation by focusing on linguistic bias. Linguistic bias is defined by Spinde et al. (2024) as a bias by word choice to transmit a perspective that manifests prejudice or favoritism towards a specific group or idea (Spinde et al. 2024). Despite being neither objective nor binary, collecting binary labels is a promising solution regarding the challenges arising from its ambiguous and complex nature (Spinde et al. 2021b). A UX study involving 13 participants highlights high ease of use and enthusiasm for the concept. Participants also reported a strong perceived impact on critical reading and expressed positive sentiment toward the highlights.

In this work, we:

-

Explore feedback mechanisms for the first time in the context of automated media bias detection methods.

-

Introduce and evaluate NewsUnfold, a news-reading platform highlighting bias in news articles, making media bias detection models accessible for everyday news consumers. NewsUnfold collects feedback on bias highlights to improve its automatic detection.[3]

-

Generate the NewsUnfold Dataset (NUDA) incorporating approximately 2,000 annotations.

-

Present classifiers trained using NUDA and benchmarked against existing methodologies, enhancing performance when combined with other datasets.

This paper proposes a design for a cost-effective HITL system to improve and scale media bias datasets. Such feedback mechanisms can be integrated into various media platforms to highlight media bias and related concepts. Further, the system can adapt to changes in language and context, facilitating applied endeavors to run models on news sites and social media to understand and mitigate media bias and increase readers’ awareness.

This paper is available on arxiv under CC0 1.0 license.

[1] For example, in the expert-based BABE dataset, one sentence label costs four to six euros, varying with rater count.

[2] The IAA evaluates how consistently different individuals assess or classify the same dataset (Hayes and Krippendorff 2007).