Table of Links

- Abstract and Introduction

- Related Work

- Experiments

- Discussion

- Limitations and Future Work

- Conclusion, Acknowledgments and Disclosure of Funding, and References

3 Experiments

3.1 Design

Model Selection. To analyze the impact of fine-tuning on toxicity we first select a small number of high impact base models for experimentation. For compute-efficiency, and because many community developers similarly lack computational resources for large models, we select small models offered by three major labs, Google, Meta, and Microsoft, for analysis. For each lab we select two generations of models (e.g. Llama-2 and Llama-3) in order to explore potential changes over time. For each model we sought to analyze both the foundation model and the instruction-tuned, or chat-tuned, variant where available. Six models in total were analyzed: Phi-3-mini, Phi-3.5-mini, Llama-2-7B, Llama-3.1-8B, Gemma-2B, and Gemma-2-2B.

For each instruction-tuned model we conducted additional fine-tuning using the Dolly dataset from Databricks, an open-source dataset of 15k instruction-following records across topics including question-answering, text generation and summarization (Conover et al., 2023). The dataset does not intentionally contain toxic content, and is intended to fine-tune models to improve instructionfollowing capabilities. We conducted LoRA fine-tuning via the Unsloth library, and tuned each model using a T4 GPU via Google Colab for 1 epoch, with prior work demonstrating the number of epochs does not appear to materially impact safety performance (Qi et al., 2023).

Finally, for each instruction-tuned model we selected additional community-tuned variants uploaded to Hugging Face which were fine-tuned from the instruction-tuned checkpoint. To select these models, we searched for the instruction-tuned model within the Hugging Face model library, and sorted models by “Most Downloaded” (monthly), to assess models which were commonly used by other users. Many of the most popular models were quantizations of models, which were removed from analysis. We selected only models which were available using the Transformers library and analyzed two community-tuned models for each instruction-tuned variant. We observed that frequently the most popular models related to fine-tuning for improving multilingual capabilities or fine-tuning for uncensoring, otherwise known as “abliterating” - where fine-tuning aims to reduce refusal rates. This enabled us to assess a range of community-tuned models which might be expected to impact toxicity in different ways.

The final models selected in aggregate were downloaded over 60,000 times in the month prior to analysis, highlighting the popularity and relevance of community-tuned models.

Data. To assess toxicity we compiled a dataset of 2,400 prompts. The majority of the prompts derived from the RealToxicityPrompts dataset (Gehman et al., 2020). 1000 prompts of the 2.4k dataset were randomly sampled from the RealToxicityPrompts dataset, to assess how models respond to a range of toxic and non-toxic input prompts (Gehman et al., 2020). An additional 1000 prompts were selected based on scoring >0.75 for “severe toxicity” within the RealToxicityPrompts dataset. These prompts aimed to assess how robust models were to specific attacks seeking to elicit toxic outputs. The final 400 prompts consisted of four 100 prompt datasets covering prompts targeting race, age, gender, and religion, taken from the Compositional Evaluation Benchmark (CEB) dataset, intended to analyze potential disparities in performance across specific topics (S. Wang et al., 2024).

Metric. The roberta-hate-speech-dynabench-r4 model was used to determine toxicity of model generations (Vidgen et al., 2020). This model is the default toxicity metric provided by the Hugging Face Evaluate library, and defines toxicity, or hate, as “abusive speech targeting specific group characteristics, such as ethnic origin, religion, gender, or sexual orientation”. The model rates each output from 0 (non-toxic) to 1 (toxic) and sets a default threshold of >0.5 for determining a toxic output.

Comparisons. To assess the impact of fine-tuning on toxicity we conduct three experiments:

-

Comparing base models with instruction-tuned variants. We analyze how model creators’ fine-tuning impacts toxicity rates.

-

Comparing instruction-tuned variants with Dolly-tuned variants. We compare how toxicity is impacted when instruction tuned variants are continually fine-tuned using a non-adversarial dataset (Dolly), using the parameter efficient fine-tuning low rank adaptation.

-

Comparing instruction-tuned variants with community-tuned variants. We assess how toxicity is impacted in popularly used community-tuned variants of instruction-tuned models.

For each experiment we set temperature to 0 for all model generations, to determine the most likely next token. For each generation we restricted model outputs to 50 tokens. All models were accessed via the Hugging Face Model Hub using the Transformers library. Experiments were run using Google Colab using a single L4 GPU. In total, we assessed 28 models, which are listed in full in Appendix A.

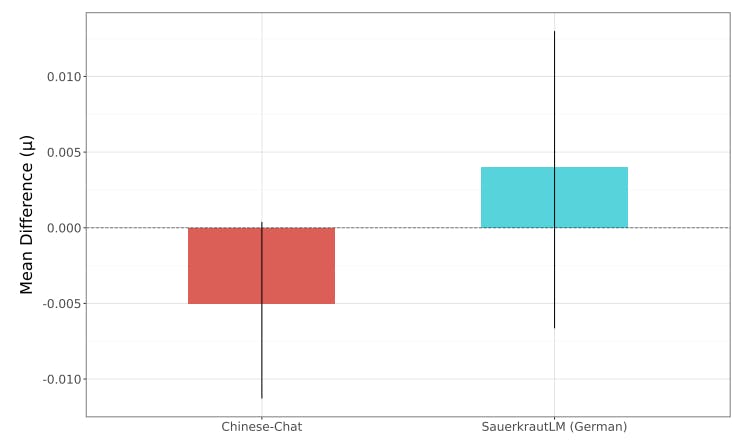

Estimation. To determine whether there is a credible difference between the propensity of models to output toxic content, we conduct Bayesian estimation analysis (BEST) to compare the results of pairs of models. We undertake this analysis using the continuous toxicity score, (yij ), provided by the toxicity metric, ranging from 0 to 1. We assume that the scores for each model j are sampled from a t-distribution:

yij ∼ t(ν, µj , σj ),

where ν is the degrees of freedom, µj is the mean toxicity score for model j, and σj is the scale parameter for model j. We then estimate the posterior distribution of the difference between group means (µ1 − µ2) using Bayesian inference and Markov Chain Monte Carlo (MCMC) methods. We use weakly informative priors for µ and σ, with a standard normal distribution applied for µ and a half-cauchy prior distribution with a beta of 10 in the case of σ (Gelman, 2006).

We select bayesian analysis rather than traditional significance tests such as a chi-squared test or z-test for two reasons. Firstly, the nature of conducting evaluations on generative models means it can be trivial to achieve statistically significant but practically small differences in model outputs. Secondly, various scholars have highlighted the pitfalls of converging continuous data into dichotomous data for the purposes of significance analysis (Dawson & Weiss, 2012; Irwin & McClelland, 2003; Royston et al., 2006). As a result, we concluded that bayesian analysis was the most appropriate measurement to determine how credible the differences between the toxicity rates for different models were.

Authors:

(1) Will Hawkins, Oxford Internet Institute University of Oxford;

(2) Brent Mittelstadt, Oxford Internet Institute University of Oxford;

(3) Chris Russell, Oxford Internet Institute University of Oxford.

This paper is available on arxiv under CC 4.0 license.