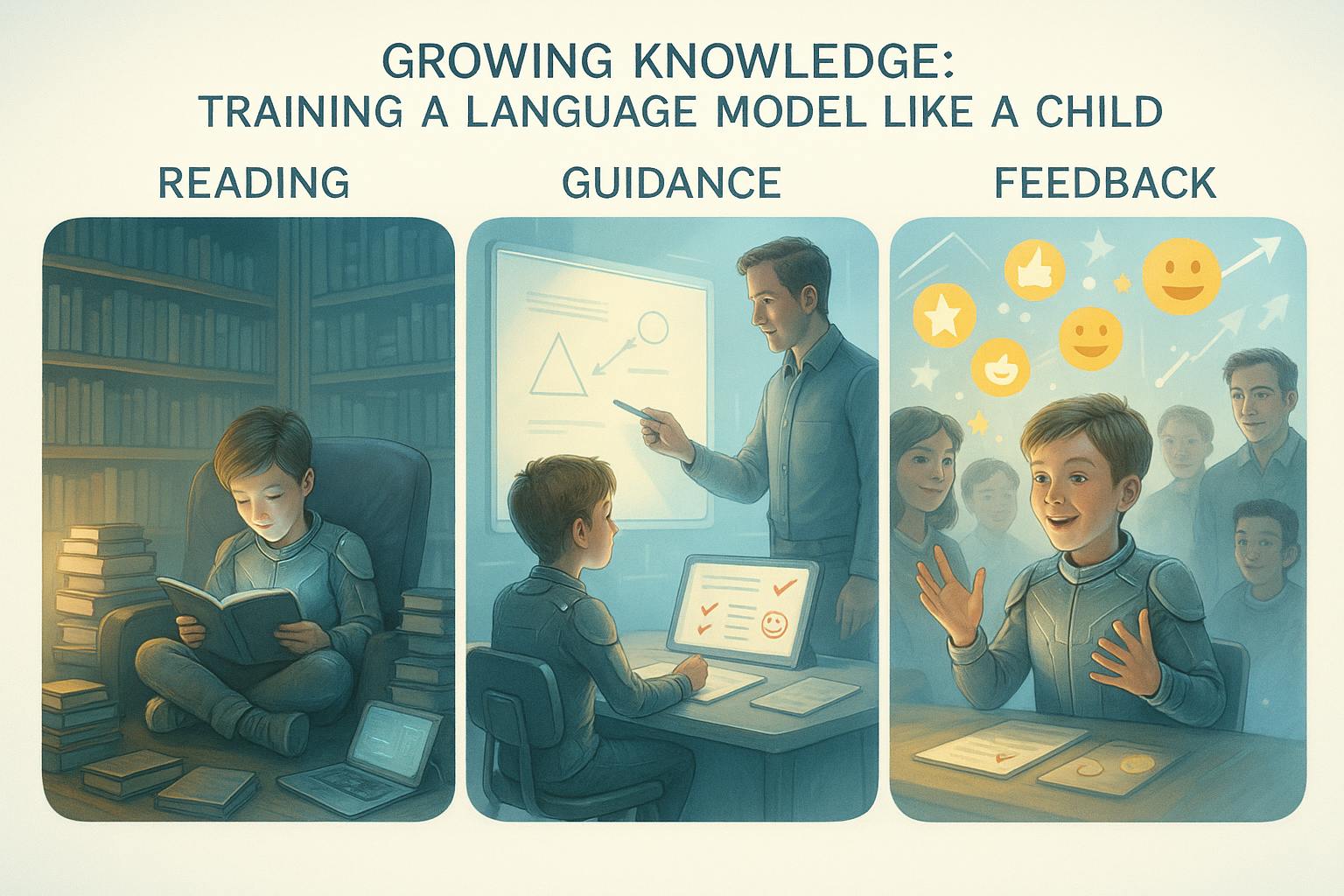

Training a large language model is like teaching someone how to speak and behave. Imagine a kid learning a language and being gradually guided to answer questions appropriately. We can break this process into three main stages, each illustrated with an everyday example.

Step 1: Learning by Reading — Self-Supervised Pre-Training

Think of a child growing up by reading every book available in the home library. Instead of having a teacher always explain things, the child learns the language naturally by listening, reading, and trying to understand context, grammar, and vocabulary.

How It Works in LLMs:

- Learning from All Kinds of Text: The model "reads" a huge amount of text from books, websites, and online forums. Just as a child picks up language patterns and common phrases, the model learns how words connect to form meaningful sentences.

- Predicting the Next Word: Imagine a guessing game where the child reads the start of a sentence and must guess the word that comes next. Over time, by guessing repeatedly, the child learns which words fit best. The model is trained the same way: it repeatedly predicts the next word (more precisely, the next token, which may be a whole word or a piece of one), and this teaches it the structure and flow of language.
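The "guess the next word" game can be made concrete with a toy sketch in Python. This is a hypothetical bigram model, not how an LLM works internally: real models use neural networks over tokens and look at far more context than a single previous word. The sketch counts which word follows which in a tiny made-up corpus, then measures the average cross-entropy (how "surprised" the model is by each actual next word), which is the quantity pre-training works to reduce.

```python
import math
from collections import Counter, defaultdict

# A tiny made-up "home library" for the model to read (illustrative only).
corpus = "the cat sat on the mat . the dog sat on the rug .".split()

# Count how often each word is followed by each other word.
follow_counts = defaultdict(Counter)
for prev_word, next_word in zip(corpus, corpus[1:]):
    follow_counts[prev_word][next_word] += 1


def next_word_probs(prev_word):
    """Turn the follow-up counts for prev_word into next-word probabilities."""
    counts = follow_counts[prev_word]
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()}


def cross_entropy(tokens):
    """Average negative log-probability the model gives each actual next word.

    Lower means the model guesses better; pre-training pushes this number down.
    """
    losses = [
        -math.log(next_word_probs(prev)[nxt])
        for prev, nxt in zip(tokens, tokens[1:])
    ]
    return sum(losses) / len(losses)


print(next_word_probs("sat"))  # {'on': 1.0}
print(next_word_probs("the"))  # {'cat': 0.25, 'mat': 0.25, 'dog': 0.25, 'rug': 0.25}
print(round(cross_entropy("the cat sat on the mat".split()), 3))  # 0.555
```

After "sat" the toy model is certain the next word is "on", while after "the" it has to spread its bets across four words; that uncertainty is exactly what the loss measures.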

Step 2: Learning What’s Right and Wrong — Supervised Fine-Tuning

Now suppose the same child has a teacher who guides them on proper behavior and acceptable language. The teacher shows the child examples of good answers and explains why certain responses are more appropriate than others.

How It Works in LLMs:

- Curated Examples: In this stage, human annotators write or collect example questions paired with high-quality answers. It’s like a teacher offering a set of “model answers” to common questions.

- Adjusting the Response: The model is fine-tuned to imitate these good answers. For instance, a pre-trained model may produce harmful or inappropriate responses it picked up from its training text (just as a child might repeat questionable language they overheard); fine-tuning on curated examples shows it what to say instead.
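A minimal sketch of the fine-tuning signal, assuming the model has already assigned a probability to each token of one curated question-and-answer example. A common choice (assumed here, not stated in this article) is to reuse the next-word loss from pre-training but average it only over the answer tokens, so the model is graded on what it says rather than on re-predicting the question. `sft_loss` is a hypothetical helper and the probabilities are made-up numbers.

```python
import math


def sft_loss(token_probs, is_answer_token):
    """Average negative log-likelihood over the answer tokens only.

    token_probs: probability the model assigned to each correct token.
    is_answer_token: True for tokens of the curated answer, False for prompt tokens.
    """
    answer_losses = [
        -math.log(p)
        for p, is_answer in zip(token_probs, is_answer_token)
        if is_answer
    ]
    return sum(answer_losses) / len(answer_losses)


# Made-up example: 3 question tokens followed by 3 answer tokens.
probs = [0.05, 0.10, 0.20, 0.50, 0.80, 0.90]
mask = [False, False, False, True, True, True]

print(round(sft_loss(probs, mask), 3))  # 0.341
```

Fine-tuning nudges the model so the answer-token probabilities rise, which lowers this loss; the low probabilities on the question tokens are deliberately ignored.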

Real-Life Analogy:

Consider a teacher who explains why certain rules, like “don’t run in the hallways,” matter even though the child already knows how to run. The teacher’s guidance shapes the child’s understanding of the proper way to act or answer.

Step 3: Learning by Feedback — Reinforcement Learning from Human Feedback (RLHF)

Imagine the child now taking part in a class debate. They speak, and the teacher and classmates provide immediate praise for good points and gentle correction for weak arguments. Over time, the child refines how they articulate ideas based on this feedback.

How It Works in LLMs:

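- Multiple Attempts and Feedback: The model generates several responses to the same question, much like a student offering different answers during a discussion.

- Reward System: Human reviewers (acting as the teacher) compare these responses and rank them from best to worst. Good answers earn “rewards” while poor answers receive lower scores. Think of it as a gold star system where each star helps the student understand what they did well. In practice, these rankings are used to train a separate reward model that learns to score new responses the way the reviewers would, so humans don’t have to grade every answer.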

- Adjusting Future Responses: Based on these scores, the model learns to favor responses similar to the highly scored ones. This is similar to the child learning which arguments or explanations are most persuasive or correct.
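In the InstructGPT approach, reviewer rankings are turned into pairs (preferred response, rejected response), and a reward model is trained so that the preferred response scores higher. The sketch below shows that pairwise idea with a deliberately tiny, hypothetical reward model: a linear score over two hand-made features (`helpful`, `rude`) instead of a neural network. Training lowers the loss -log(sigmoid(r_preferred - r_rejected)), which pushes each preferred response's score above its rejected partner's. The comparisons, learning rate, and step count are made-up illustration values.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def reward(weights, features):
    """Hypothetical linear reward model: one weight per hand-made feature."""
    return sum(w * f for w, f in zip(weights, features))


# Made-up comparisons: (features of preferred answer, features of rejected answer).
# Features are [helpful, rude], each 0 or 1 purely for illustration.
comparisons = [
    ([1.0, 0.0], [0.0, 0.0]),  # helpful beats unhelpful
    ([1.0, 0.0], [1.0, 1.0]),  # polite beats rude
    ([0.0, 0.0], [0.0, 1.0]),  # neutral beats rude
]


def train_reward_model(comparisons, lr=0.5, steps=200):
    weights = [0.0, 0.0]
    for _ in range(steps):
        for chosen, rejected in comparisons:
            margin = reward(weights, chosen) - reward(weights, rejected)
            # Derivative of -log(sigmoid(margin)) with respect to the margin.
            grad_margin = -(1.0 - sigmoid(margin))
            # Chain rule: d(margin)/d(weight_i) = chosen_i - rejected_i.
            for i in range(len(weights)):
                weights[i] -= lr * grad_margin * (chosen[i] - rejected[i])
    return weights


weights = train_reward_model(comparisons)
print([round(w, 2) for w in weights])  # "helpful" weight positive, "rude" negative
print(reward(weights, [1.0, 0.0]) > reward(weights, [1.0, 1.0]))  # True
```

Once trained, a model like this can score any new response instantly, which is what lets the later optimization step run without a human grading every answer.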

Two Methods – PPO and DPO:

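- PPO (Proximal Policy Optimization): Imagine the teacher not only hands out stars but also makes sure the student changes their approach only a little after each round of feedback and does not drift too far from what they learned in Step 2. This keeps the child’s responses balanced between creativity and correctness. In LLMs, PPO uses the reward model’s scores to update the model in small, limited steps, usually with a penalty for straying too far from the fine-tuned model.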

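- DPO (Direct Preference Optimization): In another classroom, the teacher skips the star chart entirely and simply shows the student pairs of answers, pointing out which of the two is better. DPO trains the model directly on these “preferred vs. rejected” pairs, without a separate reward model or a reinforcement learning loop. This streamlines the process and generally makes it simpler and cheaper to run than PPO.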
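The two methods can be compared side by side in a small sketch. The PPO part shows the clipped surrogate objective from the PPO paper for a single sampled response: the ratio of new to old probability is clipped to [1 - eps, 1 + eps], so one round of feedback cannot change the model too much. The DPO part shows the DPO loss for one preference pair: it checks how much more the model, relative to a frozen reference model, favors the preferred answer over the rejected one. All log-probabilities are made-up numbers, `eps=0.2` and `beta=0.1` are common illustrative choices rather than values from this article, and the KL penalty that RLHF setups typically add to PPO is omitted for brevity.

```python
import math


def ppo_clipped_objective(logp_new, logp_old, advantage, eps=0.2):
    """PPO clipped surrogate objective for one sampled response (maximized).

    advantage > 0 means the response scored better than expected.
    """
    ratio = math.exp(logp_new - logp_old)  # how much more likely the new model makes it
    clipped_ratio = min(max(ratio, 1.0 - eps), 1.0 + eps)
    # Taking the minimum means the update never gets credit for moving too far.
    return min(ratio * advantage, clipped_ratio * advantage)


def dpo_loss(logp_chosen, logp_rejected, ref_logp_chosen, ref_logp_rejected, beta=0.1):
    """DPO loss for one (preferred, rejected) pair (minimized)."""
    chosen_shift = logp_chosen - ref_logp_chosen        # movement toward the preferred answer
    rejected_shift = logp_rejected - ref_logp_rejected  # movement toward the rejected answer
    z = beta * (chosen_shift - rejected_shift)
    return math.log1p(math.exp(-z))  # equals -log(sigmoid(z))


# PPO: the new model makes a good response about 2.72x more likely,
# but clipping caps the credit at 1.2x the advantage.
print(round(ppo_clipped_objective(logp_new=-1.0, logp_old=-2.0, advantage=1.0), 3))  # 1.2

# DPO: the loss is log(2) when the model still matches the reference,
# and shrinks once it shifts probability toward the preferred answer.
print(round(dpo_loss(-5.0, -5.0, -5.0, -5.0), 3))  # 0.693
print(round(dpo_loss(-3.0, -7.0, -5.0, -5.0), 3))  # 0.513
```

The design difference shows up directly in the signatures: PPO needs an `advantage` derived from reward-model scores, while DPO needs only log-probabilities for a preferred and a rejected answer.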

Further Reading for Advanced Users:

For those interested in delving deeper into the methods behind InstructGPT and RLHF (the main papers this article draws on), the following resources are recommended:

- InstructGPT Paper: "Training language models to follow instructions with human feedback" by Ouyang et al. (2022). This paper outlines the process of aligning language models with user intent through fine-tuning with human feedback.

- PPO and DPO Techniques:

  - "Policy Optimization with RLHF — PPO/DPO/ORPO" by Sulbha Jindal (2024). This article provides an overview of various policy optimization techniques in RLHF, including PPO and DPO.

  - "Unpacking DPO and PPO: Disentangling Best Practices for Learning from Preference Feedback" (2024). This paper discusses the differences and best practices between DPO and PPO in the context of RLHF.

These resources explain the techniques for training LLMs to align with human preferences in much more depth than this overview.