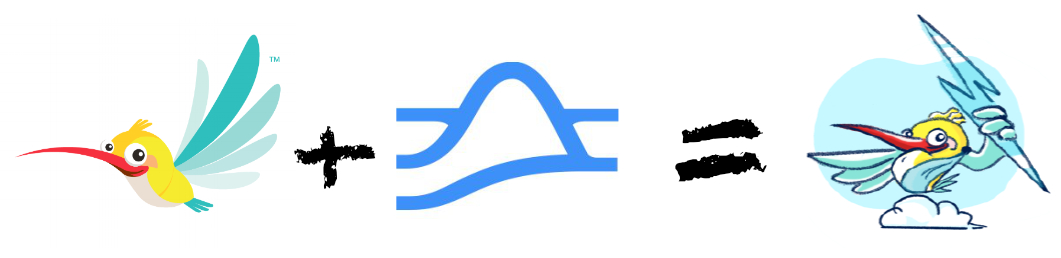

In this post we’ll look at building a highly efficient event processing topology by leveraging Apache Pulsar’s Functions framework and Project Flogo’s event-driven app framework.

Wait, why?

Perhaps you’re scratching your head and asking yourself: why do we need this? What’s the point in using Apache Pulsar Functions vs something else for my event processing logic? Good question. Let’s examine just a few of the benefits of Pulsar’s functions framework before we move on:

- Significant reduction in overall system complexity, specifically the number of components required as your functions share resources with the Pulsar nodes

- Significantly improved performance and reduced latency

- Stateful support via Apache BookKeeper, when required for some streaming use cases

Additionally, as mentioned above, we’ll use Project Flogo to build our event processing logic. If you’re not familiar, Project Flogo is an open source, event-driven app framework designed from the ground up specifically around the event-driven paradigm and for serverless and FaaS compute models. Some of the benefits of the framework include:

- Built using Go, which yields a considerably more resource efficient runtime footprint vs an interpreted language.

- 100s of open source contributions for a number of common APIs, services and systems

- Event-driven paradigm designed for simple, streaming, and contextual event processing use cases

What about Use Cases?

Before we move on let’s spend a few minutes reviewing some of the ideal use cases for Apache Pulsar Functions + Project Flogo.

- High-volume event stream processing: This is a pretty obvious one (and encompasses a lot of higher level use cases). This can include high throughput log processing or on the other end of the spectrum, streams from edge devices to analyze metrics, detect faults and take action(s).

- Fraud detection: The high throughput/low latency capability of Pulsar, combined with Project Flogo’s native machine learning + contextual event processing capabilities, can be leveraged for real-time fraud detection scenarios.

- Low latency content routing: Notification of content changes to end users or other systems.

The above list covers just a few of the more common scenarios. However, as you begin to combine them into interconnected logic via Pulsar topics + Functions, the range of problems you can solve with minimal infrastructure becomes quite impressive.

Getting Started with Apache Pulsar

I’m really not going to get into the details of Apache Pulsar itself, as there are countless articles that cover this in great detail and the Apache Pulsar docs are pretty awesome!

Pulsar Functions

So what exactly are Pulsar Functions? Well, they are compute processes that can be co-located with the cluster nodes. They are designed to consume messages from input topic(s), execute user-defined processing logic (the function itself) and publish results to an output topic. Optionally you can leverage Apache BookKeeper for state persistence and audit to a logging topic.

Put another way, Pulsar Functions provide a platform that is similar to other FaaS platforms, such as that provided by AWS Lambda, Azure Functions, etc. However, the system resources for Pulsar Functions are shared with the Pulsar node(s) and therefore not serverless, just FaaS. You may hear people refer to Pulsar Functions as serverless-like or serverless-inspired for this reason.

The benefits of leveraging such an approach include:

- Reduced latency, as the function is co-located with the node(s)

- Simplified deployment topology. Well, in some ways this is true, in others not so much, especially if you’re comparing 1:1 to Lambda functions.

- Support for state via Apache BookKeeper

I suggest you take a look at docs for Pulsar Functions for a more detailed overview.

Spinning up our Pulsar Cluster

I’m not going to spend time setting up our Pulsar environment in this article. If you’re interested in setting up your own environment take a look at the Pulsar Docs. Again, they’re quite good!

However, if you’d like to follow along with me I’ll be using the Pulsar Docker Image from DockerHub. Go ahead and fetch the image. I'll wait.

Building the Function

You’ll recall that we’ve chosen to use Project Flogo as the app framework for building our function. This leaves us with several possibilities as to how we’d like to handle incoming events.

Project Flogo exposes a trigger & action construct. The trigger, in this case, is simply the event from a Pulsar topic. The action, a bit more interesting, encapsulates the intricacies of different event processing paradigms, for example:

- Stream processing is the notion of consuming events and applying, typically, time-based (or count based) operations against these events, such as aggregation.

- Simple event processing is, well, rather simple in that each event is processed one at a time and a specific action is taken.

Oftentimes stream processing is used as a pre-processor for simple event processing. For example, the average temp from a sensor is calculated over a period of time; after the time-bound window has expired a derived event is then published and consumed by a simple event processing function for some action to be taken (notify someone, shut something down, etc).

So, What are we Building?

Consider the following diagram illustrating the basic concept that we're targeting. Note that we're deploying the function in cluster mode, hence co-locating alongside the broker.

The above yields a few major benefits:

- When messages are published to the specified input topic the function logic is automatically executed

- The result of the event processing logic is automatically published to the specified output topic(s). This is incredibly handy, especially when you're building a pre-processor, such as in the typical case for streaming or simply looking to enrich events, as briefly discussed above.

This paradigm leaves you to focus only on the function logic itself, no need to worry about how to consume messages or how to publish the results to another topic or consumer. We also need not fret over where to run our functions!

Setup our Dev Environment

Before we can start building we’ll need to grab the latest Flogo Web UI from DockerHub.

docker run -it -p 3303:3303 flogo/flogo-docker eula-accept

After the container starts successfully you'll be presented with the following message:

======================================================

___ __ __ __ TM

|__ | / \ / _` / \

| |___ \__/ \__| \__/

[success] open http://localhost:3303 in your browser

======================================================

Designing our Function Logic

Now that we’ve got the Flogo Web UI up and running, we’ll need to install the Pulsar Function Trigger. This trigger is a specialized Flogo trigger called a shim trigger, which basically allows the trigger to override the main entry point, hence bypassing the generic Flogo engine startup.

To install the trigger:

- Select `Install contribution` from the top nav bar.

- When prompted, enter the following in the dialog:

github.com/project-flogo/messaging-contrib/pulsar/function

After the trigger has successfully installed, we can begin designing our function:

- Create a new application by selecting `New` from the landing page

- You will be taken to your new app. Name it anything you'd like; I've gone for the very creative name of `PulsarFunctions`

- Add a new flow action (a flow is used for simple event processing; add a stream resource if you’d like to use streaming-oriented constructs) by clicking `Create an action` or `New Action`, and name it anything you’d like

- Click on your newly created flow action to open it

You’ll be presented with a blank canvas where you can begin designing your flow (function) logic.

Hello Pulsar

To get started, let's first configure our flow input and outputs.

- Click on Input / Output

- Select Input and add a param named msgPayload

- Click Output and add a param named msgResponse

- Click Save when done

Add the following activities (activities are the core building block of a Flogo app, essentially the unit of work for a specific task — read from a database, invoke a REST service, etc) to your flow and configure them as discussed below:

- `Return`: Returns the specified data to the trigger. This allows us to return from the *flow* and map the output params.

- Open the Configuration Window for the newly added activity

- Select `msgResponse` and insert the following into the mapper:

string.concat("Hello ", $flow.msgPayload)

- Select `Save` when done
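If it helps to see the flow as ordinary code, the mapper expression above behaves roughly like the following Go function. This is just an analogy: `helloFlow` is a made-up name, and Flogo evaluates the mapping itself rather than generating code like this.

```go
package main

import "fmt"

// helloFlow mirrors the flow designed above: it takes the msgPayload input
// and produces the msgResponse output, in the same way as
// string.concat("Hello ", $flow.msgPayload).
func helloFlow(msgPayload string) (msgResponse string) {
	return "Hello " + msgPayload
}

func main() {
	fmt.Println(helloFlow("Flogo")) // prints: Hello Flogo
}
```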

Feel free to add any additional activities based on your specific use case (take a look at the Flogo Showcase to browse community contributed activities/triggers).

Add the Trigger

We'll need to add the Pulsar Function trigger to our flow. It acts as the entry point into this flow. (I’ve taken a bit of liberty and used flow and function interchangeably, as they are conceptually the same thing in this context.)

- Click Add Trigger

- Select Apache Pulsar Trigger Function from the list

After the trigger has been added, click the trigger on the left side of the canvas to open the trigger config modal. You'll want to map the input and output data to/from the trigger.

- On the Map to flow inputs tab, select the flow input param msgPayload and click the message from the trigger output

- On the Map from flow output select the trigger output out and click the flow output msgResponse

Note that trigger mappings enable you to build your flow logic independently of the trigger itself. We could easily add another trigger and support a different deployment model without any changes to our app logic.
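Here is a rough sketch in plain Go of why the mappings buy us that independence. The `Flow` and `Mapping` types and the `invoke` helper are hypothetical and are not Flogo APIs; the field names `message`, `out`, `msgPayload` and `msgResponse` come from the steps above, while the second trigger's `body` and `data` fields are invented for the example.

```go
package main

import "fmt"

// Flow is a hypothetical stand-in for a Flogo flow: named inputs in,
// named outputs out.
type Flow func(inputs map[string]string) map[string]string

// hello only knows its own parameter names, msgPayload and msgResponse.
func hello(inputs map[string]string) map[string]string {
	return map[string]string{"msgResponse": "Hello " + inputs["msgPayload"]}
}

// Mapping describes how one trigger's fields line up with the flow's params.
type Mapping struct {
	TriggerOutput string // trigger field copied into FlowInput
	FlowInput     string
	FlowOutput    string // flow result copied into TriggerReply
	TriggerReply  string
}

// invoke applies the mapping around the flow, so the flow never sees the
// trigger's field names.
func invoke(flow Flow, m Mapping, event map[string]string) map[string]string {
	out := flow(map[string]string{m.FlowInput: event[m.TriggerOutput]})
	return map[string]string{m.TriggerReply: out[m.FlowOutput]}
}

func main() {
	// The mapping configured above for the Pulsar Function trigger.
	pulsar := Mapping{
		TriggerOutput: "message", FlowInput: "msgPayload",
		FlowOutput: "msgResponse", TriggerReply: "out",
	}
	// A made-up second trigger with different field names, same flow.
	other := Mapping{
		TriggerOutput: "body", FlowInput: "msgPayload",
		FlowOutput: "msgResponse", TriggerReply: "data",
	}

	fmt.Println(invoke(hello, pulsar, map[string]string{"message": "Flogo"})["out"])
	fmt.Println(invoke(hello, other, map[string]string{"body": "World"})["data"])
}
```

Only the `Mapping` values differ between the two triggers; `hello` is untouched, which is the property the note above describes.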

Compiling your Function

To compile your binary, navigate back to the list of resources within your Flogo app, select the Build button and, in the drop down, select `pulsar-function-trigger`.

Flogo will build the binary and return an ELF binary that we can deploy to our Pulsar cluster.

Deploying our Function

Publishing our function to Pulsar in cluster mode is pretty easy:

/pulsar/bin/pulsar-admin functions create \
--go /root/pulsar-functions_linux_amd64 \
-i persistent://public/default/to-flogo \
-o persistent://public/default/from-flogo \
--classname empty

You should see the message "Created successfully".

Does This Actually Work?

Good question, let’s find out!

- Publish a message to the topic you specified as the input topic when deploying your function. In our case, `to-flogo`. To publish the message you can use the `pulsar-client` CLI:

bin/pulsar-client produce -m "Flogo" \
persistent://public/default/to-flogo

- Consume from the `from-flogo` topic, which, as you'll recall, is the output topic specified when we deployed our function:

bin/pulsar-client consume -s tst-sub \
persistent://public/default/from-flogo

The result from the `from-flogo` topic:

----- got message -----
"Hello Flogo"

Summary

On the Flogo front, I think it would be great to see a few enhancements:

- Support for the session capabilities to enable stateful stream processing

- Support for the `Log` activity to publish to the Pulsar log topic

- Support for publishing Flogo metrics to the Pulsar metrics topic. I think we’ll need to specifically consider what metrics we’d need to publish, but this could include execution time per activity, memory consumption, etc.

I really love the concept of hosting event processing logic alongside the Pulsar node(s), especially considering the reduction in latency and a simplified app architecture.

Interested in Enterprise Support?

I work at TIBCO Software and would be remiss if I didn’t at least mention our support for Apache Pulsar as part of TIBCO Messaging.