Table of Links

- Abstract and Introduction

- Backgrounds

- Type of remote sensing sensor data

- Benchmark remote sensing datasets for evaluating learning models

- Evaluation metrics for few-shot remote sensing

- Recent few-shot learning techniques in remote sensing

- Few-shot based object detection and segmentation in remote sensing

- Discussions

- Numerical experimentation of few-shot classification on UAV-based dataset

- Explainable AI (XAI) in Remote Sensing

- Conclusions and Future Directions

- Acknowledgements, Declarations, and References

10 Explainable AI (XAI) in Remote Sensing

XAI has become an increasingly important area of research and development in remote sensing. As deep learning and other complex black-box models gain popularity for analyzing remote sensing data, there is a growing need for transparent, understandable explanations of how these models arrive at their predictions and decisions. Explainability matters even more in remote sensing because model outputs often directly inform real-world actions with major consequences. For example, models that identify areas at risk of natural disasters, pollution, or disease outbreaks can drive evacuations, remediation efforts, and public health interventions. If the reasoning behind these outputs is unclear, stakeholders are less likely to trust and act upon the model's recommendations.

To address these concerns, XAI techniques in remote sensing aim to shed light inside the black box [134]. Explanations can highlight which input features and patterns drive particular model outputs [135]. Visualizations can illustrate a model's step-by-step logic [136]. Uncertainty estimates can convey when a model is likely to be incorrect or unreliable [137]. Prototypes and case studies have shown promise for increasing trust in, and adoption of, AI models for remote sensing applications ranging from climate monitoring to precision agriculture [138]. As remote sensors continue to produce ever larger and more complex datasets, the role of XAI is likely to keep growing in importance. With thoughtful XAI implementations, developers can build deep learning models that not only make accurate predictions from remote sensing data but also provide the transparency and justifications stakeholders need to use these tools confidently for critical real-world decisions. Recent approaches to XAI in remote sensing are outlined below.

One notable development is the "What I Know" (WIK) method, which verifies the reliability of deep learning models by presenting, for each new inference, similar instances from the training dataset [139]. These examples help show how the model arrived at its prediction.
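The core idea of explaining a prediction by retrieving similar training instances can be sketched as a nearest-neighbour lookup in a model's embedding space. This is a minimal illustration under stated assumptions, not the WIK implementation from [139]: the helper name `explain_by_neighbours`, the use of cosine similarity, and the toy two-dimensional embeddings and class names are all hypothetical.

```python
import numpy as np

def explain_by_neighbours(query_emb, train_embs, train_labels, k=3):
    """Hypothetical helper: return indices, labels and cosine similarities
    of the k training embeddings closest to the query embedding."""
    # L2-normalise so that a dot product equals cosine similarity
    q = query_emb / np.linalg.norm(query_emb)
    t = train_embs / np.linalg.norm(train_embs, axis=1, keepdims=True)
    sims = t @ q
    # Sort by descending similarity and keep the top k as "evidence"
    idx = np.argsort(-sims)[:k]
    return idx, train_labels[idx], sims[idx]

# Toy embeddings standing in for features from a trained backbone (assumed)
train_embs = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
train_labels = np.array(["water", "water", "forest", "forest"])
query = np.array([0.95, 0.05])

idx, labels, sims = explain_by_neighbours(query, train_embs, train_labels, k=2)
```

Showing the retrieved training images (and their labels) next to the prediction lets a user judge whether the model's notion of "similar" matches their own.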

XAI has also been applied to track the spread of infectious diseases like COVID-19 using remote sensing data [140]. By explaining disease prediction models, XAI enables greater trust and transparency. Additionally, XAI techniques have been used for climate adaptation monitoring in smart cities, where satellite imagery helps extract indicators of land use and environmental change [141].

Several XAI methods show promise for remote sensing tasks, including Local Interpretable Model-Agnostic Explanations (LIME), SHapley Additive exPlanations (SHAP), and Gradient-weighted Class Activation Mapping (Grad-CAM) [139]. These methods highlight the input features and image regions that most influenced a model's outputs. Grad-CAM, for instance, produces a visual heatmap indicating the critical areas of an input image for each inference made by a convolutional neural network.
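The Grad-CAM computation itself is short once two arrays are available: the activations $A^k$ of a convolutional layer and the gradients of the class score $y^c$ with respect to them. Each channel is weighted by its spatially averaged gradient, $\alpha_k^c = \frac{1}{HW}\sum_{i,j} \partial y^c / \partial A^k_{ij}$, and the heatmap is $\mathrm{ReLU}(\sum_k \alpha_k^c A^k)$. The NumPy sketch below assumes those arrays have already been extracted (in practice a framework's autograd supplies the gradients); to stay self-contained, the usage example uses a hypothetical toy head (global average pooling followed by a linear layer) whose gradients can be written down analytically.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Grad-CAM heatmap for one image and one target class.

    activations: (K, H, W) feature maps A^k from a convolutional layer
    gradients:   (K, H, W) gradients d y^c / d A^k for the target class c
    """
    # Channel weights: global-average-pool the gradients over space
    alphas = gradients.mean(axis=(1, 2))                      # (K,)
    # Weighted sum of feature maps, then ReLU keeps positive evidence only
    cam = np.maximum(np.tensordot(alphas, activations, axes=1), 0.0)  # (H, W)
    # Normalise to [0, 1] so it can be overlaid on the image as a heatmap
    if cam.max() > 0:
        cam = cam / cam.max()
    return cam

# Toy setup (assumed): K feature maps, GAP + linear classifier head
rng = np.random.default_rng(0)
K, H, W = 4, 8, 8
A = rng.random((K, H, W))
A[0, 2:4, 5:7] += 5.0                 # channel 0 fires strongly on a small patch
w = np.array([[2.0, 0.1, 0.1, 0.1],   # class 0 relies mostly on channel 0
              [0.1, 0.1, 0.1, 2.0]])
cls = 0
# For y_c = sum_k w[c, k] * mean(A_k), the gradient is w[c, k] / (H * W) everywhere
grads = np.broadcast_to(w[cls][:, None, None] / (H * W), (K, H, W))
heatmap = grad_cam(A, grads)
peak = np.unravel_index(heatmap.argmax(), heatmap.shape)
```

With this toy head the heatmap peaks inside the patch where channel 0 is active, which is the region that drove the class-0 score.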

However, some challenges remain in fully integrating XAI into remote sensing frameworks. Practical difficulties include collecting labeled training data, extracting meaningful features, selecting appropriate models, ensuring generalization, and building reproducible and maintainable systems [141]. Inherent uncertainties in modeling complex scientific processes such as climate change also limit the interpretability of model predictions [141]. Furthermore, the explanations produced by current XAI methods do not always match human modes of reasoning and explanation [142].

Despite these challenges, XAI methods hold promise for enhancing few-shot learning approaches in remote sensing. Few-shot learning aims to learn new concepts from very few labeled examples, which is valuable in remote sensing, where labeled data are scarce for many of the diverse land cover types. However, the complexity of few-shot learning models makes their predictions difficult to interpret.

10.1 XAI in Few-Shot Learning for Remote Sensing

Most XAI methods for classification tasks are post hoc: they are applied to an already trained model and cannot be incorporated into the model structure during training. Back-propagation-based [143–146] and perturbation-based methods [147] are commonly used for classification tasks. However, little work has addressed XAI for few-shot learning tasks. Initial work has explored techniques such as attention maps and feature visualization to provide insights into few-shot model predictions for remote sensing tasks [148]. Recently, a new type of XAI called SCOUTER [149] was proposed, in which a self-attention mechanism is applied to the classifier. This method extracts discriminative attention for each category during the training phase, making the classification results explainable. Such techniques provide insight into the decision-making process of few-shot classification models and increase transparency and accountability, which is particularly important in remote sensing given the high cost of acquiring and processing the data.

In another recent work [150], a few-shot image classification approach was proposed that combines visual representations from a backbone model with weights generated by an explainable classifier. A minimum number of distinguishable features is incorporated into the weighted representations, and the visualized weights provide an informative hint about the few-shot learning process. Finally, a discriminator compares the representations of each support-query image pair, and the pairs yielding the highest scores determine the classification result. Applied to three mainstream datasets, this approach achieved good accuracy and satisfactory explainability.
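Perturbation-based explanation methods treat the model as a black box and measure how its output changes when parts of the input are masked. Occlusion sensitivity is one of the simplest such methods; the NumPy sketch below shows that generic technique, not the specific method of [147]. The function name, patch size, zero fill value, and the toy scoring function are illustrative assumptions.

```python
import numpy as np

def occlusion_map(predict, image, target, patch=4, fill=0.0):
    """Occlusion sensitivity: drop in the target-class score when each
    patch of the image is replaced by `fill`.

    predict: callable mapping an (H, W, C) image to a vector of class scores
    """
    H, W = image.shape[:2]
    base = predict(image)[target]
    saliency = np.zeros((H, W))
    for i in range(0, H, patch):
        for j in range(0, W, patch):
            occluded = image.copy()
            occluded[i:i + patch, j:j + patch] = fill
            # Positive values mean the patch supported the target prediction
            saliency[i:i + patch, j:j + patch] = base - predict(occluded)[target]
    return saliency

# Hypothetical scorer: class 0 responds to bright pixels in the top-left quadrant
def toy_predict(img):
    s = img[:8, :8].mean()
    return np.array([s, 1.0 - s])

img = np.zeros((16, 16, 3))
img[:4, :4] = 1.0
sal = occlusion_map(toy_predict, img, target=0)
```

Because it needs one forward pass per patch, occlusion is model-agnostic but slow for large images; back-propagation-based methods such as Grad-CAM trade that generality for a single backward pass.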

10.2 Taxonomy of Explainable Few-Shot Learning Approaches

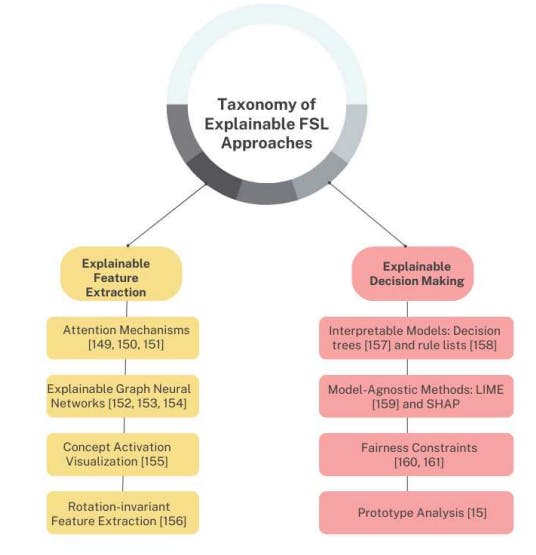

Explainable few-shot learning techniques for remote sensing can be categorized along two main dimensions:

10.2.1 Explainable Feature Extraction

These methods aim to highlight influential features or inputs that drive the model’s predictions.

• Attention Mechanisms: Attention layers accentuate informative features and inputs by assigning context-specific relevance weights [151], and they produce activation maps that visualize influential regions [150, 152]. However, they do not explain the overall reasoning process.

• Explainable Graph Neural Networks: Techniques such as xGNNs [153, 154] can identify important nodes and relationships in graph-structured data. The attentive graph neural network modules proposed in [155] provide visual and textual explanations of which features are most crucial for few-shot learning. This gives feature-level transparency, but the complete decision logic remains unclear.

• Concept Activation Visualization: Approaches such as Grad-CAM produce saliency maps showing the influential regions of input images [156]. However, local feature importance may not fully represent the global decision process.

• Rotation-invariant Feature Extraction: The framework proposed in [157] offers an interpretable approach to extracting rotation-invariant features, so that the representation captures intrinsic visual properties rather than extraneous rotation variations.
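How attention layers assign context-specific relevance weights, and how those same weights double as an activation map, can be illustrated with a single scaled dot-product attention step over spatial feature tokens. This NumPy sketch is generic: it assumes one query vector (for example a class prototype) and a random toy feature map, and it is not the architecture of [150], [151], or [152].

```python
import numpy as np

def attention_map(query, features):
    """Scaled dot-product attention of one query over spatial feature tokens.

    query:    (D,) vector, e.g. a class prototype or learned query (assumed)
    features: (H, W, D) feature map from a backbone
    Returns the attended feature vector and the (H, W) attention weights.
    """
    H, W, D = features.shape
    tokens = features.reshape(H * W, D)
    scores = tokens @ query / np.sqrt(D)
    # Numerically stable softmax over all spatial positions
    weights = np.exp(scores - scores.max())
    weights /= weights.sum()
    attended = weights @ tokens          # (D,) relevance-weighted summary
    return attended, weights.reshape(H, W)

rng = np.random.default_rng(1)
feats = rng.normal(scale=0.1, size=(6, 6, 8))
proto = np.ones(8)
feats[4, 1] += proto                     # one location resembles the prototype
_, attn = attention_map(proto, feats)
```

The weights sum to one and concentrate on the location most similar to the query, which is why they can be upsampled and overlaid on the image; they show where the model looked, not why that evidence led to the final decision.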

10.2.2 Explainable Decision Making

These methods aim to directly elucidate the model’s internal logic and reasoning.

• Interpretable Models: Decision trees [158] and rule lists [159] make the model logic fully transparent in a simplified, human-readable format. However, their accuracy is often lower than that of complex models.

• Model-Agnostic Methods: Techniques such as LIME [160] and SHAP approximate complex models locally with interpretable representations. However, generating these explanations can be slow at prediction time.

• Fairness Constraints: By imposing fairness constraints during training [161] or transforming data into fair representations [162], biases can be mitigated. However, constraints may overly restrict useful patterns.

• Prototype Analysis: Analyzing prototypical examples from each class provides intuition into a model's reasoning [15]. However, it is limited to simpler, instance-based models.
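SHAP builds on Shapley values from cooperative game theory: each feature's attribution is its marginal contribution to the model output, averaged over all coalitions of the other features with weights $|S|!\,(n-|S|-1)!/n!$. For a handful of features (for example a few spectral bands or indices) these values can be computed exactly by enumeration. The sketch is a brute-force illustration of that underlying quantity, not the `shap` library's API; treating "absent" features as fixed baseline values is one common convention and is an assumption here, as is the toy linear model.

```python
import itertools
import math
import numpy as np

def shapley_values(f, x, baseline):
    """Exact Shapley values of f at x; absent features take baseline values.

    Enumerates all coalitions of the other features, so the cost grows as
    2^n and this is only feasible for small n (SHAP approximates it).
    """
    n = len(x)
    phi = np.zeros(n)

    def value(subset):
        # Features in `subset` take their real values, the rest the baseline
        z = baseline.copy()
        idx = np.array(subset, dtype=int)
        z[idx] = x[idx]
        return f(z)

    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = (math.factorial(size) * math.factorial(n - size - 1)
                      / math.factorial(n))
            for s in itertools.combinations(others, size):
                phi[i] += weight * (value(s + (i,)) - value(s))
    return phi

# Hypothetical linear "model" over three band-derived features
def f(z):
    return 2.0 * z[0] - z[1] + 0.5 * z[2]

x = np.array([1.0, 2.0, 3.0])
baseline = np.zeros(3)
phi = shapley_values(f, x, baseline)
```

For a linear model each attribution reduces to $w_i (x_i - b_i)$, and in general the attributions sum to $f(x) - f(\text{baseline})$, which is a useful sanity check on any approximation.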

Overall, choosing suitable explainable few-shot learning techniques requires trading off accuracy, transparency, and efficiency based on the application requirements and constraints. A combination of feature and decision explanation methods is often necessary for complete interpretability. The taxonomy provides an initial guide to navigating this complex landscape of approaches in remote sensing contexts. For clarity, the taxonomy is also illustrated in Figure 8.

Authors:

(1) Gao Yu Lee, School of Electrical and Electronic Engineering, Nanyang Technological University, 50 Nanyang Ave, 639798, Singapore ([email protected]);

(2) Tanmoy Dam, School of Mechanical and Aerospace Engineering, Nanyang Technological University, 65 Nanyang Drive, 637460, Singapore and Department of Computer Science, The University of New Orleans, New Orleans, 2000 Lakeshore Drive, LA 70148, USA ([email protected]);

(3) Md Meftahul Ferdaus, School of Electrical and Electronic Engineering, Nanyang Technological University, 50 Nanyang Ave, 639798, Singapore ([email protected]);

(4) Daniel Puiu Poenar, School of Electrical and Electronic Engineering, Nanyang Technological University, 50 Nanyang Ave, 639798, Singapore ([email protected]);

(5) Vu N. Duong, School of Mechanical and Aerospace Engineering, Nanyang Technological University, 65 Nanyang Drive, 637460, Singapore ([email protected]).
