The Problem

Most people assume an image is either usable or not—that what we see is what we get. But in satellite feeds, bodycam footage, and drone surveillance, what we get is often blur, motion smear, noise, or resolution so low it borders on useless. Faces vanish. License plates become suggestion, not evidence. And that’s if you’re lucky.

Strangely, the tools we rely on to “enhance” those frames are designed to beautify, not verify. Most are built to please the eye, not the courtroom. Worse still are generative models that fill in gaps with fabricated data, which—though impressive—risk creating stories that were never real.

The stakes aren’t just theoretical. I’ve seen images that changed lives—courtroom exhibits, security feeds, aerial captures. I’ve also seen those same images go from murky to miraculous in the hands of the wrong algorithm. The enhancement looked perfect—but it wasn’t real. Somewhere in the pursuit of clarity, we started accepting creation. That’s where the danger begins: when sharpening the image means rewriting the moment.

It doesn’t help when the highest authorities throw up their hands and, without proper review, greenlight new technologies for courtroom use.

Every AI model—whether it enhances images or recognizes faces—operates on similarity, not certainty, often evaluated through statistical metrics like SSIM (Structural Similarity Index Measure). That “match” between input and output isn’t absolute; it reflects probability, not truth. If a system claims 99.9% accuracy on a dataset of 10,000 people, that still means 10 false positives—enough to misidentify an innocent person in a single city. Worse, many law enforcement systems are trained disproportionately on arrest databases or vendor-collected surveillance data, amplifying bias against certain demographics. The most reliable use of AI in this space isn’t to replace human observation, but to confirm it—starting with case-driven evidence, such as surveillance footage and a defined list of suspects drawn from investigative leads. Facial recognition should be used to confirm a lead, not generate one from the void.

The Approach

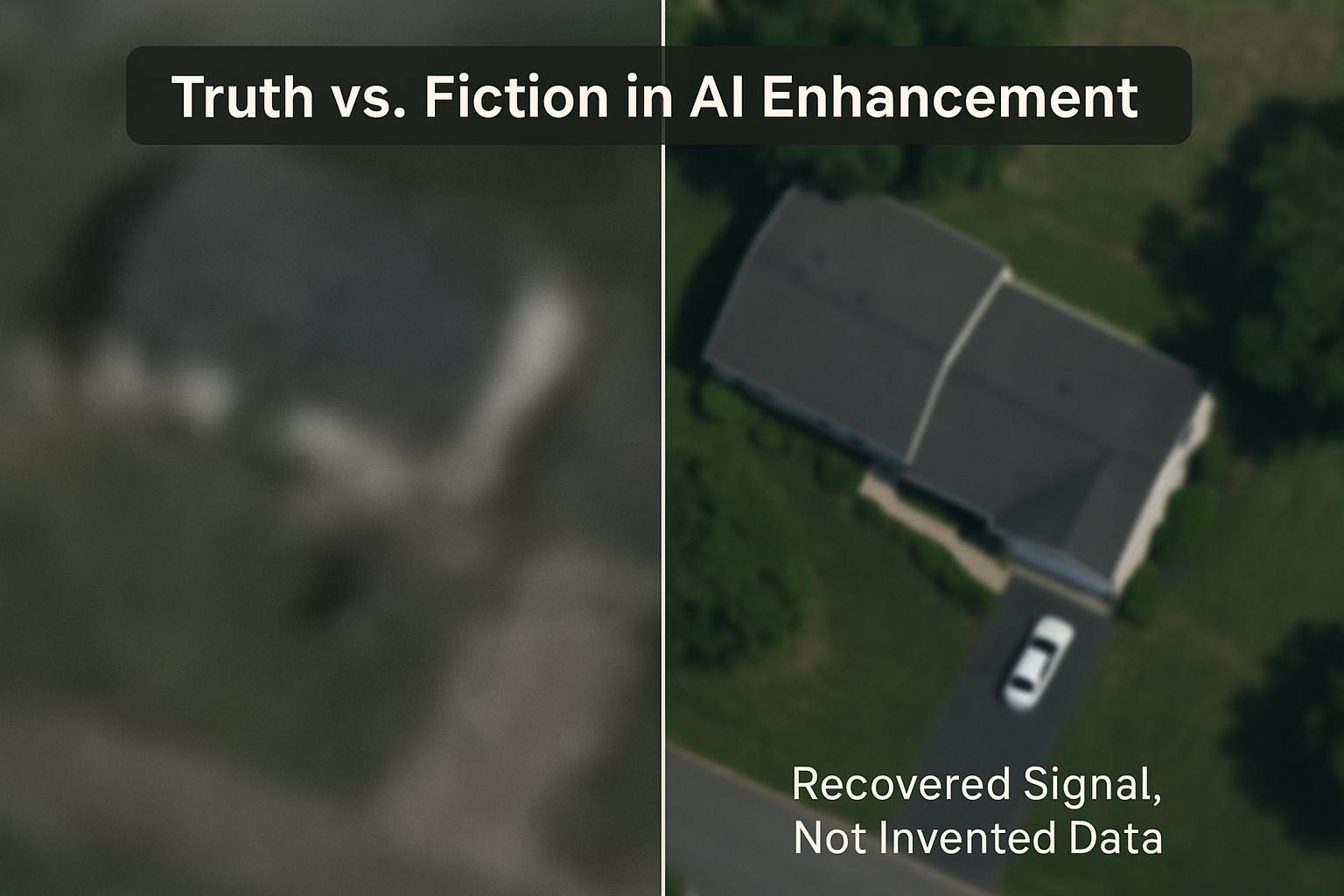

Most AI enhancement systems today fall into one of two camps: those that recover what’s there, and those that imagine what might be, where the AI takes the input to create a wholly new output based on the input, but with differing levels of truth. We usually liken it to, if in modern forensic investigations and photoediting a is b, and b is c, generative AI are using to generate b through z, and any iteration of which might differ dramatically in different ways from each, compared to the original. Hence why with generative ai you never really get the same face back twice, and in court, that distortion of truth makes it inadmissible, and if such in court- means unusable operationally ultimately. The former restores signal; the latter interpolates possibility. The difference isn’t academic—it determines whether an image is clarifying or compromising.

High-fidelity, context-aware enhancement for operational and court end use prioritizes the recovery of details that actually existed in the input data and if the observer were present—deblurring, denoising, light extraction based on existing values applied context context aware to the entire scene, where noise often acts as a sort of cloud over the underlying that once removed reveals the truth—without hallucinating missing content. Rather than stylizing or smoothing, this approach works with real signal patterns, adapting to scene context while maintaining evidentiary integrity.

In contrast, high-integrity enhancement approaches rely on supervised visual reconstruction—using models that are interpretable, deterministic, and designed to work directly with the structure of real-world images. These systems are often built from convolutional layers (which scan the image in patches to detect edges, textures, and shapes), residual or dense connections (which help preserve detail and reduce signal loss during processing), and sometimes lightweight attention modules that adapt based on what’s most relevant in the scene. These are not generative models; they don’t remix noise or interpolate from prompts. The goal isn’t to simulate an image—it’s to recover it. The result is an output that can be audited, explained, and trusted compared to the black box nature of many generative based AI models.

Despite these guardrails, generative image systems—those designed to “fill in” rather than recover—have entered legal practice at unprecedented speed. It took nearly 50 years for CCTV, invented in the 1940s, to become broadly accepted as courtroom evidence by the 1980s. Generative AI, by contrast, exploded into public consciousness in the early 2020s, and by 2024, court systems were already issuing advisory opinions on its admissibility (NCSC, Aug 7, 2024).

The legal system is now accepting outputs it can’t fully interrogate—models that hallucinate structure, can't be reverse-audited, and whose training sets are unknown. Meanwhile, more traditional, fidelity-first models—those designed to clarify, not invent—are quietly powering investigative workflows and court-admitted evidence across criminal, civil, and regulatory cases.

The irony is layered. While generative imagery raises foundational concerns, systems built to clarify rather than fabricate are already proving their reliability in practice. In multiple jurisdictions—including criminal, civil, and intellectual property cases—fidelity-focused enhancements have reached trial stage, helped secure early dismissals, or led to preemptive prosecutorial withdrawal. In some instances, enhancement has revealed inconsistencies between physical evidence and official testimony, or exposed procedural errors—such as when fingerprinting was skipped and multiple defendants were charged as interchangeable, despite enhanced footage showing clearly divergent behavior. One person wrongly swept up in such a charge was released on this basis.

And yet, the legal landscape isn’t moving in one direction. In January 2025, the White House issued an executive order that explicitly revoked several prior AI directives, citing them as barriers to American innovation. The intent is clear: to accelerate development, deployment, and adoption of AI systems, particularly those tied to economic and national security interests. At the same time, bipartisan proposals like the Cruz-Blackburn provision (2024) aim to protect law enforcement use of AI from federal overreach, restricting the ability of states to regulate how these tools are used within their own jurisdictions.

This creates a tension between federal encouragement and state-level caution. On one hand, the revocation of oversight frameworks clears a path for rapid AI integration across sectors—including surveillance, defense, and criminal justice—without waiting for consensus on ethics or transparency. On the other, state courts, attorneys general, and local prosecutors are left navigating legal gray zones where admissibility, accountability, and due process vary widely. The result is a fragmented system: what’s accepted as “evidence” in one state may be inadmissible in another—not because the facts differ, but because the tooling and its scrutiny do.

It also raises a deeper question: what power do existing judicial guidelines or professional ethics standards really have when executive and legislative priorities are increasingly aligned toward speed, not caution? As AI adoption accelerates from the top down, courts are being asked to retrofit trust onto tools they never vetted. The burden of integrity, in practice, is shifting away from law and toward code.

But the deeper irony is this: the public has long assumed this kind of technology already existed. From CSI to Stargate, Blade Runner to Person of Interest, audiences were trained to believe that you could just “zoom in and enhance”—a meme unto itself, with pop culture making it seem like CSI-level image enhancement had already arrived. In reality, it took more than a century of math to get there. From Fourier transforms to Bayesian inference, scientists spent decades figuring out how to reverse blur, isolate noise, and reconstruct signals—not guess at them. The problem wasn’t lack of images or compute. It was that until the early 2000s, the math wasn’t ready. And until recently, few systems treated each pixel as part of a global signal—something that should only be changed when everything else is taken into account. Enhancement isn’t just a filter. It’s the long game of physics, statistics, and spatial geometry finally catching up to our expectations.

For context, filters, as they’ve existed historically, are clumsy. They apply the same transformation to every pixel regardless of context. Generative AI, for all its novelty, isn’t much better—it cuts corners, fills backgrounds with unrealistic smoothness, erases natural texture from clothing, and treats enhancement as an artistic task. But real restoration is a pixel-by-pixel reconstruction of signal—each pixel is a value, not an opinion—and no pixel should be adjusted without taking into account the rest of the frame. The shirt sleeve in one corner obscuring or covering a light source affects the shadow beneath a chin across the frame. Context matters. That’s what real enhancement respects, and what generative systems still get wrong.

The Use Cases: Operational Reality vs. Aesthetic Guesswork

There’s a difference between cleaning an image to look good and cleaning it to be right. When the goal is presentation, aesthetics might be enough. But when the outcome affects operational and legal responsibility, mission decisions, or source verification—the enhancement has to hold up under pressure, without a black box nature, traceable, auditable, predictable. That’s where operational use cases split from artistic ones, for example:

Forensics & Law

Clarify evidence in active investigations and courtroom trials. Recover visual elements that may otherwise be dismissed—faces, license plates, gestures. Distinguish between suspects. Reduce wrongful convictions. Enable fair representation, especially when footage is the only witness available.

Generative AI fails here because it introduces details that never existed—creating faces that resemble the original, but are not it. A becomes B-Z, and no version is forensically admissible. Worse, many generative systems are proprietary black boxes—neither the model’s weights nor the engineer behind it can be brought to testify. If the enhancement can’t be defended, it can’t be evidence.

Aerospace & Space Systems

Enhance the clarity of high-velocity satellite, aerial, and drone imagery affected by motion blur, atmospheric distortion, or compression artifacts. Restoration techniques recover critical visual detail from low-resolution or edge-of-range sensor data, improving situational awareness, flight safety analysis, terrain modeling, and post-mission assessment in challenging operational environments.

Surveillance & Security

Enhance motion-blurred CCTV or bodycam footage. Extract usable detail from degraded or partial frames. Identify individuals or reconstruct events in legally admissible form. Make surveillance footage actionable—not decorative.

Generative models are disqualified in this space because they can’t explain their outputs. A generative model may output a face or object, but there’s no guarantee it was in the original data—it may simply "look correct." In legal review or internal investigations, that gap between real and plausible invites mistrial, misidentification, and legal liability.

OEM Partnerships

Embed enhancement pipelines directly into edge devices—drones, sensors, vehicle systems—so restoration starts at the point of capture. Reduce downstream load. Preserve clarity in bandwidth- or compute-limited environments. Support aerospace, automotive, or tactical workflows where fidelity must persist across the signal chain.

Generative systems introduce risk to embedded environments because their outputs are inherently non-deterministic and cannot be easily validated. You can’t place a black-box model on a drone and expect traceable output at altitude. Edge deployments require predictable, auditable logic—not best guesses disguised as reconstructions.

AI Verification

Detect synthetic or manipulated content—deepfakes, in-painted features, generative overlays—by comparing restored signal against physical consistency and known degradation profiles. Determine whether an image was recovered or manufactured. Preserve source integrity and provenance, especially when public trust or legal weight is at stake.

Generative models undermine this process entirely. If the same class of models used to generate fakes is also used to “enhance” real images, there’s no line left between signal and synthesis. Verification relies on contrast—restoration systems must be rooted in physics and truth, not similarity and style. GenAI blurs that distinction until authenticity is meaningless.

Why Generative AI Fails at the Architectural Level

It’s not just the output that disqualifies most generative AI systems—it’s the math. These models are built to generate new content, not recover lost signal. Architectures like GANs (Generative Adversarial Networks) and diffusion models are designed to fill gaps, synthesize plausible imagery, or remix representations drawn from learned latent spaces. They do not perform deterministic transformations from A to B. They generate B through Z—multiple possible outcomes, each subtly or drastically different from the original.

Even when generative tools include traditional image processing steps (like edge detection, denoising, or segmentation), those elements are often used as guides for synthesis, not for recovery. The core pipeline is still based on non-invertible mappings, latent blending, and sampling from distributions, not signal preservation.

This makes their outputs mathematically unverifiable. You cannot trace a pixel back to its origin. You cannot reproduce the result deterministically. You cannot audit the decision chain. And in any domain where the image matters operationally—where it might be used to detain someone, prosecute a case, assess a threat, or steer a drone—that’s not just a technical limitation. It’s a fatal flaw and why generative models, no matter how sharp their output looks, are inherently mismatched to these roles. They don’t restore signal—they generate substitutes. And in fields where clarity must also be traceable, that makes them not just risky, but disqualifying.

Real enhancement doesn’t invent. It reveals. These use cases only matter when the image being enhanced still tells the truth.

-

History of Signal Processing

A Brief History of Signal Processing and the Path to CSI-Level Image Restoration

The kind of image restoration we now consider routine—sharpening a license plate, clarifying a face, pulling light out of shadow—didn’t begin with digital cameras or AI. It began with math.

The foundations go back to the 19th and early 20th centuries, starting with the Fourier transform, which allowed signals and images to be analyzed in the frequency domain. This made it possible to understand how noise, blur, and distortion actually work—not just how they look. Around the same time, the Gaussian function, first studied in physics and probability, became the mathematical model for blur. It smoothed evenly in all directions (what we now call isotropic blur), and later served as the basis for denoising and low-pass filtering.

Then came Norbert Wiener, whose 1949 work introduced the Wiener filter—the first method that treated restoration as a formal inverse problem. His approach didn’t try to "enhance" an image visually. It tried to solve for the original image, balancing assumptions about the signal with a statistical model of the noise. That idea—that damaged images could be mathematically reversed—redefined the field.

But this theoretical progress couldn’t be fully realized until the arrival of something deceptively simple: the pixel. The modern concept of the pixel (short for “picture element”) emerged in 1957 with the first digital image scanners, and was formalized through the work of Russell Kirsch, who produced the first scanned digital photo at 176×176 resolution. It marked a seismic shift. It collapsed continuous visual data into discrete, quantized spatial units—creating the grid structure that all modern digital image processing depends on.

Why it mattered: before pixels, restoration operated entirely in the continuous domain—using analog signals, differential equations, and assumptions of infinite resolution. With the advent of digital imaging, all of that had to be redefined. Filters became matrix convolutions. Noise became quantized. Algorithms like Wiener filtering could now be applied pixel by pixel, rather than as abstract models. It also made Fourier transforms computationally practical (via FFT), and Gaussian smoothing executable on actual image data. This shift into the discrete domain turned signal theory into software.

In the 1970s, William H. Richardson and Leonard B. Lucy pushed that capability further. They developed what’s now known as the Richardson–Lucy deconvolution algorithm—a Bayesian, iterative method that progressively refines a sharp image from a blurry one. Instead of applying a fixed filter, it updated the estimate at every step, using the observed data itself to guide the process. It assumed Poisson noise, making it ideal for low-light conditions—like surveillance footage or astronomical imaging.

By the late 1970s, William K. Pratt’s textbook Digital Image Processing gave the field a spatial grounding. He introduced the motion blur kernel, modeling how blur forms from straight-line movement—like a shaking camera. His work led to the development of the point spread function (PSF), which models how a single point of light spreads in an image due to motion or optical imperfections. This gave image scientists a way to simulate and reverse blur with precision.

Then, in 2006, came a turning point. Rob Fergus, Barun Singh, Aaron Hertzmann, Sam Roweis, and William Freeman published Removing Camera Shake from a Single Photograph—a now-landmark paper presented at SIGGRAPH and cited over 3,000 times. Their system offered a practical solution for non-uniform, spatially varying motion blur, which until then had been largely unsolved. Instead of assuming one blur pattern across the whole image, they broke the image into regions, estimated a separate motion path for each, and then reconstructed the image using local priors. It was adaptive. It was patch-based. And it reflected how blur actually happens in real-world images.

Taken together, this lineage—Wiener’s mathematical restoration, Richardson-Lucy’s statistical refinement, Pratt’s geometric modeling, and Fergus et al.’s localized, Bayesian blur correction—defined the technical pathway that today’s best enhancement systems build on.

These models didn’t just create tools. They shaped the philosophy behind restoration: that signal can be recovered—not just guessed at—and that clarity is something you reconstruct, not generate. That assumption, once theoretical, now forms the backbone of modern forensic enhancement systems capable of achieving results that once only existed in science fiction.

-

Why It Matters Now

This isn’t a future problem. It’s a current one.

The explosion of generative tools—deepfakes, face swaps, synthetic media—has undermined evidentiary trust at the source. It’s no longer enough to say, “we have the footage.” Courts, investigators, and operators now have to ask: who enhanced it, how, and with what?

The chain of evidence becomes fragile when any visual can be fabricated indistinguishably. A single synthetic frame can contaminate a case, a report, or a mission record. And the tools that generate these distortions often look indistinguishable from those that claim to fix them.

The public’s perception of “real vs fake” is shifting fast. And in that shift, truth is being flattened into aesthetics. Real clarity—the kind that matters in court, in airspace, or on the ground—requires more than visual sharpness. It requires provenance. It requires transparency. It requires tools that protect signal—not decorate it.

Most people still think we solved this problem years ago. We didn’t. We just faked it well enough to stop asking questions. But the time for that is over. Enhancing the truth is now a legal, ethical, and operational necessity—not a cosmetic one.

If we’re going to trust the image again, we have to earn that trust back—one pixel at a time.

What Comes Next, What Can We Expect, What Should We Expect?

The problem isn’t that generative AI exists. The problem is that we’ve let it bleed into domains that demand accountability—without demanding any from the systems that create it.

This won’t be solved by another algorithm. It will be solved by expectation.

The government—particularly legislative bodies and executive departments—must set a standard. Not just one that funds innovation, but one that defines boundaries for admissibility and accountability. This means requiring auditability for any AI system used in law enforcement or litigation, mandating transparency in procurement and deployment, and enforcing disclosure when synthetic enhancement is involved. Innovation should not outrun oversight.

Courts need to stop accepting enhancement tools without validation. If the model can’t be explained, traced, or defended in court, it should be excluded—just like any other unauthenticated evidence.

Prosecutors must ask where enhanced footage came from, what process was applied, and whether that process can be challenged—not just relied on because it “looks clearer.”

Defense attorneys must press harder when enhancements are presented. If it’s not deterministic, if it can’t be reproduced, if the developer won’t testify—it’s not evidence. It’s opinion.

Police and agencies need to be honest about their tooling. If they're relying on black-box enhancement systems that hallucinate structure, they’re not strengthening cases—they're opening them to collapse.

AI companies, even those not working in generative media, should commit to building transparent, explainable, and auditable systems—especially for operational and high-stakes domains. That means documentation, testable logic, and a willingness to defend the model in court—not just “API-as-a-service.” At a minimum, they should embed authorship metadata and clearly label AI-generated content, so provenance and transformation history aren’t erased at export. It’s not about watermarking—it’s about preserving the chain of custody. It’s not about watermarking—it’s about preserving the chain of custody. Truth needs to be traceable, not just visible. Clarity means nothing without credibility—and credibility demands trust.

Engineers have a critical role to play — not just in optimizing performance, but in ensuring systems are traceable and defensible. Mathematical elegance alone doesn’t make a system legally or ethically sound, admissible in court or accountable in practice. Engineers can lead the way by designing systems that prioritize traceability, not just performance. Engineers aren’t just building models—they’re shaping how those models are used. When traceability is part of the design, it strengthens both the system and the society it serves.

The general public must stop assuming that “enhanced” means “true.” We’ve seen too many images pass through filters—on phones, on social media, in newsrooms—and come out looking sharper, but meaning less.

Jurors, who decide over 90% of criminal trials that go to court, should understand that an image is not neutral—and that in some cases, the image itself is the argument. Ask where it came from. Ask what changed. Ask who changed it.

And if you're reading this and you're working with AI—building, applying, regulating, teaching, litigating—you have a role in this too. Because the future of evidence isn’t just about clarity. It’s about credibility, and how much we can trust true ground truth.

This isn’t about fearing the tech. It’s about not giving it power it hasn’t earned.

If we’re going to trust what we see again, we have to build systems that deserve it.