Content Overview

- Setup

- Overview

- What you can do with a ragged tensor

- Constructing a ragged tensor

- What you can store in a ragged tensor

- Example use case

- Ragged and uniform dimensions

- Ragged vs sparse

- TensorFlow APIs

- Keras

- tf.Example

- Datasets

- tf.function

- SavedModels

- Overloaded operators

Setup

!pip install --pre -U tensorflow

import math

import tensorflow as tf


Overview

Your data comes in many shapes; your tensors should too. Ragged tensors are the TensorFlow equivalent of nested variable-length lists. They make it easy to store and process data with non-uniform shapes, including:

- Variable-length features, such as the set of actors in a movie.

- Batches of variable-length sequential inputs, such as sentences or video clips.

- Hierarchical inputs, such as text documents that are subdivided into sections, paragraphs, sentences, and words.

- Individual fields in structured inputs, such as protocol buffers.

What you can do with a ragged tensor

Ragged tensors are supported by more than a hundred TensorFlow operations, including math operations (such as tf.add and tf.reduce_mean), array operations (such as tf.concat and tf.tile), string manipulation ops (such as tf.strings.substr), control flow operations (such as tf.while_loop and tf.map_fn), and many others:

digits = tf.ragged.constant([[3, 1, 4, 1], [], [5, 9, 2], [6], []])

words = tf.ragged.constant([["So", "long"], ["thanks", "for", "all", "the", "fish"]])

print(tf.add(digits, 3))

print(tf.reduce_mean(digits, axis=1))

print(tf.concat([digits, [[5, 3]]], axis=0))

print(tf.tile(digits, [1, 2]))

print(tf.strings.substr(words, 0, 2))

print(tf.map_fn(tf.math.square, digits))


<tf.RaggedTensor [[6, 4, 7, 4], [], [8, 12, 5], [9], []]>

tf.Tensor([2.25 nan 5.33333333 6. nan], shape=(5,), dtype=float64)

<tf.RaggedTensor [[3, 1, 4, 1], [], [5, 9, 2], [6], [], [5, 3]]>

<tf.RaggedTensor [[3, 1, 4, 1, 3, 1, 4, 1], [], [5, 9, 2, 5, 9, 2], [6, 6], []]>

<tf.RaggedTensor [[b'So', b'lo'], [b'th', b'fo', b'al', b'th', b'fi']]>

<tf.RaggedTensor [[9, 1, 16, 1], [], [25, 81, 4], [36], []]>

There are also a number of methods and operations that are specific to ragged tensors, including factory methods, conversion methods, and value-mapping operations. For a list of supported ops, see the tf.ragged package documentation.

Ragged tensors are supported by many TensorFlow APIs, including Keras, Datasets, tf.function, SavedModels, and tf.Example. For more information, check the section on TensorFlow APIs below.

As with normal tensors, you can use Python-style indexing to access specific slices of a ragged tensor. For more information, refer to the section on Indexing below.

print(digits[0]) # First row

tf.Tensor([3 1 4 1], shape=(4,), dtype=int32)

print(digits[:, :2]) # First two values in each row.

<tf.RaggedTensor [[3, 1], [], [5, 9], [6], []]>

print(digits[:, -2:]) # Last two values in each row.

<tf.RaggedTensor [[4, 1], [], [9, 2], [6], []]>

And just like normal tensors, you can use Python arithmetic and comparison operators to perform elementwise operations. For more information, check the section on Overloaded operators below.

print(digits + 3)

<tf.RaggedTensor [[6, 4, 7, 4], [], [8, 12, 5], [9], []]>

print(digits + tf.ragged.constant([[1, 2, 3, 4], [], [5, 6, 7], [8], []]))

<tf.RaggedTensor [[4, 3, 7, 5], [], [10, 15, 9], [14], []]>

If you need to apply an elementwise transformation to the values of a RaggedTensor, you can use tf.ragged.map_flat_values, which takes a function plus one or more arguments, and applies the function to the RaggedTensor's flat values while leaving the row structure unchanged.

times_two_plus_one = lambda x: x * 2 + 1

print(tf.ragged.map_flat_values(times_two_plus_one, digits))

<tf.RaggedTensor [[7, 3, 9, 3], [], [11, 19, 5], [13], []]>
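One way to picture what tf.ragged.map_flat_values does is to apply the function to the flat values yourself and reattach the original row partitioning. The following is a minimal sketch of that idea, assuming a single ragged argument; the names via_helper and by_hand are illustrative only.

```python
import tensorflow as tf

digits = tf.ragged.constant([[3, 1, 4, 1], [], [5, 9, 2], [6], []])

# Let map_flat_values apply the function to the underlying values.
via_helper = tf.ragged.map_flat_values(lambda x: x * 2 + 1, digits)

# Do the same by hand: transform the flat values, keep the row partitions.
by_hand = digits.with_flat_values(digits.flat_values * 2 + 1)

print(via_helper)
print(by_hand)
```

Both results have the same rows as digits; only the values differ.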

Ragged tensors can be converted to nested Python lists and NumPy arrays:

digits.to_list()

[[3, 1, 4, 1], [], [5, 9, 2], [6], []]

digits.numpy()

array([array([3, 1, 4, 1], dtype=int32), array([], dtype=int32),

array([5, 9, 2], dtype=int32), array([6], dtype=int32),

array([], dtype=int32)], dtype=object)
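Conversion to and from a padded dense tensor is a common variant of the above. The sketch below assumes that -1 never occurs as a real value, so it can safely serve as the padding marker; dense and restored are illustrative names.

```python
import tensorflow as tf

digits = tf.ragged.constant([[3, 1, 4, 1], [], [5, 9, 2], [6], []])

# Pad every row to the length of the longest row, using -1 as filler.
dense = digits.to_tensor(default_value=-1)
print(dense)

# Treat trailing -1 values as padding and recover the ragged rows.
restored = tf.RaggedTensor.from_tensor(dense, padding=-1)
print(restored)
```

If the padding value can also appear as trailing real data, the round trip is lossy, so pick a marker that cannot collide with your values.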

Constructing a ragged tensor

The simplest way to construct a ragged tensor is using tf.ragged.constant, which builds the RaggedTensor corresponding to a given nested Python list or NumPy array:

sentences = tf.ragged.constant([

["Let's", "build", "some", "ragged", "tensors", "!"],

["We", "can", "use", "tf.ragged.constant", "."]])

print(sentences)

<tf.RaggedTensor [[b"Let's", b'build', b'some', b'ragged', b'tensors', b'!'],

[b'We', b'can', b'use', b'tf.ragged.constant', b'.']]>

paragraphs = tf.ragged.constant([

[['I', 'have', 'a', 'cat'], ['His', 'name', 'is', 'Mat']],

[['Do', 'you', 'want', 'to', 'come', 'visit'], ["I'm", 'free', 'tomorrow']],

])

print(paragraphs)

<tf.RaggedTensor [[[b'I', b'have', b'a', b'cat'], [b'His', b'name', b'is', b'Mat']],

[[b'Do', b'you', b'want', b'to', b'come', b'visit'],

[b"I'm", b'free', b'tomorrow']]]>

Ragged tensors can also be constructed by pairing flat values tensors with row-partitioning tensors indicating how those values should be divided into rows, using factory classmethods such as tf.RaggedTensor.from_value_rowids, tf.RaggedTensor.from_row_lengths, and tf.RaggedTensor.from_row_splits.

tf.RaggedTensor.from_value_rowids

If you know which row each value belongs to, then you can build a RaggedTensor using a value_rowids row-partitioning tensor:

print(tf.RaggedTensor.from_value_rowids(

values=[3, 1, 4, 1, 5, 9, 2],

value_rowids=[0, 0, 0, 0, 2, 2, 3]))

<tf.RaggedTensor [[3, 1, 4, 1], [], [5, 9], [2]]>

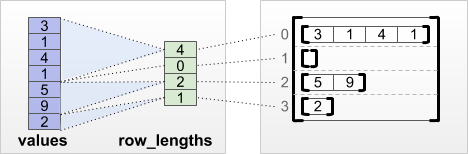

tf.RaggedTensor.from_row_lengths

If you know how long each row is, then you can use a row_lengths row-partitioning tensor:

print(tf.RaggedTensor.from_row_lengths(

values=[3, 1, 4, 1, 5, 9, 2],

row_lengths=[4, 0, 2, 1]))

<tf.RaggedTensor [[3, 1, 4, 1], [], [5, 9], [2]]>

tf.RaggedTensor.from_row_splits

If you know the index where each row starts and ends, then you can use a row_splits row-partitioning tensor:

print(tf.RaggedTensor.from_row_splits(

values=[3, 1, 4, 1, 5, 9, 2],

row_splits=[0, 4, 4, 6, 7]))

<tf.RaggedTensor [[3, 1, 4, 1], [], [5, 9], [2]]>

See the tf.RaggedTensor class documentation for a full list of factory methods.

Note: By default, these factory methods add assertions that the row partition tensor is well-formed and consistent with the number of values. The validate=False parameter can be used to skip these checks if you can guarantee that the inputs are well-formed and consistent.
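The three row-partitioning encodings above describe the same structure, and an existing RaggedTensor can report its partitioning in any of them. A short sketch, reusing the values from the examples above (rebuilt is an illustrative name):

```python
import tensorflow as tf

values = [3, 1, 4, 1, 5, 9, 2]
rt = tf.RaggedTensor.from_row_splits(values, row_splits=[0, 4, 4, 6, 7])

# The same partitioning, expressed in each of the three encodings.
print(rt.row_splits)      # start/end offsets: 0, 4, 4, 6, 7
print(rt.row_lengths())   # per-row lengths:   4, 0, 2, 1
print(rt.value_rowids())  # row of each value: 0, 0, 0, 0, 2, 2, 3

# Rebuild an identical tensor from a different encoding.
rebuilt = tf.RaggedTensor.from_row_lengths(rt.values, rt.row_lengths())
print(rebuilt)
```

Note that value_rowids alone cannot express trailing empty rows; from_value_rowids accepts an nrows argument for that case.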

What you can store in a ragged tensor

As with normal Tensors, the values in a RaggedTensor must all have the same type; and the values must all be at the same nesting depth (the rank of the tensor):

print(tf.ragged.constant([["Hi"], ["How", "are", "you"]])) # ok: type=string, rank=2

<tf.RaggedTensor [[b'Hi'], [b'How', b'are', b'you']]>

print(tf.ragged.constant([[[1, 2], [3]], [[4, 5]]])) # ok: type=int32, rank=3

<tf.RaggedTensor [[[1, 2], [3]], [[4, 5]]]>

try:

    tf.ragged.constant([["one", "two"], [3, 4]]) # bad: multiple types

except ValueError as exception:

    print(exception)

Can't convert Python sequence with mixed types to Tensor.

try:

    tf.ragged.constant(["A", ["B", "C"]]) # bad: multiple nesting depths

except ValueError as exception:

    print(exception)

all scalar values must have the same nesting depth

Example use case

The following example demonstrates how RaggedTensors can be used to construct and combine unigram and bigram embeddings for a batch of variable-length queries, using special markers for the beginning and end of each sentence. For more details on the ops used in this example, check the tf.ragged package documentation.

queries = tf.ragged.constant([['Who', 'is', 'Dan', 'Smith'],

['Pause'],

['Will', 'it', 'rain', 'later', 'today']])

# Create an embedding table.

num_buckets = 1024

embedding_size = 4

embedding_table = tf.Variable(

tf.random.truncated_normal([num_buckets, embedding_size],

stddev=1.0 / math.sqrt(embedding_size)))

# Look up the embedding for each word.

word_buckets = tf.strings.to_hash_bucket_fast(queries, num_buckets)

word_embeddings = tf.nn.embedding_lookup(embedding_table, word_buckets) # ①

# Add markers to the beginning and end of each sentence.

marker = tf.fill([queries.nrows(), 1], '#')

padded = tf.concat([marker, queries, marker], axis=1) # ②

# Build word bigrams and look up embeddings.

bigrams = tf.strings.join([padded[:, :-1], padded[:, 1:]], separator='+') # ③

bigram_buckets = tf.strings.to_hash_bucket_fast(bigrams, num_buckets)

bigram_embeddings = tf.nn.embedding_lookup(embedding_table, bigram_buckets) # ④

# Find the average embedding for each sentence

all_embeddings = tf.concat([word_embeddings, bigram_embeddings], axis=1) # ⑤

avg_embedding = tf.reduce_mean(all_embeddings, axis=1) # ⑥

print(avg_embedding)

tf.Tensor(

[[-0.07189021 0.23444025 -0.04020268 -0.27113184]

[ 0.10560822 0.00976487 -0.17885399 0.5371701 ]

[-0.23479678 -0.15996003 0.07078557 0.24388357]], shape=(3, 4), dtype=float32)
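Because the embedding values are random, the intermediate string steps are easier to inspect than the final averages. The sketch below repeats only the marker and bigram steps on a two-query subset of the batch, so you can see what is being hashed.

```python
import tensorflow as tf

queries = tf.ragged.constant([['Who', 'is', 'Dan', 'Smith'], ['Pause']])

# Add a '#' marker to the beginning and end of each query.
marker = tf.fill([queries.nrows(), 1], '#')
padded = tf.concat([marker, queries, marker], axis=1)

# Join each token with its right-hand neighbour to form bigrams.
bigrams = tf.strings.join([padded[:, :-1], padded[:, 1:]], separator='+')

print(padded.to_list())
print(bigrams.to_list())
```

A query with n words yields n + 1 bigrams, so the single-word query 'Pause' produces '#+Pause' and 'Pause+#'.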

Ragged and uniform dimensions

A ragged dimension is a dimension whose slices may have different lengths. For example, the inner (column) dimension of rt=[[3, 1, 4, 1], [], [5, 9, 2], [6], []] is ragged, since the column slices (rt[0, :], ..., rt[4, :]) have different lengths. Dimensions whose slices all have the same length are called uniform dimensions.

The outermost dimension of a ragged tensor is always uniform, since it consists of a single slice (and, therefore, there is no possibility for differing slice lengths). The remaining dimensions may be either ragged or uniform. For example, you may store the word embeddings for each word in a batch of sentences using a ragged tensor with shape [num_sentences, (num_words), embedding_size], where the parentheses around (num_words) indicate that the dimension is ragged.

Ragged tensors may have multiple ragged dimensions. For example, you could store a batch of structured text documents using a tensor with shape [num_documents, (num_paragraphs), (num_sentences), (num_words)] (where again parentheses are used to indicate ragged dimensions).

As with tf.Tensor, the rank of a ragged tensor is its total number of dimensions (including both ragged and uniform dimensions). A potentially ragged tensor is a value that might be either a tf.Tensor or a tf.RaggedTensor.

When describing the shape of a RaggedTensor, ragged dimensions are conventionally indicated by enclosing them in parentheses. For example, as you saw above, the shape of a 3D RaggedTensor that stores word embeddings for each word in a batch of sentences can be written as [num_sentences, (num_words), embedding_size].

The RaggedTensor.shape attribute returns a tf.TensorShape for a ragged tensor where ragged dimensions have size None:

tf.ragged.constant([["Hi"], ["How", "are", "you"]]).shape

TensorShape([2, None])

The method tf.RaggedTensor.bounding_shape can be used to find a tight bounding shape for a given RaggedTensor:

print(tf.ragged.constant([["Hi"], ["How", "are", "you"]]).bounding_shape())

tf.Tensor([2 3], shape=(2,), dtype=int64)
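The shapes described in this section can be checked on small tensors. The following sketch builds one tensor with a uniform inner dimension and one with two ragged dimensions; the names embeddings and docs and the toy values are illustrative, and ragged_rank reports the number of ragged dimensions.

```python
import tensorflow as tf

# Shape [2, (num_words), 2]: ragged middle dimension, uniform inner dimension.
embeddings = tf.RaggedTensor.from_row_lengths(
    values=[[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]],  # three 2-element vectors
    row_lengths=[1, 2])
print(embeddings.shape)        # TensorShape [2, None, 2]
print(embeddings.ragged_rank)  # 1

# Shape [2, (num_sentences), (num_words)]: two ragged dimensions.
docs = tf.ragged.constant([[["a", "b"], ["c"]], [["d", "e", "f"]]])
print(docs.shape)              # TensorShape [2, None, None]
print(docs.ragged_rank)        # 2
print(docs.bounding_shape())   # values 2, 2, 3
```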

Ragged vs sparse

A ragged tensor should not be thought of as a type of sparse tensor. In particular, a sparse tensor is an efficient encoding for tf.Tensor that models the same data in a compact format, whereas a ragged tensor is an extension to tf.Tensor that models an expanded class of data. This difference is crucial when defining operations:

- Applying an op to a sparse or dense tensor should always give the same result.

- Applying an op to a ragged or sparse tensor may give different results.

As an illustrative example, consider how array operations such as concat, stack, and tile are defined for ragged vs. sparse tensors. Concatenating ragged tensors joins each row to form a single row with the combined length:

ragged_x = tf.ragged.constant([["John"], ["a", "big", "dog"], ["my", "cat"]])

ragged_y = tf.ragged.constant([["fell", "asleep"], ["barked"], ["is", "fuzzy"]])

print(tf.concat([ragged_x, ragged_y], axis=1))

<tf.RaggedTensor [[b'John', b'fell', b'asleep'], [b'a', b'big', b'dog', b'barked'],

[b'my', b'cat', b'is', b'fuzzy']]>

However, concatenating sparse tensors is equivalent to concatenating the corresponding dense tensors, as illustrated by the following example (where Ø indicates missing values):

sparse_x = ragged_x.to_sparse()

sparse_y = ragged_y.to_sparse()

sparse_result = tf.sparse.concat(sp_inputs=[sparse_x, sparse_y], axis=1)

print(tf.sparse.to_dense(sparse_result, ''))

tf.Tensor(

[[b'John' b'' b'' b'fell' b'asleep']

[b'a' b'big' b'dog' b'barked' b'']

[b'my' b'cat' b'' b'is' b'fuzzy']], shape=(3, 5), dtype=string)

For another example of why this distinction is important, consider the definition of “the mean value of each row” for an op such as tf.reduce_mean. For a ragged tensor, the mean value for a row is the sum of the row’s values divided by the row’s width. But for a sparse tensor, the mean value for a row is the sum of the row’s values divided by the sparse tensor’s overall width (which is greater than or equal to the width of the longest row).
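The difference between the two definitions of a row mean can be made concrete. This is a minimal sketch: the sparse row mean is computed by hand as the row sum divided by the overall width, which is an illustration of the definition above rather than a dedicated TensorFlow op.

```python
import tensorflow as tf

rt = tf.ragged.constant([[1.0, 2.0, 3.0, 4.0], [10.0, 20.0]])

# Ragged: each row's sum is divided by that row's own length.
ragged_mean = tf.reduce_mean(rt, axis=1)

# Sparse view of the same data: each row's sum is divided by the
# overall width of the tensor (4 here), as if missing entries were zeros.
sp = rt.to_sparse()
width = tf.cast(sp.dense_shape[1], tf.float32)
sparse_mean = tf.sparse.reduce_sum(sp, axis=1) / width

print(ragged_mean.numpy())  # row means 2.5 and 15.0
print(sparse_mean.numpy())  # row means 2.5 and 7.5
```

The first row fills the full width, so both definitions agree; the second row is shorter, so the sparse definition halves its mean.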

TensorFlow APIs

Keras

tf.keras is TensorFlow's high-level API for building and training deep learning models. It does not support ragged tensors directly, but it does support masked tensors. The easiest way to use a ragged tensor in a Keras model is therefore to convert it to a dense tensor with .to_tensor() and rely on Keras's built-in masking:

# Task: predict whether each sentence is a question or not.

sentences = tf.constant(

['What makes you think she is a witch?',

'She turned me into a newt.',

'A newt?',

'Well, I got better.'])

is_question = tf.constant([True, False, True, False])

# Preprocess the input strings.

hash_buckets = 1000

words = tf.strings.split(sentences, ' ')

hashed_words = tf.strings.to_hash_bucket_fast(words, hash_buckets)

hashed_words.to_list()

[[940, 203, 668, 387, 790, 320, 939, 185],

[315, 515, 791, 181, 939, 787],

[564, 205],

[820, 180, 993, 739]]

hashed_words.to_tensor()

<tf.Tensor: shape=(4, 8), dtype=int64, numpy=

array([[940, 203, 668, 387, 790, 320, 939, 185],

[315, 515, 791, 181, 939, 787, 0, 0],

[564, 205, 0, 0, 0, 0, 0, 0],

[820, 180, 993, 739, 0, 0, 0, 0]])>
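To see which positions of the padded tensor hold real data, you can derive a boolean mask from the row lengths. The sketch below uses a small stand-in tensor rather than hashed_words; it is an illustration of the padding layout, not of how Keras computes its mask internally.

```python
import tensorflow as tf

rt = tf.ragged.constant([[5, 7, 9], [4], [8, 2]])

# Zero-padded dense tensor with shape [3, 3].
padded = rt.to_tensor()

# True wherever a real (non-padding) value sits.
mask = tf.sequence_mask(rt.row_lengths())

print(padded.numpy())
print(mask.numpy())
```

With mask_zero=True, an Embedding layer treats every 0 in its input as padding, so a real token whose ID happens to be 0 would be masked as well; a mask built from row lengths does not have that ambiguity.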


# Build the Keras model.

keras_model = tf.keras.Sequential([

tf.keras.layers.Embedding(hash_buckets, 16, mask_zero=True),

tf.keras.layers.LSTM(32, return_sequences=True, use_bias=False),

tf.keras.layers.GlobalAveragePooling1D(),

tf.keras.layers.Dense(32),

tf.keras.layers.Activation(tf.nn.relu),

tf.keras.layers.Dense(1)

])

keras_model.compile(loss='binary_crossentropy', optimizer='rmsprop')

keras_model.fit(hashed_words.to_tensor(), is_question, epochs=5)

Epoch 1/5


1/1 ━━━━━━━━━━━━━━━━━━━━ 4s 4s/step - loss: 8.0590

Epoch 2/5

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 47ms/step - loss: 8.0590

Epoch 3/5

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 44ms/step - loss: 8.0590

Epoch 4/5

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 44ms/step - loss: 8.0590

Epoch 5/5


1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 43ms/step - loss: 8.0590

<keras.src.callbacks.history.History at 0x7f8444309fd0>

print(keras_model.predict(hashed_words.to_tensor()))

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 407ms/step

[[-0.00517703]

[-0.00227403]

[-0.00706224]

[-0.00354813]]

tf.Example

tf.Example is a standard protobuf encoding for TensorFlow data. Data encoded with tf.Examples often includes variable-length features. For example, the following code defines a batch of four tf.Example messages with different feature lengths:

import google.protobuf.text_format as pbtext

def build_tf_example(s):

    return pbtext.Merge(s, tf.train.Example()).SerializeToString()

example_batch = [

build_tf_example(r'''

features {

feature {key: "colors" value {bytes_list {value: ["red", "blue"]} } }

feature {key: "lengths" value {int64_list {value: [7]} } } }'''),

build_tf_example(r'''

features {

feature {key: "colors" value {bytes_list {value: ["orange"]} } }

feature {key: "lengths" value {int64_list {value: []} } } }'''),

build_tf_example(r'''

features {

feature {key: "colors" value {bytes_list {value: ["black", "yellow"]} } }

feature {key: "lengths" value {int64_list {value: [1, 3]} } } }'''),

build_tf_example(r'''

features {

feature {key: "colors" value {bytes_list {value: ["green"]} } }

feature {key: "lengths" value {int64_list {value: [3, 5, 2]} } } }''')]

You can parse this encoded data using tf.io.parse_example, which takes a tensor of serialized strings and a feature specification dictionary, and returns a dictionary mapping feature names to tensors. To read the variable-length features into ragged tensors, you simply use tf.io.RaggedFeature in the feature specification dictionary:

feature_specification = {

'colors': tf.io.RaggedFeature(tf.string),

'lengths': tf.io.RaggedFeature(tf.int64),

}

feature_tensors = tf.io.parse_example(example_batch, feature_specification)

for name, value in feature_tensors.items():

    print("{}={}".format(name, value))

colors=<tf.RaggedTensor [[b'red', b'blue'], [b'orange'], [b'black', b'yellow'], [b'green']]>

lengths=<tf.RaggedTensor [[7], [], [1, 3], [3, 5, 2]]>

tf.io.RaggedFeature can also be used to read features with multiple ragged dimensions. For details, refer to the API documentation.

Datasets

tf.data is an API that enables you to build complex input pipelines from simple, reusable pieces. Its core data structure is tf.data.Dataset, which represents a sequence of elements, in which each element consists of one or more components.

# Helper function used to print datasets in the examples below.

def print_dictionary_dataset(dataset):

    for i, element in enumerate(dataset):

        print("Element {}:".format(i))

        for (feature_name, feature_value) in element.items():

            print('{:>14} = {}'.format(feature_name, feature_value))

Building Datasets with ragged tensors

Datasets can be built from ragged tensors using the same methods that are used to build them from tf.Tensors or NumPy arrays, such as Dataset.from_tensor_slices:

dataset = tf.data.Dataset.from_tensor_slices(feature_tensors)

print_dictionary_dataset(dataset)

Element 0:

colors = [b'red' b'blue']

lengths = [7]

Element 1:

colors = [b'orange']

lengths = []

Element 2:

colors = [b'black' b'yellow']

lengths = [1 3]

Element 3:

colors = [b'green']

lengths = [3 5 2]

Note: Dataset.from_generator can produce ragged tensors when its output_signature argument includes a tf.RaggedTensorSpec.

Batching and unbatching Datasets with ragged tensors

Datasets with ragged tensors can be batched (which combines n consecutive elements into a single element) using the Dataset.batch method.

batched_dataset = dataset.batch(2)

print_dictionary_dataset(batched_dataset)

Element 0:

colors = <tf.RaggedTensor [[b'red', b'blue'], [b'orange']]>

lengths = <tf.RaggedTensor [[7], []]>

Element 1:

colors = <tf.RaggedTensor [[b'black', b'yellow'], [b'green']]>

lengths = <tf.RaggedTensor [[1, 3], [3, 5, 2]]>

Conversely, a batched dataset can be transformed into a flat dataset using Dataset.unbatch.

unbatched_dataset = batched_dataset.unbatch()

print_dictionary_dataset(unbatched_dataset)

Element 0:

colors = [b'red' b'blue']

lengths = [7]

Element 1:

colors = [b'orange']

lengths = []

Element 2:

colors = [b'black' b'yellow']

lengths = [1 3]

Element 3:

colors = [b'green']

lengths = [3 5 2]

Batching Datasets with variable-length non-ragged tensors

If you have a Dataset that contains non-ragged tensors, and tensor lengths vary across elements, then you can batch those non-ragged tensors into ragged tensors by using the Dataset.ragged_batch method, which replaces the deprecated tf.data.experimental.dense_to_ragged_batch transformation:

non_ragged_dataset = tf.data.Dataset.from_tensor_slices([1, 5, 3, 2, 8])

non_ragged_dataset = non_ragged_dataset.map(tf.range)

batched_non_ragged_dataset = non_ragged_dataset.ragged_batch(2)

for element in batched_non_ragged_dataset:

    print(element)

<tf.RaggedTensor [[0], [0, 1, 2, 3, 4]]>

<tf.RaggedTensor [[0, 1, 2], [0, 1]]>

<tf.RaggedTensor [[0, 1, 2, 3, 4, 5, 6, 7]]>
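For contrast, here is a hedged sketch of how the same variable-length elements look when batched as ragged tensors versus padded dense tensors. It assumes a TensorFlow release that provides Dataset.ragged_batch and allows Dataset.padded_batch to be called without explicit padded_shapes.

```python
import tensorflow as tf

dataset = tf.data.Dataset.from_tensor_slices([1, 5, 3, 2, 8]).map(tf.range)

# ragged_batch keeps each row at its own length.
ragged_batches = [batch.to_list() for batch in dataset.ragged_batch(2)]

# padded_batch pads every row in a batch with zeros up to the longest row.
padded_batches = [batch.numpy().tolist() for batch in dataset.padded_batch(2)]

print(ragged_batches[1])
print(padded_batches[1])
```

The ragged form keeps padding values out of the data, so later reductions do not have to mask them.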

Transforming Datasets with ragged tensors

You can also create or transform ragged tensors in Datasets using Dataset.map:

def transform_lengths(features):
  return {
      'mean_length': tf.math.reduce_mean(features['lengths']),
      'length_ranges': tf.ragged.range(features['lengths'])}

transformed_dataset = dataset.map(transform_lengths)

print_dictionary_dataset(transformed_dataset)

Element 0:

mean_length = 7

length_ranges = <tf.RaggedTensor [[0, 1, 2, 3, 4, 5, 6]]>

Element 1:

mean_length = 0

length_ranges = <tf.RaggedTensor []>

Element 2:

mean_length = 2

length_ranges = <tf.RaggedTensor [[0], [0, 1, 2]]>

Element 3:

mean_length = 3

length_ranges = <tf.RaggedTensor [[0, 1, 2], [0, 1, 2, 3, 4], [0, 1]]>
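Dataset.map can also be applied after batching, in which case the mapped function receives ragged tensors. The following sketch rebuilds only the 'lengths' feature with the values shown above; the helper name total_per_row is hypothetical.

```python
import tensorflow as tf

lengths = tf.ragged.constant([[7], [], [1, 3], [3, 5, 2]])
dataset = tf.data.Dataset.from_tensor_slices({'lengths': lengths})

def total_per_row(batch):
  # batch['lengths'] is a RaggedTensor of shape [batch_size, None];
  # reducing over axis 1 yields one dense value per row (0 for empty rows).
  return tf.reduce_sum(batch['lengths'], axis=1)

totals = [t.numpy().tolist() for t in dataset.batch(2).map(total_per_row)]
print(totals)
```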

tf.function

tf.function is a decorator that precomputes TensorFlow graphs for Python functions, which can substantially improve the performance of your TensorFlow code. Ragged tensors can be used transparently with @tf.function-decorated functions. For example, the following function works with both ragged and non-ragged tensors:

@tf.function
def make_palindrome(x, axis):
  return tf.concat([x, tf.reverse(x, [axis])], axis)

make_palindrome(tf.constant([[1, 2], [3, 4], [5, 6]]), axis=1)

<tf.Tensor: shape=(3, 4), dtype=int32, numpy=
array([[1, 2, 2, 1],
       [3, 4, 4, 3],
       [5, 6, 6, 5]], dtype=int32)>

make_palindrome(tf.ragged.constant([[1, 2], [3], [4, 5, 6]]), axis=1)

<tf.RaggedTensor [[1, 2, 2, 1], [3, 3], [4, 5, 6, 6, 5, 4]]>
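A second small sketch of the same idea, using a reduction instead of a concatenation. The function name row_sums is illustrative only.

```python
import tensorflow as tf

@tf.function
def row_sums(x):
  # tf.reduce_sum accepts both dense and ragged inputs.
  return tf.reduce_sum(x, axis=1)

dense_result = row_sums(tf.constant([[1, 2], [3, 4]])).numpy().tolist()
ragged_result = row_sums(
    tf.ragged.constant([[1, 2], [3], [4, 5, 6]])).numpy().tolist()

print(dense_result)
print(ragged_result)
```

In both cases the result is a dense tensor with one sum per row.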

If you wish to explicitly specify the input_signature for the tf.function, then you can do so using tf.RaggedTensorSpec.

@tf.function(
    input_signature=[tf.RaggedTensorSpec(shape=[None, None], dtype=tf.int32)])
def max_and_min(rt):
  return (tf.math.reduce_max(rt, axis=-1), tf.math.reduce_min(rt, axis=-1))

max_and_min(tf.ragged.constant([[1, 2], [3], [4, 5, 6]]))

(<tf.Tensor: shape=(3,), dtype=int32, numpy=array([2, 3, 6], dtype=int32)>,

<tf.Tensor: shape=(3,), dtype=int32, numpy=array([1, 3, 4], dtype=int32)>)
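To see which inputs a given tf.RaggedTensorSpec would accept, you can compare it against values directly. This is a minimal sketch with illustrative variable names; it relies on the is_compatible_with and from_value methods of the spec.

```python
import tensorflow as tf

spec = tf.RaggedTensorSpec(shape=[None, None], dtype=tf.int32)

int_rt = tf.ragged.constant([[1, 2], [3], [4, 5, 6]])
float_rt = tf.ragged.constant([[1.0, 2.0], [3.0]])

# The spec matches any 2-D int32 ragged tensor, but not a float32 one.
matches_int = spec.is_compatible_with(int_rt)
matches_float = spec.is_compatible_with(float_rt)

# A spec can also be inferred from an existing value.
inferred = tf.RaggedTensorSpec.from_value(int_rt)
print(matches_int, matches_float, inferred)
```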

Concrete functions

Concrete functions encapsulate individual traced graphs that are built by tf.function. Ragged tensors can be used transparently with concrete functions.

@tf.function
def increment(x):
  return x + 1

rt = tf.ragged.constant([[1, 2], [3], [4, 5, 6]])

cf = increment.get_concrete_function(rt)

print(cf(rt))

<tf.RaggedTensor [[2, 3], [4], [5, 6, 7]]>
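A concrete function can also be traced against a tf.RaggedTensorSpec rather than a specific value. The sketch below assumes that a spec with unknown dimensions lets one traced graph serve ragged inputs with different row counts and row lengths.

```python
import tensorflow as tf

@tf.function
def increment(x):
  return x + 1

# Trace once against a spec instead of a specific value.
spec = tf.RaggedTensorSpec(shape=[None, None], dtype=tf.int32)
cf = increment.get_concrete_function(spec)

# Reuse the same concrete function for differently shaped ragged inputs.
first = cf(tf.ragged.constant([[1, 2], [3], [4, 5, 6]])).to_list()
second = cf(tf.ragged.constant([[10], [20, 30]])).to_list()
print(first)
print(second)
```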

SavedModels

A SavedModel is a serialized TensorFlow program, including both weights and computation. It can be built from a Keras model or from a custom model. In either case, ragged tensors can be used transparently with the functions and methods defined by a SavedModel.

Example: saving a Keras model

import tempfile

keras_module_path = tempfile.mkdtemp()

keras_model.save(keras_module_path+"/my_model.keras")

imported_model = tf.keras.models.load_model(keras_module_path+"/my_model.keras")

imported_model(hashed_words.to_tensor())

<tf.Tensor: shape=(4, 1), dtype=float32, numpy=

array([[-0.00517703],

[-0.00227403],

[-0.00706224],

[-0.00354813]], dtype=float32)>

Example: saving a custom model

class CustomModule(tf.Module):
  def __init__(self, variable_value):
    super(CustomModule, self).__init__()
    self.v = tf.Variable(variable_value)

  @tf.function
  def grow(self, x):
    return x * self.v

module = CustomModule(100.0)

# Before saving a custom model, you must ensure that concrete functions are
# built for each input signature that you will need.
module.grow.get_concrete_function(tf.RaggedTensorSpec(shape=[None, None],
                                                      dtype=tf.float32))

custom_module_path = tempfile.mkdtemp()

tf.saved_model.save(module, custom_module_path)

imported_model = tf.saved_model.load(custom_module_path)

imported_model.grow(tf.ragged.constant([[1.0, 4.0, 3.0], [2.0]]))

INFO:tensorflow:Assets written to: /tmpfs/tmp/tmpkmr703wo/assets


<tf.RaggedTensor [[100.0, 400.0, 300.0], [200.0]]>

Note: SavedModel

Overloaded operators

The RaggedTensor class overloads the standard Python arithmetic and comparison operators, making it easy to perform basic elementwise math:

x = tf.ragged.constant([[1, 2], [3], [4, 5, 6]])

y = tf.ragged.constant([[1, 1], [2], [3, 3, 3]])

print(x + y)

<tf.RaggedTensor [[2, 3], [5], [7, 8, 9]]>

Since the overloaded operators perform elementwise computations, the inputs to all binary operations must have the same shape or be broadcastable to the same shape. In the simplest broadcasting case, a single scalar is combined elementwise with each value in a ragged tensor:

x = tf.ragged.constant([[1, 2], [3], [4, 5, 6]])

print(x + 3)

<tf.RaggedTensor [[4, 5], [6], [7, 8, 9]]>
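One step beyond the scalar case, sketched here with an illustrative offsets tensor: a dense tensor of shape [3, 1] supplies one value per row, which is broadcast across that row of the ragged tensor.

```python
import tensorflow as tf

x = tf.ragged.constant([[1, 2], [3], [4, 5, 6]])   # shape [3, None]
offsets = tf.constant([[10], [20], [30]])          # shape [3, 1]

# Each row's single offset is added to every value in that row.
shifted = (x + offsets).to_list()
print(shifted)
```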

For a discussion of more advanced cases, check the section on Broadcasting.

Ragged tensors overload the same set of operators as normal Tensors: the unary operators -, ~, and abs(); and the binary operators +, -, *, /, //, %, **, &, |, ^, ==, <, <=, >, and >=.
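A short sketch exercising a few of these operators on the x and y tensors used above. Each result is a ragged tensor with the same row lengths as the inputs.

```python
import tensorflow as tf

x = tf.ragged.constant([[1, 2], [3], [4, 5, 6]])
y = tf.ragged.constant([[1, 1], [2], [3, 3, 3]])

negated = (-x).to_list()      # unary minus
product = (x * y).to_list()   # elementwise multiplication
remainder = (x % y).to_list() # elementwise modulo
greater = (x > y).to_list()   # elementwise comparison, yields booleans
squared = (x ** 2).to_list()  # power with a broadcast scalar

print(negated, product, remainder, greater, squared)
```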

Originally published on the TensorFlow website, this article appears here under a new headline and is licensed under CC BY 4.0. Code samples shared under the Apache 2.0 License.