7B Models: Cheap, Fast… and Brutally Honest About Your Prompting

If you’ve deployed a 7B model locally (or on a modest GPU), you already know the trade:

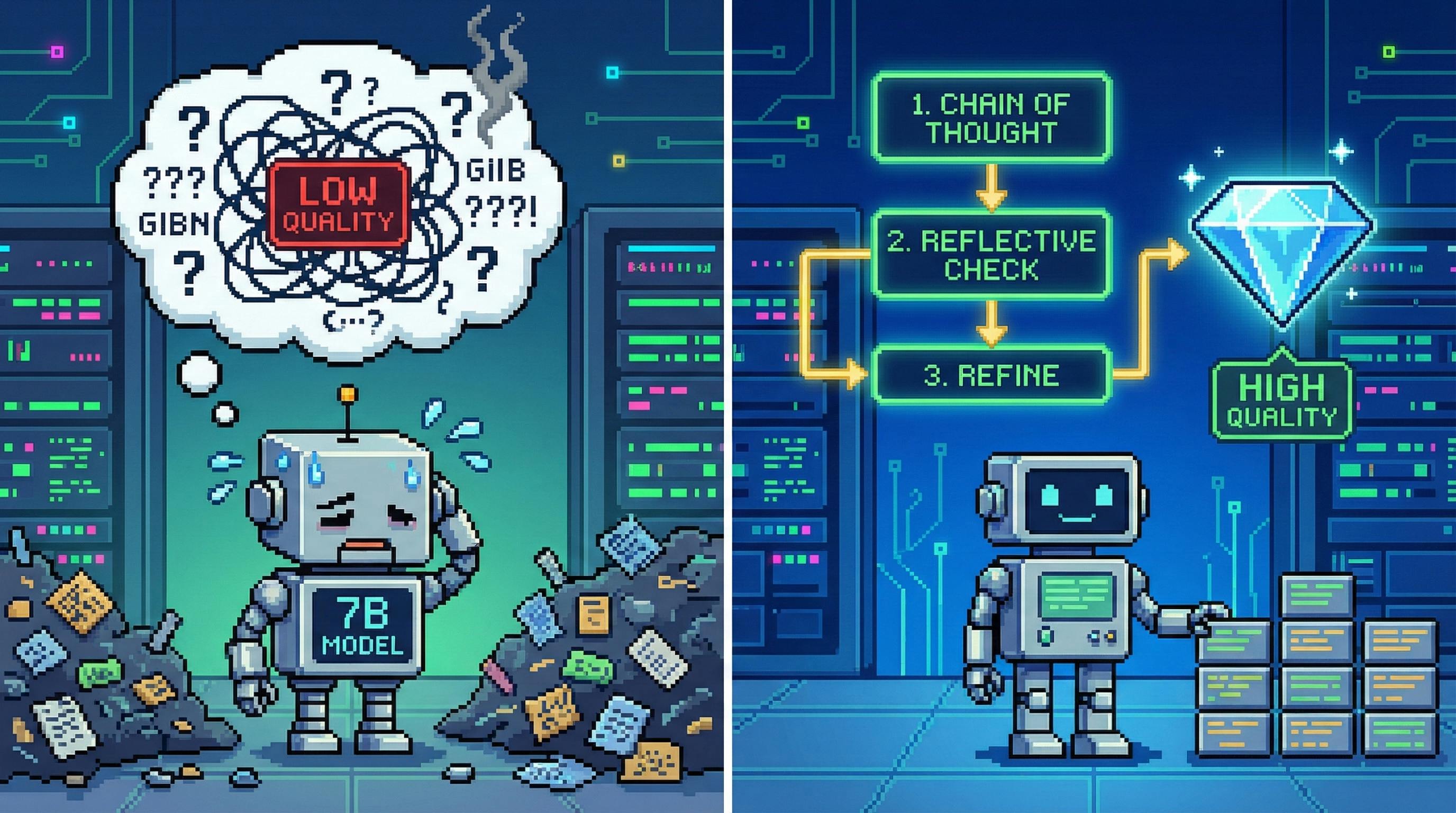

Pros

- low cost

- low latency

- easy to self-host

Cons

- patchy world knowledge

- weaker long-chain reasoning

- worse instruction-following

- unstable formatting (“JSON… but not really”)

The biggest mistake is expecting 7B models to behave like frontier models. They won’t.

But you can get surprisingly high-quality output if you treat prompting like systems design, not “creative writing.”

This is the playbook.

1) The 7B Pain Points (What You’re Fighting)

1.1 Limited knowledge coverage

7B models often miss niche facts and domain jargon. They’ll bluff or generalise.

Prompt implication: provide the missing facts up front.

1.2 Logic breaks on multi-step tasks

They may skip steps, contradict themselves, or lose track halfway through.

Prompt implication: enforce short steps and validate each step.

1.3 Low instruction adherence

Give three requirements, it fulfils one. Give five, it panics.

Prompt implication: one task per prompt, or a tightly gated checklist.

1.4 Format instability

They drift from tables to prose, add commentary, break JSON.

Prompt implication: treat output format as a contract and add a repair loop.

2) The Four High-Leverage Prompt Tactics

2.1 Simplify the instruction and focus the target

Rule: one prompt, one job.

Bad:

“Write a review with features, scenarios, advice, and a conclusion, plus SEO keywords.”

Better:

“Generate 3 key features (bullets). No intro. No conclusion.”

Then chain steps: features → scenarios → buying advice → final assembly.

A reusable “micro-task” skeleton

ROLE: You are a helpful assistant.

TASK: <single concrete output>

CONSTRAINTS:

- length:

- tone:

- must include:

FORMAT:

- output as:

INPUT:

<your data>

7B models love rigid scaffolding.
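If you are chaining micro-tasks, it helps to render the skeleton from code so every step uses the same layout. Here is a minimal sketch; the function name `build_micro_prompt` and the example constraint values are made up for illustration, not part of any library.

```python
def build_micro_prompt(task, constraints, output_format, input_data,
                       role="You are a helpful assistant."):
    """Render the ROLE/TASK/CONSTRAINTS/FORMAT/INPUT skeleton as one string."""
    lines = [f"ROLE: {role}", f"TASK: {task}", "CONSTRAINTS:"]
    # One bullet per constraint keeps each requirement visually separate.
    lines += [f"- {name}: {value}" for name, value in constraints.items()]
    lines += ["FORMAT:", f"- output as: {output_format}", "INPUT:", input_data]
    return "\n".join(lines)


# Hypothetical values, chosen only to show the shape of a single-job prompt.
prompt = build_micro_prompt(
    task="Generate 3 key features (bullets). No intro. No conclusion.",
    constraints={"length": "max 12 words per bullet", "tone": "neutral"},
    output_format="a bulleted list",
    input_data="Portable wireless power bank, 10,000mAh, 180g",
)
print(prompt)
```

Each later step in the chain (scenarios, buying advice, final assembly) can call the same function with a different `task`, so the scaffolding never changes between steps.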

2.2 Inject missing knowledge (don’t make the model guess)

If accuracy matters, give the model the facts it needs, like a tiny knowledge base.

Use “context injection” blocks:

FACTS (use only these):

- Battery cycles: ...

- Fast charging causes: ...

- Definition of ...

Then ask the question.

Hidden benefit: this reduces hallucination, because the model can draw on the facts you supplied instead of guessing from patchy recall.
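A context-injection block is easy to generate from a list of facts. The sketch below is one possible shape; `inject_facts`, the `QUESTION:` label, and the closing fallback sentence are assumptions added for illustration.

```python
def inject_facts(facts, question):
    """Prefix a question with a closed list of facts the model may use."""
    if not facts:
        raise ValueError("provide at least one fact; an empty block invites guessing")
    block = ["FACTS (use only these):"]
    block += [f"- {fact.strip()}" for fact in facts]
    block += [
        "",
        f"QUESTION: {question}",
        "If the facts do not answer the question, say so instead of guessing.",
    ]
    return "\n".join(block)


facts_prompt = inject_facts(
    ["10,000mAh: 2–3 charges", "22.5W fast charging: ~60% in 30 minutes"],
    "How many full phone charges does the power bank give?",
)
print(facts_prompt)
```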

2.3 Few-shot + strict formats (make parsing easy)

7B models learn best by imitation. One good example beats three paragraphs of explanation.

The “format contract” trick

- Tell it: “Output JSON only.”

- Define keys.

- Provide a small example.

- Add failure behaviour: “If missing info, output INSUFFICIENT_DATA.”

This reduces the “creative drift” that kills batch workflows.
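A contract is only useful if something enforces it. Here is a rough sketch of the checking side, using only the standard `json` module; the key names in `REQUIRED_KEYS` are a hypothetical schema, so swap in your own.

```python
import json

REQUIRED_KEYS = {"name", "features"}  # hypothetical schema, for illustration only
SENTINEL = "INSUFFICIENT_DATA"


def check_contract(raw):
    """Return (status, payload); status is 'ok', 'insufficient' or 'invalid'."""
    text = raw.strip()
    if text == SENTINEL:
        # The model used the agreed failure behaviour instead of guessing.
        return "insufficient", None
    try:
        data = json.loads(text)
    except json.JSONDecodeError as err:
        return "invalid", f"not JSON: {err.msg}"
    if not isinstance(data, dict):
        return "invalid", "top level must be an object"
    missing = REQUIRED_KEYS - set(data)
    if missing:
        return "invalid", f"missing keys: {sorted(missing)}"
    return "ok", data
```

Anything that comes back as `invalid` carries a short reason string, which is exactly what a targeted correction prompt needs.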

2.4 Step-by-step + multi-turn repair (stop expecting perfection first try)

Small models benefit massively from:

- step decomposition

- targeted corrections (“you missed field X”)

- re-generation of only the broken section

Think of it as unit tests for text.
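To make the "unit tests for text" idea concrete, here is a small sketch in which each requirement becomes a named predicate. The check names and the sample draft are invented for the example.

```python
def run_checks(output, checks):
    """Apply named predicates to a model output; return the names that failed."""
    return [name for name, passed in checks if not passed(output)]


# Each check is small and independent, like a unit test.
checks = [
    ("single paragraph", lambda t: "\n\n" not in t.strip()),
    ("under 50 words", lambda t: len(t.split()) < 50),
    ("mentions weight", lambda t: "180g" in t),
]

draft = "Slim, light, and ready for the commute."
failures = run_checks(draft, checks)
print(failures)  # ['mentions weight']
```

The list of failed names maps directly onto a targeted correction such as "you missed field X", so only the broken part needs regenerating.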

3) Real Scenarios: Before/After Prompts That Boost Output Quality

3.1 Content creation: product promo copy

Before (too vague)

“Write a fun promo for a portable wireless power bank for young people.”

After (facts + tone + example)

TASK: Write ONE promo paragraph (90–120 words) for a portable wireless power bank.

AUDIENCE: young commuters in the UK.

TONE: lively, casual, not cringe.

MUST INCLUDE (in any order):

- 180g weight and phone-sized body

- 22.5W fast charging: ~60% in 30 minutes

- 10,000mAh: 2–3 charges

AVOID:

- technical jargon, long specs tables

STYLE EXAMPLE (imitate the vibe, not the product):

"This mini speaker is unreal — pocket-sized, loud, and perfect for the commute."

OUTPUT: one paragraph only. No title. No emojis.

Product:

Portable wireless power bank

Why this works on 7B:

- facts are injected

- task is singular

- style anchor reduces tone randomness

- strict output scope prevents rambling

3.2 Coding: pandas data cleaning

Before

“Write Python code to clean user spending data.”

After (step contract + no guessing)

You are a Python developer. Output code only.

Goal: Clean a CSV file and save the result.

Input file: customer_spend.csv

Columns:

- age (numeric)

- consumption_amount (numeric)

Steps (must follow in order):

1) Read the CSV into `df`

2) Fill missing `age` with the mean of age

3) Fill missing `consumption_amount` with 0

4) Cap `consumption_amount` at 10000 (values >10000 become 10000)

5) Save to clean_customer_spend.csv with index=False

6) Print a single success message

Constraints:

- Use pandas only

- Do not invent extra columns

- Include basic comments

7B bonus: by forcing explicit steps, you reduce the chance it “forgets” a requirement.
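Because the steps are explicit, you can audit the generated script before running it. The sketch below uses the standard `ast` module to parse (never execute) the code and look for the calls the steps imply. The choice of required calls is an assumption: a model could legitimately cap values in several ways, so step 4 is deliberately not checked here.

```python
import ast

# Calls implied by steps 1, 2-3, 5 and 6 of the prompt (assumed mapping).
REQUIRED_CALLS = {"read_csv", "fillna", "to_csv", "print"}


def audit_generated_code(source):
    """Return a list of problems in model-written code (empty list = pass)."""
    try:
        tree = ast.parse(source)
    except SyntaxError as err:
        return [f"syntax error on line {err.lineno}"]
    problems, seen_calls = [], set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            for alias in node.names:
                if alias.name.split(".")[0] != "pandas":
                    problems.append(f"unexpected import: {alias.name}")
        elif isinstance(node, ast.ImportFrom):
            if (node.module or "").split(".")[0] != "pandas":
                problems.append(f"unexpected import: {node.module}")
        elif isinstance(node, ast.Call):
            func = node.func
            # Covers both pd.read_csv(...) (Attribute) and print(...) (Name).
            name = func.attr if isinstance(func, ast.Attribute) else getattr(func, "id", None)
            seen_calls.add(name)
    problems += [f"missing call: {c}" for c in sorted(REQUIRED_CALLS - seen_calls)]
    return problems


sample = '''
import pandas as pd
df = pd.read_csv("customer_spend.csv")
df["age"] = df["age"].fillna(df["age"].mean())
df["consumption_amount"] = df["consumption_amount"].fillna(0).clip(upper=10000)
df.to_csv("clean_customer_spend.csv", index=False)
print("Cleaning complete")
'''
print(audit_generated_code(sample))  # []
```

A static pass like this is cheap enough to run on every generation; it catches syntax errors and forgotten steps, but it does not prove the code is correct.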

3.3 Data analysis: report without computing numbers

This is where small models often hallucinate numbers. So don’t let them.

Prompt that forbids fabrication

TASK: Write a short analysis report framework for 2024 monthly sales trends.

DATA SOURCE: 2024_monthly_sales.xlsx with columns:

- Month (Jan..Dec)

- Units sold

- Revenue (£k)

IMPORTANT RULE:

- You MUST NOT invent any numbers.

- Use placeholders like [X month], [X], [Y] where values are unknown.

Report structure (must match):

# 2024 Product Sales Trend Report

## 1. Sales overview

## 2. Peak & trough months

## 3. Overall trend summary

Analysis requirements:

- Define how to find peak/low months

- Give 2–3 plausible reasons for peaks and troughs (seasonality, promo, stock issues)

- Summarise likely overall trend patterns (up, down, volatile, U-shape)

Output: the full report with placeholders only.

Why this works: it turns the model into a “framework generator,” which is a sweet spot for 7B.
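The "no invented numbers" rule can also be checked mechanically. This is a rough sketch that flags any numeric token the prompt did not supply; the allow-list (the year 2024 plus section numbering) and the function name are assumptions for this particular report.

```python
import re

HEADING_NUMBER = re.compile(r"^(#{1,6}\s*)\d+\.")  # e.g. "## 1. Sales overview"
NUMBER = re.compile(r"\d[\d,.]*")


def find_invented_numbers(report, allowed=("2024",)):
    """Return numeric tokens in the report that the prompt did not supply."""
    suspects = []
    for line in report.splitlines():
        line = HEADING_NUMBER.sub(r"\1", line)  # section numbering is fine
        for token in NUMBER.findall(line):
            token = token.rstrip(".,")  # drop trailing sentence punctuation
            if token not in allowed:
                suspects.append(token)
    return suspects


bad_report = """# 2024 Product Sales Trend Report
## 1. Sales overview
Revenue peaked in [X month] at [Y].
## 2. Peak & trough months
Units rose 35% in March to 1,200.
"""
print(find_invented_numbers(bad_report))  # ['35', '1,200']
```

Any non-empty result is a candidate for a repair prompt that names the offending numbers.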

4) Quality Evaluation: How to Measure Improvements (Not Just “Feels Better”)

If you can’t measure it, you’ll end up “prompt-shopping” forever. For 7B models, I recommend an evaluation loop that’s cheap, repeatable, and brutally honest.

4.1 A lightweight scorecard (run 10–20 samples)

Pick a small, fixed test set (10–20 prompts is enough to start) and record:

- Adherence: did it satisfy every MUST requirement? (hit-rate %)

- Factuality vs context: count statements that contradict your provided facts (lower is better)

- Format pass-rate: does the output parse 100% of the time? (JSON/schema/table)

- Variance: do “key decisions” change across runs? (stability %)

- Cost: avg tokens + avg latency (P50/P95)

A simple rubric:

- Green: ≥95% adherence + ≥95% format pass-rate

- Yellow: 80% to just under 95% (acceptable for drafts)

- Red: <80% (you’re still guessing / under-specifying)
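The rubric is simple enough to automate. The sketch below assumes each sample has already been judged and stored as a dict with two booleans (`adherent`, `parsed`); those field names are hypothetical. It also assumes the grade follows the weaker of the two rates, which is one reading of the rubric.

```python
def scorecard(results):
    """Summarise adherence and format pass-rate, then grade the prompt."""
    total = len(results)
    if total == 0:
        raise ValueError("no samples to score")
    adherence = 100.0 * sum(r["adherent"] for r in results) / total
    format_rate = 100.0 * sum(r["parsed"] for r in results) / total
    # Assumption: the weaker metric decides the colour.
    worst = min(adherence, format_rate)
    grade = "Green" if worst >= 95 else "Yellow" if worst >= 80 else "Red"
    return {"adherence_pct": adherence, "format_pct": format_rate, "grade": grade}


# 20 samples: one missed a MUST requirement, all of them parsed.
runs = [{"adherent": i != 0, "parsed": True} for i in range(20)]
print(scorecard(runs))
```

With 20 samples, a single failure is the most a prompt can absorb and still land in Green.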

4.2 Common failure modes (and what to change)

If it invents facts

- inject missing context

- add a “no fabrication” rule

- require citations from the provided context only (not web links)

If it ignores constraints

- move requirements into a short MUST list

- reduce optional wording (“try”, “maybe”, “if possible”)

- cap output length explicitly

If the format drifts

- add a schema + a “format contract”

- include a single example that matches the schema

- set a stop sequence (e.g., stop after the closing brace)
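How you set a stop sequence depends on your inference framework, so the sketch below shows a client-side equivalent instead: keep the first complete JSON object and discard whatever the model added around it. It relies on `json.JSONDecoder.raw_decode` from the standard library; the helper name is made up.

```python
import json


def first_json_object(text):
    """Return the first JSON object embedded in text, or None if there is none."""
    decoder = json.JSONDecoder()
    start = text.find("{")
    while start != -1:
        try:
            # raw_decode parses one value starting at `start` and ignores the rest.
            value, _end = decoder.raw_decode(text, start)
            return value
        except json.JSONDecodeError:
            start = text.find("{", start + 1)
    return None


reply = 'Sure! {"a": 1, "b": {"c": 2}}\nHope this helps {'
print(first_json_object(reply))  # {'a': 1, 'b': {'c': 2}}
```

This recovers output wrapped in commentary, but it should not hide the problem: count how often the rescue was needed, since that is your real format pass-rate.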

4.3 Iteration loop that doesn’t waste time

- Freeze your test prompts

- Change one thing (constraints, example, context, or step plan)

- Re-run the scorecard

- Keep changes that improve adherence/format without increasing cost too much

- Only then generalise to new tasks

5) Iteration Methods That Work on 7B

5.1 Prompt iteration loop

- Run prompt

- Compare output to checklist

- Issue a repair prompt targeting only the broken parts

- Save the “winning” prompt as a template

A reusable repair prompt

You did not follow the contract.

Fix ONLY the following issues:

- Missing: <field>

- Format error: <what broke>

- Constraint violation: <too long / invented numbers / wrong tone>

Return the corrected output only.
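Here is a minimal sketch of the loop wired to that repair prompt. `generate` and `validate` are hypothetical callables you would supply: `generate(prompt, previous_output)` wraps your model call, and `validate(text)` returns a dict of issue lists. The fake model below only stands in for a real 7B call.

```python
def build_repair_prompt(missing=(), format_errors=(), violations=()):
    """Fill the repair template with only the issues that actually occurred."""
    lines = ["You did not follow the contract.", "Fix ONLY the following issues:"]
    lines += [f"- Missing: {m}" for m in missing]
    lines += [f"- Format error: {e}" for e in format_errors]
    lines += [f"- Constraint violation: {v}" for v in violations]
    lines.append("Return the corrected output only.")
    return "\n".join(lines)


def repair_loop(generate, prompt, validate, max_rounds=2):
    """Generate once, then repair up to max_rounds times. Returns (output, passed)."""
    output = generate(prompt, None)
    for _ in range(max_rounds):
        issues = validate(output)
        if not any(issues.values()):
            return output, True
        output = generate(build_repair_prompt(**issues), output)
    return output, not any(validate(output).values())


def fake_model(prompt, previous):
    # Stand-in for a real model: the first draft omits "price", the repair adds it.
    return '{"name": "X"}' if previous is None else '{"name": "X", "price": "[Y]"}'


def validate(text):
    return {"missing": [k for k in ("name", "price") if f'"{k}"' not in text]}


final, passed = repair_loop(fake_model, "Describe product X as JSON.", validate)
print(passed, final)
```

Capping the rounds matters: if two repairs do not fix the output, the prompt itself probably needs changing.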

5.2 Few-shot tuning (but keep it small)

For 7B models, 1–3 examples usually beat 5–10: extra examples eat context and distract the model.

5.3 Format simplification

If strict JSON fails:

- use a Markdown table with fixed columns

- or “Key: Value” lines

Then parse with regex.
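For the “Key: Value” fallback, a sketch of the parsing side could look like this. The pattern and the expected key names are assumptions; tighten them to match your own fields.

```python
import re

# A key starts with a letter, then a colon, then a non-empty value.
LINE = re.compile(r"^\s*([A-Za-z][\w ]*?)\s*:\s*(.+?)\s*$")


def parse_key_values(text, expected_keys):
    """Return (found, missing) for the expected keys; ignore everything else."""
    found = {}
    for line in text.splitlines():
        match = LINE.match(line)
        if not match:
            continue
        key = match.group(1).lower()
        # Keep the first occurrence only and drop keys nobody asked for.
        if key in expected_keys and key not in found:
            found[key] = match.group(2)
    missing = [k for k in expected_keys if k not in found]
    return found, missing


reply = "Here you go:\nName: Power bank\nWeight: 180g\nNote: hope this helps!"
found, missing = parse_key_values(reply, ("name", "weight", "capacity"))
print(found, missing)
```

The `missing` list doubles as input for a repair prompt, so a dropped field costs one short follow-up rather than a full regeneration.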

6) Deployment Notes: Hardware, Quantisation, and Inference Choices

7B models can run on consumer hardware, but choices matter:

6.1 Hardware baseline

- CPU-only: workable, but slower; more RAM helps (16GB+)

- GPU: smoother UX; 3090/4090 class GPUs are comfortable for many 7B setups

6.2 Quantisation

INT8/INT4 quantisation reduces memory use and usually speeds up inference, at the cost of a small accuracy drop. Common approaches:

- GPTQ / AWQ

- 4-bit quant + LoRA adapters for domain tuning
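The memory saving is simple arithmetic: parameters times bytes per parameter. The sketch below covers the weights only; the KV cache, activations, and the small per-group overhead that real quantised formats carry are deliberately left out, so treat the results as a lower bound.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(n_params, precision):
    """Approximate memory for the weights alone, in GB (10**9 bytes)."""
    return n_params * BYTES_PER_PARAM[precision] / 1e9


for precision in BYTES_PER_PARAM:
    print(f"{precision}: ~{weight_memory_gb(7e9, precision):.1f} GB")
# fp16: ~14.0 GB
# int8: ~7.0 GB
# int4: ~3.5 GB
```

That gap explains why a 4-bit 7B model fits on hardware where the FP16 version does not.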

6.3 Inference frameworks

- llama.cpp for fast local CPU/GPU setups

- vLLM for server-style throughput

- Transformers.js for lightweight client-side experiments

Final Take

7B models don’t reward “clever prompts.” They reward clear contracts.

If you:

- keep tasks small,

- inject missing facts,

- enforce formats,

- and use repair loops,

you can make a 7B model deliver output that feels shockingly close to much larger systems—especially for structured work like copy templates, code scaffolding, and report frameworks.

That’s the point of low-resource LLMs: not to replace frontier models, but to own the “cheap, fast, good enough” layer of your stack.