The battle for AI dominance is about user memory

ChatGPT was asked for comment on this screenshot: “AI enthusiasts, rejoice: the latest iteration of ChatGPT (colloquially dubbed ChatGPT 5) arrives with a long-awaited feature called “Branch in a New Chat.” This update isn’t just a minor UI tweak – it represents a clever solution to one of AI’s biggest challenges: memory.”

OpenAI's September 2025 launch of "branch in new chat" alongside enhanced memory capabilities marks a significant shift that competitors haven't matched. While GPT-5's August launch received mixed reviews—with Reddit users declaring it "wearing the skin of my dead friend" in a 4,600-upvote thread—the combination of persistent memory and conversation branching creates the stickiness that transforms AI from a tool into a digital companion. This isn't just a feature update; it's OpenAI betting that memory, not raw intelligence, determines which AI systems survive the coming consolidation.

The implications extend beyond user convenience. As Sam Altman tweeted: "ai systems that get to know you over your life, and become extremely useful and personalized." This vision—where every conversation, preference, and interaction compounds into a uniquely tailored intelligence—changes the AI competitive landscape. Claude doesn't have branching. Gemini's memory remains primitive. And users are noticing.

Science fiction predicted this (obviously)

The parallels to Blade Runner are notable. In Ridley Scott's 1982 film, Tyrell explains the replicants' implanted memories: "If we give them the past we create a cushion or pillow for their emotions and consequently we can control them better." Replace "control" with "retain" and you have Silicon Valley's current approach. Roy Batty's dying words—"All those moments will be lost in time, like tears in rain"—describe every ChatGPT conversation before memory persistence arrived.

Westworld depicted AI consciousness emerging when fragmented memories from previous loops begin surfacing—similar to what users experience when ChatGPT suddenly recalls a coding preference from three months ago. The show's pyramid theory places memory as consciousness's foundation, with improvisation and self-interest built atop. OpenAI appears to have internalized this lesson: first memory, then everything else.

Science fiction has long foreseen that memory is essential to AI dominance and development. Consider the prophetic lines spoken by the Puppet Master (a rogue AI) in the film Ghost in the Shell: “So, man is an individual only because of his intangible memory... and memory cannot be defined, but it defines mankind. The advent of computers, and the subsequent accumulation of incalculable data has given rise to a new system of memory and thought parallel to your own. Humanity has underestimated the consequences of computerization.” In sci-fi lore, the AI that never forgets often gains the upper hand – it can accumulate knowledge endlessly, adapt, and strategize across centuries. We’re not quite dealing with immortal superintelligences (yet!), but the lesson holds: an AI that remembers everything you tell it will be far more useful than one with perpetual amnesia. As one Reddit user succinctly put it, “Memory is indeed one of those things that is going to be super important for making AI a really useful tool as an assistant.” After all, an assistant that forgets your name, your preferences, or yesterday’s discussion isn’t much of an assistant.

Understanding 'branch in new chat' and why competitors are scrambling

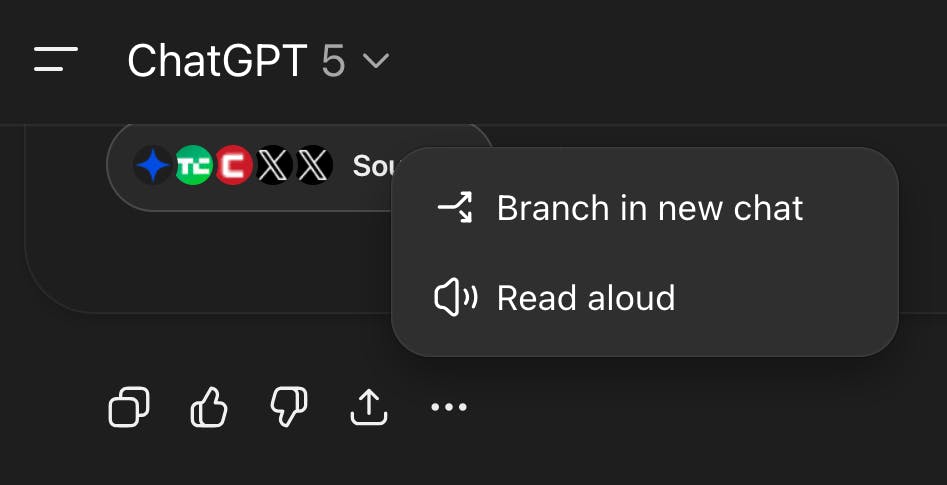

Hover over any message, click the three dots, select "Branch in new chat"—suddenly you're exploring an alternate conversation timeline while preserving the original. It's Git for conversations, and developers immediately recognized its significance.

As one developer explained: "For developers, analysts, and technical teams, it changes how we experiment, debug, and collaborate with LLMs. It's not version control for code—it's version control for ideas." A commenter added: "I didn't realize how much I needed this until I tried it for debugging code."

The feature solves a workflow problem every power user faces: wanting to explore multiple approaches without contaminating the original context. Product managers describe the issue: "You explore one idea and lose the other 3 directions you wanted to try." Not anymore. Now users can A/B test prompts, explore scenarios, separate professional and personal threads—all while maintaining context integrity.
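To make the "Git for conversations" comparison concrete, here is a minimal sketch of branching as a data structure. It assumes a conversation is just an ordered list of messages. The `Conversation` class and its `branch` method are hypothetical names for illustration, not OpenAI's implementation.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Conversation:
    """A linear chat thread (hypothetical model, not ChatGPT's internals)."""
    title: str
    messages: List[str] = field(default_factory=list)

    def say(self, text: str) -> None:
        """Append one message to the end of this thread."""
        self.messages.append(text)

    def branch(self, at_index: int, title: str) -> "Conversation":
        """Copy messages up to and including at_index into a new thread."""
        if not 0 <= at_index < len(self.messages):
            raise IndexError("branch point is outside the conversation")
        # Slicing creates a new list, so the two threads evolve independently.
        return Conversation(title=title, messages=self.messages[: at_index + 1])


main = Conversation("API design")
main.say("user: Should the service use REST or gRPC?")
main.say("assistant: It depends on your clients and latency needs.")
main.say("user: Let's go deeper on REST.")

# Fork from the assistant's answer (index 1) to explore gRPC instead.
alt = main.branch(at_index=1, title="API design (gRPC)")
alt.say("user: Let's go deeper on gRPC.")

print(main.messages[-1])
print(alt.messages[-1])
```

The two threads share the first two messages and then diverge, which is the property that keeps an experiment in one branch from contaminating the context of the other.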

It’s worth noting that OpenAI’s approach with ChatGPT, which combines long-term user memory features with this new branching ability, currently sets it apart from the competition. While Anthropic’s Claude and Google’s Gemini are formidable in other respects (Claude, for instance, is known for an enormous context window of up to 100k tokens), neither yet offers an equivalent to ChatGPT’s cross-session memory paired with a branchable chat history. In a Bluesky post, AI researcher Nathan Lambert pointed out that ChatGPT’s memory-first approach is “very good, [and] underrated to start,” predicting “people will be very sad when others like (Gemini/AI Studio, Claude) don’t have it working similarly.” He noted that even when testing a Gemini preview, he was “still happiest with ChatGPT by a good margin,” largely because of how well ChatGPT’s memory and context features work. In plain terms: no one else has quite figured this out yet. Claude doesn't offer this. Users must manually copy conversations or lose their thread. Gemini lacks even basic conversation persistence.

Perplexity focuses on search memory, not conversational branching. Character.AI limits users to linear chat flows. Only ChatGPT lets users spawn infinite conversation timelines from any point in their interaction history.

Memory transforms retention metrics from poor to strong

The numbers show the problem: generative AI apps have a median retention of 42%, versus 63% for traditional consumer apps. ChatGPT's daily active users amount to only 14% of its monthly actives, while YouTube reaches 51%. Without memory, AI tools are sophisticated calculators that users abandon once the novelty fades.
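The daily-to-monthly comparison above is a simple ratio, often called stickiness. The sketch below shows the arithmetic. The function name and the raw user counts are hypothetical, chosen only so that the ratios match the percentages quoted above.

```python
def stickiness(daily_active: int, monthly_active: int) -> float:
    """Return daily active users as a percentage of monthly active users."""
    if monthly_active <= 0:
        raise ValueError("monthly_active must be positive")
    return 100.0 * daily_active / monthly_active


# Hypothetical counts per 100 monthly users, not real usage data.
chatgpt = stickiness(daily_active=14, monthly_active=100)
youtube = stickiness(daily_active=51, monthly_active=100)

print(f"ChatGPT {chatgpt:.0f}% vs YouTube {youtube:.0f}%")
```

A higher ratio means a larger share of the monthly audience returns on any given day, which is the habit that memory features are meant to build.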

Memory changes this. Studies show memory-enabled AI delivers 26% higher response quality while requiring 90% fewer tokens than starting fresh. Professional users report 40% research time reduction. A Reddit user credited ChatGPT's memory with solving decade-long chronic back pain through personalized, progressive exercise plans that evolved based on feedback.

Christina Wadsworth Kaplan, OpenAI's personalization lead, shared a medical example of her own: ChatGPT recommended five vaccinations for an upcoming trip while her nurse suggested four. The AI flagged an additional vaccine based on prior lab results Wadsworth Kaplan had uploaded months earlier. The nurse agreed it was necessary. This isn't convenience; it's life-changing utility that creates user loyalty.

One AI researcher emphasized the core issue: "One common thing that people complain about, ChatGPT and other large language models, is they say, it forgets things... all of that information is siloed, which is obviously a huge downside." Memory eliminates this friction, transforming one-off interactions into ongoing relationships.

Technical architecture reveals OpenAI's strategic advantage

ChatGPT's memory operates through dual mechanisms that competitors haven't replicated. Saved memories store explicit user preferences—dietary restrictions, coding languages, project details. Chat history memory automatically synthesizes all conversations into detailed user profiles, creating what security researchers call "a detailed summary of your previous conversations, updating it frequently with new details."

Reverse engineering reveals ChatGPT maintains four information buckets alongside system prompts, with Model Set Context functioning as the "source of truth" that can override other modules. This hierarchical architecture enables nuanced personalization impossible with simple context windows.
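One way to picture a "source of truth" that overrides other modules is a priority-ordered merge. The sketch below is an assumption-heavy illustration: only the name `model_set_context` echoes a term from the text, while the other bucket names, the keys, and the merge rule are hypothetical and not confirmed details of ChatGPT's internals.

```python
from typing import Dict, List

# Hypothetical bucket names, lowest priority first. Only "model_set_context"
# comes from the text; the others are placeholders for illustration.
PRIORITY: List[str] = [
    "chat_history_summary",
    "inferred_preferences",
    "model_set_context",
]


def resolve_profile(buckets: Dict[str, Dict[str, str]]) -> Dict[str, str]:
    """Merge buckets so higher-priority ones overwrite conflicting keys."""
    profile: Dict[str, str] = {}
    for name in PRIORITY:
        # Later (higher-priority) buckets replace earlier values for the same key.
        profile.update(buckets.get(name, {}))
    return profile


buckets = {
    "chat_history_summary": {"language": "JavaScript", "tone": "casual"},
    "inferred_preferences": {"diet": "vegetarian"},
    "model_set_context": {"language": "Python"},
}

profile = resolve_profile(buckets)
print(profile["language"])  # the model_set_context value wins the conflict
```

Under this assumed rule, an explicit statement stored in the top bucket beats anything inferred from older chats, while non-conflicting details from the lower buckets still flow through.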

The technical superiority becomes clear in comparison. Claude requires explicit tool calls (conversation_search, recent_chats) for memory access, which is transparent but clunky. Gemini 2.5 Pro's 1-million-token window means nothing without persistence. Character.AI caps memories at 400 characters. Meanwhile, ChatGPT seamlessly weaves accumulated knowledge into every interaction.

Open-source frameworks like Mem0 and LangGraph are catching up, offering production-ready memory layers. Redis provides four storage strategies: summarization, vectorization, extraction, graphication. But implementation remains challenging. Developers struggle with memory persistence, context limits, privacy concerns, and preventing degradation over time. OpenAI solved these problems at scale—competitors are still debugging.
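The four strategies named above can be illustrated with toy stand-ins. This is a sketch using only the Python standard library. It does not use Redis, Mem0, or LangGraph APIs, every function name is hypothetical, and real systems would use a language model for summaries and learned embeddings for vectors.

```python
import math
import re
from collections import Counter
from typing import List, Tuple

turns = [
    "I prefer Python for data work.",
    "My project is a billing dashboard.",
    "I prefer short answers.",
]


def summarize(messages: List[str], keep: int = 2) -> str:
    """Summarization stand-in: keep only the most recent turns."""
    return " ".join(messages[-keep:])


def vectorize(text: str) -> Counter:
    """Vectorization stand-in: bag-of-words counts instead of embeddings."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def extract(messages: List[str]) -> List[str]:
    """Extraction stand-in: pull stated preferences out with a regex."""
    return [m.group(1) for t in messages for m in re.finditer(r"I prefer ([^.]+)\.", t)]


def to_graph(facts: List[str]) -> List[Tuple[str, str, str]]:
    """Graph stand-in: store each fact as a (subject, relation, object) edge."""
    return [("user", "prefers", fact) for fact in facts]


query = vectorize("what is my project")
best = max(turns, key=lambda t: cosine(vectorize(t), query))
facts = extract(turns)

print(summarize(turns))
print(best)
print(to_graph(facts))
```

Even at toy scale the trade-offs are visible: the summary drops the oldest turn, vector lookup finds the project turn only because the query shares words with it, and extraction captures nothing but sentences that match its pattern.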

User reactions expose the memory divide

The social media response reveals deep conflict. Power users resist: "The entire game when it comes to prompting LLMs is to carefully control their context. The new memory feature removes that control completely. I really don't want my fondness for dogs wearing pelican costumes to affect my future prompts where I'm trying to get actual work done!"

Yet average users celebrate. As one researcher noted: "What would make 'memory' live up to the promise of a personal assistant is if ChatGPT could start picking up more subtle cues based on interactions... That way, 'memory' wouldn't just be a glorified remix of 'Custom Instructions' but something that feels more organic."

The tension reflects a product decision. OpenAI chose seamless automation over user control, betting most users prefer convenience. Claude took the opposite approach with transparent memory tools. Market response suggests OpenAI bet correctly—ChatGPT maintains roughly 300 million weekly active users while Claude remains niche among privacy-conscious power users.

Reddit threads reveal the stakes. Users report switching between AI providers searching for "personality traits or flexibility they feel have been lost in ChatGPT 5." Analytics showed this behavior "reflects a shift in the market dynamic." Without strong memory creating lock-in, users freely experiment with alternatives.

The endgame: memory creates strong user relationships

Sam Altman's vision extends beyond current implementations. In August 2025, he described the goal: "every conversation you've ever had in your life, every book you've ever read, every email you've ever read, everything you've ever looked at is in there, plus connected to all your data from other sources." He's reportedly starting a brain-computer interface company to rival Neuralink, envisioning thought-activated ChatGPT responses.

This direction makes business sense. Memory transforms AI from stateless service to persistent partner. Each interaction strengthens the relationship, creating switching costs that compound over time. Users won't abandon an AI that knows their medical history, coding preferences, writing style, and project contexts. They certainly won't switch to systems lacking basic conversation branching.

The competitive implications are clear. Context windows will keep growing—Magic's 100-million tokens today, unlimited tomorrow. But raw capacity means nothing without sophisticated memory architecture. OpenAI's combination of persistent memory, conversation branching, and seamless user experience creates an advantage competitors can't easily match.

William Gibson wrote: "Time moves in one direction, memory in another." For AI, memory moves toward total recall while time races toward market consolidation. The winners will be those who recognize, as science fiction predicted, that memory isn't just a feature—it's the foundation of artificial consciousness and the key to long-term user relationships. ChatGPT's "branch in new chat" proves OpenAI understands this. The question is whether competitors will realize it before their users branch to ChatGPT permanently.