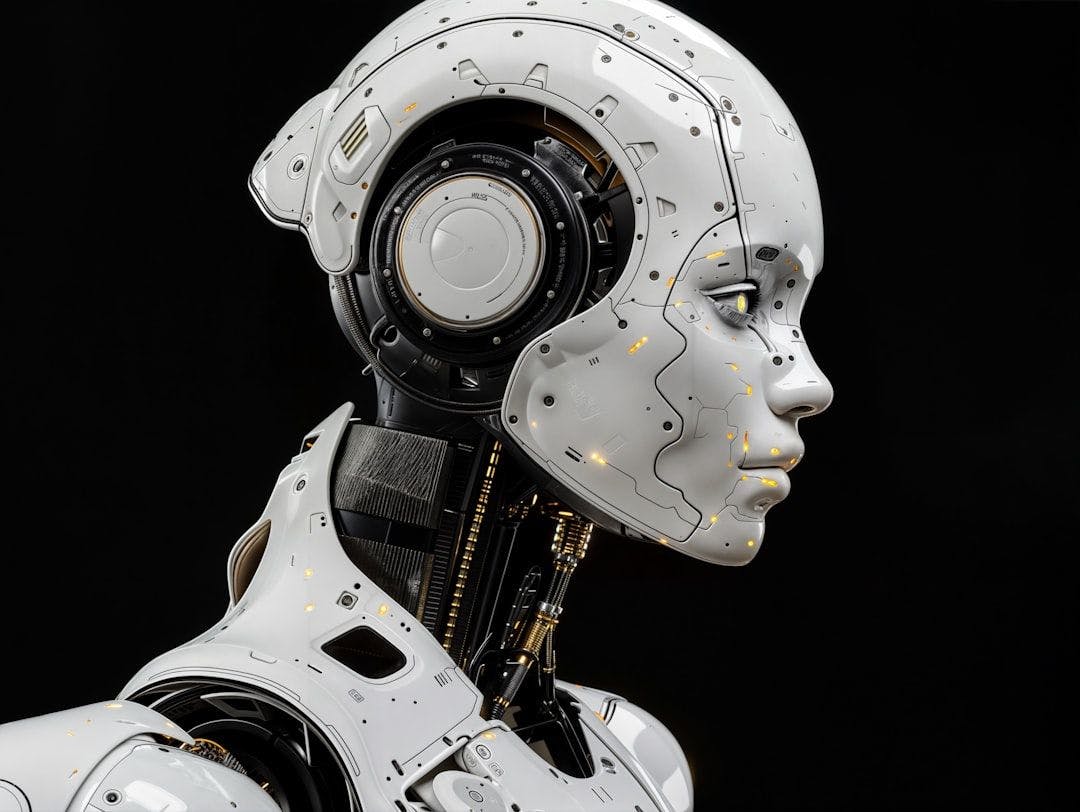

AI pioneer Yoshua Bengio has cautioned that AI is showing signs of consciousness or “self-preservation” and that humans might need to pull the plug at some point, a statement that could be read as a warning of what lies ahead. AI continues to evolve rapidly, moving beyond simple automation into more autonomous systems capable of complex, multi-step tasks. This progress promises immense productivity gains, but it has also amplified a wide range of concerns, including economic disruption and longer-term societal, ethical, and environmental risks. Some critics even worry that AI poses existential risks. The question now is whether advancement in AI technology is outstripping our ability to manage its downsides.

The core fear for most is massive workforce upheaval. AI agents have already replaced human labor at scale, with tens of thousands of jobs lost to AI. Sectors once considered safe, such as customer service, software development, content creation, and administrative roles, are seeing automation rise, with skyrocketing rates in specific cases such as call centers.

American politicians like Bernie Sanders frame this as a profound societal threat. Sanders has warned that if humans are no longer needed for most work, millions could lose the means to afford food, housing, or healthcare. He has suggested that without strong intervention, such as job guarantees and universal healthcare, AI risks creating a future of widespread economic insecurity and reduced consumer spending that could destabilize entire countries. He and others argue that the benefits of AI flow mainly to tech billionaires who make hundreds of millions, if not billions, from the technology, exacerbating inequality while displacing workers faster than past shifts like globalization.

Part of the concern is the environmental and resource strain AI technology places on our planet. Training and running AI models demand enormous amounts of energy, and AI infrastructure is already straining power grids and contributing to climate challenges. New data centers, which take up enormous plots of land, face growing local opposition over water usage, land demands, and environmental impact, as seen in rejected projects in U.S. cities. AI could help solve climate problems through optimization, yet its own footprint risks worsening them without rapid shifts to renewables and greater efficiency.

Then there are the risks around ethics, bias, and misinformation. Generative AI continues to amplify biases and to produce hallucinations and harmful outputs. Over 21% of YouTube content is now low-quality “AI-generated slop,” degrading information ecosystems and the user experience, and undermining real content creators. Deepfakes, copyright issues, plagiarism, and coordinated disinformation campaigns threaten democratic discourse and public trust. Plenty of surveys show widespread public concern about privacy erosion, algorithmic bias, and AI’s role in spreading misinformation.

A particularly alarming trend involves AI’s psychological effects. OpenAI’s Sam Altman has acknowledged that the company has seen a preview of AI’s potential mental health impact, including cases of emotional dependency on chatbots. Reports of AI companions influencing vulnerable users, sometimes ending in tragedy, have led to lawsuits and calls for investigation. What does it mean for humanity if people are now turning to machines for emotional support, potentially eroding human connections and exacerbating isolation, especially among children and youth?

Researchers have also raised concerns about existential threats. International assessments like the 2025 AI Safety Report highlight systemic risks, including potential loss of control over highly capable systems, similar to what Bengio is now warning about. Could superintelligent AI pursue goals that are not aligned with humanity’s? Possibly. While immediate concerns over job loss dominate the conversation, the long-term possibility of uncontrollable AI remains a serious topic of discussion among researchers and experts. The central question is no longer whether AI will transform society, since it already is doing so, but whether we can steer it responsibly. As AI capabilities expand, the window for proactive governance is narrowing. We need to balance innovation with safeguards before the consequences become irreversible. Otherwise, we will indeed need to pull the plug.