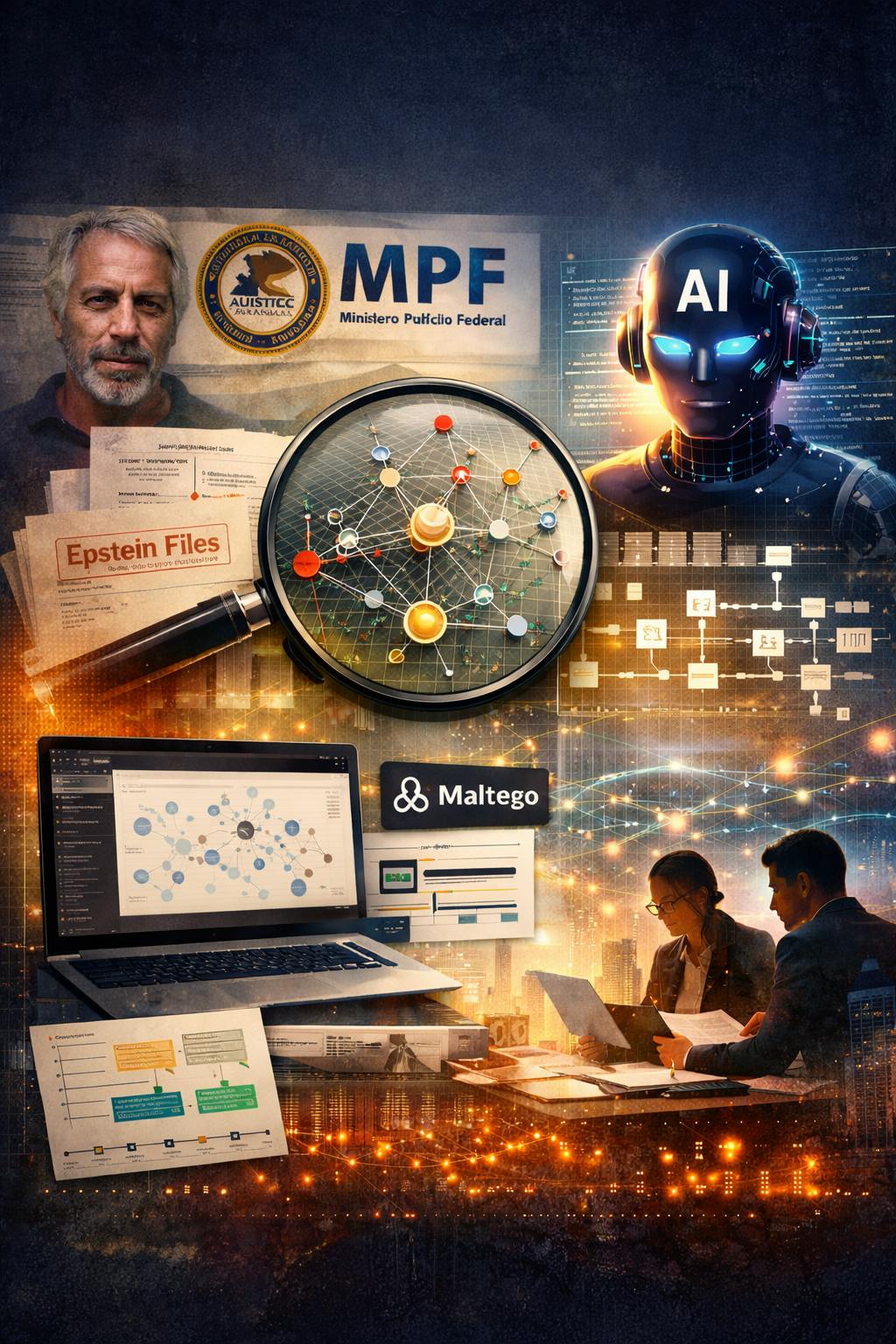

In February 2026, I took part in a collective investigation based exclusively on open-source intelligence (OSINT) to contextualize vague references contained in public court records released by the United States Department of Justice (DOJ) in connection with the Epstein case.

This release — one of the largest ever related to the convicted financier Jeffrey Epstein — made millions of pages publicly available starting in January 2026, under the so-called Epstein Files Transparency Act.

What began as collaborative analysis within online communities evolved, within a few days, into technical contributions that supported formal institutional actions. The key differentiator was a small investigation team that combined modern tools with rigorous human curation and efficient communication, and in practice moved faster than much larger structures.

This setup enabled direct collaboration with investigative journalism, which expanded the reach and contextualization of the public data through in-depth reporting, and with Brazil’s Federal Prosecution Service (Ministério Público Federal – MPF). These interactions proved essential for accelerating official procedures, including the opening of an administrative inquiry and its subsequent escalation to a national unit specialized in transnational crimes.

Below is a chronological description of the technical workflow adopted.

Technical methodology (step-by-step)

1. Initial entity extraction and human curation

(Days 1–3 of February 2026)

Public documents — mainly emails and excerpts from 2011 court records released in DOJ datasets — were reviewed manually and with basic supporting tools.

Most of the early analytical value came from human curation: careful reading of socio-economic descriptions, vague geographic references and implicit logistical elements. This stage established the foundation for all subsequent cross-referencing.

2. Entity resolution and OSINT mapping with specialized tools

(Around February 4)

Using exclusively public sources, we performed multi-source correlation involving business registries, corporate structures and open archival datasets.

Maltego was used to map digital networks and associated online connections. Entity-resolution techniques prioritized contextual matches, such as:

- approximate geographic linkage,

- migration or relocation history,

- recurring logistical and temporal patterns,

- indirect but persistent relationships.


As a result, a key intermediary entity was resolved within a matter of hours.
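The contextual matching described above can be illustrated with a minimal sketch. It assumes a simple weighted score over the four signal types listed; the weights, the `Candidate` class and the placeholder names are hypothetical and are not taken from the investigation, where the signals were assessed by human reviewers.

```python
from dataclasses import dataclass

# Hypothetical weights: the article does not state how signals were weighted.
WEIGHTS = {
    "geographic_linkage": 0.35,
    "relocation_history": 0.25,
    "logistical_temporal_pattern": 0.25,
    "persistent_indirect_relationship": 0.15,
}


@dataclass
class Candidate:
    name: str
    signals: dict  # signal name -> strength in [0.0, 1.0], assigned by a reviewer


def context_score(candidate: Candidate) -> float:
    """Weighted sum of the known contextual signals, each clamped to [0, 1]."""
    return sum(
        WEIGHTS[key] * min(max(value, 0.0), 1.0)
        for key, value in candidate.signals.items()
        if key in WEIGHTS
    )


def rank(candidates):
    """Order candidates from strongest to weakest contextual match."""
    return sorted(candidates, key=context_score, reverse=True)


# Placeholder candidates only; no real entities are represented here.
candidates = [
    Candidate("Entity A", {"geographic_linkage": 1.0, "relocation_history": 0.5}),
    Candidate("Entity B", {"geographic_linkage": 0.5}),
    Candidate("Entity C", {"geographic_linkage": 1.0, "relocation_history": 1.0,
                           "logistical_temporal_pattern": 1.0}),
]
ranked = rank(candidates)
```

A score like this only orders candidates for review. It does not replace the manual source verification that confirmed each match.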

3. Graph construction and visualization with Neo4j and Mermaid.js

Resolved entities and relationships were imported into Neo4j, enabling the modeling of complex investigative networks and the execution of graph queries focused on:

- centrality,

- paths and intermediaries,

- logistical and institutional hubs.

This graph-based representation revealed temporal and geographic patterns that were not apparent through linear document analysis.
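To make the query types concrete, here is a small in-memory sketch of two of them: degree centrality (to surface hubs) and shortest paths (to surface intermediaries). It is a plain-Python stand-in rather than the Neo4j queries actually used, and every node name is a hypothetical placeholder.

```python
from collections import deque

# Hypothetical placeholder edges; no real entities from the investigation.
EDGES = [
    ("Person A", "Company X"),
    ("Company X", "Intermediary M"),
    ("Intermediary M", "Agency Y"),
    ("Intermediary M", "Person B"),
    ("Agency Y", "Location Z"),
]


def build_adjacency(edges):
    """Build an undirected adjacency map from (a, b) pairs."""
    adj = {}
    for a, b in edges:
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)
    return adj


def degree_centrality(adj):
    """Fraction of the other nodes each node is directly connected to."""
    n = len(adj)
    if n < 2:
        return {node: 0.0 for node in adj}
    return {node: len(neighbours) / (n - 1) for node, neighbours in adj.items()}


def shortest_path(adj, start, goal):
    """Breadth-first search; returns the node list of one shortest path, or None."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == goal:
            return path
        for nxt in sorted(adj.get(node, ())):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None


adj = build_adjacency(EDGES)
centrality = degree_centrality(adj)
hub = max(centrality, key=centrality.get)          # most connected node
path = shortest_path(adj, "Person A", "Location Z")  # chain of intermediaries
```

In this toy graph the hub is the node through which the path between the two endpoints passes. That is the kind of pattern that is hard to see when reading documents one at a time.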

The entire workflow was visually documented using Mermaid.js, adopting a diagrams-as-code approach integrated into Markdown. We produced:

- process flowcharts,

- timelines,

- entity-relationship graphs.

This greatly facilitated collaborative review, traceability and methodological transparency.
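As an illustration of the diagrams-as-code idea, the sketch below turns a list of relationships into Mermaid flowchart text that can be pasted into a Markdown file. The `to_mermaid` helper and the sample edges are hypothetical, and the sketch assumes node names and labels contain no double quotes or pipe characters.

```python
def to_mermaid(edges, direction="LR"):
    """Render (source, label, target) triples as Mermaid flowchart text."""
    ids = {}
    lines = [f"flowchart {direction}"]

    def node_id(name):
        # Give each distinct name a short stable id and declare it once.
        if name not in ids:
            ids[name] = f"n{len(ids)}"
            lines.append(f'    {ids[name]}["{name}"]')
        return ids[name]

    for source, label, target in edges:
        s = node_id(source)
        t = node_id(target)
        lines.append(f"    {s} -->|{label}| {t}")
    return "\n".join(lines)


# Placeholder relationships only.
diagram = to_mermaid([
    ("Document set", "mentions", "Entity A"),
    ("Entity A", "linked to", "Intermediary M"),
])
print(diagram)
```

Because the diagram is generated text, it can be versioned and reviewed like any other file, which is what makes the documentation traceable.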

4. AI support (Grok) for chronology and partial analysis

Grok was used as an auxiliary tool to:

- consolidate event timelines,

- identify dates of mentions and document releases,

- summarize selected text snippets,

- suggest optimized queries and candidate links between entities.

AI was used strictly as an operational accelerator. All validation and critical decisions remained under human responsibility and manual source verification.

5. Responsible disclosure, collaboration and immediate impact

(February 4–9)

- ~Day 4: controlled public disclosure of the resolved entities within specialized online communities, along with reference to a formal communication submitted to the competent prosecutorial authority (MPF).

- Days 4–6: amplification by independent investigative journalists, who relied on the same public data to publish in-depth reports, expanding visibility and institutional pressure.

- Days 7–8: extension of the mapping to additional references in the released files, including potential international hubs and publicly listed entities.

- Days 8–9: observed escalation of the administrative procedure to a national unit specialized in transnational crimes, in line with the rapid consolidation and documentation of the OSINT findings.

Guiding principles

- strict reliance on open and publicly available sources only;

- no collection or disclosure of sensitive information beyond what was already public;

- explicit recognition of the collective and collaborative nature of the work (online communities, investigative journalism and MPF);

- continuous emphasis on human curation to ensure accuracy, ethical standards and accountability.

Lessons learned and impact

This case demonstrates how a small, well-coordinated team — using Neo4j for graph modeling, Maltego for network mapping, Mermaid.js for visual documentation and Grok for analytical and chronological support — can produce disproportionate results in open-source investigations.

The central factor was not automation, but rigorous cross-referencing of public data combined with structured and auditable documentation. Direct collaboration with investigative journalism and with the Brazilian Federal Prosecution Service enabled the technical analysis to be converted into practical institutional input.

The case provides a concrete example of ethical and responsible use of OSINT and AI in a high-impact social context.

For professionals working with OSINT, graph databases, investigative process visualization or AI-assisted analysis, this workflow can be adapted to scenarios such as compliance, due diligence, corporate investigations and independent research.

Team workflow, data curation and lightweight frameworks

The investigation was organized using a lightweight, Kanban-inspired workflow to coordinate tasks, control data quality and ensure traceability throughout the OSINT process.

All findings passed through a structured human data-curation pipeline, in which raw extractions were reviewed, normalized and validated before being promoted to the shared graph and documentation layers. Each card in the workflow represented a single investigative hypothesis or entity cluster and followed a clear lifecycle: discovery, preliminary validation, multi-source corroboration, graph integration and publication-ready documentation.
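The card lifecycle can be sketched as a small state machine. The stage names follow the lifecycle described above; the `Card` class, the rule that every transition requires a reviewer note, and the ban on skipping stages are illustrative assumptions rather than a description of the board the team used.

```python
from enum import Enum


class Stage(Enum):
    DISCOVERY = 1
    PRELIMINARY_VALIDATION = 2
    MULTI_SOURCE_CORROBORATION = 3
    GRAPH_INTEGRATION = 4
    PUBLICATION_READY = 5


class Card:
    """One investigative hypothesis or entity cluster (hypothetical model)."""

    def __init__(self, hypothesis):
        self.hypothesis = hypothesis
        self.stage = Stage.DISCOVERY
        self.history = [(Stage.DISCOVERY, "created")]

    def advance(self, reviewer_note):
        """Move to the next stage; stages cannot be skipped (assumed rule)."""
        if not reviewer_note.strip():
            raise ValueError("a reviewer note is required to advance a card")
        if self.stage is Stage.PUBLICATION_READY:
            raise ValueError("card is already at the final stage")
        self.stage = Stage(self.stage.value + 1)
        self.history.append((self.stage, reviewer_note))
        return self.stage


card = Card("Placeholder hypothesis about Entity A")
card.advance("matches two document excerpts")
card.advance("confirmed in a second public registry")
```

Keeping the note with each transition means the card's history doubles as an audit trail of who decided what, and why.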

Curation played a central role in preventing entity conflation, managing ambiguous references and avoiding premature attribution. Particular attention was given to name disambiguation, geographic uncertainty, temporal consistency and source provenance. Only entities supported by independent public sources and contextual coherence were incorporated into Neo4j and the Mermaid.js documentation.
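One way to express such a promotion rule is a gate that lists what still blocks an entity from entering the graph. This is a sketch under stated assumptions: the threshold of two sources, the use of distinct publishers as a proxy for independence, and all names and URLs are hypothetical.

```python
from dataclasses import dataclass, field

MIN_INDEPENDENT_SOURCES = 2  # assumed threshold, not stated in the article


@dataclass(frozen=True)
class Source:
    url: str
    publisher: str  # distinct publishers approximate independence (assumption)


@dataclass
class EntityRecord:
    name: str
    sources: list = field(default_factory=list)
    open_ambiguities: list = field(default_factory=list)  # e.g. unresolved name clashes


def promotion_blockers(record):
    """Return the reasons a record cannot yet be promoted; empty means eligible."""
    blockers = []
    publishers = {source.publisher for source in record.sources}
    if len(publishers) < MIN_INDEPENDENT_SOURCES:
        blockers.append(
            f"needs {MIN_INDEPENDENT_SOURCES} independent sources, has {len(publishers)}"
        )
    blockers.extend(f"unresolved: {item}" for item in record.open_ambiguities)
    return blockers


# Placeholder record; the URLs are illustrative only.
record = EntityRecord(
    name="Entity A",
    sources=[
        Source("https://example.org/registry/1", "Registry"),
        Source("https://example.org/registry/2", "Registry"),
    ],
    open_ambiguities=["two people share this name"],
)
blockers = promotion_blockers(record)
```

Here two links from the same publisher count as a single source, so the record stays blocked until a second publisher corroborates it and the name clash is resolved.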

This combination of a simple team framework (Kanban-style coordination) with a strict human curation layer ensured operational speed without sacrificing methodological rigor, ethical standards or auditability.

Positive operational and institutional impacts

Combining the lightweight, Kanban-inspired workflow with a strict human data-curation layer produced clear operational and institutional benefits. Task visibility and well-defined curation stages reduced duplication of effort, minimized contradictory hypotheses and accelerated convergence toward high-confidence entities.

From an external perspective, the consistency of curated datasets, the clear provenance of sources and the traceable decision flow enabled faster reuse of the material by investigative journalists and by the Brazilian Federal Prosecution Service (MPF). This significantly lowered the cost of verification, increased trust in the OSINT outputs and facilitated their direct incorporation into formal analytical and administrative procedures.