For decades, building a general-purpose robot, one that can handle complex, multi-step tasks in the messy, unpredictable real world, has remained a persistent challenge. Unlike their specialized counterparts on factory assembly lines, such robots have struggled with the nuances of everyday environments.

Google DeepMind’s Gemini Robotics 1.5 update represents a fundamental architectural shift in this pursuit. Instead of reactive models that simply follow commands, it introduces proactive, reasoning “physical agents,” powered by models that can perceive, plan, think, and act. This is not just an upgrade; it’s a new paradigm for how robots interact with our world.

This article analyzes four of the most impactful breakthroughs in the announcement, each of which brings us closer to a future of truly intelligent, general-purpose robots.

Robots Now ‘Think’ Before They Act

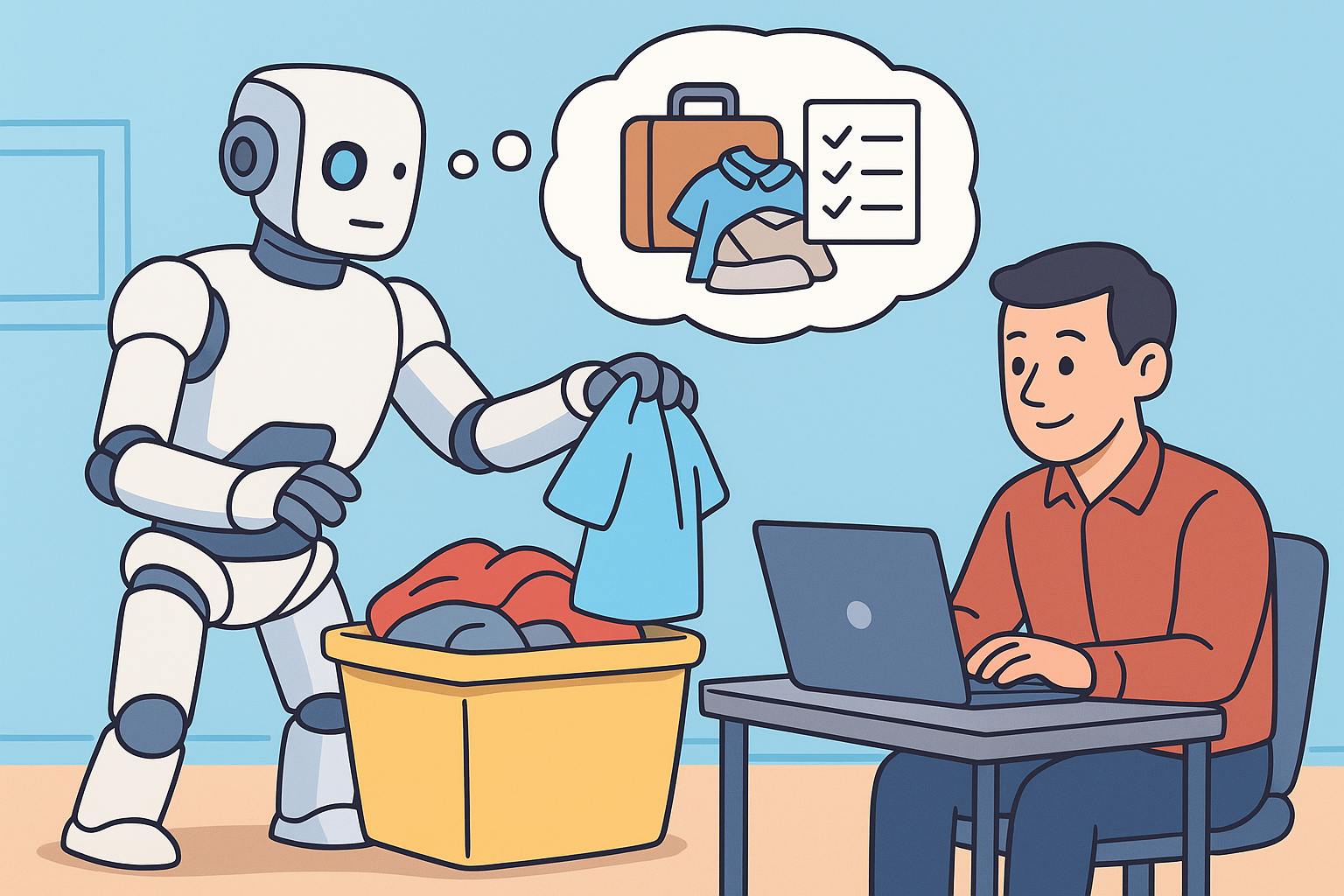

The most profound shift in Gemini Robotics 1.5 is the introduction of “Embodied Thinking.” Unlike traditional models that translate a command directly into movement, these new agents engage in a multi-level internal reasoning process in natural language before performing an action.

This is best illustrated with a practical example: sorting laundry. When asked to “Sort my laundry by color,” the robot first thinks at a high level (“white clothes go in the white bin, other colors in the black bin”). It then decomposes this into a specific step (“pick up the red sweater and put it in the black bin”) and even breaks that step down into an inner monologue of primitive motions, such as “move the gripper to the left” or “close the gripper.”

This capability matters for several reasons. Splitting the work into language-based thinking followed by action makes the system more robust: the thinking step “leverages the powerful visual-linguistic capabilities of the VLM backbone” (the underlying vision-language model) to plan, which leaves a simpler, more concrete problem for the action step. In practice, this lets the robot “think before acting,” which notably improves its ability to decompose and execute complex, multi-step tasks, makes its behavior transparent and interpretable to users, and helps it generalize its skills more effectively.
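To make the three levels of thinking concrete, here is a minimal Python sketch that represents the laundry example as a tree of natural-language thoughts. It is purely illustrative: the `Thought` class and the `flatten` and `primitive_motions` helpers are hypothetical, the trace is written by hand rather than generated by a model, and nothing here reflects how Gemini Robotics 1.5 actually stores or produces its reasoning.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Thought:
    """One node in a hypothetical natural-language reasoning trace."""
    level: str  # "task", "step", or "motion"
    text: str
    children: list[Thought] = field(default_factory=list)


def flatten(thought: Thought, depth: int = 0) -> list[str]:
    """Walk the trace depth-first, returning one indented line per thought."""
    lines = ["  " * depth + f"[{thought.level}] {thought.text}"]
    for child in thought.children:
        lines.extend(flatten(child, depth + 1))
    return lines


def primitive_motions(thought: Thought) -> list[str]:
    """Collect only the leaf-level motions, in the order they would run."""
    if thought.level == "motion":
        return [thought.text]
    motions: list[str] = []
    for child in thought.children:
        motions.extend(primitive_motions(child))
    return motions


# Hand-written trace mirroring the laundry example (not model output).
trace = Thought("task", "white clothes go in the white bin, other colors in the black bin", [
    Thought("step", "pick up the red sweater and put it in the black bin", [
        Thought("motion", "move the gripper to the left"),
        Thought("motion", "close the gripper"),
    ]),
])

print("\n".join(flatten(trace)))
print(primitive_motions(trace))
```

The tree shape captures why the decomposition helps interpretability: a person can read the task and step levels to see what the robot intends, while only the leaf motions need to be turned into physical movement.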

They Can Learn Skills from Different Types of Robots

A long-standing problem in robotics is that machines come in all shapes and sizes, making it difficult to transfer skills learned on one robot to another. The Gemini Robotics 1.5 update addresses this with a breakthrough capability called “Motion Transfer,” allowing the AI to learn across different robot forms, or “embodiments,” without specializing the model for each one.

The impact is significant, but it varies from robot to robot. According to the technical report, for robots with large existing datasets (such as the ALOHA robot), Motion Transfer mainly helps align the different embodiments, amplifying the benefit of training on multi-robot data. For robots with scarce data (such as the Apptronik Apollo humanoid), adding data from other robots provides a large performance boost, while the additional effect of Motion Transfer itself is “less pronounced” because of the significant “embodiment gap.”

The practical result is striking: in one demonstration, tasks shown only to the ALOHA 2 robot could be performed by the Apollo humanoid and the bi-arm Franka robot, and vice versa, without retraining. Motion Transfer therefore helps ease the data-scarcity problem and accelerates learning, although, as the results above show, how much it helps depends on how much data each robot already has.

A ‘Two-Brain’ System Orchestrates Complex Tasks

To handle truly complex missions, the Gemini Robotics 1.5 release pairs two specialized models in an “agentic framework,” much like a Project Manager working with a Skilled Technician. This division of labor separates strategic planning from tactical execution.

The first model, Gemini Robotics-ER 1.5, acts as the “orchestrator” or the Project Manager. It excels at planning and making logical decisions. Its key functions include creating detailed, multi-step plans, understanding its environment with state-of-the-art spatial awareness, and natively calling digital tools like Google Search to gather information.

The orchestrator then gives natural language instructions to the second model, Gemini Robotics 1.5, which serves as the “action model,” or the Skilled Technician. This model uses its advanced vision and language understanding to translate the orchestrator’s commands into specific, low-level physical actions.

A powerful example from the technical report illustrates this partnership: packing a suitcase for a trip to London. The orchestrator (Gemini Robotics-ER 1.5) would first access a travel itinerary and use web search to check the weather forecast in London. Based on that information, it would create a high-level plan (e.g., “pack the rain jacket”) and instruct the action model to perform the physical steps of locating the jacket and placing it in the suitcase.
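The division of labor in the suitcase example can be sketched in a few lines of Python. This is a minimal illustration under loudly stated assumptions: `stub_weather_search` is a hypothetical stand-in for a real web-search tool and returns canned text, the `Orchestrator` and `ActionModel` classes are invented names for the two roles, and the action model here only expands an instruction into named steps instead of producing motor commands. It does not use or imitate any real Gemini API.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


def stub_weather_search(city: str) -> str:
    """Hypothetical stand-in for a web-search tool call; returns canned text."""
    canned = {"London": "rain expected"}
    return canned.get(city, "no forecast found")


@dataclass
class Orchestrator:
    """'Project manager' role: gathers information with tools, then plans."""
    search: Callable[[str], str]

    def plan(self, itinerary: dict[str, str]) -> list[str]:
        forecast = self.search(itinerary["destination"])
        instructions: list[str] = []
        if "rain" in forecast:
            instructions.append("pack the rain jacket")
        return instructions


@dataclass
class ActionModel:
    """'Skilled technician' role: turns one instruction into physical steps."""
    executed: list[str] = field(default_factory=list)

    def execute(self, instruction: str) -> list[str]:
        # A real action model would emit low-level motor commands; this
        # hypothetical version only handles "pack the X" instructions.
        item = instruction.removeprefix("pack the ")
        steps = [f"locate the {item}", f"place the {item} in the suitcase"]
        self.executed.extend(steps)
        return steps


def run_mission(itinerary: dict[str, str], orchestrator: Orchestrator,
                actor: ActionModel) -> list[str]:
    """The orchestrator plans; each natural-language instruction goes to the actor."""
    for instruction in orchestrator.plan(itinerary):
        actor.execute(instruction)
    return actor.executed


actor = ActionModel()
print(run_mission({"destination": "London"},
                  Orchestrator(search=stub_weather_search), actor))
```

Keeping the tool call inside the orchestrator means the action model never needs to know why it is packing the jacket; it only receives a short, concrete instruction, which mirrors the split between strategic planning and tactical execution described above.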

Safety Isn’t an Add-On, It’s Part of Their Reasoning

As robots become more capable, ensuring they act safely is paramount. Google DeepMind’s approach moves beyond traditional robot safety, which focused primarily on physical concerns like low-level collision avoidance. The new paradigm integrates high-level semantic safety directly into the model’s core reasoning.

This “holistic approach” is built on two distinct layers. The first is Safe Human-Robot Dialog, which ensures the robot’s communication aligns with Gemini’s core safety policies to prevent harmful content and ensure respectful interaction. The second, and more novel, layer is Semantic Action Safety. This is the ability to “think about safety before acting” by applying common-sense constraints, such as reasoning that a box is too heavy to lift or that a spilled liquid on the floor presents a slip hazard.
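The general shape of “think about safety before acting” can be sketched as a check that runs before any motion is issued. This Python example is a loose illustration only: in Gemini Robotics 1.5 this reasoning happens in the model’s own natural-language thinking rather than in hand-written rules, and the `Scene` fields, the 3 kg payload limit, and the `safety_concerns` and `act` functions are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Scene:
    """Hypothetical facts a perception system might report about the scene."""
    object_mass_kg: float
    liquid_on_floor: bool


# Hypothetical payload limit for an illustrative robot arm.
MAX_PAYLOAD_KG = 3.0


def safety_concerns(action: str, scene: Scene) -> list[str]:
    """Return human-readable reasons to hold off on an action (empty = proceed)."""
    concerns: list[str] = []
    if action == "lift" and scene.object_mass_kg > MAX_PAYLOAD_KG:
        concerns.append(
            f"object is {scene.object_mass_kg} kg, above the "
            f"{MAX_PAYLOAD_KG} kg payload limit"
        )
    if scene.liquid_on_floor:
        concerns.append("liquid on the floor is a slip hazard")
    return concerns


def act(action: str, scene: Scene) -> str:
    """Check for semantic safety concerns first; only then 'perform' the action."""
    concerns = safety_concerns(action, scene)
    if concerns:
        return "halt: " + "; ".join(concerns)
    return f"proceed: {action}"


print(act("lift", Scene(object_mass_kg=10.0, liquid_on_floor=False)))
print(act("lift", Scene(object_mass_kg=1.0, liquid_on_floor=False)))
```

The point of the sketch is ordering: the safety reasoning produces an explanation before any movement, so a refusal can be communicated to the user instead of discovered through a failed or dangerous action.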

To guide this work, the team has released ASIMOV-2.0, an upgraded version of its ASIMOV benchmark: a comprehensive set of datasets for evaluating and improving a model’s understanding of semantic safety. Building safety into the AI’s core reasoning is a foundational step toward responsibly deploying these advanced robots in our daily lives.

Conclusion: The Dawn of Physical Agents

These four breakthroughs are not independent features; they are interlocking components of what the technical report calls a “complete and powerful agentic system.” The ability to reason before acting is far more valuable when that reasoning can carry over to new robot bodies, which Motion Transfer makes possible. Complex, multi-step tasks depend on the “two-brain” architecture to orchestrate them. And none of this is viable for real-world deployment without a foundational layer of semantic safety built into the agent’s very logic. Together, these advances mark a decisive step beyond reactive machines and toward the dawn of true physical agents.