
Table of links

SEARCH ENGINES AND WOMEN’S DESCRIPTIVE REPRESENTATION

MEASURING THE EXTENT OF ALGORITHMIC REPRESENTATION

GENERAL DISCUSSION, CONCLUSION AND REFERENCES

Abstract

Search engines like Google have become major information gatekeepers that use artificial intelligence (AI) to determine who and what voters find when searching for political information. This article proposes and tests a framework of algorithmic representation of minoritized groups in a series of four studies. First, two algorithm audits of political image searches delineate how search engines reflect and uphold structural inequalities by under- and misrepresenting women and non-white politicians. Second, two online experiments show that these biases in algorithmic representation in turn distort perceptions of political reality and actively reinforce a white and masculinized view of politics. Together, the results have substantive implications for the scientific understanding of how AI technology amplifies biases in political perceptions and decision-making. The article contributes to ongoing public debates and cross-disciplinary research on algorithmic fairness and injustice.

Introduction

Though political landscapes around the world are slowly changing, the face of contemporary political decision-making is still disproportionately male and, in the Global North, disproportionately white. In 2024, women's global descriptive representation in parliaments stands at 26.7% (Inter-Parliamentary Union 2024). In the U.S. House of Representatives, 29% of members are women and 26% are non-white,¹ making the 118th Congress the most ethnically diverse and gender-balanced in US history to date (Pew Research Center 2023a,b). Yet according to census data, women and non-white persons make up 50.5% and 41.1% of the general US population, respectively (U.S. Census Bureau 2023). Decades of research into the mechanisms behind the underrepresentation of minoritized groups indicate that gender and race inequalities in politics have multiple causes, including political structures, gender perceptions, and the media environment (Bratton and Ray 2002; Bos et al. 2022; Dolan 1998; Kahn 1994; Kanthak and Woon 2015; Mendelberg et al. 2014; Thomsen and King 2020; Teele et al. 2018; Dolan and Hansen 2018; Huddy and Terkildsen 1993; O'Brien 2015). In this article, we turn to AI-driven search engines like Google as a novel and so far underexplored driver of the political exclusion of underrepresented groups. Specifically, we propose and test a framework of algorithmic representation to delineate the role of search engines in constructing masculinized and white views of politics and to trace the consequences of such views for political perceptions.
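The distance between the House figures and the census figures can be stated as simple arithmetic. The sketch below uses only the percentages quoted in the paragraph above; the "representation ratio" (seat share divided by population share, where 1.0 would mean proportional representation) is an illustrative measure we chose for this example, not one the article reports.

```python
# Percentages quoted in the text.
house_share = {"women": 29.0, "non_white": 26.0}       # 118th Congress, U.S. House
population_share = {"women": 50.5, "non_white": 41.1}  # U.S. Census Bureau 2023


def representation_gap(legislature_pct: float, population_pct: float) -> float:
    """Percentage-point difference between a group's seat share and its population share."""
    return legislature_pct - population_pct


def representation_ratio(legislature_pct: float, population_pct: float) -> float:
    """Seat share divided by population share (illustrative measure; 1.0 = proportional)."""
    return legislature_pct / population_pct


for group in house_share:
    gap = representation_gap(house_share[group], population_share[group])
    ratio = representation_ratio(house_share[group], population_share[group])
    print(f"{group}: gap = {gap:+.1f} pp, ratio = {ratio:.2f}")

# Prints:
# women: gap = -21.5 pp, ratio = 0.57
# non_white: gap = -15.1 pp, ratio = 0.63
```

In other words, even in the most diverse Congress to date, women hold a little over half the seats that proportional representation would imply.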

Search engines have become major information gatekeepers that use AI to determine who and what voters find when searching for political information (Trielli and Diakopoulos 2022; Urman and Makhortykh 2022; Wallace 2018; White and Horvitz 2015). How information is represented on search engines has been shown to influence perceptions of political campaigns (Epstein and Robertson 2015; Zweig 2017) and individual vote choices (Diakopoulos et al. 2018). The selection and ranking of information by search engines in turn shape perceptions of political realities, and so, crucially, do biases in such algorithmic curation,² including the systematic under- and misrepresentation of gender and racial groups (Makhortykh et al. 2021; Noble 2018; Urman and Makhortykh 2022). Specifically, search engine outputs that underrepresent women or non-white politicians may reinforce inequalities in politics by reifying the collective stereotypical representation of politicians as white and male (Bateson 2020; Corbett et al. 2022; Stokes-Brown and Dolan 2010; Vlasceanu and Amodio 2022; Philpot and Walton 2007).

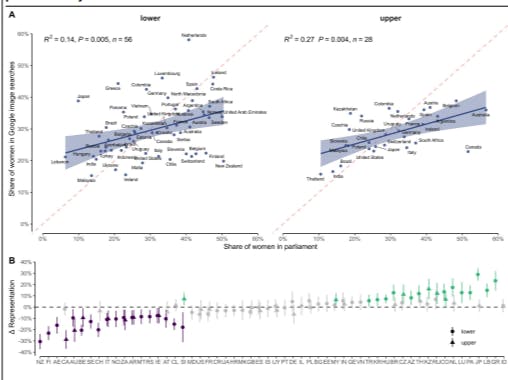

Theoretically, our framework of algorithmic representation integrates established literature on descriptive representation and gendered mediation with more recent work on strategic discrimination to show how algorithmic biases systematically shift perceptions of political realities in ways that exacerbate existing gender and race inequalities. We combine evidence from two observational algorithm audits with two survey experiments that test the causal relationships. The results support our assumptions regarding algorithmic representation in the context of politics. In our algorithm audits (studies 1 and 2), we find that women are consistently underrepresented in Google searches for political information across 56 countries and for both lower and upper legislative bodies. Moreover, this algorithmic underrepresentation correlates moderately with women's actual descriptive representation in countries' legislative bodies. The experimental analyses (studies 3 and 4) show that underrepresenting women or non-white politicians in Google search outputs leads voters to underestimate these groups' descriptive representation by roughly 10 percentage points. Crucially, mediation analyses suggest that this perceptual bias regarding descriptive representation in turn produces undesirable perceptions of the viability of politicians from minoritized groups.
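To make the audit logic concrete, here is a minimal sketch, assuming an audit records, for each country, the share of women among politicians shown in political image search results and the share of women in the matching legislative body. The numbers are hypothetical placeholders, not the article's data, and the plain Pearson coefficient is an illustrative choice; the article's actual coding and estimation procedures are not described in this section.

```python
from math import sqrt

# HYPOTHETICAL toy data for illustration only (not results from the article):
# per-country share of women (%) among politicians shown in image search results,
# and the actual share of women (%) in the corresponding legislative body.
search_share = [18.0, 22.0, 30.0, 25.0, 40.0]
legislature_share = [24.0, 28.0, 35.0, 36.0, 46.0]


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


# Algorithmic underrepresentation per country: negative values mean the search
# results show a smaller share of women than actually hold seats.
gaps = [s - l for s, l in zip(search_share, legislature_share)]
print("gaps (pp):", gaps)
print(f"mean gap: {sum(gaps) / len(gaps):.1f} pp")
print(f"r(search share, legislature share) = {pearson_r(search_share, legislature_share):.2f}")
```

The two quantities answer different questions: the per-country gap captures underrepresentation in search outputs, while the correlation captures whether countries with more women in parliament also show more women in search results.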

The results advance scientific understanding of how AI gatekeepers reflect structural inequalities in politics and how they amplify them by exacerbating biases in political perceptions and decision-making. Our framework of algorithmic representation adds a crucial component to understanding the enduring structural disadvantages of women and people of color in an increasingly AI-driven political landscape. It contributes to ongoing public debates and interdisciplinary research on algorithmic fairness and injustice (Birhane 2021; Kalluri 2020; Wong 2020; Weinberg 2022). Such insight is integral to raising societal awareness of the discriminatory tendencies inherent in AI-driven systems within increasingly digital political spaces (Friesen et al. 2021). Moreover, it provides an empirical basis for developing new regulation to prevent risks associated with the growing adoption of AI and is thus relevant to a broad range of stakeholders, including policymakers and industry.

This paper is