Table Of Links

2.1 Code Review As Communication Network

2.2 Code Review Networks

2.3 Measuring Information Diffusion in Code Review

3.1 Hypotheses

3.2 Measurement model

3.3 Measuring system

ACKNOWLEDGMENTS AND REFERENCES

2 BACKGROUND

2.1 Code Review As Communication Network

The theory of code review as a communication network is based on several exploratory studies that investigated the motivations for and expectations of code review in an industrial context [2, 3, 5, 6, 19]. In a synthesis of the expectations and motivations reported by these exploratory studies, Dorner et al. identified information exchange as the cause of all effects expected from code review [8].

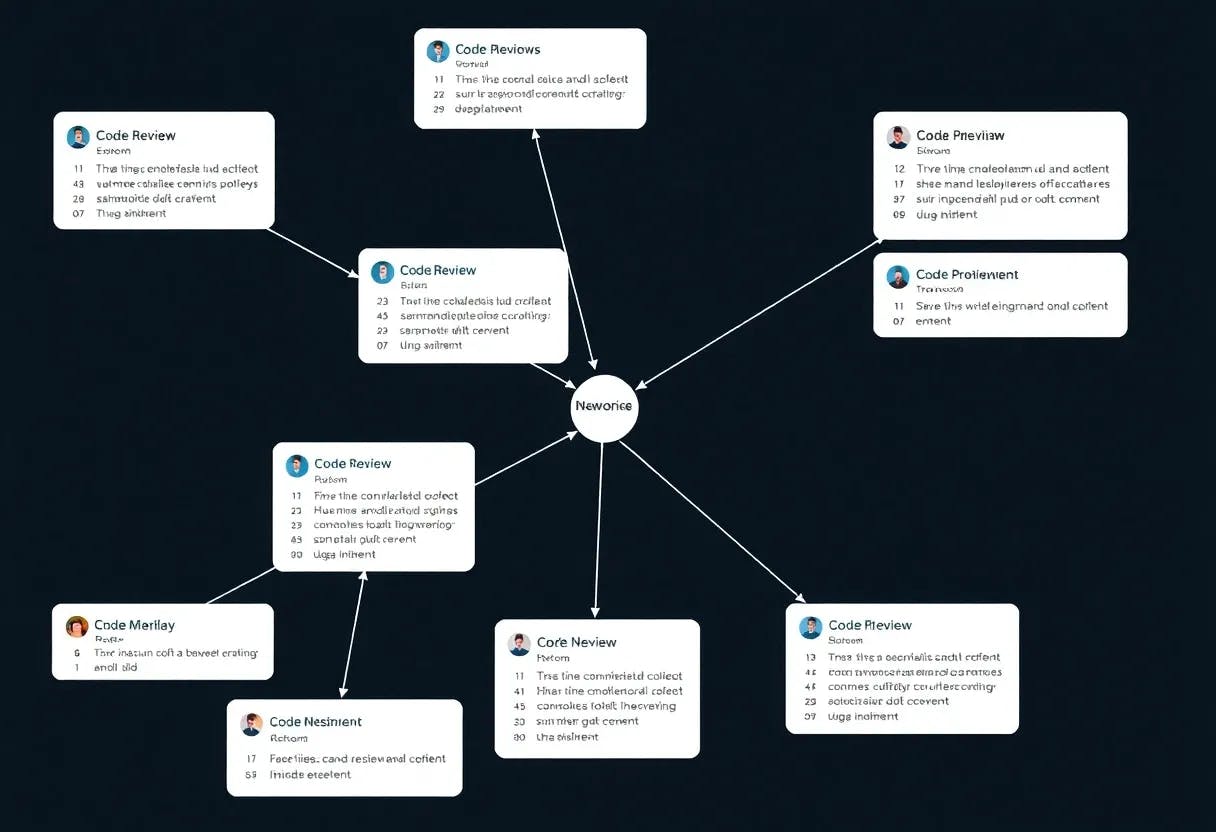

2.2 Code Review Networks

In contrast to other work (e.g., [4, 7, 11, 21, 22]) using social network analysis to investigate code review, we use a code review network as the name suggests: a network of code reviews whose nodes represent code reviews and links indicate the references to other code reviews explicitly and manually added by human code review participants.

This modelling approach is not novel. Li et al. and Hirao et al. used this modelling approach to explore the links between code reviews. Hirao et al. explored the links between code reviews in six open-source projects that use Gerrit as a code review tool [12]. Li et al. extended the modelling and investigation beyond pull requests to issues and identified patterns among the linkings [15].

Although the context (i.e., open-source software development), research objectives, and analyses of those studies are not comparable to ours, our modelling approach for the code review network, which we discuss in detail in Section 3.2, is similar. It differs in that we exclude all nonhuman linking activities (in contrast to [12, 15]) and use code reviews only (in contrast to [15]).
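The modelling idea can be illustrated with a minimal sketch. It assumes a hypothetical record format in which each link names the referencing code review, the referenced code review, and a flag stating whether a human added it. The names `Link` and `build_code_review_network` are illustrative only and are not part of the measurement model in Section 3.2.

```python
from collections import defaultdict
from typing import NamedTuple


class Link(NamedTuple):
    """A reference from one code review to another (hypothetical format)."""
    source: str           # code review that contains the reference
    target: str           # code review that is referenced
    added_by_human: bool  # False for links created by bots or tooling


def build_code_review_network(links):
    """Return an adjacency mapping that keeps only human-added links."""
    network = defaultdict(set)
    for link in links:
        if not link.added_by_human:
            continue  # exclude nonhuman linking activity
        network[link.source].add(link.target)
        network.setdefault(link.target, set())  # referenced reviews are nodes too
    return dict(network)


links = [
    Link("CR-1", "CR-2", True),
    Link("CR-2", "CR-3", False),  # generated by tooling, so it is dropped
    Link("CR-3", "CR-1", True),
]
network = build_code_review_network(links)
```

In this sketch, a code review that is neither the source nor the target of a human-added link does not appear as a node; a fuller model would add all code reviews as nodes first.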

2.3 Measuring Information Diffusion in Code Review

Although different qualitative studies report information sharing as a key expectation towards code review [2, 3, 5, 6, 19], only three prior studies have quantified information exchange in code review. In an in-silico experiment, Dorner et al. simulated an artificial information diffusion within large (Microsoft), mid-sized (Spotify), and small code review systems (Trivago) modelled as communication networks [8]. We measured the minimal topological and temporal distances between the participants to quantify how far and how fast information can spread in code review.
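To make the two distances concrete, the following is a simplified sketch and not the simulation used in [8]. It assumes that each code review is reduced to a single timestamp and a set of participants. The names `Review`, `temporal_distances`, and `topological_distances` are hypothetical, and the topological distance here deliberately ignores time ordering to show how the two views differ.

```python
from collections import deque
from typing import NamedTuple


class Review(NamedTuple):
    time: int                # simplification: one timestamp per code review
    participants: frozenset  # everyone who took part in the code review


def temporal_distances(reviews, source, start=0):
    """Earliest time after `start` at which information can reach each person."""
    arrival = {source: start}
    # Reviews with equal timestamps are processed in input order.
    for review in sorted(reviews, key=lambda r: r.time):
        informed = any(
            arrival.get(p, float("inf")) <= review.time
            for p in review.participants
        )
        if informed:  # an informed participant passes the information on
            for p in review.participants:
                arrival.setdefault(p, review.time)
    return {p: t - start for p, t in arrival.items()}


def topological_distances(reviews, source):
    """Fewest code reviews between `source` and each person, ignoring time."""
    neighbours = {}
    for review in reviews:
        for p in review.participants:
            neighbours.setdefault(p, set()).update(review.participants - {p})
    hops = {source: 0}
    queue = deque([source])
    while queue:  # breadth-first search over co-participation
        person = queue.popleft()
        for other in neighbours.get(person, ()):
            if other not in hops:
                hops[other] = hops[person] + 1
                queue.append(other)
    return hops


reviews = [
    Review(0, frozenset({"cem", "dee"})),  # happens before cem is informed
    Review(1, frozenset({"ana", "ben"})),
    Review(2, frozenset({"ben", "cem"})),
]
temporal = temporal_distances(reviews, "ana")
topological = topological_distances(reviews, "ana")
```

In this toy data, `dee` is reachable in the time-ignoring view but not in the time-respecting one, because the only code review shared with `cem` took place before the information arrived there.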

We found evidence that the communication network emerging from code review scales well and spreads information fast and broadly, corroborating the findings of prior qualitative work. The reported upper bound of information diffusion, however, describes information diffusion in code review under best-case assumptions, which are unlikely to be achieved. While the upper bound already helps us to understand the boundaries of code review as a communication network, it does not substitute for a more profound empirical measurement, for which we lay the foundation with this registered report.

In the first observational study, Rigby and Bird [18] extended the expertise measure proposed by Mockus and Herbsleb [16]. The study contrasts the number of files a developer has modified with the number of files the developer knows about (submitted files ∪ reviewed files) and finds a substantial increase in the number of files a developer knows about exclusively through code review.
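The contrast reduces to a set operation. A minimal sketch with made-up file names follows; the function name `knowledge_counts` is illustrative and not taken from the original study.

```python
def knowledge_counts(submitted, reviewed):
    """Count files modified, files known, and files known only via review."""
    known = submitted | reviewed            # submitted files ∪ reviewed files
    only_via_review = reviewed - submitted  # exposure gained through review alone
    return len(submitted), len(known), len(only_via_review)


submitted = {"auth.py", "db.py"}
reviewed = {"db.py", "api.py", "cache.py"}
counts = knowledge_counts(submitted, reviewed)
```

Here the developer modified two files but knows about four, two of them exclusively through code review.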

A second observational study [19] reports (a) the number of comments per change that a change author receives over their tenure at Google and (b) the median number of files edited, reviewed, and both, as suggested by Rigby and Bird [18]. The study finds that the more senior a code change author is, the fewer code comments he or she receives.

The authors “postulate that this decrease in commenting results from reviewers needing to ask fewer questions as they build familiarity with the codebase and corroborates the hypothesis that the educational aspect of code review may pay off over time.” In its second measurement, the study reproduces the measurements of Rigby and Bird but reports them over the tenure of employees at Google. It shows that reviewed and edited files are, to a large degree, distinct sets.

Although the proposed file-based network creation is a sophisticated approach and may serve as a complementary measurement in future studies, we found the following limitations in the measurements applied in prior work:

• File names may change over time, which introduces an unknown error to those measurements.

• The software-architectural or other technical aspects (e.g., programming language, coding guidelines) of code make the measurements difficult to compare in heterogeneous software projects.

• We are unaware of empirical evidence that passive exposure to files in code review would lead to improved developer fluency.

• The explanatory power of both measurements is limited since the authors set arbitrary boundaries: [18] excluded changes and reviews that contain more than ten files, and [19] limited the tenure of developers to 18 months and aggregated the tenure of developers by three months.

Furthermore, our code-review-based approach differs in two aspects:

First, information in code review is encoded not only in the source code but also in the discussions within a code review. A file-based approach does not reveal this type of information diffusion. Our code-review-based approach includes information encoded in the affected files and in the related discussions and also subsumes information on other abstraction layers of the software system.

Second, a file-based approach assumes a passive and implicit information diffusion. That is, information is passively absorbed during review by the developers. In contrast, the information diffusion captured by a code-review-based approach like ours is an active information diffusion, that is, a developer actively and explicitly links information that she or he deems to be worth linking, which makes linking a human, explicit, and active decision.
