Like many developers, I’d been curious about AI for a while. I’d played with ChatGPT, tested some AI photo tools, and read too many threads about “building the next AI startup in a weekend.”

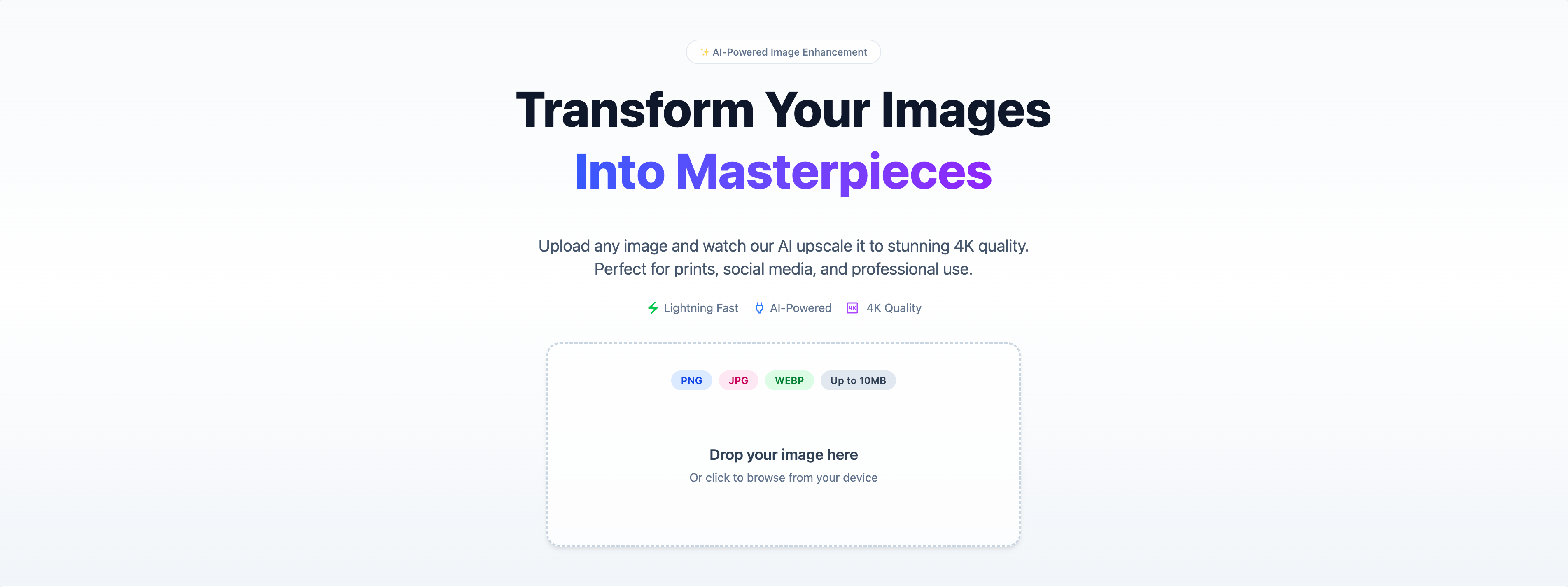

But I wanted to go beyond playing. I wanted to build something real, with actual users. That’s how Photfix was born: my first AI-powered app, which takes low-quality or blurry images and turns them into something clearer, sharper, and upscale-ready.

Spoiler: it wasn’t a smooth ride.

The Idea

I wanted something dead simple for the user:

- Upload an image (could be blurry, low-res, noisy)

- Let AI enhance and upscale it (2K or 4K)

- Download the result

Sure, tools like this already exist. But I wasn’t building it to compete. I wanted to understand the process, test different models, experiment with hosting, and learn what it takes to ship a real AI product.
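The "upscale it (2K or 4K)" step starts with a simple question: what size should the output be? Here's a minimal sketch of that calculation. It assumes "2K" means a 2048 px long edge and "4K" means a 3840 px long edge; Photfix's actual targets may differ. `target_size` and `TARGET_LONG_EDGE` are hypothetical names, and the aspect ratio is preserved.

```python
# Assumed preset sizes (long edge in pixels). Photfix's real targets may differ.
TARGET_LONG_EDGE = {"2K": 2048, "4K": 3840}

def target_size(width: int, height: int, preset: str) -> tuple[int, int]:
    """Scale (width, height) so the long edge matches the preset, keeping the aspect ratio."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    if preset not in TARGET_LONG_EDGE:
        raise ValueError(f"unknown preset: {preset!r}")
    long_edge = TARGET_LONG_EDGE[preset]
    scale = long_edge / max(width, height)
    # The long edge is exact; the short edge is rounded to the nearest pixel.
    if width >= height:
        return long_edge, max(1, round(height * scale))
    return max(1, round(width * scale)), long_edge

print(target_size(640, 480, "4K"))  # (3840, 2880)
print(target_size(480, 640, "2K"))  # (1536, 2048)
```

Fixing the long edge instead of the width means portrait and landscape photos map to the same preset size.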

The Stack

I built both the backend and frontend with Nuxt.js. This helped me move fast, stay in a single repo, and avoid overcomplicating the architecture.

Some of the tech choices:

- Frontend/Backend: Nuxt.js

- Hosting: Docker containers on Dokploy

- Storage: Cloudflare R2

- CDN: Cloudflare

- GPU Inference: Eventually, I landed on Modal.com

- Models Tried: Replicate-hosted models → Real-ESRGAN → other open-source models → custom model weights

Early Attempts: API Pains

At first, I used Replicate to handle the heavy lifting. It was the fastest way to prototype: just send an image and get back an enhanced version.

But the latency was killing the UX. Cold starts, model spin-ups, and slow inference meant users waited 30+ seconds. It wasn’t reliable, and I didn’t have control over the underlying model behavior.

So I dove deeper.
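Hosted model APIs like Replicate's are typically asynchronous. You create a prediction, then poll until it finishes. Here's a minimal sketch of a poller with exponential backoff and a hard deadline, so a slow cold start shows up as a clear timeout instead of an endless spinner. `fetch_status` is a hypothetical stand-in for the real HTTP call. The status strings are assumed to follow Replicate's `starting` / `processing` / `succeeded` / `failed` / `canceled` states.

```python
import time
from typing import Callable

# Assumed to mirror Replicate's terminal prediction states.
TERMINAL_STATUSES = {"succeeded", "failed", "canceled"}

class PredictionTimeout(Exception):
    """Raised when a job is still running at the deadline."""

def wait_for_result(fetch_status: Callable[[], str], timeout_s: float = 30.0,
                    initial_delay_s: float = 0.5, max_delay_s: float = 4.0,
                    sleep: Callable[[float], None] = time.sleep,
                    clock: Callable[[], float] = time.monotonic) -> str:
    """Poll fetch_status() with exponential backoff until a terminal status or the deadline."""
    deadline = clock() + timeout_s
    delay = initial_delay_s
    while True:
        status = fetch_status()
        if status in TERMINAL_STATUSES:
            return status
        remaining = deadline - clock()
        if remaining <= 0:
            raise PredictionTimeout(f"still {status!r} after {timeout_s}s")
        sleep(min(delay, remaining))  # never sleep past the deadline
        delay = min(delay * 2, max_delay_s)

class FakeClock:
    """Simulated clock so the example finishes instantly."""
    def __init__(self) -> None:
        self.now = 0.0

    def time(self) -> float:
        return self.now

    def sleep(self, seconds: float) -> None:
        self.now += seconds

# A fake job that succeeds on the third poll.
clock = FakeClock()
statuses = iter(["starting", "processing", "succeeded"])
result = wait_for_result(lambda: next(statuses), sleep=clock.sleep, clock=clock.time)
print(result, clock.now)
```

Capping each sleep at the remaining time keeps the final wait from overshooting the deadline. Injecting `sleep` and `clock` lets you test the logic without actually waiting.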

Going Open Source (and Failing a Bit)

I started experimenting with open-source models like Real-ESRGAN, self-hosting them with GPU containers on Modal.com.

The first version worked, but:

- The results were... meh.

- Cold starts were still a problem.

- And downloading model weights into containers on every start was painfully slow.

I thought: “Why not just keep the weights in a volume?”

Turns out: still slow.

Docker-Level Optimization

Eventually, I discovered the game-changer: embedding model weights directly into the Docker image. That way, there was zero weight downloading during cold start. Just boot the container and go.

This cut the cold start time significantly—from 25-30 seconds down to under 5 seconds.
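The build-time half of this lives in the image definition, for example a step that downloads the weights file while the Docker image is built. The runtime half is just a check that the file is already on disk. Here's a minimal sketch of that runtime side. It assumes the weights are baked in at a fixed path. `ensure_weights`, the file name, and the fallback `download` callable are hypothetical; this is not Photfix's actual code.

```python
import os
import tempfile
from pathlib import Path
from typing import Callable

# Hypothetical path, assumed to be populated at image build time
# (e.g. by a download step in the Dockerfile), not at container start.
WEIGHTS_PATH = Path("/models/upscaler.pth")

def ensure_weights(path: Path, download: Callable[[Path], None]) -> tuple[Path, bool]:
    """Return (path, downloaded). Skips the download when the weights are already on disk."""
    if path.is_file() and path.stat().st_size > 0:
        return path, False  # fast path: weights shipped inside the image
    # Slow path: the per-cold-start download that baking the weights avoids.
    path.parent.mkdir(parents=True, exist_ok=True)
    partial = path.with_name(path.name + ".part")
    download(partial)
    os.replace(partial, path)  # rename into place so a half-written file is never loaded
    return path, True

# Demo against a temporary directory instead of WEIGHTS_PATH.
calls: list[Path] = []

def fake_download(dest: Path) -> None:
    """Stand-in for a real download; writes a few dummy bytes."""
    calls.append(dest)
    dest.write_bytes(b"\x00" * 16)

with tempfile.TemporaryDirectory() as tmp:
    weights = Path(tmp) / "models" / "upscaler.pth"
    _, first_boot = ensure_weights(weights, fake_download)   # no weights yet: downloads
    _, second_boot = ensure_weights(weights, fake_download)  # already present: skips
print(first_boot, second_boot, len(calls))
```

In a baked image, every boot takes the fast path. The fallback only matters if the build step was skipped.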

GPUs Matter (A Lot More Than I Thought)

I also played with different GPU options.

You’d think the A100 would be the best, right? Turns out, not necessarily for image models.

The NVIDIA L40S gave me better performance for Photfix’s image enhancement workload:

- Faster cold starts

- Faster inference

- Lower cost per processed image (in some setups)

That’s when everything finally started to click.
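"Lower cost per processed image" is easy to sanity-check with back-of-the-envelope arithmetic. Multiply the billed GPU seconds per image by the per-second price, and amortize each cold start over the images that container serves. Here's a minimal sketch. The hourly prices and timings below are made-up placeholders, not real Modal pricing or Photfix measurements.

```python
import math

def cost_per_image(price_per_hour: float, seconds_per_image: float,
                   cold_start_s: float = 0.0, images_per_cold_start: int = 1) -> float:
    """Estimated GPU cost of one image, amortizing a cold start across the images it serves."""
    if price_per_hour < 0 or seconds_per_image < 0 or cold_start_s < 0:
        raise ValueError("prices and durations must be non-negative")
    if images_per_cold_start < 1:
        raise ValueError("images_per_cold_start must be >= 1")
    billed_s = seconds_per_image + cold_start_s / images_per_cold_start
    return price_per_hour / 3600.0 * billed_s

# Placeholder numbers for two hypothetical GPU types.
gpu_x = cost_per_image(price_per_hour=3.60, seconds_per_image=4.0)
gpu_y = cost_per_image(price_per_hour=1.80, seconds_per_image=5.0)

# The same GPU, with a 5 s cold start paid per image vs. shared by 10 images.
cold_every_time = cost_per_image(1.80, 5.0, cold_start_s=5.0, images_per_cold_start=1)
cold_amortized = cost_per_image(1.80, 5.0, cold_start_s=5.0, images_per_cold_start=10)

for name, cost in [("gpu_x", gpu_x), ("gpu_y", gpu_y),
                   ("cold every time", cold_every_time), ("cold amortized", cold_amortized)]:
    print(f"{name}: ${cost:.4f} per image")
```

With these placeholder numbers, the cheaper-per-hour GPU wins even though it is slower per image. Paying a cold start on every request doubles the cost. Plug in your own measured timings before drawing conclusions.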

The Final Product

Photfix is now live and running. Users can upload an image and get an enhanced version in just a few seconds. It works best for:

- Upscaling profile photos

- Restoring old pictures

- Cleaning up low-res screenshots

It's a small tool, but it works. And I built it from scratch—backend, frontend, AI orchestration, and deployment.

Lessons Learned

Here’s what I picked up along the way:

- APIs are great to start, but limited. You’ll eventually want more control over latency, performance, and model behavior.

- Cold start optimizations matter a lot if you're using serverless GPUs. Pre-bake your weights into the image.

- Not all GPUs are created equal. Test different types for your specific workload.

- Shipping is the best teacher. I learned more building this than from any AI course or blog post.
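Acting on "test different types for your specific workload" is easier with a tiny harness. It times the first call separately (the cold path) and summarizes the warm calls that follow. Here's a minimal sketch. `run_once` is whatever triggers one inference on the GPU under test. `SimulatedGpu` is a made-up stand-in with placeholder durations so the example runs instantly.

```python
import statistics
import time
from typing import Callable

def benchmark(run_once: Callable[[], object], warm_runs: int = 10,
              clock: Callable[[], float] = time.perf_counter) -> dict[str, float]:
    """Time the first call (cold path) separately from the warm calls that follow."""
    if warm_runs < 1:
        raise ValueError("warm_runs must be >= 1")
    start = clock()
    run_once()
    cold_s = clock() - start
    warm: list[float] = []
    for _ in range(warm_runs):
        start = clock()
        run_once()
        warm.append(clock() - start)
    return {"cold_s": cold_s,
            "warm_median_s": statistics.median(warm),
            "warm_max_s": max(warm)}

class SimulatedGpu:
    """Made-up stand-in for a real inference call: slow first call, fast afterwards."""
    def __init__(self) -> None:
        self.now = 0.0
        self.calls = 0

    def clock(self) -> float:
        return self.now

    def infer(self) -> None:
        # Placeholder durations, not measurements.
        self.now += 4.0 if self.calls == 0 else 0.5
        self.calls += 1

gpu = SimulatedGpu()
stats = benchmark(gpu.infer, warm_runs=5, clock=gpu.clock)
print(stats)
```

For a real comparison, run it once per GPU type with the same input image. The cold figure is what the first user after an idle period feels. The warm median drives throughput and cost.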

What's Next

I’m now:

- Experimenting with face-aware models for smarter enhancement

- Adding support for batch processing

- Exploring streaming + chunked preview rendering

- Possibly releasing a smaller open-source version for devs

Photfix is far from perfect, but it’s my first real AI app, and it’s live. I use it myself. Others use it too. And that’s what matters.

💡 Want to check it out?

👉 https://photfix.com

📫 Got feedback or want to collab? Ping me.