What began as a promise of democratized AI access through cloud providers has devolved into a frustrating experience of degraded performance, aggressive censorship, and unpredictable costs. For experienced AI users, the solution increasingly lies in self-hosting.

The Hidden Cost of Cloud AI Performance

Cloud AI providers have developed a troubling pattern: launch with exceptional performance to attract subscribers, then gradually degrade service quality. OpenAI users reported that GPT-4o now "responds very quickly, but if the context and instructions are being ignored in order to provide fast responses, then the tool is not usable." This isn't isolated—developers note that ChatGPT's ability to track changes across multiple files and recommend project-wide modifications has vanished entirely. The culprit? Token batching—a technique where providers group multiple user requests together for GPU efficiency, causing individual requests to wait up to 4x longer as batch sizes increase.

The performance degradation extends beyond simple delays. Static batching forces all sequences in a batch to complete together, meaning your quick query waits for someone else's lengthy generation. Even "continuous batching" introduces overhead that slows individual requests. Cloud providers optimize for overall throughput at the expense of your experience—a trade-off that makes sense for their business model but devastates user experience.

Censorship: When Safety Becomes Unusable

Testing reveals Google Gemini refuses to answer 10 out of 20 controversial but legitimate questions—more than any competitor. Applications for sexual assault survivors get blocked as "unsafe content." Historical roleplay conversations suddenly stop working after updates. Mental health support applications trigger safety filters. Anthropic's Claude has become "borderline useless" according to users frustrated with heavy censorship that blocks legitimate use cases.

The Local Advantage

Self-hosted AI eliminates these frustrations entirely. With proper hardware, local inference achieves 1900+ tokens/second—10-100x faster time-to-first-token than cloud services. You maintain complete control over model versions, preventing unwanted updates that break workflows. No censorship filters block legitimate content. No rate limits interrupt your work. No surprise bills from usage spikes. Over five years, cloud subscriptions cost $1,200+ for basic access and 10x more for advanced subscriptions. And AI providers prices are growing and limits become stricter and stricter, while a one-time hardware investment provides unlimited usage with only physical hardware limitations on performance.

Hardware Requirements: Building Your AI Powerhouse

Understanding Model Sizes and Quantization

The key to self-hosting success lies in matching models to your hardware capabilities. Modern quantization techniques compress models without significant quality loss:

What is Quantization? Quantization reduces the precision of model weights from their original floating-point representation to lower-bit formats. Think of it like compressing a high-resolution image—you're trading some detail for dramatically smaller file sizes. In neural networks, this means storing each parameter using fewer bits, which directly reduces memory usage and speeds up inference.

Why Quantization Matters Without quantization, even modest language models would be inaccessible to most users. A 70B parameter model at full precision requires 140GB of memory—beyond most consumer GPUs. Quantization democratizes AI by making powerful models run on everyday hardware, enabling local deployment, reducing cloud costs, and improving inference speed through more efficient memory access patterns.

- FP16 (Full Precision): Original model quality, maximum memory requirements

- 8-bit Quantization: ~50% memory reduction, minimal quality impact

- 4-bit Quantization: ~75% memory reduction, slight quality trade-off

- 2-bit Quantization: ~87.5% memory reduction, noticeable quality degradation

For a 7B parameter model, this translates to 14GB (FP16), 7GB (8-bit), 3.5GB (4-bit), or 1.75GB (2-bit) of memory required.

Popular Open-Source Models and Their Requirements

Small Models (1.5B-8B parameters):

- Qwen3 4B/8B: The latest generation with hybrid thinking modes. Qwen3-4B outperforms many 72B models on programming tasks. Requires ~3-6GB in 4-bit quantization

- DeepSeek-R1 7B: Excellent reasoning capabilities, 4GB RAM minimum

- Mistral Small 3.1 24B: The newest Apache 2.0 model with multimodal capabilities, 128K context window, and 150 tokens/sec performance. Runs on single RTX 4090 or 32GB Mac

Medium Models (14B-32B parameters):

- GPT-OSS 20B: OpenAI's first open model since 2019, Apache 2.0 licensed. MoE architecture with 3.6B active parameters delivers o3-mini performance. Runs on RTX 4080 with 16GB VRAM

- Qwen3 14B/32B: Dense models with thinking mode capabilities. Qwen3-14B matches Qwen2.5-32B performance while being more efficient

- DeepSeek-R1 14B: Optimal on RTX 3070 Ti/4070

- Mistral Small 3.2: Latest update with improved instruction following and reduced repetition

Large Models (70B+ parameters):

- Llama 3.3 70B: ~35GB in 4-bit quantization, needs dual RTX 4090 or A100

- DeepSeek-R1 70B: 48GB VRAM recommended, achievable with 2x RTX 4090

- GPT-OSS 120B: OpenAI's flagship open model with 5.1B active parameters via 128-expert MoE. Matches o4-mini performance, runs on single H100 (80GB) or 2-4x RTX 3090s

- Qwen3-235B-A22B: Flagship MoE model with 22B active parameters, competitive with o3-mini

- DeepSeek-R1 671B: The giant requiring 480GB+ VRAM or specialized setups

Specialized Coding Models:

Small Coding Models (1B-7B active parameters):

- Qwen3-Coder 30B-A3B: MoE model with only 3.3B active parameters. Native 256K context (1M with YaRN) for repository-scale tasks. Runs on RTX 3060 12GB in 4-bit quantization

- Qwen3-Coder 30B-A3B-FP8: Official 8-bit quantization maintaining 95%+ performance. Requires 15GB VRAM, optimal for RTX 4070/3080

- Unsloth Qwen3-Coder 30B-A3B: Dynamic quantizations with fixed tool-calling. Q4_K_M runs on 12GB, Q4_K_XL on 18GB with better quality

Large Coding Models (35B+ active parameters):

- Qwen3-Coder 480B-A35B: Flagship agentic model with 35B active via 160-expert MoE. Achieves 61.8% on SWE-Bench, comparable to Claude Sonnet 4. Requires 8x H200 or 12x H100 at full precision

- Qwen3-Coder 480B-A35B-FP8: Official 8-bit reducing memory to 250GB. Runs on 4x H100 80GB or 4x A100 80GB

- Unsloth Qwen3-Coder 480B-A35B: Q2_K_XL at 276GB runs on 4x RTX 4090 + 180GB RAM. IQ1_M at 150GB feasible on 2x RTX 4090 + 100GB RAM

Hardware Configurations by Budget

Budget Build (~$2,000):

- AMD Ryzen 7 7700X processor

- 64GB DDR5-5600 RAM

- PowerColor RX 7900 XT 20GB or used RTX 3090

- Handles models up to 14B comfortably

Performance Build (~$4,000):

- AMD Ryzen 9 7900X

- 128GB DDR5-5600 RAM

- RTX 4090 24GB

- Runs 32B models efficiently, smaller 70B models with offloading

Professional Setup (~$8,000):

- Dual Xeon/EPYC processors

- 256GB+ RAM

- 2x RTX 4090 or RTX A6000

- Handles 70B models at production speeds

Mac Options:

- MacBook M1 Pro 36GB: Excellent for 7B-14B models, unified memory advantage

- Mac Mini M4 64GB: Comfortable with 32B models

- Mac Studio M3 Ultra 512GB: The ultimate option—runs DeepSeek-R1 671B at 17-18 tokens/sec for ~$10,000

The AMD EPYC Alternative: For ultra-large models, AMD EPYC systems offer exceptional value. A ~$2,500 EPYC 7702 system with 512GB-1TB DDR4 delivers 3.5-8 tokens/sec on DeepSeek-R1 671B—slower than GPUs but vastly more affordable for models this size.

The $2,000 EPYC Build (Digital Spaceport Setup): This configuration can run DeepSeek-R1 671B at 3.5-4.25 tokens/second:

- CPU: AMD EPYC 7702 (64 cores) - $650, or upgrade to EPYC 7C13/7V13 - $599-735

- Motherboard: MZ32-AR0 (16 DIMM slots, 3200MHz support) - $500

- Memory: 16x 32GB DDR4-2400 ECC (512GB total) - $400, or 16x 64GB for 1TB - $800

- Storage: 1TB Samsung 980 Pro NVMe - $75

- Cooling: Corsair H170i Elite Capellix XT - $170

- PSU: 850W (CPU-only) or 1500W (future GPU expansion) - $80-150

- Case: Rack frame - $55

Total Cost: ~$2,000 for 512GB, ~$2,500 for 1TB configuration

Performance Results:

- DeepSeek-R1 671B Q4: 3.5-4.25 tokens/second

- Context Window: 16K+ supported

- Power Draw: 60W idle, 260W loaded

- Memory Bandwidth: Critical—faster DDR4-3200 improves performance significantly

This setup proves that massive models can run affordably on CPU-only systems, making frontier AI accessible without GPU requirements. The dual-socket capability and massive memory support make EPYC ideal for models that exceed GPU VRAM limits.

Source: Digital Spaceport - How To Run Deepseek R1 671b Fully Locally On a $2000 EPYC Server

Software Setup: From Installation to Production

Ollama: The Foundation

Ollama has become the de facto standard for local model deployment, offering simplicity without sacrificing power.

Installation:

# Linux/macOS

curl -fsSL https://ollama.com/install.sh | sh

# Windows: Download installer from ollama.com/download

Essential Configuration:

# Optimize for performance

export OLLAMA_HOST="0.0.0.0:11434" # Enable network access

export OLLAMA_MAX_LOADED_MODELS=3 # Concurrent models

export OLLAMA_NUM_PARALLEL=4 # Parallel requests

export OLLAMA_FLASH_ATTENTION=1 # Enable optimizations

export OLLAMA_KV_CACHE_TYPE="q8_0" # Quantized cache

# Download models

ollama pull qwen3:4b

ollama pull qwen3:8b

ollama pull mistral-small3.1

ollama pull deepseek-r1:7b

Running Multiple Instances: For multi-GPU setups, run separate Ollama instances:

# GPU 1

CUDA_VISIBLE_DEVICES=0 OLLAMA_HOST="0.0.0.0:11434" ollama serve

# GPU 2

CUDA_VISIBLE_DEVICES=1 OLLAMA_HOST="0.0.0.0:11435" ollama serve

Exo.labs: Distributed Inference Magic

Exo.labs enables running massive models across multiple devices—even mixing MacBooks, PCs, and Raspberry Pis.

Installation:

git clone https://github.com/exo-explore/exo.git

cd exo

pip install -e .

Usage: Simply run exo on each device in your network. They automatically discover each other and distribute model computation. A setup with 3x M4 Pro Macs achieves 108.8 tokens/second on Llama 3.2 3B—a 2.2x improvement over single-device performance.

GUI Options

Open WebUI provides the best ChatGPT-like experience:

docker run -d -p 3000:8080 --gpus=all \

-v ollama:/root/.ollama \

-v open-webui:/app/backend/data \

--name open-webui \

ghcr.io/open-webui/open-webui:ollama

Access at http://localhost:3000 for a full-featured interface with RAG support, multi-user management, and plugin system.

GPT4All offers the simplest desktop experience:

- Download from

gpt4all.iofor Windows, macOS, or Linux - One-click installation with automatic Ollama detection

- Built-in model browser and download manager

- Perfect for beginners who want a native desktop app

- Supports local document chat and plugins

AI Studio provides a powerful development-focused interface:

- Multi-model comparison and testing capabilities

- Advanced prompt engineering workspace

- API endpoint management and testing

- Model performance analytics and benchmarking

- Supports Ollama, LocalAI, and custom backends

- Ideal for developers and AI researchers

- Features include conversation branching, prompt templates, and export options

SillyTavern excels for creative applications and character-based interactions, offering extensive customization for roleplay and creative writing scenarios.

Remote Access with Tailscale: Your AI Everywhere

One of the most powerful aspects of self-hosting AI is the ability to access your models from anywhere while maintaining complete privacy. Tailscale VPN makes this trivially easy by creating a secure mesh network between all your devices.

Setting Up Tailscale for Remote AI Access

Install Tailscale on your AI server:

# Linux/macOS

curl -fsSL https://tailscale.com/install.sh | sh

sudo tailscale up

# Windows: Download from tailscale.com/download

Configure Ollama for network access:

# Set environment variable to listen on all interfaces

export OLLAMA_HOST="0.0.0.0:11434"

ollama serve

Install Tailscale on client devices (laptop, phone, tablet) using the same account. All devices automatically appear in your private mesh network with unique IP addresses (typically 100.x.x.x range).

Check your server's Tailscale IP:

tailscale ip -4

# Example output: 100.123.45.67

Access from any device on your Tailnet:

- Web interface:

http://100.123.45.67:3000(Open WebUI) - API endpoint:

http://100.123.45.67:11434/v1/chat/completions - Mobile apps: Configure Ollama endpoint to your Tailscale IP

Advanced Tailscale Configuration

Enable subnet routing to access your entire home network:

# On AI server

sudo tailscale up --advertise-routes=192.168.1.0/24

# Replace with your actual subnet

Use Tailscale Serve for HTTPS with automatic certificates:

# Expose Open WebUI with HTTPS

tailscale serve https / http://localhost:3000

This creates a public URL like https://your-machine.your-tailnet.ts.net accessible only to your Tailscale network.

Mobile Access Setup

For iOS/Android devices:

- Install Tailscale app from App Store/Play Store

- Sign in with same account

- Install compatible apps:

- iOS: Enchanted, Mela, or any OpenAI-compatible client

- Android: Ollama Android app, or web browser

Configure the app to use your Tailscale IP: http://100.123.45.67:11434

Security Best Practices

Tailscale provides security by default through its encrypted mesh network—no additional firewall configuration needed! The beauty of Tailscale is that it:

- Automatically encrypts all traffic using WireGuard

- Only allows authenticated devices in your network

- Creates isolated connections that bypass your router entirely

- Prevents unauthorized access from the public internet

Since Tailscale traffic is encrypted and only accessible to your authenticated devices, your Ollama server remains completely private even when accessible remotely. No port forwarding, no VPS setup, no complex firewall rules—just secure, direct device-to-device connections.

With Tailscale, your self-hosted AI becomes truly portable—access your models with full privacy whether you're at a coffee shop, traveling, or working from another location. The encrypted mesh network ensures your AI conversations never leave your control.

Agentic Workflows: AI That Actually Works

Goose from Block

Goose transforms your local models into autonomous development assistants capable of building entire projects.

Installation:

curl -fsSL https://github.com/block/goose/releases/download/stable/download_cli.sh | bash

Configuration for Ollama:

goose configure

# Select: Configure Providers → Custom → Local

# Base URL: http://localhost:11434/v1

# Model: qwen3:8b

Goose excels at code migrations, performance optimization, test generation, and complex development workflows. Unlike simple code completion, it plans and executes entire development tasks autonomously.

Crush from Charm

For terminal enthusiasts, Crush provides a glamorous AI coding agent with deep IDE integration.

Installation:

brew install charmbracelet/tap/crush # macOS/Linux

# or

npm install -g @charmland/crush

Ollama Configuration (.crush.json):

{

"providers": {

"ollama": {

"type": "openai",

"base_url": "http://localhost:11434/v1",

"api_key": "ollama",

"models": [{

"id": "qwen3:8b",

"name": "Qwen3 8B",

"context_window": 32768

}]

}

}

}

n8n AI Starter Kit

For visual workflow automation, the n8n self-hosted kit combines everything needed:

git clone https://github.com/n8n-io/self-hosted-ai-starter-kit.git

cd self-hosted-ai-starter-kit

docker compose --profile gpu-nvidia up

Access the visual workflow editor at http://localhost:5678/ with 400+ integrations and pre-built AI templates.

Corporate-Scale Inference: The 50 Million Tokens/Hour Setup

For organizations requiring extreme performance, the boundaries of self-hosting extend far beyond traditional home servers, for example @nisten setup on X.

- Model: Qwen3-Coder-480B (480B parameters, 35B active MoE architecture)

- Hardware: 4x NVidia H200

- Output: 50 million tokens/hour (around $250/hour if using Sonnet)

Cost Analysis

Initial Investment:

- Budget setup: ~$2,000

- Performance setup: ~$4,000

- Professional setup: ~$9,000

Operational Costs:

- Electricity: $50-200/month

- Zero API fees

- No usage limits

- Complete cost predictability

Break-even Timeline: Heavy users recoup investment in 3-6 months. Moderate users break even within a year. The freedom from rate limits, censorship, and performance degradation? Priceless.

Conclusion

Self-hosting AI has evolvedStart small with a single GPU and Ollama. Experiment with different models. Add agentic capabilities. Scale as needed. Most importantly, enjoy the freedom of AI that works exactly as you need it to—no compromises, no censorship, no surprises. Go from experimental curiosity to practical necessity. The combination of powerful open-source models, mature software ecosystems, and accessible hardware creates an unprecedented opportunity for AI independence. Whether you're frustrated with cloud limitations, concerned about privacy, or simply want consistent performance, the path to self-hosted AI is clearer than ever.

Links to the relevant articles on self-hosting:

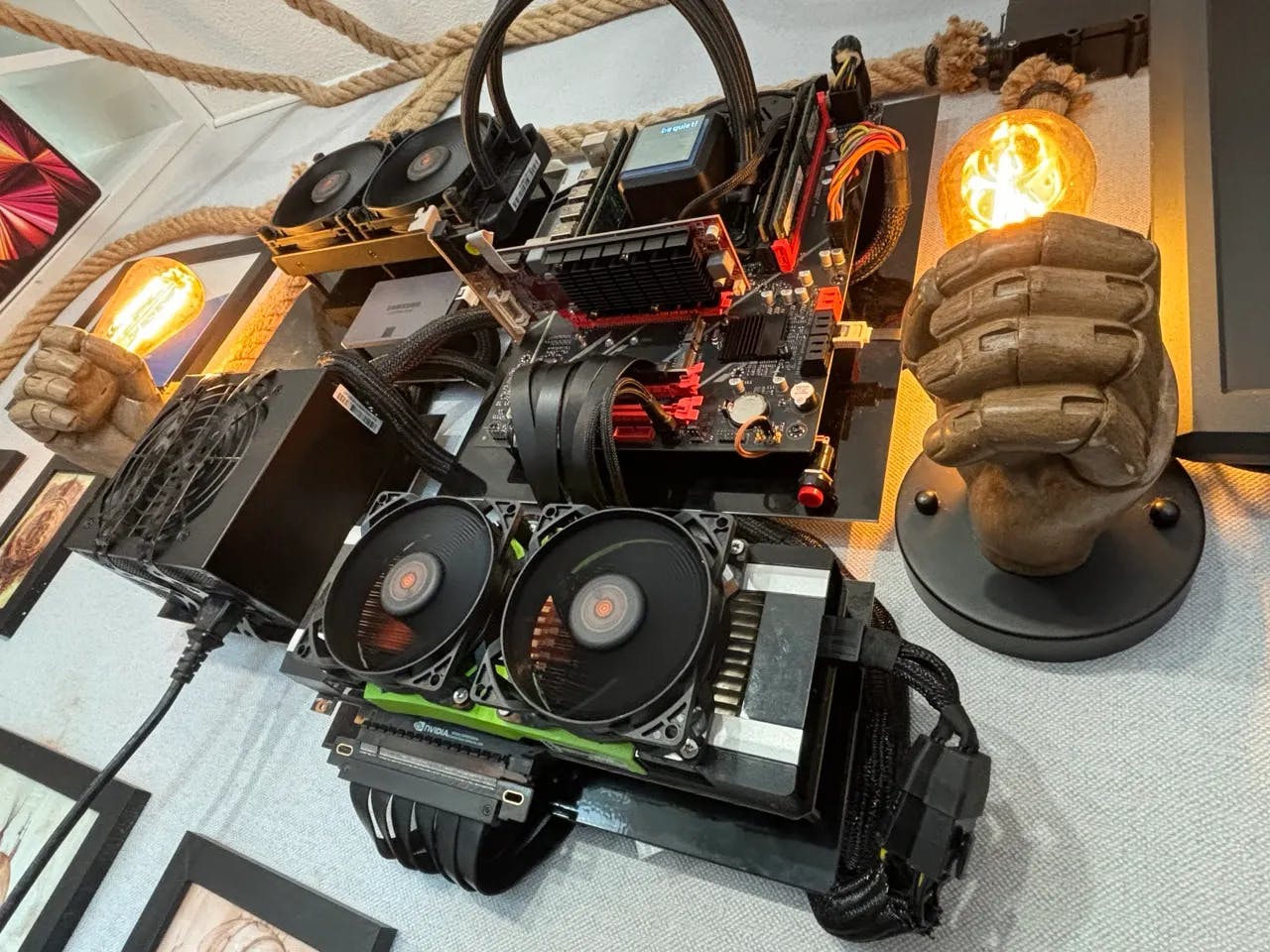

- Ingo Eichhorst and his beautiful setup, photo of which I used for this article: https://ingoeichhorst.medium.com/building-a-wall-mounted-and-wallet-friendly-ml-rig-0683a7094704

- Digital Spaceport EPYC rig: https://digitalspaceport.com/how-to-run-deepseek-r1-671b-fully-locally-on-2000-epyc-rig/

- Show Me Your Rig thread on LocalLLaMa subreddit: https://www.reddit.com/r/LocalLLaMA/comments/1fqwler/show_me_your_ai_rig/

- Ben Arent AI homelab: https://benarent.co.uk/blog/ai-homelab/

- Exo Labs cluster with 5 Mac Studio: https://www.youtube.com/watch?v=Ju0ndy2kwlw