Table of Links

2 INTERACTIVE WORLD SIMULATION

3.1 DATA COLLECTION VIA AGENT PLAY

3.2 TRAINING THE GENERATIVE DIFFUSION MODEL

4.1 AGENT TRAINING

4.2 GENERATIVE MODEL TRAINING

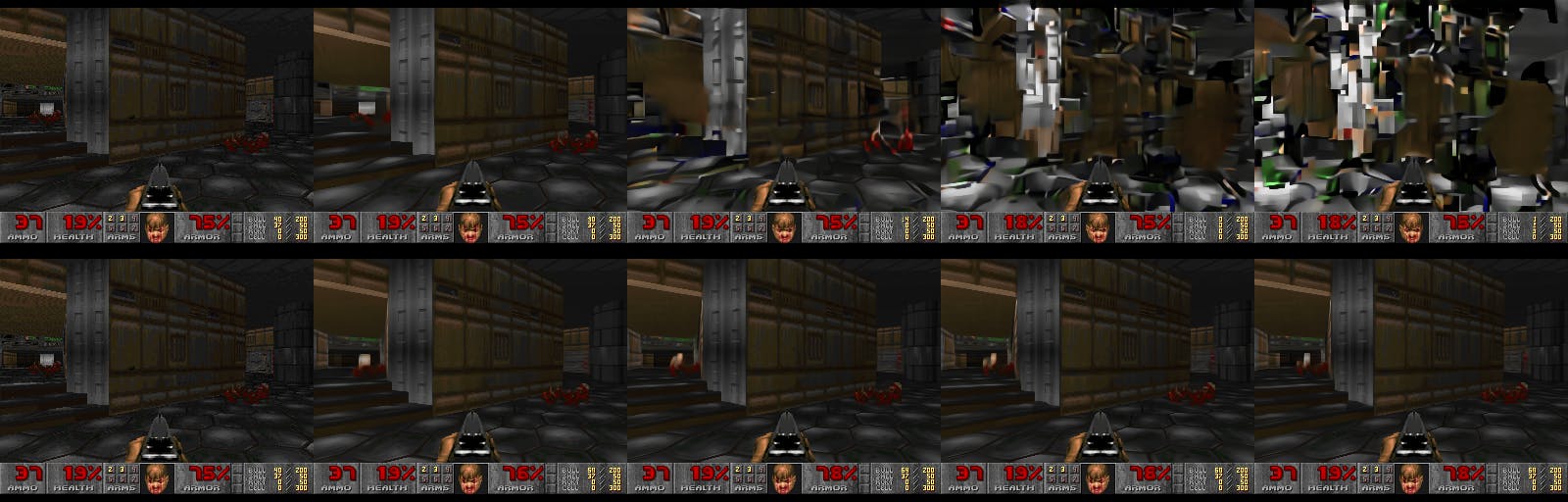

5.1 SIMULATION QUALITY

5.2 ABLATIONS

7 DISCUSSION, ACKNOWLEDGEMENTS AND REFERENCES

4 EXPERIMENTAL SETUP

4.1 AGENT TRAINING

The agent model is trained using PPO (Schulman et al., 2017), with a simple CNN as the feature network, following Mnih et al. (2015). It is trained on CPU using the Stable Baselines 3 infrastructure (Raffin et al., 2021). The agent is provided with downscaled versions of the frame images and the in-game map, each at a resolution of 160x120. The agent also has access to the last 32 actions it performed. The feature network computes a representation of size 512 for each image. PPO’s actor and critic are 2-layer MLP heads on top of a concatenation of the outputs of the image feature network and the sequence of past actions. We train the agent to play the game using the ViZDoom environment (Wydmuch et al., 2019). We run 8 games in parallel, each with a replay buffer size of 512, a discount factor γ = 0.99, and an entropy coefficient of 0.1. In each iteration, the network is trained using a batch size of 64 for 10 epochs, with a learning rate of 1e-4. We perform a total of 10M environment steps.
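To make the scale of this setup concrete, the following is a minimal sketch that collects the stated hyperparameters in one place and derives a few quantities from them. It is not the authors' code. The class name `AgentPPOConfig` and its helper methods are hypothetical. Reading the "replay buffer size of 512" as the per-environment rollout length (`n_steps` in Stable Baselines 3) is an assumption, as is counting the 10M environment steps across all 8 parallel games.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentPPOConfig:
    """Hyperparameters quoted in the text (hypothetical container, not the authors' code)."""

    num_envs: int = 8                  # games run in parallel
    n_steps: int = 512                 # ASSUMPTION: "replay buffer size" = rollout length per game
    batch_size: int = 64
    n_epochs: int = 10
    gamma: float = 0.99                # discount factor
    ent_coef: float = 0.1              # entropy coefficient
    learning_rate: float = 1e-4
    total_env_steps: int = 10_000_000  # ASSUMPTION: counted across all parallel games

    @property
    def transitions_per_iteration(self) -> int:
        # Each iteration collects n_steps transitions from every parallel game.
        return self.num_envs * self.n_steps

    @property
    def gradient_steps_per_iteration(self) -> int:
        # Minibatches per epoch, repeated for n_epochs passes over the rollout.
        return (self.transitions_per_iteration // self.batch_size) * self.n_epochs

    @property
    def num_iterations(self) -> int:
        # Collect-then-update cycles needed to reach the step budget.
        return math.ceil(self.total_env_steps / self.transitions_per_iteration)

    def sb3_kwargs(self) -> dict:
        # Keyword names match the stable_baselines3.PPO constructor; whether the
        # authors passed exactly these values this way is an assumption.
        return {
            "n_steps": self.n_steps,
            "batch_size": self.batch_size,
            "n_epochs": self.n_epochs,
            "gamma": self.gamma,
            "ent_coef": self.ent_coef,
            "learning_rate": self.learning_rate,
        }


cfg = AgentPPOConfig()
print(cfg.transitions_per_iteration)     # 4096
print(cfg.gradient_steps_per_iteration)  # 640
print(cfg.num_iterations)                # 2442
```

Under these assumptions, each iteration gathers 4,096 transitions and performs 640 minibatch updates, so the 10M-step budget corresponds to roughly 2,400 collect-and-update cycles.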

4.2 GENERATIVE MODEL TRAINING

We train all simulation models from a pretrained checkpoint of Stable Diffusion 1.4, unfreezing all U-Net parameters. We use a batch size of 128 and a constant learning rate of 2e-5, with the Adafactor optimizer without weight decay (Shazeer & Stern, 2018) and gradient clipping of 1.0. We change the diffusion loss parameterization to v-prediction (Salimans & Ho, 2022a). The context-frame conditioning is dropped with probability 0.1 to allow classifier-free guidance (CFG) during inference. We train using 128 TPU-v5e devices with data parallelism. Unless noted otherwise, all results in the paper are after 700,000 training steps. For noise augmentation (Section 3.2.1), we use a maximal noise level of 0.7, with 10 embedding buckets. We use a batch size of 2,048 for optimizing the latent decoder; other training parameters are identical to those of the denoiser. For training data, we use all trajectories played by the agent during RL training, as well as evaluation data collected during training, unless mentioned otherwise. Overall, we generate 900M frames for training. All image frames (during training, inference, and conditioning) are at a resolution of 320x240, padded to 320x256. We use a context length of 64 (i.e., the model is provided its own last 64 predictions as well as the last 64 actions).
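Three of the training choices above (v-prediction, context dropout for CFG, and bucketed noise augmentation) can be illustrated with a small, dependency-free sketch. This is not the authors' implementation. All function names are hypothetical. The uniform-width bucketing of noise levels is an assumption. The identity used to recover the clean sample from v assumes a variance-preserving schedule with `alpha_t**2 + sigma_t**2 == 1`, which the text does not state.

```python
import math
import random

MAX_NOISE_LEVEL = 0.7    # maximal noise level, from the text
NUM_NOISE_BUCKETS = 10   # number of embedding buckets, from the text
CONTEXT_DROP_PROB = 0.1  # probability of dropping the context frames, from the text


def noise_bucket(level, max_level=MAX_NOISE_LEVEL, num_buckets=NUM_NOISE_BUCKETS):
    """Map a noise level in [0, max_level] to a bucket index (ASSUMED uniform bins)."""
    if not 0.0 <= level <= max_level:
        raise ValueError("noise level out of range")
    # The top edge (level == max_level) is clamped into the last bucket.
    return min(int(level / max_level * num_buckets), num_buckets - 1)


def drop_context(rng, p=CONTEXT_DROP_PROB):
    """Return True when the context conditioning should be dropped for this example."""
    return rng.random() < p


def v_target(x0, eps, alpha_t, sigma_t):
    """v-prediction regression target: v = alpha_t * eps - sigma_t * x0 (elementwise)."""
    return [alpha_t * e - sigma_t * x for x, e in zip(x0, eps)]


def x0_from_v(z_t, v, alpha_t, sigma_t):
    """Recover x0 = alpha_t * z_t - sigma_t * v (ASSUMES alpha_t^2 + sigma_t^2 = 1)."""
    return [alpha_t * z - sigma_t * vi for z, vi in zip(z_t, v)]


# Toy usage on a 3-element "latent" with an assumed variance-preserving schedule.
rng = random.Random(0)
x0 = [0.5, -1.0, 2.0]
eps = [rng.gauss(0.0, 1.0) for _ in x0]
alpha_t, sigma_t = math.cos(0.4), math.sin(0.4)
z_t = [alpha_t * x + sigma_t * e for x, e in zip(x0, eps)]  # noised sample
v = v_target(x0, eps, alpha_t, sigma_t)                     # what the network regresses
x0_hat = x0_from_v(z_t, v, alpha_t, sigma_t)                # equals x0 up to rounding
```

Dropping the context for about one example in ten trains the same network to produce both conditional and unconditional predictions, which is what makes CFG possible at inference time.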
