If you thought breaking into tech was hard, try staying relevant at an AI company without writing a single line of Python.

It’s a world where every meeting has at least three acronyms you’re pretending to understand, where job postings ask for “AI-native thinking” without defining what that means, and where your engineering team speaks a language that sounds like English but definitely isn’t. I’ve met product managers at LLM startups who don’t know what a context window is. Sales reps at RAG companies who can’t explain what retrieval-augmented generation actually does. Marketing leads writing blog posts about “agentic AI” who think an agent is just a chatbot with a personality.

Here’s the thing, though. The non-technical people who are crushing it in AI? They can’t write Python either. They can explain what RAG does, estimate whether a feature is a two-day or two-month build, and tell when a vendor is lying about their model’s capabilities. Code? Zero.

The non-technical people who are struggling? Some of them even took a Python bootcamp. It didn’t help, because knowing how to write a for loop doesn’t teach you how AI systems actually work. Those are completely different skills.

Think about it like cooking. There’s a massive difference between Gordon Ramsay and someone who can follow a recipe. But there’s also a huge gap between the recipe-follower and someone who doesn’t know what a stove is. Most people working in AI are closer to “doesn’t know what a stove is” than they’d like to admit.

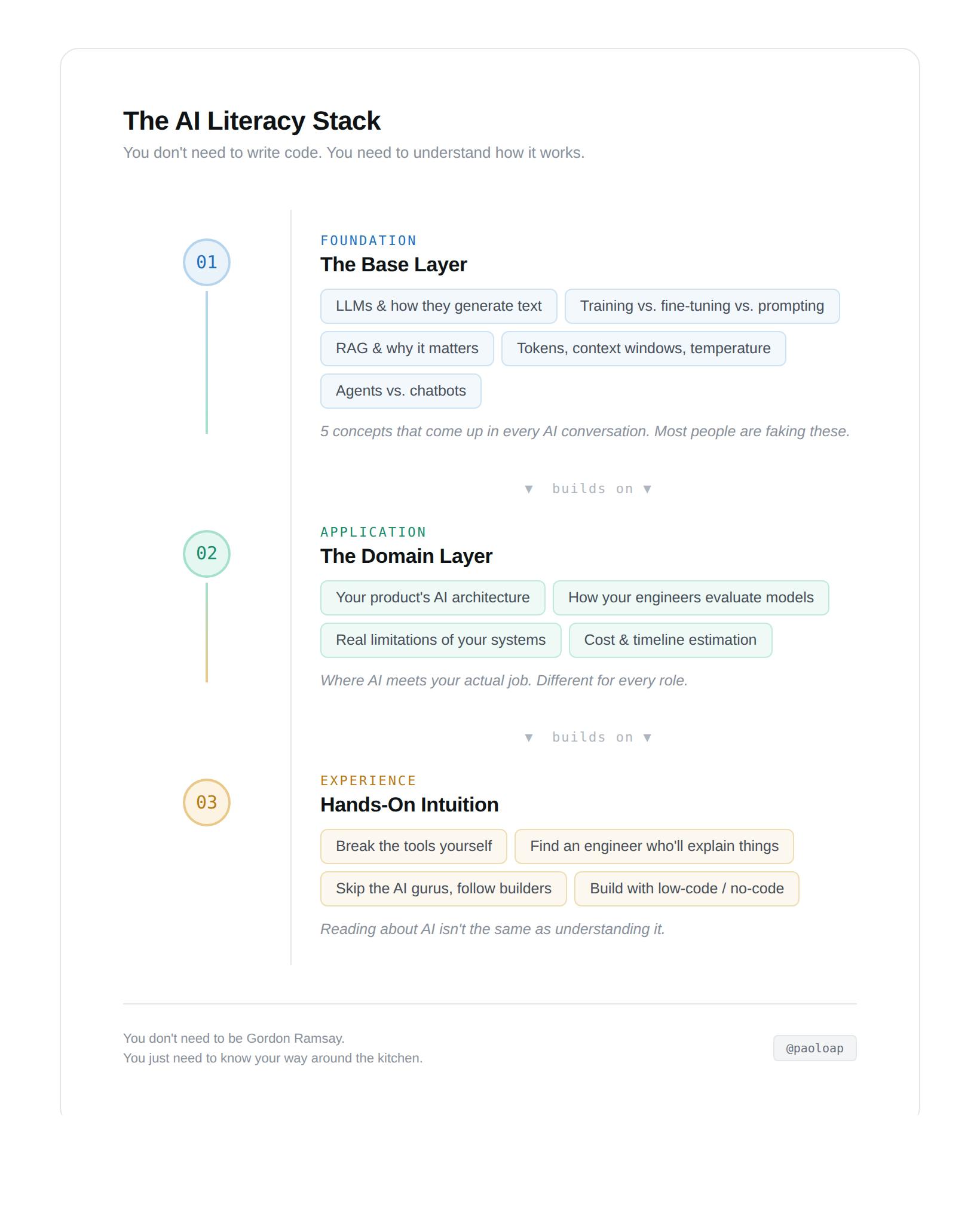

You don’t need to be Gordon Ramsay. But you do need to know your way around the kitchen. That’s why getting AI-literate comes down to three things:

- Master the base layer of concepts that everyone in AI needs to know.

- Build your domain layer so you understand how AI applies to your specific role.

- Develop real intuition by getting your hands dirty with the actual tools.

Step 1: Master the Base Layer

Otherwise known as “the stuff you should really know before your next all-hands,” this is the foundation that makes every other conversation possible.

The base layer isn’t deep technical knowledge. It’s the set of concepts that come up constantly in any AI company, and that you need to understand at an intuitive level. Not the math. The “what is this, why does it matter, and what does it mean when someone brings it up in a meeting” level.

Here’s the short list:

- What’s a large language model and how does it generate text?

- What’s the difference between training, fine-tuning, and prompting?

- What’s RAG and why does every company seem to be building one?

- What are tokens, context windows, and temperature?

- What’s an AI agent and how is it different from a chatbot?

You’d be surprised how far these five concepts alone take you. A product manager who understands the difference between fine-tuning and RAG can save weeks of engineering time on a single spec. (I watched it happen. The PM rewrote “add memory to the AI” as “store user preferences in a structured profile, inject relevant context into the system prompt per session.” The engineering estimate dropped from 3 weeks to 4 days. Same outcome.)
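
You never need to write this yourself, but seeing that spec as code hints at why the estimate could shrink: it is ordinary bookkeeping plus text assembly, with no change to the model itself. Below is a minimal Python sketch under stated assumptions. `UserProfile` and `build_system_prompt` are hypothetical names invented for illustration, and a real product would keep the profile in a database and hand the resulting prompt to whatever model API it uses.

```python
from dataclasses import dataclass, field


# Hypothetical structured profile: preferences the application stores itself,
# rather than anything the model "remembers" between sessions.
@dataclass
class UserProfile:
    name: str
    preferences: dict[str, str] = field(default_factory=dict)


def build_system_prompt(profile: UserProfile, relevant_keys: list[str]) -> str:
    """Inject only the preferences relevant to this session into the system prompt."""
    lines = [
        f"You are a helpful assistant for {profile.name}.",
        "Known user preferences:",
    ]
    for key in relevant_keys:
        if key in profile.preferences:  # skip preferences the user never set
            lines.append(f"- {key}: {profile.preferences[key]}")
    return "\n".join(lines)


profile = UserProfile("Dana", {"tone": "concise", "units": "metric", "language": "English"})
print(build_system_prompt(profile, ["tone", "units"]))
```

Each session rebuilds the prompt from stored data, so the `language` preference stays out of this one simply because it wasn’t requested. Nothing gets retrained; the “memory” lives in the application.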

The challenge is that no single course covers this well. Most “AI for non-technical people” content is either too shallow (here’s what ChatGPT is) or too deep (here’s how backpropagation works). The sweet spot is weirdly hard to find. Your best bet is material published by the AI companies themselves: Anthropic’s research blog, OpenAI’s docs, Hugging Face’s course. These are written for people who use the products, not just people who build them.

Step 2: Build Your Domain Layer

Getting the base layer down is hard enough, but the real leverage comes from connecting those concepts to the specific work you do every day.

For AI literacy to actually change your performance, you have to go beyond general knowledge and understand how AI shows up in your function. This is what separates someone who “knows about AI” from someone their team actually relies on.

The issue with the domain layer is that there’s no one correct answer. It depends entirely on your role:

If you’re a PM, you need to understand your product’s architecture well enough to translate between “business needs X” and “that means we need to do Y with the model.” You need to know what evaluation looks like and why “just make it more accurate” is not a useful product requirement.

If you’re in sales, you need to handle technical questions without reaching for a script. AI-native buyers (especially engineering leaders) will ask about latency, token costs, and how you handle hallucinations. A rep who understood that hallucination is inherent to how LLMs work, and could explain how their product used retrieval to ground suggestions, closed a six-figure deal in a week. The reps who said “I’ll get back to you on that” didn’t.

If you’re in marketing, credibility is everything. AI audiences are unforgiving. One technically inaccurate blog post and your company loses trust with the exact people you’re trying to reach. I watched a marketing team almost run a campaign claiming their product “eliminates AI hallucinations.” Their own engineers were mortified. The marketer who understood the basics reframed it as “grounded AI with built-in verification.” The campaign performed well. The company didn’t become a punchline on Hacker News.

Think less “I’ll learn AI broadly” and more “where have I lost out by not understanding the technical side?” That’s your domain layer curriculum.

Step 3: Develop Real Intuition

What takes most people a long time to realize is that AI literacy isn’t something you can learn from reading alone. You build it by using the tools, breaking them, and talking to the people who build them.

Use the tools yourself. Not as a consumer. As a student. Play with ChatGPT, Claude, Cursor, Perplexity. Try to make them fail. Find their limits. Understand when they hallucinate and when they don’t. The rise of AI-powered development tools is blurring the line between “technical” and “non-technical” faster than anyone expected. Product managers are building prototypes. Marketers are creating internal tools. None of them are “learning to code.” They’re describing what they want in plain English and letting AI write the code. But using these tools effectively still requires understanding what’s possible and knowing when the output is wrong.

Find an engineer who’ll explain things. If you work at an AI company, you’re surrounded by people who understand this stuff deeply. But come prepared. “Can you explain transformers to me?” is a terrible question. “I was reading about RAG and I understand the retrieval part, but I don’t get how the retrieved context gets combined with the user’s question before it goes to the model” is a great one. Show that you’ve done the work. Engineers respect that.
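
That “great question” has a surprisingly plain answer, and a few lines of code make it concrete. The sketch below assumes a toy setup: three hard-coded documents, and a word-overlap score standing in for the embedding search a real RAG system would typically use. The names `DOCUMENTS`, `words`, `retrieve`, and `build_prompt` are hypothetical, and no model is called. The point is that in the basic pattern, the retrieved text is pasted into the prompt right alongside the user’s question.

```python
import re

# Toy knowledge base (hypothetical); a real system would hold many document chunks.
DOCUMENTS = [
    "Refunds are processed within 5 business days.",
    "Premium plans include priority support.",
    "Passwords need 12 or more characters.",
]


def words(text: str) -> set[str]:
    """Lowercase the text and split it into bare words, dropping punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents sharing the most words with the question.

    Word overlap is a stand-in for the embedding similarity search
    that production RAG systems typically use.
    """
    q = words(question)
    ranked = sorted(docs, key=lambda d: len(q & words(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Combine the retrieved text and the user's question into one model input."""
    context_block = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {question}"
    )


question = "How long do refunds take to be processed?"
print(build_prompt(question, retrieve(question, DOCUMENTS)))
```

Production systems layer chunking, vector search, and citation handling on top, but the combine step often looks much like `build_prompt`: the model just sees one block of text containing both the context and the question.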

Filter your feeds. Ninety percent of AI social media is noise. AI Twitter is full of engagement bait about “10x your productivity with AI.” Ignore most of it. Follow the engineers, researchers, and builders sharing what they’re actually working on. You’ll absorb more from six months of reading practitioner posts than from any course.

Just remember: AI literacy is not a weekend project. The field moves fast, the concepts keep evolving, and there will always be something new to learn. It’s not for the faint of heart. But then again, if it were easy, everyone would do it.

The people who’ll thrive in AI aren’t necessarily the ones writing the code. They’re the ones who understand what the code does, why it matters, and how to use that knowledge to create real value.

Start with the base layer. Build your domain layer. Get your hands dirty with the actual tools. And stop letting “I’m not technical” be your excuse.