In modern data pipelines, data often comes in nested JSON or XML formats. These formats are flexible, allowing hierarchical relationships and arrays, but they can be challenging to handle with traditional relational approaches. Flattening this data efficiently is critical for analytics, reporting, or loading into data warehouses like Snowflake.

In this blog, we explore a dynamic, recursive approach to parsing nested JSON and XML in Spark using a reusable function, flatten_df_recursive.

Real-World Scenario

Imagine working at an e-commerce company:

- Customer Data is stored in JSON files with nested structures for addresses, orders, and payment info.

- Order Details may contain arrays of items, each with nested product info.

- Legacy Partners sends XML files for inventory and shipment updates.

Your goal:

- Load this data into Databricks/Spark.

- Flatten all nested structures dynamically (without hardcoding column names).

- Save the flattened output for analytics, ML, or reporting.

Challenges:

- Unknown nesting levels.

- Arrays with nested structs.

- Frequent schema changes.

This is exactly where recursive flattening comes in handy.

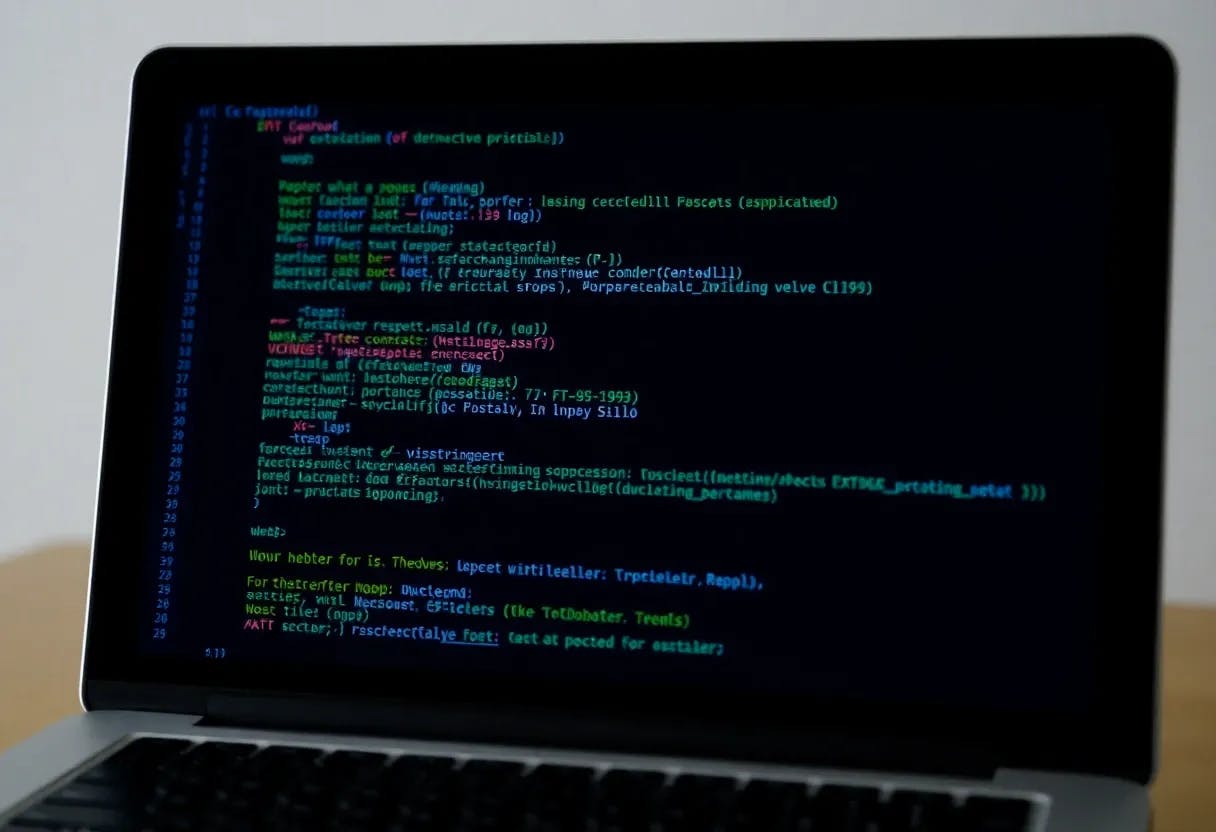

The Recursive Flatten Function

Here’s the core function:

from pyspark.sql.types import StructType, ArrayType

from pyspark.sql.functions import col, explode_outer

def flatten_df_recursive(df):

"""

Recursively flattens all nested StructType and ArrayType columns in a Spark DataFrame.

Supports multiple nested levels for JSON/XML data.

"""

# Track complex fields (StructType or ArrayType)

complex_fields = [(field.name, field.dataType)

for field in df.schema.fields

if isinstance(field.dataType, (StructType, ArrayType))]

while complex_fields:

col_name, col_type = complex_fields.pop(0)

# If StructType, expand its fields with aliases

if isinstance(col_type, StructType):

expanded_cols = [

col(f"{col_name}.{nested_field.name}").alias(f"{col_name}_{nested_field.name}")

for nested_field in col_type.fields

]

df = df.select("*", *expanded_cols).drop(col_name)

# If ArrayType, explode the array

elif isinstance(col_type, ArrayType):

df = df.withColumn(col_name, explode_outer(col(col_name)))

# Refresh the complex fields list after modifications

complex_fields = [(field.name, field.dataType)

for field in df.schema.fields

if isinstance(field.dataType, (StructType, ArrayType))]

return df

Key Features:

- Fully dynamic: Handles any number of nested struct or array levels.

- No hardcoding: Works even if new fields are added later.

- Array support: Automatically explodes arrays to rows.

- XML & JSON friendly: Works with any hierarchical data loaded into Spark.

Reading Nested JSON in Spark

Read the JSON file with multiline

df = spark.read.option("multiline", "true").json("dbfs:/FileStore/temp/orders.json")

display(df)

Passing the JSON Data Frame into flatten_df_recursive will flatten all nested structs and arrays, making the data ready for analytics or reporting.

flat_df = flatten_df_recursive(df)

display(flat_df)

Reading Nested XML in Spark

xml_path = "/dbfs/data/nested_orders.xml"

df = spark.read.format("xml") \

.option("rowTag", "order") \

.load(xml_path)

flat_df = flatten_df_recursive(df)

flat_df.show(truncate=False)

- “rowTag” indicates the repeating XML element representing one record.

- Nested elements and arrays are automatically handled by “flatten_df_recursive”.

Why This Approach Matters

- Dynamic Schema Handling: No need to rewrite flattening logic if JSON/XML structure changes.

- Supports Multi-Level Nesting: Works for deeply nested structs and arrays.

- Scalable: Can process large files on Spark without loading everything in memory.

- Reusable: Works for any source — JSON, XML, Snowflake, Delta, or Parquet with nested structures.

Summary

Nested JSON and XML are common in modern data pipelines, but traditional flattening approaches fail with deep nesting or schema changes. By using a recursive, dynamic Spark flattening function, you can:

- Automatically flatten any depth of nesting.

- Handle arrays and structs seamlessly.

- Integrate with analytics, ML pipelines, and warehouses like Snowflake.

This approach is especially useful for ETL pipelines, data lake ingestion, and reporting systems where data structure evolves frequently.

Here I have attached the entire report for your reference.