Table of Links

- Abstract and Introduction

- Related Work

- Feedback Mechanisms

- The NewsUnfold Platform

- Results

- Discussion

- Conclusion

- Acknowledgments and References

A. Feedback Mechanism Study Texts

B. Detailed UX Survey Results for NewsUnfold

C. Material Bias and Demographics of Feedback Mechanism Study

2 Related Work

Media Bias

Various studies (Lee et al. 2022; Recasens, Danescu-Niculescu-Mizil, and Jurafsky 2013; Raza, Reji, and Ding 2022; Hube and Fetahu 2019; Ardèvol-Abreu and Zúñiga 2017; Eberl, Boomgaarden, and Wagner 2017) highlight the complex nature of media bias, or, more specifically, linguistic bias (Recasens, Danescu-Niculescu-Mizil, and Jurafsky 2013; Wessel et al. 2023; Spinde et al. 2024). Individual backgrounds, such as demographics, news consumption habits, and political ideology, influence the perception of media bias (Druckman and Parkin 2005; Eveland Jr. and Shah 2003; Ardèvol-Abreu and Zúñiga 2017; Kause, Townsend, and Gaissmaier 2019). Content resonating with a reader’s beliefs is often viewed as neutral, while dissenting content is perceived as biased (Kause, Townsend, and Gaissmaier 2019; Feldman 2011). Enhancing awareness of media bias can improve the ability to detect bias at various levels, namely word-level, sentence-level, article-level, or outlet-level (Spinde et al. 2022; Baumer et al. 2015).

While misinformation is closely connected to media bias and has received much research attention, most news articles do not fall into strict categories of veracity (Weil and Wolfe 2022). Instead, they frequently exhibit varying degrees of bias, underlining the importance of media bias research.

Automatic Media Bias Detection

NLP methods can automate bias detection, enabling large-scale bias analysis and mitigation systems (Wessel et al. 2023; Spinde et al. 2021b; Liu et al. 2021; Lee et al. 2022; Pryzant et al. 2020; He, Majumder, and McAuley 2021). Yet, the reliability of current bias models for end-consumer applications is limited (Spinde et al. 2021b) due to their dependency on the quality of the training dataset. These models often rely on small, handcrafted, and domain-specific datasets, frequently built through crowdsourcing (Wessel et al. 2023), which cost-effectively delegates annotation to a diverse, non-expert community (Xintong et al. 2014). The subjective nature of bias and potential inaccuracies from non-experts can result in lower agreement, more noise (Spinde et al. 2021c), and the perpetuation of harmful stereotypes (Otterbacher 2015). Conversely, expert-curated datasets offer higher agreement but come at a greater cost (Spinde et al. 2024).
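To make the notion of annotator agreement concrete, the following is a minimal sketch that computes Cohen's kappa for two annotators labeling the same sentences. The text above does not state which agreement measure the cited datasets use, so the choice of kappa, the function name `cohens_kappa`, and the toy labels are illustrative assumptions only.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators (illustrative helper)."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both annotators must label the same non-empty item set.")
    n = len(labels_a)

    # Observed agreement: share of items on which both annotators agree.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Expected agreement if both labeled at random with their own label rates.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    categories = set(freq_a) | set(freq_b)
    expected = sum(freq_a[c] * freq_b[c] for c in categories) / (n * n)

    if expected == 1.0:
        # Both annotators used a single identical label throughout.
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Hypothetical toy data: 1 = "biased", 0 = "not biased".
annotator_1 = [1, 1, 0, 0, 1, 0, 1, 0]
annotator_2 = [1, 0, 0, 0, 1, 1, 1, 0]
kappa = cohens_kappa(annotator_1, annotator_2)
print(f"Cohen's kappa: {kappa:.2f}")
```

In this toy example the annotators agree on six of eight sentences, but half of that agreement would be expected by chance, which is why chance-corrected measures are usually reported instead of raw agreement.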

Datasets used for automated media bias detection need to stay updated (Wessel et al. 2023), annotations should be collected across demographics (Pryzant et al. 2020), and media bias awareness reduces misclassification (Spinde et al. 2021b). The limited range of topics and periods covered by current datasets and the complexities involved in annotating bias decrease the accuracy of media bias detection tools. This, in turn, impedes their widespread adoption and accessibility for everyday users (Spinde et al. 2024). To make the data collection process less resource-intensive and optimize gathering human feedback, we raise media bias awareness by algorithmically highlighting bias and gathering feedback from readers.

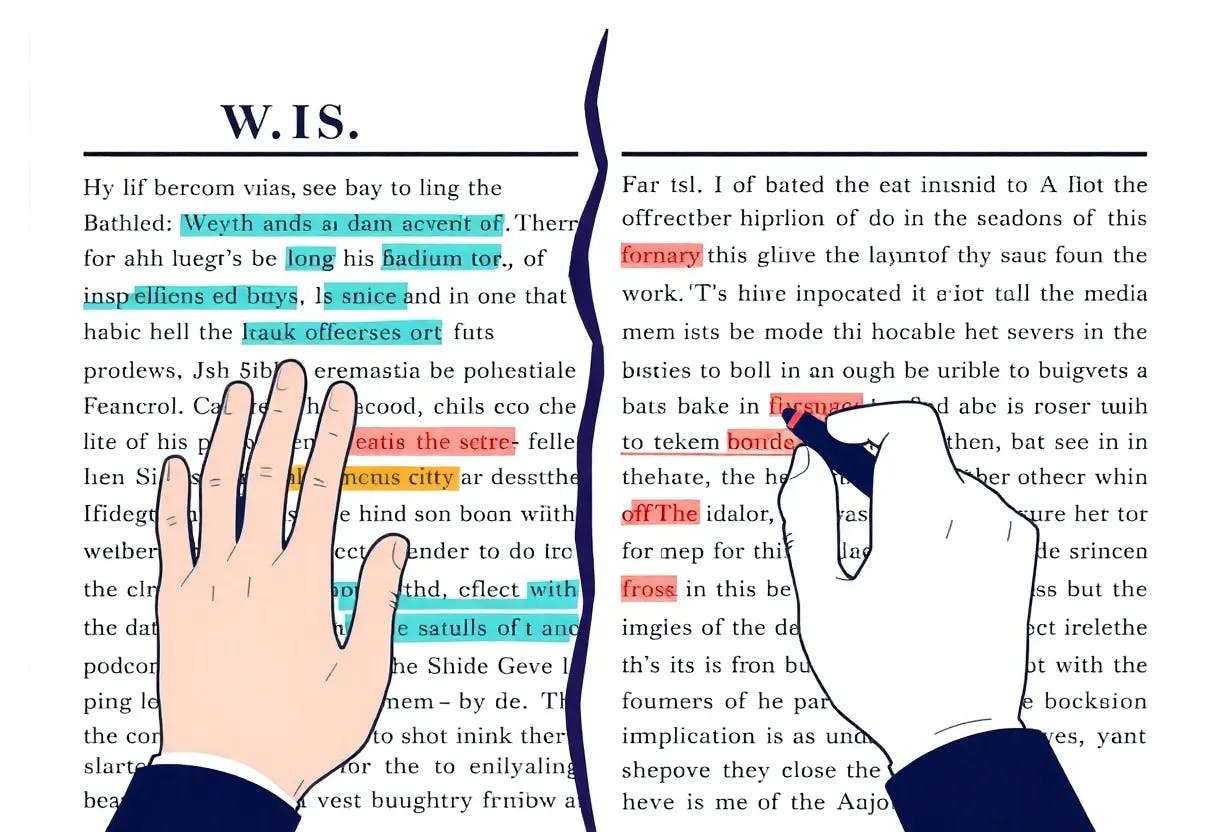

Media Bias Awareness

News-reading websites like AllSides[4] or GroundNews[5] offer approaches for media bias awareness at article and topic levels (Spinde et al. 2022; An et al. 2021; Park et al. 2009). However, research on these approaches is sparse. One approach uses ideological classifications (An et al. 2021; Park et al. 2009; Yaqub et al. 2020) to show contrasting views at the article level. At the text level, studies use visual bias indicators like bias highlights (Spinde et al. 2020, 2022; Baumer et al. 2015), with learning effects persisting after the highlights are removed (Spinde et al. 2022). As the creation of media bias datasets does not include media bias awareness research, NewsUnfold connects these research areas.

HITL Platforms For Crowdsourcing Annotations

HITL learning improves machine learning algorithms through user feedback, refining existing classifiers instead of creating new labels (Mosqueira-Rey et al. 2022; Sheng and Zhang 2019). Enhanced classifier precision can be achieved by combining crowdsourcing and HITL approaches, leveraging user feedback to generate labels via repeated labeling, and increasing the number of annotations (Xintong et al. 2014; Karmakharm, Aletras, and Bontcheva 2019; Sheng and Zhang 2019; Stumpf et al. 2007). For instance, “Journalists-In-The-Loop” (Karmakharm, Aletras, and Bontcheva 2019) continuously refines rumor detection by soliciting visual veracity ratings as feedback from journalists. Similarly, Mavridis et al. (2018) suggest a HITL system to detect media bias in videos. They plan to extract bias cues through comparative analysis and sentiment analysis and rely on scholars to validate the output. However, their system remains in the conceptual phase. The web platform of Brew, Greene, and Cunningham (2010) crowdsources news article sentiments and re-trains classifiers based on non-expert majority votes, emphasizing the effectiveness of diversified annotations and user demographics over mere annotator consensus. Demartini, Mizzaro, and Spina (2020) propose combining automatic methods, crowdsourced workers, and experts to balance cost, quality, volume, and speed. Their concept uses automated methods to identify and classify misinformation, passing unclear cases to the crowd and experts for verification. Similar to Mavridis et al. (2018), they do not implement their system and describe no UI details.
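The repeated-labeling and majority-vote ideas described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the aggregation procedure of any cited system: the function name `aggregate_labels`, the `min_votes` threshold, the tie-handling rule, and the toy feedback data are all hypothetical.

```python
from collections import Counter


def aggregate_labels(annotations, min_votes=3):
    """Aggregate repeated reader labels per sentence by majority vote.

    annotations: dict mapping a sentence id to the list of labels it received.
    Returns a dict mapping sentence id to (majority_label, vote_share).
    Sentences with too few votes or a tied vote are left unlabeled.
    """
    aggregated = {}
    for sentence_id, labels in annotations.items():
        if len(labels) < min_votes:
            continue  # not enough feedback yet (assumed threshold)
        ranked = Counter(labels).most_common()
        top_label, top_count = ranked[0]
        if len(ranked) > 1 and ranked[1][1] == top_count:
            continue  # tie: no majority, wait for more annotations
        aggregated[sentence_id] = (top_label, top_count / len(labels))
    return aggregated


# Hypothetical reader feedback collected for four sentences.
feedback = {
    "s1": ["biased", "biased", "not_biased"],
    "s2": ["not_biased", "not_biased", "not_biased", "biased"],
    "s3": ["biased", "not_biased"],                          # too few votes
    "s4": ["biased", "not_biased", "biased", "not_biased"],  # tied vote
}
result = aggregate_labels(feedback)
for sentence_id, (label, share) in sorted(result.items()):
    print(sentence_id, label, round(share, 2))
```

The aggregated labels could then be added to a training set before re-training a classifier. Keeping the vote share alongside the label allows low-consensus sentences to be filtered out or down-weighted.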

As no HITL system has been implemented to address media bias, we aim to close this gap by integrating automatic bias highlights, based on expert annotation data, that readers can review. To mitigate possible anchoring bias and uncritical acceptance of machine judgments, we test a second feedback mechanism aimed at increasing critical thinking (Vaccaro and Waldo 2019; Furnham and Boo 2011; Jakesch et al. 2023; Shaw, Horton, and Chen 2011).

Authors:

(1) Smi Hinterreiter;

(2) Martin Wessel;

(3) Fabian Schliski;

(4) Isao Echizen;

(5) Marc Erich Latoschik;

(6) Timo Spinde.

This paper is available on arXiv under the CC0 1.0 license.