Citation Note: The content and structure of this article are based on the deep learning lectures from One-Fourth Labs (PadhAI).

Implementation In Python Using Numpy

- Sigmoid Neuron Class

- Overall setup — What is the data, model, task

- Plotting functions — 3D & contour plots

- Individual algorithms and how they perform

from mpl_toolkits.mplot3d import Axes3D

import matplotlib.pyplot as plt

from matplotlib import cm

import matplotlib.colors

from matplotlib import animation, rc

from IPython.display import HTML

import numpy as np

Sigmoid Neuron Implementation

Sigmoid Neuron Recap

Learning Algorithm

Sigmoid Neuron Class

class SN:

    #constructor
    def __init__(self, w_init, b_init, algo):
        self.w = w_init
        self.b = b_init
        self.w_h = []
        self.b_h = []
        self.e_h = []
        self.algo = algo

    #logistic function
    def sigmoid(self, x, w=None, b=None):
        if w is None:
            w = self.w
        if b is None:
            b = self.b
        return 1. / (1. + np.exp(-(w*x + b)))

    #loss function
    def error(self, X, Y, w=None, b=None):
        if w is None:
            w = self.w
        if b is None:
            b = self.b
        err = 0
        for x, y in zip(X, Y):
            err += 0.5 * (self.sigmoid(x, w, b) - y) ** 2
        return err

    def grad_w(self, x, y, w=None, b=None):
        if w is None:
            w = self.w
        if b is None:
            b = self.b
        y_pred = self.sigmoid(x, w, b)
        return (y_pred - y) * y_pred * (1 - y_pred) * x

    def grad_b(self, x, y, w=None, b=None):
        if w is None:
            w = self.w
        if b is None:
            b = self.b
        y_pred = self.sigmoid(x, w, b)
        return (y_pred - y) * y_pred * (1 - y_pred)

    def fit(self, X, Y,
            epochs=100, eta=0.01, gamma=0.9, mini_batch_size=100, eps=1e-8,
            beta=0.9, beta1=0.9, beta2=0.9
            ):
        self.w_h = []
        self.b_h = []
        self.e_h = []
        self.X = X
        self.Y = Y

        if self.algo == 'GD':
            for i in range(epochs):
                dw, db = 0, 0
                for x, y in zip(X, Y):
                    dw += self.grad_w(x, y)
                    db += self.grad_b(x, y)
                self.w -= eta * dw / X.shape[0]
                self.b -= eta * db / X.shape[0]
                self.append_log()

        elif self.algo == 'MiniBatch':
            for i in range(epochs):
                dw, db = 0, 0
                points_seen = 0
                for x, y in zip(X, Y):
                    dw += self.grad_w(x, y)
                    db += self.grad_b(x, y)
                    points_seen += 1
                    if points_seen % mini_batch_size == 0:
                        self.w -= eta * dw / mini_batch_size
                        self.b -= eta * db / mini_batch_size
                        self.append_log()
                        dw, db = 0, 0

        elif self.algo == 'Momentum':
            v_w, v_b = 0, 0
            for i in range(epochs):
                dw, db = 0, 0
                for x, y in zip(X, Y):
                    dw += self.grad_w(x, y)
                    db += self.grad_b(x, y)
                v_w = gamma * v_w + eta * dw
                v_b = gamma * v_b + eta * db
                self.w = self.w - v_w
                self.b = self.b - v_b
                self.append_log()

        elif self.algo == 'NAG':
            v_w, v_b = 0, 0
            for i in range(epochs):
                dw, db = 0, 0
                v_w = gamma * v_w
                v_b = gamma * v_b
                for x, y in zip(X, Y):
                    dw += self.grad_w(x, y, self.w - v_w, self.b - v_b)
                    db += self.grad_b(x, y, self.w - v_w, self.b - v_b)
                v_w = v_w + eta * dw
                v_b = v_b + eta * db
                self.w = self.w - v_w
                self.b = self.b - v_b
                self.append_log()

    #logging
    def append_log(self):
        self.w_h.append(self.w)
        self.b_h.append(self.b)
        self.e_h.append(self.error(self.X, self.Y))

#constructor
def __init__(self, w_init, b_init, algo):
    self.w = w_init
    self.b = b_init
    self.w_h = []
    self.b_h = []
    self.e_h = []
    self.algo = algo

w_init, b_init: These parameters take the initial values of ‘w’ and ‘b’. Instead of setting the parameters randomly, we set them to specific values. This lets us see how an algorithm performs from different starting points, since some algorithms get stuck in local minima for certain initial values.

algo: Specifies which variant of the gradient descent algorithm to use for finding the optimal parameters.

def sigmoid(self, x, w=None, b=None):
    if w is None:
        w = self.w
    if b is None:
        b = self.b
    return 1. / (1. + np.exp(-(w*x + b)))

w & b: Taking ‘w’ and ‘b’ as arguments lets us evaluate the sigmoid function at explicitly specified parameter values. If these arguments are not passed, the function uses the learned parameters to compute the logistic function.
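As a small illustration of these optional arguments, the sketch below re-implements the same logistic function as a standalone helper. The module-level name `sigmoid` and the sample values are assumptions made for this example and are not part of the original class.

```python
import numpy as np

def sigmoid(x, w, b):
    """Logistic function 1 / (1 + exp(-(w*x + b)))."""
    return 1.0 / (1.0 + np.exp(-(w * x + b)))

# Evaluate at explicitly chosen parameters (illustrative values).
# Here w*x + b = 0, so the output sits exactly at the midpoint.
y_mid = sigmoid(x=0.0, w=1.0, b=0.0)

# The function is plain NumPy arithmetic, so w and b may also be arrays,
# for example a small grid of candidate parameters for one input x.
W, B = np.meshgrid(np.linspace(-1, 1, 3), np.linspace(-1, 1, 3))
grid_out = sigmoid(2.0, W, B)  # one value per (w, b) pair
```

Passing arrays for the parameters is what later allows the error to be computed over a whole grid of (w, b) values in one call.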

def error(self, X, Y, w=None, b=None):
    if w is None:
        w = self.w
    if b is None:
        b = self.b
    err = 0
    for x, y in zip(X, Y):
        err += 0.5 * (self.sigmoid(x, w, b) - y) ** 2
    return err

def grad_w(self, x, y, w=None, b=None):
    .....

def grad_b(self, x, y, w=None, b=None):
    .....

def fit(self, X, Y, epochs=100, eta=0.01, gamma=0.9, mini_batch_size=100, eps=1e-8, beta=0.9, beta1=0.9, beta2=0.9):
    self.w_h = []
    .......

def append_log(self):
    self.w_h.append(self.w)
    self.b_h.append(self.b)
    self.e_h.append(self.error(self.X, self.Y))

Setting Up for Plotting

#Data

X = np.asarray([3.5, 0.35, 3.2, -2.0, 1.5, -0.5])

Y = np.asarray([0.5, 0.50, 0.5, 0.5, 0.1, 0.3])

#Algo and parameter values

algo = 'GD'

w_init = 2.1

b_init = 4.0

#parameter min and max values- to plot update rule

w_min = -7

w_max = 5

b_min = -7

b_max = 5

#learning algorithm options

epochs = 200

mini_batch_size = 6

gamma = 0.9

eta = 5

#animation number of frames

animation_frames = 20

#plotting options

plot_2d = True

plot_3d = False

sn = SN(w_init, b_init, algo)

sn.fit(X, Y, epochs=epochs, eta=eta, gamma=gamma, mini_batch_size=mini_batch_size)

plt.plot(sn.e_h, 'r')

plt.plot(sn.w_h, 'b')

plt.plot(sn.b_h, 'g')

plt.legend(('error', 'weight', 'bias'))

plt.title("Variation of Parameters and loss function")

plt.xlabel("Epoch")

plt.show()

3D & 2D Plotting Setup

if plot_3d:
    W = np.linspace(w_min, w_max, 256)
    b = np.linspace(b_min, b_max, 256)
    WW, BB = np.meshgrid(W, b)
    Z = sn.error(X, Y, WW, BB)

    fig = plt.figure(dpi=100)
    # recent Matplotlib releases no longer accept fig.gca(projection='3d')
    ax = fig.add_subplot(projection='3d')
    surf = ax.plot_surface(WW, BB, Z, rstride=3, cstride=3, alpha=0.5, cmap=cm.coolwarm, linewidth=0, antialiased=False)
    cset = ax.contourf(WW, BB, Z, 25, zdir='z', offset=-1, alpha=0.6, cmap=cm.coolwarm)
    ax.set_xlabel('w')
    ax.set_xlim(w_min - 1, w_max + 1)
    ax.set_ylabel('b')
    ax.set_ylim(b_min - 1, b_max + 1)
    ax.set_zlabel('error')
    ax.set_zlim(-1, np.max(Z))
    ax.view_init(elev=25, azim=-75) # azim = -20
    ax.dist=12
    title = ax.set_title('Epoch 0')

def plot_animate_3d(i):
    i = int(i*(epochs/animation_frames))
    line1.set_data(sn.w_h[:i+1], sn.b_h[:i+1])
    line1.set_3d_properties(sn.e_h[:i+1])
    line2.set_data(sn.w_h[:i+1], sn.b_h[:i+1])
    line2.set_3d_properties(np.zeros(i+1) - 1)
    title.set_text('Epoch: {: d}, Error: {:.4f}'.format(i, sn.e_h[i]))
    return line1, line2, title

if plot_3d:

#animation plots of gradient descent

i = 0

line1, = ax.plot(sn.w_h[:i+1], sn.b_h[:i+1], sn.e_h[:i+1], color='black',marker='.')

line2, = ax.plot(sn.w_h[:i+1], sn.b_h[:i+1], np.zeros(i+1) - 1, color='red', marker='.')

anim = animation.FuncAnimation(fig, func=plot_animate_3d, frames=animation_frames)

rc('animation', html='jshtml')

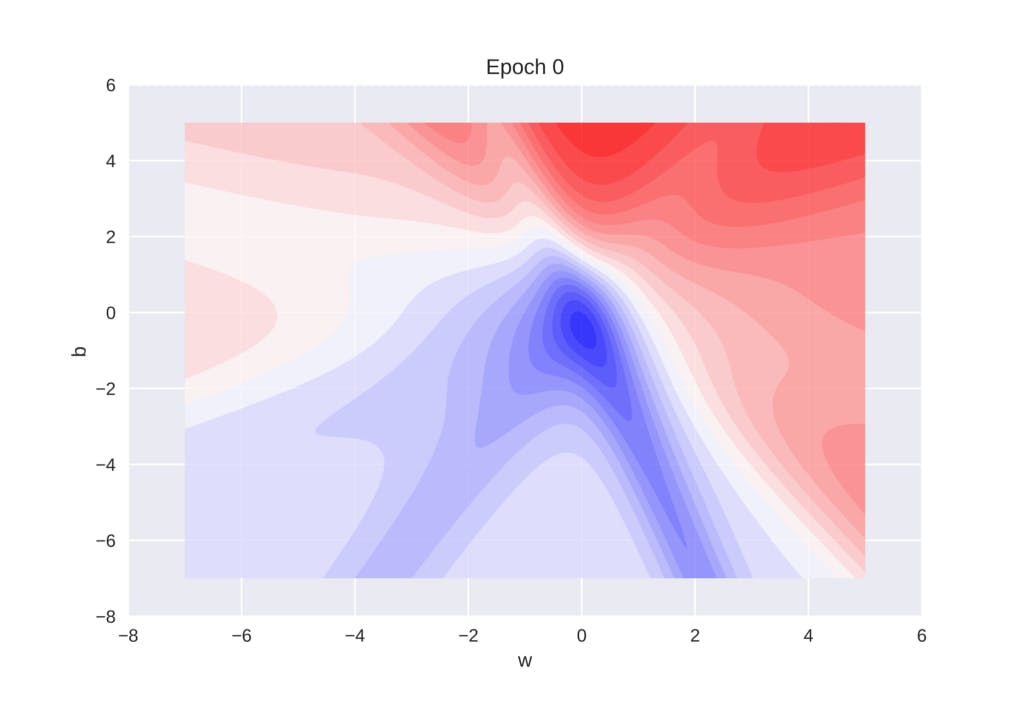

animanimation.FuncAnimationplot_animate_3dplot_animate_3drcif plot_2d:

W = np.linspace(w_min, w_max, 256)

b = np.linspace(b_min, b_max, 256)

WW, BB = np.meshgrid(W, b)

Z = sn.error(X, Y, WW, BB)

fig = plt.figure(dpi=100)

ax = plt.subplot(111)

ax.set_xlabel('w')

ax.set_xlim(w_min - 1, w_max + 1)

ax.set_ylabel('b')

ax.set_ylim(b_min - 1, b_max + 1)

title = ax.set_title('Epoch 0')

cset = plt.contourf(WW, BB, Z, 25, alpha=0.8, cmap=cm.bwr)

plt.savefig("temp.jpg",dpi = 2000)

plt.show()
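Both plots are built from one error value per (w, b) pair on a grid. The following is a minimal standalone sketch of that computation, assuming the two-point toy dataset used in the algorithm sections of this article; the helper names `sigmoid` and `error` at module level are illustrative stand-ins for the class methods.

```python
import numpy as np

def sigmoid(x, w, b):
    return 1.0 / (1.0 + np.exp(-(w * x + b)))

def error(X, Y, w, b):
    """Sum of 0.5 * squared errors; w and b may be scalars or arrays."""
    err = 0.0
    for x, y in zip(X, Y):
        err = err + 0.5 * (sigmoid(x, w, b) - y) ** 2
    return err

X = np.asarray([0.5, 2.5])
Y = np.asarray([0.2, 0.9])

# Same grid resolution and parameter range as the plotting code.
W = np.linspace(-7, 5, 256)
B = np.linspace(-7, 5, 256)
WW, BB = np.meshgrid(W, B)
Z = error(X, Y, WW, BB)  # broadcasting gives one error per grid cell

# Coarse estimate of the minimum: the grid cell with the smallest error.
row, col = np.unravel_index(np.argmin(Z), Z.shape)
w_best, b_best, z_best = WW[row, col], BB[row, col], Z[row, col]
```

The grid minimum is only as precise as the grid spacing, but it is a useful reference point when judging where each optimizer's trajectory ends up on the contour plot.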

def plot_animate_2d(i):
    i = int(i*(epochs/animation_frames))
    line.set_data(sn.w_h[:i+1], sn.b_h[:i+1])
    title.set_text('Epoch: {: d}, Error: {:.4f}'.format(i, sn.e_h[i]))
    return line, title

if plot_2d:
    i = 0
    line, = ax.plot(sn.w_h[:i+1], sn.b_h[:i+1], color='black',marker='.')
    anim = animation.FuncAnimation(fig, func=plot_animate_2d, frames=animation_frames)
    rc('animation', html='jshtml')

anim

Algorithm Implementation

Vanilla Gradient Descent

for i in range(epochs):
    dw, db = 0, 0
    for x, y in zip(X, Y):
        dw += self.grad_w(x, y)
        db += self.grad_b(x, y)
    self.w -= eta * dw / X.shape[0]
    self.b -= eta * db / X.shape[0]
    self.append_log()
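The same update rule can be tried outside the class. The sketch below is a vectorized standalone version, assuming the toy data and starting point used in this section; the function names `gradients`, `loss`, and `gradient_descent` are hypothetical and chosen only for this example.

```python
import numpy as np

def sigmoid(x, w, b):
    return 1.0 / (1.0 + np.exp(-(w * x + b)))

def gradients(X, Y, w, b):
    """Summed gradients of the squared-error loss with respect to w and b."""
    y_pred = sigmoid(X, w, b)
    common = (y_pred - Y) * y_pred * (1 - y_pred)
    return np.sum(common * X), np.sum(common)

def loss(X, Y, w, b):
    return np.sum(0.5 * (sigmoid(X, w, b) - Y) ** 2)

def gradient_descent(X, Y, w, b, eta=1.0, epochs=1000):
    history = [loss(X, Y, w, b)]
    for _ in range(epochs):
        dw, db = gradients(X, Y, w, b)
        # Average the summed gradients over the dataset, as in the class.
        w -= eta * dw / X.shape[0]
        b -= eta * db / X.shape[0]
        history.append(loss(X, Y, w, b))
    return w, b, history

X = np.asarray([0.5, 2.5])
Y = np.asarray([0.2, 0.9])
w, b, history = gradient_descent(X, Y, w=-2.0, b=-2.0)
```

Plotting `history` against the epoch index gives the same kind of error curve that `sn.e_h` produces in the class-based version.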

X = np.asarray([0.5, 2.5])

Y = np.asarray([0.2, 0.9])

algo = 'GD'

w_init = -2

b_init = -2

w_min = -7

w_max = 5

b_min = -7

b_max = 5

epochs = 1000

eta = 1

animation_frames = 20

plot_2d = True

plot_3d = True

Momentum-based Gradient Descent

v_w, v_b = 0, 0
for i in range(epochs):
    dw, db = 0, 0
    for x, y in zip(X, Y):
        dw += self.grad_w(x, y)
        db += self.grad_b(x, y)
    v_w = gamma * v_w + eta * dw
    v_b = gamma * v_b + eta * db
    self.w = self.w - v_w
    self.b = self.b - v_b
    self.append_log()
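To see why the history term speeds things up on gentle slopes, it helps to look at the velocity in isolation. The sketch below assumes a gradient that stays fixed at a made-up value `g` (a simplification; real gradients change every epoch) and compares the accumulated velocity with its closed form.

```python
def momentum_velocity(g, eta=1.0, gamma=0.9, steps=50):
    """Velocity after `steps` updates when the gradient stays fixed at g."""
    v = 0.0
    for _ in range(steps):
        v = gamma * v + eta * g
    return v

g = 0.01  # hypothetical constant gradient
v_50 = momentum_velocity(g)

# Geometric series: v_t = eta * g * (1 - gamma**t) / (1 - gamma)
closed_form = 1.0 * g * (1 - 0.9 ** 50) / (1 - 0.9)

# As t grows, the velocity approaches eta * g / (1 - gamma),
# which is ten times the plain gradient step when gamma = 0.9.
limit = 1.0 * g / (1 - 0.9)
```

Under this assumption, a long run of similar gradients lets each step grow to roughly `1 / (1 - gamma)` times the plain gradient descent step.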

X = np.asarray([0.5, 2.5])

Y = np.asarray([0.2, 0.9])

algo = 'Momentum'

w_init = -2

b_init = -2

w_min = -7

w_max = 5

b_min = -7

b_max = 5

epochs = 1000

mini_batch_size = 6

gamma = 0.9

eta = 1

animation_frames = 20

plot_2d = True

plot_3d = True

Nesterov Accelerated Gradient Descent

v_w, v_b = 0, 0
for i in range(epochs):
    dw, db = 0, 0
    v_w = gamma * v_w
    v_b = gamma * v_b
    for x, y in zip(X, Y):
        dw += self.grad_w(x, y, self.w - v_w, self.b - v_b)
        db += self.grad_b(x, y, self.w - v_w, self.b - v_b)
    v_w = v_w + eta * dw
    v_b = v_b + eta * db
    self.w = self.w - v_w
    self.b = self.b - v_b
    self.append_log()

v_w = gamma * v_w
v_b = gamma * v_b
for x, y in zip(X, Y):
    dw += self.grad_w(x, y, self.w - v_w, self.b - v_b)
    db += self.grad_b(x, y, self.w - v_w, self.b - v_b)
v_w = v_w + eta * dw
v_b = v_b + eta * db
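The only difference from momentum is where the gradient is evaluated. The sketch below makes that visible on a one-dimensional toy problem, f(w) = 0.5 * w**2, which is an assumption chosen for easy hand-checking and not the sigmoid loss used in this article; `run_momentum` and `run_nag` are hypothetical helper names.

```python
def grad(w):
    return w  # gradient of the toy function f(w) = 0.5 * w**2

def run_momentum(w, eta=0.1, gamma=0.9, steps=3):
    v, path = 0.0, [w]
    for _ in range(steps):
        v = gamma * v + eta * grad(w)  # gradient at the current point
        w = w - v
        path.append(w)
    return path

def run_nag(w, eta=0.1, gamma=0.9, steps=3):
    v, path = 0.0, [w]
    for _ in range(steps):
        v = gamma * v                  # partial step from history alone
        v = v + eta * grad(w - v)      # gradient at the look-ahead point
        w = w - v
        path.append(w)
    return path

mom_path = run_momentum(1.0)
nag_path = run_nag(1.0)
```

The first step is identical because the velocity starts at zero. From the second step on, the look-ahead gradient is smaller on this toy function, so NAG takes a slightly shorter step than momentum.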

Mini-Batch and Stochastic Gradient Descent

for i in range(epochs):
    dw, db = 0, 0
    points_seen = 0
    for x, y in zip(X, Y):
        dw += self.grad_w(x, y)
        db += self.grad_b(x, y)
        points_seen += 1
        if points_seen % mini_batch_size == 0:
            self.w -= eta * dw / mini_batch_size
            self.b -= eta * db / mini_batch_size
            self.append_log()
            dw, db = 0, 0

AdaGrad Gradient Descent

v_w, v_b = 0, 0
for i in range(epochs):
    dw, db = 0, 0
    for x, y in zip(X, Y):
        dw += self.grad_w(x, y)
        db += self.grad_b(x, y)
    v_w += dw**2
    v_b += db**2
    # eps goes inside the denominator so it guards against division by zero
    self.w -= eta / (np.sqrt(v_w) + eps) * dw
    self.b -= eta / (np.sqrt(v_b) + eps) * db
    self.append_log()
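The effect of accumulating squared gradients is easiest to see through the effective learning rate alone. The sketch below assumes a made-up sequence of identical gradients and a hypothetical helper `adagrad_effective_rates`; it places `eps` inside the denominator, which is the usual formulation.

```python
import numpy as np

def adagrad_effective_rates(grads, eta=0.1, eps=1e-8):
    """Effective learning rate eta / (sqrt(v) + eps) after each gradient."""
    v = 0.0
    rates = []
    for g in grads:
        v += g ** 2  # the history only ever grows
        rates.append(eta / (np.sqrt(v) + eps))
    return rates

# Hypothetical gradient sequence: the same value four times in a row.
rates = adagrad_effective_rates([0.5, 0.5, 0.5, 0.5])
```

Because `v` never shrinks, the effective rate can only decrease over time, which is why parameters that receive frequent large gradients end up with small steps.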

RMSProp Gradient Descent

v_w, v_b = 0, 0
for i in range(epochs):
    dw, db = 0, 0
    for x, y in zip(X, Y):
        dw += self.grad_w(x, y)
        db += self.grad_b(x, y)
    v_w = beta * v_w + (1 - beta) * dw**2
    v_b = beta * v_b + (1 - beta) * db**2
    # eps goes inside the denominator so it guards against division by zero
    self.w -= eta / (np.sqrt(v_w) + eps) * dw
    self.b -= eta / (np.sqrt(v_b) + eps) * db
    self.append_log()

Adam Gradient Descent

v_w, v_b = 0, 0
m_w, m_b = 0, 0
num_updates = 0
for i in range(epochs):
    dw, db = 0, 0
    for x, y in zip(X, Y):
        dw = self.grad_w(x, y)
        db = self.grad_b(x, y)
        num_updates += 1
        m_w = beta1 * m_w + (1-beta1) * dw
        m_b = beta1 * m_b + (1-beta1) * db
        v_w = beta2 * v_w + (1-beta2) * dw**2
        v_b = beta2 * v_b + (1-beta2) * db**2
        m_w_c = m_w / (1 - np.power(beta1, num_updates))
        m_b_c = m_b / (1 - np.power(beta1, num_updates))
        v_w_c = v_w / (1 - np.power(beta2, num_updates))
        v_b_c = v_b / (1 - np.power(beta2, num_updates))
        # eps goes inside the denominator so it guards against division by zero
        self.w -= eta / (np.sqrt(v_w_c) + eps) * m_w_c
        self.b -= eta / (np.sqrt(v_b_c) + eps) * m_b_c
        self.append_log()

X = np.asarray([3.5, 0.35, 3.2, -2.0, 1.5, -0.5])

Y = np.asarray([0.5, 0.50, 0.5, 0.5, 0.1, 0.3])

algo = 'Adam'

w_init = -6

b_init = 4.0

w_min = -7

w_max = 5

b_min = -7

b_max = 5

epochs = 200

gamma = 0.9

eta = 0.5

eps = 1e-8

animation_frames = 20

plot_2d = True

plot_3d = False
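The role of the bias correction in Adam shows up most clearly on the very first update. The sketch below applies a single Adam step to a made-up gradient value; the helper `adam_step` is hypothetical, and its defaults mirror the `eta`, `beta1`, `beta2`, and `eps` values used in this article.

```python
import numpy as np

def adam_step(g, m, v, t, eta=0.5, beta1=0.9, beta2=0.9, eps=1e-8):
    """One Adam update for a single parameter; returns (delta, m, v)."""
    m = beta1 * m + (1 - beta1) * g        # running mean of gradients
    v = beta2 * v + (1 - beta2) * g ** 2   # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)           # bias-corrected estimates
    v_hat = v / (1 - beta2 ** t)
    delta = eta / (np.sqrt(v_hat) + eps) * m_hat
    return delta, m, v

# First update (t = 1) with a small hypothetical gradient.
delta, m, v = adam_step(g=0.02, m=0.0, v=0.0, t=1)
```

At `t = 1` the corrections undo the zero initialization, so `m_hat` is the gradient itself and `v_hat` is its square. The step size is then close to `eta` regardless of how small the gradient is, which helps Adam move across flat regions.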

LEARN BY PRACTICING

Conclusion

What’s Next?

In my next post, we will discuss different activation functions such as logistic, ReLU, and LeakyReLU, along with initialization techniques such as Xavier and He initialization. Follow me on Medium to get notified as soon as it drops.