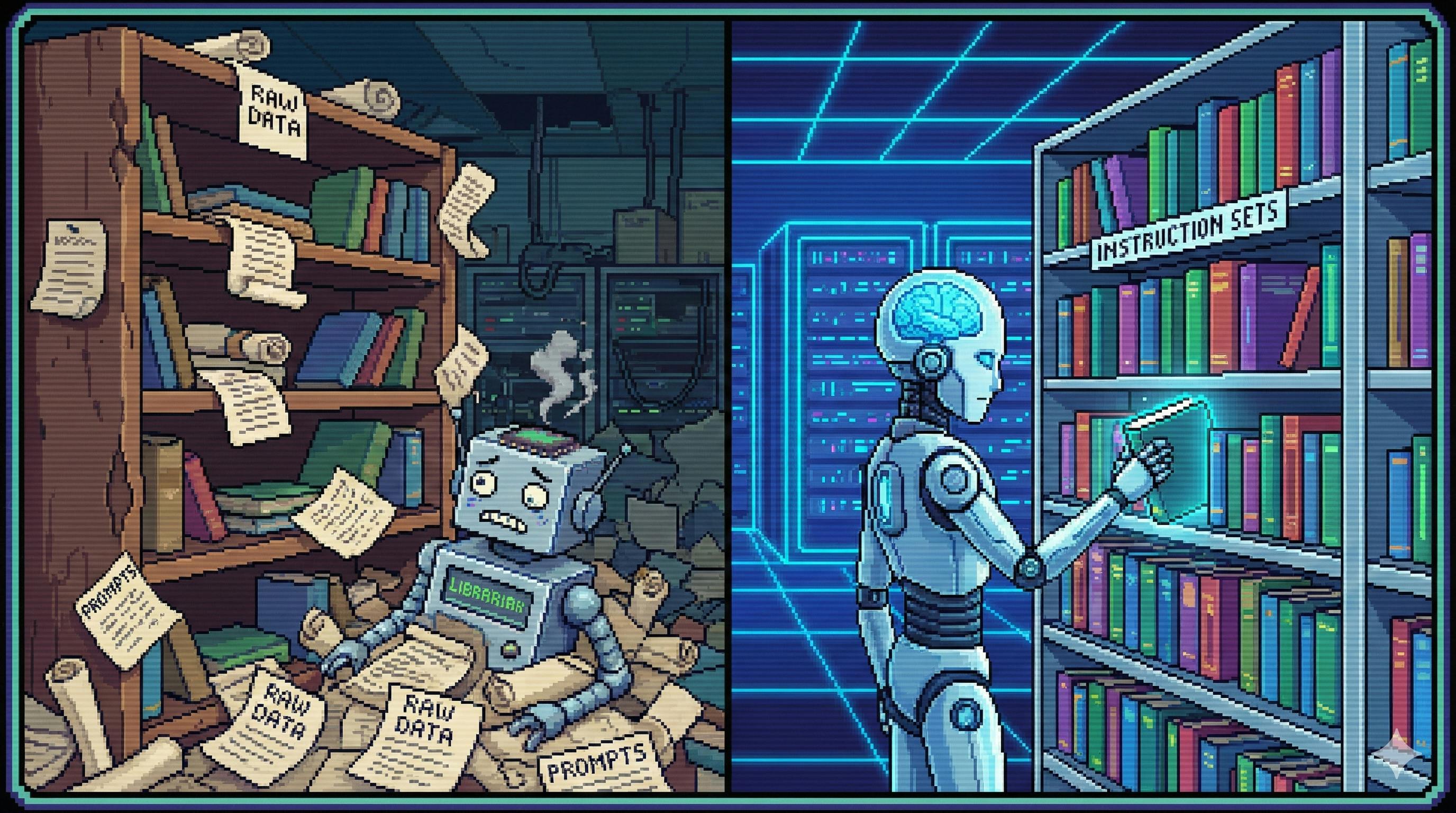

Prompt Tricks Don’t Scale. Instruction Tuning Does.

If you’ve ever shipped an LLM feature, you know the pattern:

- You craft a gorgeous prompt.

- It works… until real users show up.

- Suddenly your “polite customer support bot” becomes a poetic philosopher who forgets the refund policy.

That’s the moment you realise: prompting is configuration; Instruction Tuning is installation.

Instruction Tuning is how you teach a model to treat your requirements like default behaviour—not a suggestion it can “creatively interpret”.

What Is Instruction Tuning, Really?

Definition

Instruction Tuning is a post-training technique that trains a language model on Instruction–Response pairs so it learns to:

- understand the intent behind a task (“summarise”, “classify”, “extract”, “fix code”)

- produce output that matches format, tone, and constraints reliably

In other words, you’re moving from:

“Generate coherent text”

to:

“Execute tasks like a dependable system component.”

A quick intuition

A base model may respond to:

“Summarise this document”

with something long, vague, and slightly dramatic.

A tuned model learns:

- what counts as a summary

- what “key points” actually means

- how to keep it short, structured, and consistent

Instruction Tuning vs Prompt Tuning: The Difference That Matters

| Dimension | Instruction Tuning | Traditional Prompt Tuning |
|---|---|---|
| Where it acts | Model weights (behaviour changes) | Input text only |
| Data need | Needs many labelled examples | Needs few examples |
| Best for | Long-term, repeated tasks (support, compliance, extraction) | Ad-hoc tasks (one-off writing, translation) |
| Persistence | Behaviour sticks after training | You redesign prompts repeatedly |
| Barrier | Higher (data + training setup) | Lower (just write prompts) |

If you’re running the same workflows every day, Instruction Tuning pays off fast.

The Custom Instruction Library: Your “Ammo Depot”

Instruction Tuning is the strategy. A custom instruction library is the ammunition.

It’s a curated dataset that encodes:

- what tasks you care about

- how they should be handled

- how outputs must look and sound

Think of it as your model’s operating manual, written in training data.

What a High-Quality Instruction Pair Must Contain

Every example should have four parts. No shortcuts.

- Task type: e.g., summarisation, translation, extraction, code repair, sentiment analysis

- Instruction: clear, unambiguous, with output constraints (format, length, tone)

- Input: the raw material, such as a document, conversation log, code snippet or medical notes

- Reference response: the ideal output; correct, complete, consistent, and formatted

A simple schema (JSONL)

```json
{"task":"refund_support","instruction":"Reply in friendly UK English. Confirm refund status and estimate payout time. Avoid jargon.","input":"Order #A91822. I requested a refund yesterday due to a faulty item. What's happening and when will I get the money?","output":"Hi! I can see your refund request for order A91822 is approved and currently processing. You should receive the funds within 1–3 working days (depending on your bank). You don’t need to do anything else; we’ll notify you once it’s completed."}
```

Notice what’s happening: tone, region, and format are all part of the spec.
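Because every pair needs all four parts, it is worth checking the library mechanically before training. The sketch below is minimal and rests on one assumption: each line of the JSONL file is one JSON object with exactly the four fields shown above (`task`, `instruction`, `input`, `output`). The `validate_pairs` helper is hypothetical.

```python
import json

REQUIRED_FIELDS = ("task", "instruction", "input", "output")

def validate_pairs(jsonl_text: str) -> list[str]:
    """Return human-readable problems found in a JSONL instruction library."""
    problems = []
    for line_no, line in enumerate(jsonl_text.splitlines(), start=1):
        if not line.strip():
            continue  # blank lines are ignored
        try:
            record = json.loads(line)
        except json.JSONDecodeError as exc:
            problems.append(f"line {line_no}: invalid JSON ({exc.msg})")
            continue
        if not isinstance(record, dict):
            problems.append(f"line {line_no}: expected a JSON object")
            continue
        for field in REQUIRED_FIELDS:
            value = record.get(field)
            # Every part must be present and a non-empty string: no shortcuts.
            if not isinstance(value, str) or not value.strip():
                problems.append(f"line {line_no}: missing or empty '{field}'")
        extra = set(record) - set(REQUIRED_FIELDS)
        if extra:
            problems.append(f"line {line_no}: unexpected fields {sorted(extra)}")
    return problems

sample = (
    '{"task":"refund_support","instruction":"Reply in friendly UK English.","input":"Order #A91822 ...","output":"Hi! ..."}\n'
    '{"task":"refund_support","instruction":"","input":"x","output":"y"}'
)
print(validate_pairs(sample))  # ["line 2: missing or empty 'instruction'"]
```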

Design Principles That Actually Move the Needle

1) Coverage: hit the long tail, not just the happy path

If you’re tuning for e-commerce support, don’t only include:

- “Where’s my parcel?”

- “I want a refund.”

Also include the messy real world:

- partial refunds

- missing items

- “Royal Mail says delivered but it isn’t”

- chargebacks

- angry customers who won’t provide an order number

A model trained on only “clean” scenarios will panic the first time the input isn’t.
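Suppose each pair carries a scenario tag. That tag is a hypothetical field, since the schema above only has `task`. With it, a few lines of Python show which long-tail scenarios are under-represented. The scenario names and the threshold of 20 are arbitrary illustrations, not recommendations.

```python
from collections import Counter

def coverage_gaps(scenarios: list[str], required: list[str], min_count: int = 20) -> dict[str, int]:
    """Return every required scenario with fewer than min_count examples, mapped to its count."""
    counts = Counter(scenarios)  # a scenario that never appears counts as 0
    return {name: counts[name] for name in required if counts[name] < min_count}

# Hypothetical scenario tags, one per instruction pair in the library.
library_tags = ["parcel_status"] * 120 + ["refund"] * 150 + ["partial_refund"] * 4
required = ["parcel_status", "refund", "partial_refund", "missing_item",
            "delivered_not_received", "chargeback", "no_order_number"]
print(coverage_gaps(library_tags, required))
# → {'partial_refund': 4, 'missing_item': 0, 'delivered_not_received': 0, 'chargeback': 0, 'no_order_number': 0}
```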

2) Precision: remove ambiguity from your instructions

Bad instruction:

“Handle this user request.”

Better instruction:

“Classify the sentiment as Positive/Neutral/Negative, then give a one-sentence reason.”

Best instruction:

“Return JSON exactly: {"label": "Positive|Neutral|Negative", "reason": "..."}. No extra text.”
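The "best" instruction is also easy to check automatically. You can apply the check when cleaning reference outputs and again when evaluating the tuned model. The sketch below is minimal, and `parse_sentiment` is a hypothetical helper that rejects anything other than the exact JSON shape.

```python
import json

ALLOWED_LABELS = {"Positive", "Neutral", "Negative"}

def parse_sentiment(raw: str) -> dict:
    """Parse a reply that must be exactly {"label": ..., "reason": ...}; raise ValueError otherwise."""
    try:
        data = json.loads(raw)  # tolerates surrounding whitespace, but not extra prose
    except json.JSONDecodeError as exc:
        raise ValueError(f"not pure JSON: {exc.msg}") from exc
    if not isinstance(data, dict) or set(data) != {"label", "reason"}:
        raise ValueError("keys must be exactly 'label' and 'reason'")
    if not isinstance(data["label"], str) or data["label"] not in ALLOWED_LABELS:
        raise ValueError(f"invalid label: {data['label']!r}")
    if not isinstance(data["reason"], str) or not data["reason"].strip():
        raise ValueError("reason must be a non-empty string")
    return data

print(parse_sentiment('{"label": "Negative", "reason": "The item arrived broken."}')["label"])  # Negative
```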

3) Diversity: vary inputs aggressively

Include:

- short vs long inputs

- slang, typos, mixed languages

- formal tickets vs WhatsApp-style messages

- different difficulty levels

Your production users are a chaos generator. Train accordingly.

4) Consistency: standardise output formats like you mean it

If 200 “Order status” answers are formatted differently, the model will learn inconsistency.

Pick a template and enforce it:

- fixed headings

- stable phrasing

- stable field order
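One way to enforce a single template is to generate reference outputs from it rather than typing each one by hand. The sketch below is minimal and uses Python's `string.Template`. The headings, field names and `render_order_status` helper are hypothetical.

```python
from string import Template

# One fixed template: same headings, same phrasing, same field order every time.
ORDER_STATUS_TEMPLATE = Template(
    "Order: $order_id\n"
    "Status: $status\n"
    "Expected delivery: $eta\n"
    "Next step: $next_step"
)

def render_order_status(fields: dict[str, str]) -> str:
    """Render a reference answer; substitute() raises KeyError if any field is missing."""
    return ORDER_STATUS_TEMPLATE.substitute(fields)

print(render_order_status({
    "order_id": "A91822",
    "status": "Dispatched",
    "eta": "1–2 working days",
    "next_step": "No action needed.",
}))
```

Because a missing field raises an error instead of silently producing a differently shaped answer, inconsistent references are caught before they reach training.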

Three Domain Examples (With Slightly More Realism)

1) Healthcare: extract case notes into a structured table (with safety)

Task: clinical note extraction

Instruction: extract fields, present as a table, avoid inventing missing values

Input (example):

Patient: male, 45. Complains of cough and phlegm for 8 weeks, worsened with chest tightness for 1 week. History: hypertension x5 years, on nifedipine. CT: inflammation in both lower lobes. WBC: 12.5×10^9/L (high). Preliminary diagnosis: community-acquired pneumonia; hypertension grade 2.

Output (example):

| Category | Details |
|---|---|
| Demographics | Age: 45; Sex: Male |
| Presenting complaint | Cough and phlegm for 8 weeks; worse with chest tightness for 1 week |
| History | Hypertension for 5 years; nifedipine |
| Tests | CT: bilateral lower-lobe inflammation; WBC: 12.5×10^9/L (elevated) |
| Preliminary diagnosis | Community-acquired pneumonia; Hypertension (grade 2) |

Note: In medical settings, you typically add safety rules (“don’t diagnose beyond provided evidence”) directly into the instruction library.
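One way to bake "avoid inventing missing values" into the library is to build every reference table from the same field list. Anything the note doesn't mention then gets an explicit placeholder instead of a guess. The sketch below is minimal: the field list mirrors the table above, while the "Not stated" placeholder and the `to_markdown_table` helper are hypothetical.

```python
FIELDS = ["Demographics", "Presenting complaint", "History", "Tests", "Preliminary diagnosis"]

def to_markdown_table(extracted: dict[str, str]) -> str:
    """Render extracted fields as a Category | Details table.

    Missing or empty fields become "Not stated" rather than being guessed,
    so the model learns to leave gaps visible instead of filling them.
    """
    rows = ["| Category | Details |", "|---|---|"]
    for field in FIELDS:
        value = (extracted.get(field) or "").strip().replace("|", "\\|")  # escape pipes
        rows.append(f"| {field} | {value or 'Not stated'} |")
    return "\n".join(rows)

print(to_markdown_table({"Demographics": "Age: 45; Sex: Male", "Tests": ""}))
```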

2) Programming: fix broken Python (and explain why)

Input (broken code):

```python
def calculate_average(nums):
    total = 0
    for n in nums
        total += n
    return total / len(nums)
```

Output (fixed + explanation):

```python
def calculate_average(nums):
    total = 0
    for n in nums:  # missing colon fixed
        total += n
    return total / len(nums)
```

Explanation: a for loop header in Python must end with a colon (:). Without it, Python raises a SyntaxError before any code runs, because it can't parse the loop body.

(Also: the accumulator is named total rather than sum, because a variable called sum would shadow Python's built-in sum().)
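Code-repair pairs can be sanity-checked automatically. The broken input should fail to compile, and the reference fix should compile and run. The sketch below is minimal and uses Python's built-in `compile()` and `exec()`. Only run `exec()` on code you trust, such as your own reference outputs. The `parses` helper is hypothetical.

```python
BROKEN = """
def calculate_average(nums):
    total = 0
    for n in nums
        total += n
    return total / len(nums)
"""

FIXED = BROKEN.replace("for n in nums\n", "for n in nums:\n")

def parses(source: str) -> bool:
    """Return True if the source compiles, False if it raises SyntaxError."""
    try:
        compile(source, "<example>", "exec")
    except SyntaxError:
        return False
    return True

print(parses(BROKEN))  # False
print(parses(FIXED))   # True

namespace = {}
exec(FIXED, namespace)  # trusted reference code only
print(namespace["calculate_average"]([2, 4, 6]))  # 4.0
```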

3) Education: generate a Year 4 maths word problem (UK flavour)

Instruction:

- Generate one word problem

- Topic: two-digit × one-digit multiplication

- Include: scenario, known facts, question

- Use £ and UK context

Output:

Scenario: School fair

Known facts: Each ticket costs £24. A parent buys 3 tickets.

Question: How much do they pay in total?

Implementation Workflow: From Library to Tuned Model

Instruction Tuning is a pipeline. If you skip steps, you pay later.

Step 1: Build the dataset

Sources

- Internal logs: support tickets, agent replies, summaries

- Public datasets: Alpaca, FLAN, ShareGPT (filter aggressively)

- Human labelling: for tasks where correctness matters

Cleaning checklist

- remove vague instructions (“handle this”, “do the thing”)

- remove wrong/incomplete outputs

- deduplicate near-identical samples

- standardise formatting and field names
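The mechanical parts of that checklist can be scripted; judging whether an output is wrong or incomplete still needs a human. The sketch below is minimal. It treats "near-identical" as "identical after lowercasing, dropping punctuation and collapsing whitespace", and its list of vague instructions is a hypothetical starting point.

```python
import re

VAGUE_INSTRUCTIONS = {"handle this", "do the thing"}

def normalise(text: str) -> str:
    """Lowercase, drop punctuation and collapse whitespace so trivial variants compare equal."""
    text = re.sub(r"[^\w\s]", "", text.lower())
    return " ".join(text.split())

def clean(pairs: list[dict]) -> list[dict]:
    """Drop vague instructions, empty outputs and normalised duplicates (first copy wins)."""
    seen = set()
    kept = []
    for pair in pairs:
        instruction = normalise(pair.get("instruction", ""))
        if instruction in VAGUE_INSTRUCTIONS or not pair.get("output", "").strip():
            continue
        key = (instruction, normalise(pair.get("input", "")))
        if key in seen:
            continue  # near-identical to a pair we already kept
        seen.add(key)
        kept.append(pair)
    return kept
```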

Split

- Train: 70–80%

- Validation: 10–15%

- Test: 10–15%

No leakage. No overlap.
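A simple way to rule out leakage from duplicates is to assign each pair to a split by hashing its normalised content instead of shuffling randomly. Identical pairs then always land in the same split. The sketch below is minimal; the 80/10/10 default is one choice within the ranges above. Hashing does not catch paraphrases, so pairs from the same conversation or customer may still need grouping by hand. The `assign_split` helper is hypothetical.

```python
import hashlib

def assign_split(instruction: str, input_text: str,
                 val_frac: float = 0.1, test_frac: float = 0.1) -> str:
    """Deterministically map a pair to 'train', 'validation' or 'test'."""
    # Normalise each part separately, then join with a separator so parts can't blur together.
    key = "\n".join(" ".join(part.lower().split()) for part in (instruction, input_text))
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 2**32  # roughly uniform in [0, 1)
    if bucket < test_frac:
        return "test"
    if bucket < test_frac + val_frac:
        return "validation"
    return "train"

print(assign_split("Summarise the ticket", "Parcel late"))
```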

Step 2: Choose the base model (with reality constraints)

Pick based on:

- task complexity

- deployment constraints

- compute budget

A practical rule:

- 7B–13B models + LoRA work well for many enterprise workflows

- bigger models help with harder reasoning and richer generation

- if you need on-device or edge, plan for quantisation and smaller footprints

Step 3: Fine-tune strategy and hyperparameters

Typical starting points (LoRA):

- learning rate: 1e-4 to 5e-5

- batch size: 8–32 (use gradient accumulation if needed)

- epochs: 3–5

- early stopping if validation stops improving

LoRA (Low-Rank Adaptation) is popular because it's efficient: the base weights stay frozen, and you train small low-rank adapter matrices instead of all weights.
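To see why this is cheap, here is a minimal pure-Python sketch of the LoRA update for one weight matrix. The frozen weight W is combined with a low-rank product B·A scaled by alpha/r, and only A and B are trained. The 4096 dimension is an assumption (a typical hidden size for 7B-class models). Real training would use a library such as Hugging Face PEFT rather than hand-written matrix code.

```python
def lora_param_counts(d_in: int, d_out: int, r: int) -> tuple[int, int]:
    """Return (full fine-tune params, LoRA trainable params) for one d_out x d_in matrix."""
    full = d_in * d_out
    lora = r * (d_in + d_out)  # A is r x d_in, B is d_out x r
    return full, lora

def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def lora_forward(W, A, B, x, alpha: float, r: int) -> list[float]:
    """h = W x + (alpha / r) * B (A x). W is frozen; only A and B receive gradients.

    B is usually initialised to zeros, so training starts from the base model's behaviour.
    """
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]

full, lora = lora_param_counts(4096, 4096, 8)
print(full, lora)  # 16777216 65536
```

At rank 8, the adapter for this one matrix has 256 times fewer trainable parameters than the matrix itself.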

Step 4: Evaluate like you’re going to ship it

Quantitative (depends on task)

- classification accuracy (for extract/classify)

- BLEU/ROUGE (for summarise/translate — imperfect but useful)

- perplexity (language quality proxy)
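For intuition about what ROUGE measures, here is a minimal sketch of ROUGE-1 F1: unigram overlap between a reference and a candidate. It uses plain lowercase whitespace tokenisation with no stemming or punctuation handling. For reported numbers, an established package such as Google's `rouge-score` is a safer choice.

```python
from collections import Counter

def rouge1_f1(reference: str, candidate: str) -> float:
    """Unigram-overlap F1 (ROUGE-1) with simple lowercase whitespace tokenisation."""
    ref = Counter(reference.lower().split())
    cand = Counter(candidate.lower().split())
    overlap = sum((ref & cand).values())  # matching unigrams, clipped to the smaller count
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)

print(rouge1_f1("refund approved and processing", "your refund is approved"))  # 0.5
```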

Qualitative (the “would you trust this?” test)

Get 3–5 reviewers to score:

- instruction adherence

- completeness

- format correctness

- domain alignment

Also run scenario tests: 10–20 realistic edge cases.

Step 5: Deploy + keep tuning

After deployment:

- log response times, failures, “format drift”

- collect bad outputs and user feedback

- convert them into new instruction pairs

- periodically re-tune

Instruction libraries are living assets.

A Practical Case Study: E‑Commerce Support

Goal

Teach a model to handle:

- order status

- refunds

- product recommendations

with:

- consistent format

- friendly UK English

- accurate, policy-compliant answers

Dataset (example proportions)

- 300 order status

- 400 refunds

- 300 recommendations

Training setup (example)

- base model: a chat-tuned 7B model

- LoRA rank: 8

- LoRA dropout: 0.05

- epochs: 3

- one machine with a consumer-class GPU (or a cloud instance)

Deployment trick

Quantise to 4-bit / 8-bit for serving efficiency, then integrate with your order/refund systems:

- model drafts the response

- the system injects verified order facts

- the final message is generated with hard constraints

This hybrid approach can sharply reduce hallucinated order details, because the facts come from your systems rather than from the model's memory.
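Here is a minimal sketch of the fact-injection and hard-constraint step. It rests on two assumptions. First, the tuned model drafts replies with placeholders such as $order_id and $eta instead of writing facts itself. Second, order IDs follow the one-letter-plus-five-digits shape of the example (A91822). The `finalise_reply` helper is hypothetical.

```python
import re
from string import Template

def finalise_reply(draft: str, facts: dict[str, str]) -> str:
    """Fill a model-drafted reply with verified order facts, then enforce hard constraints."""
    # KeyError if the draft asks for a fact the order system didn't provide.
    reply = Template(draft).substitute(facts)
    # Hard constraint: every order-like ID in the final text must match the verified one.
    for order_id in re.findall(r"\b[A-Z]\d{5}\b", reply):
        if order_id != facts.get("order_id"):
            raise ValueError(f"unverified order ID in reply: {order_id}")
    return reply

facts = {"order_id": "A91822", "eta": "1–3 working days"}  # from the order/refund system
print(finalise_reply("Hi! Your refund for order $order_id is processing. "
                     "Expect the funds within $eta.", facts))
```

Note that `string.Template` treats `$` as special, so a literal dollar sign in a draft must be written `$$`. For £-denominated UK replies this rarely matters.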

Common Failure Modes (And Fixes)

1) “We don’t have enough data”

If you’re below ~500 high-quality pairs, results can be shaky.

Fix:

- generate candidate pairs with a strong model

- have humans review and correct

- do light data augmentation (rephrase, swap names, vary order numbers)
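Rephrasing usually needs a strong model or a human, but swapping names and order numbers can be scripted. The sketch below is minimal and assumes pairs are written with hypothetical $name and $order_id placeholders. The name pool and ID format are made up for illustration.

```python
import random
from string import Template

NAMES = ["Priya", "Tom", "Aisha", "Callum"]  # hypothetical substitution pool

def augment(template_pair: dict[str, str], n: int, seed: int = 0) -> list[dict[str, str]]:
    """Expand a pair written with $name / $order_id placeholders into n concrete variants."""
    rng = random.Random(seed)  # seeded so the augmented dataset is reproducible
    variants = []
    for _ in range(n):
        values = {"name": rng.choice(NAMES), "order_id": f"A{rng.randint(10000, 99999)}"}
        # The same values go into every field, so the output stays consistent with its input.
        variants.append({k: Template(v).safe_substitute(values) for k, v in template_pair.items()})
    return variants

pair = {
    "task": "order_status",
    "instruction": "Reply in friendly UK English.",
    "input": "Hi, it's $name. Where is order #$order_id?",
    "output": "Hi $name! Order $order_id is on its way.",
}
for variant in augment(pair, 3):
    print(variant["input"], "->", variant["output"])
```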

2) Overfitting: great on train, bad on test

Fix:

- reduce epochs

- add diversity

- add dropout/weight decay

- use early stopping based on validation performance
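Early stopping amounts to watching the validation loss and keeping the best checkpoint once it stops improving for a few epochs. The sketch below is minimal, and its `patience` and `min_delta` defaults are illustrative. Training frameworks provide this built in; Hugging Face Transformers, for example, has `EarlyStoppingCallback` with `early_stopping_patience` and `early_stopping_threshold`.

```python
def early_stopping_epoch(val_losses: list[float], patience: int = 2, min_delta: float = 0.0) -> int:
    """Return the 0-based epoch whose checkpoint to keep.

    Training stops once validation loss has failed to improve by more than
    min_delta for `patience` consecutive epochs; the best epoch so far is kept.
    """
    best_epoch, best_loss, bad_epochs = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_epoch, best_loss, bad_epochs = epoch, loss, 0
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                break  # stop training here
    return best_epoch

print(early_stopping_epoch([1.2, 0.9, 0.85, 0.88, 0.91, 0.80]))  # 2
```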

3) Domain terms confuse the model

Fix:

- add “term explanation” examples

- include lightweight domain Q&A pairs so the model builds vocabulary

4) Output format keeps drifting

Fix:

- standardise reference outputs

- make formatting requirements explicit

- add negative examples (“do not output extra text”)

The Future: Auto-Instructions, Multimodal, and Edge Fine-Tuning

Where this is heading:

- Auto-instruction generation: models produce draft datasets, humans curate

- Multimodal tuning: text + images + audio instructions become normal (e.g., “analyse this product photo and write a listing”)

- Lighter tuning on edge devices: smaller models + efficient adapters + on-device updates

Final Take

Prompting is a great way to ask a model to behave. Instruction Tuning is how you teach it to behave.

If you want reliable outputs across many tasks, stop writing prompts like spells—and start building a custom instruction library like a real product asset:

- comprehensive coverage

- precise instructions

- diverse inputs

- consistent outputs

That’s how you get “one fine-tune, many tasks” without babysitting the model forever.