Table of Links

2 Related Work

2.2 Creativity Support Tools for Animation

2.3 Generative Tools for Design

4 Logomotion System and 4.1 Input

4.2 Preprocess Visual Information

4.3 Visually-Grounded Code Synthesis

5.1 Evaluation: Program Repair

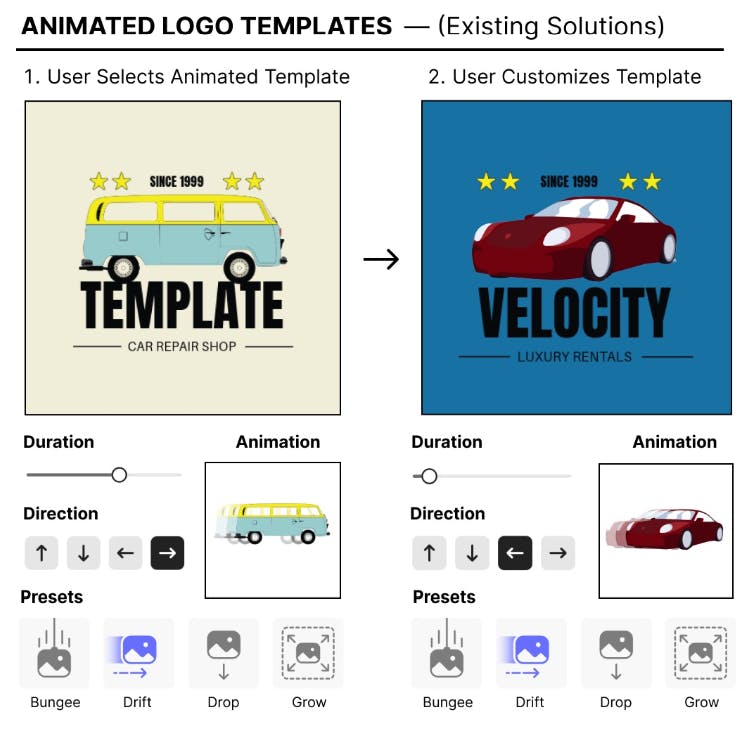

7 Discussion and 7.1 Breaking Away from Templates

7.2 Generating Code Around Visuals

2.3 Generative Tools for Design

Generative AI technologies have popularized natural language as a new form of interaction for content creation. LLMs [19] have shown promise in brainstorming support [44], script and writing assistance [29, 59, 61], and sensemaking [55]. Text-to-image models [14, 15, 50, 51] have been shown to be effective at visual asset generation for visual blending [28], news illustration [44], storyboarding [59], product design [45], world building [24], and video generation [43]. Generative technologies have also begun to be applied to motion design and animation [31, 43].

The closest generative work to ours is Keyframer, a study of how novice and expert designers prompt GPT for animations. A major finding is that 84% of prompts were semantic in nature: users wanted to describe high-level directions like “make the clouds wiggle” more often than low-level changes such as adjusting opacity. This suggests that people want semantically relevant animations, that is, motion that characterizes how an element might move in real life.

LogoMotion studies a similar problem (animating digital layouts, but in the logo domain), and we also use LLMs for code synthesis. However, we build upon this direction by introducing a pipeline that performs code synthesis and program repair in a visually-grounded manner. Keyframer generated animation code without using visual context from the canvas and had less built-in support for the grouping and timing of design elements. The preprocessing and image understanding implemented within LogoMotion help it come up with sophisticated design concepts that specify hero moments for the primary element and handle the sequencing of other design elements (e.g., synchronized secondary elements, text animation). Furthermore, we compare our approach to state-of-the-art baselines and show a significant improvement in content awareness.

Authors:

(1) Vivian Liu, Columbia University (vivian@cs.columbia.edu);

(2) Rubaiat Habib Kazi, Adobe Research (rhabib@adobe.com);

(3) Li-Yi Wei, Adobe Research (lwei@adobe.com);

(4) Matthew Fisher, Adobe Research (matfishe@adobe.com);

(5) Timothy Langlois, Adobe Research (tlangloi@adobe.com);

(6) Seth Walker, Adobe Research (swalker@adobe.com);

(7) Lydia Chilton, Columbia University (chilton@cs.columbia.edu).

This paper is