Problem Statement

"Moving from one proven platform to another is less about change and more about aligning with the future direction of the organization."

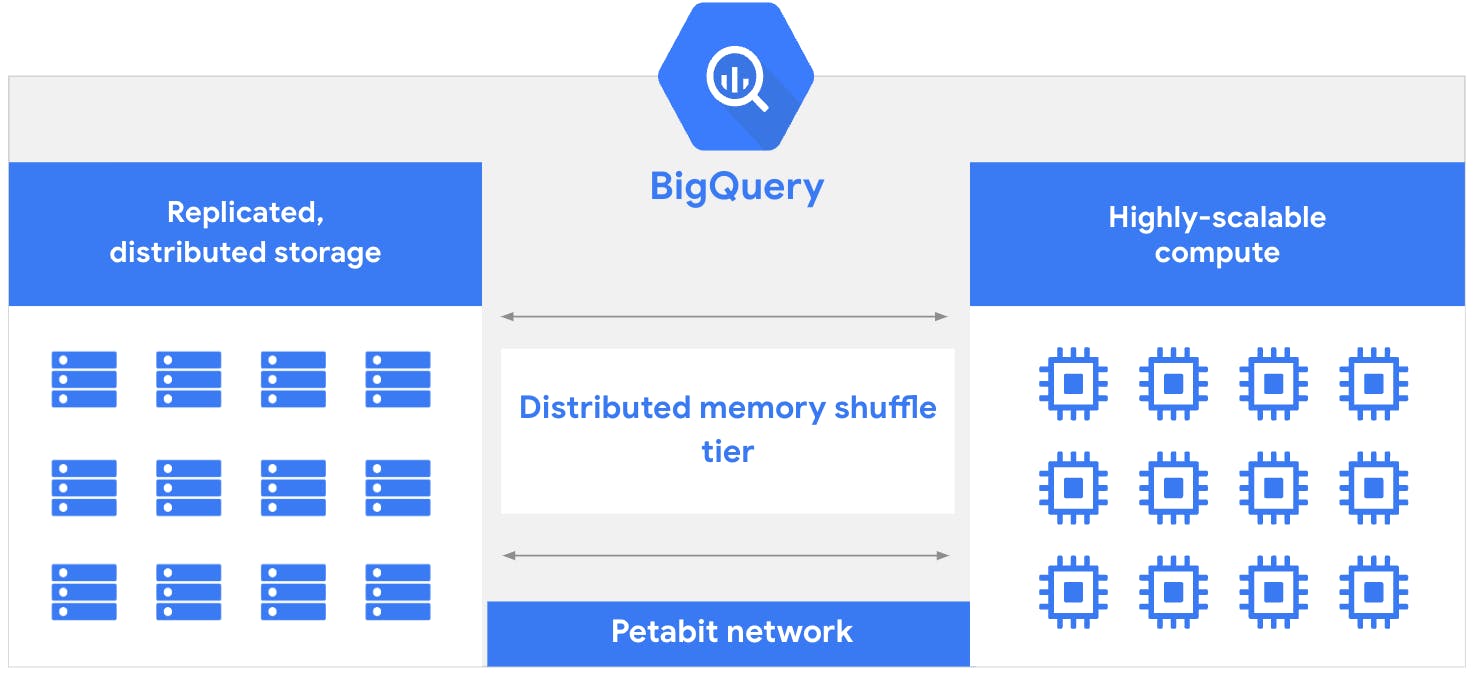

Cloud data lake migration comprises a wide scope, such as lifting and shifting tables, migrating workloads, setting up the network, evaluating Data Ingestion strategies, reviewing the Data Consumption strategies, analyzing Analytics reporting strategies, making data available for Data science & AI, training resources, and many more. As enterprises evolve toward BigQuery and the broader Google Cloud ecosystem, metadata becomes an insightful hub: it tells us which tables matter most, how workloads are used, and where to focus optimization efforts. How can it be used for futuristic applications resembling Data Cataloging?

But here’s the challenge—managing and making sense of thousands of Database objects and usage metrics during a migration is complicated. I planned to confront this challenge using BigQuery’s native AI capabilities. By combining ML.GENERATE_EMBEDDING and ML.GENERATE_TEXT with vector indexing and Retrieval-Augmented Generation (RAG), I envisioned static metadata into an intelligent knowledge layer.

The result? Smarter migration planning, real-time tracking, and even a metadata catalog that teams can search in natural language. A go-to guide for the Data Engineering and Data Analytics team. It builds a key portion of the foundation of “Data-as-a-service”.

Solution: Leveraging BigQuery AI for Intelligent Metadata Search and Migration Tracking

To address the challenges of discovering existing Data Lake environments, extracting metadata of Data Lake Objects, and using it for tracking progress during a cloud data lake migration, I leveraged BigQuery’s AI capabilities, specifically ML.GENERATE_EMBEDDING and ML.GENERATE_TEXT. This approach enables intelligent search across metadata, semantic understanding of table usage, and the ability to query data in natural language.

The solution can be broken down into the following steps:

- Data Preparation

-

Capture Information Schema metadata and usage metrics from the source system.

-

Scramble sensitive data to maintain integrity and compliance while preserving structure (as applicable)

-

- Data Upload to Google Cloud

-

Upload prepared data files to Google Cloud Storage (GCS) as a staging area.

-

Create the necessary BigQuery environment: project, datasets, and tables.

-

Grant IAM roles to ensure proper access and security.

-

- Model Creation

-

Develop a model for generating embeddings of the metadata.

-

Develop a model for semantic text search to enable natural language queries.

-

- Generate Embeddings

-

Vectorize metadata and usage metrics using the embeddings model.

-

- Create Vector Index

-

Build an index on the embeddings for fast approximate nearest neighbor (KNN) search.

-

- Leverage RAG for Contextual Search

-

Perform Retrieval-Augmented Generation (RAG) to retrieve relevant metadata based on queries.

-

- Generate Contextual Responses

- Use the retrieved context to generate meaningful text responses, providing insights into migration progress and metadata exploration.

This solution facilitates migration planning and monitoring and lays the groundwork for a metadata catalog that can serve long-term organizational needs. Wrapping this workflow in Python and Streamlit provides a user-friendly interface, giving the feel of a fully functional metadata tool.

How it works (Pseudo Code)

1. Data Preparation

Metadata about the DataLake is stored in the Information Schema. I used the SQL to extract table metadata from all accessible databases.

SELECT TABLE_CATALOG as database_name, TABLE_SCHEMA as schema_name, TABLE_NAME as table_name, TABLE_TYPE as table_type, ROW_COUNT, BYTES, CREATED, LAST_ALTERED, COMMENT as table_comment FROM INFORMATION_SCHEMA.TABLES WHERE TABLE_SCHEMA NOT IN ('INFORMATION_SCHEMA') ORDER BY TABLE_CATALOG, TABLE_SCHEMA, TABLE_NAME

Similarly, Account Usage was used to retrieve data related to table usage metrics.

SELECT b.TABLE_CATALOG as database_name, b.TABLE_SCHEMA as schema_name, b.TABLE_NAME as table_name, b.ROW_COUNT as table_row_count, COALESCE(SUM(a.read_count),0) as total_read_count_30days, COUNT(DISTINCT a.user_name) as unique_users_30days, MIN(a.query_start_time) as first_access_30days, MAX(a.query_start_time) as last_access_30days FROM INFORMATION_SCHEMA.TABLES b LEFT JOIN ( SELECT ah.query_start_time, ah.user_name, d.value:"objectName"::string AS table_name, COUNT(*) AS read_count FROM ACCOUNT_USAGE.ACCESS_HISTORY ah, LATERAL FLATTEN(input => ah.base_objects_accessed) d WHERE d.value:"objectDomain"::string IN ('Table') AND ah.query_start_time >= DATEADD(day, -1, CURRENT_TIMESTAMP) GROUP BY ah.query_start_time, ah.user_name, table_name ) a ON UPPER(a.TABLE_NAME) = UPPER(b.TABLE_CATALOG||'.'||b.TABLE_SCHEMA||'.'||b.TABLE_NAME) WHERE b.TABLE_SCHEMA NOT IN ('INFORMATION_SCHEMA') GROUP BY b.TABLE_CATALOG, b.TABLE_SCHEMA, b.TABLE_NAME, b.ROW_COUNT ORDER BY total_read_count_30days DESC, b.TABLE_CATALOG, b.TABLE_SCHEMA, b.TABLE_NAME

This method provides metadata for all necessary database objects, such as procedures, functions, views, columns, etc.

Another important consideration I thought of is scrambling the data, as required. It would help when we deal with sensitive data and not just Metadata. The MD5 worked well in maintaining consistency.

For this use case, I’ll only consider Metadata.

import hashlib

# Create deterministic hash for consistent scrambling

hash_object = hashlib.md5(original_name.encode())

hash_hex = hash_object.hexdigest()[:8]

2. Data Upload to Google Cloud

I used the Google Cloud console to upload data files to Google Cloud Storage. The files were organized as a raw data folder and a scrambled data folder, as shown below.

Later, using the BigQuery console, I created tables for each data set. The UI accurately and conveniently reads the schema based on the GCS file and makes the table. It was straightforward.

The final step of Data preparation and organization is granting access to the principal. Again with UI, I got all the grants assigned to the account.

3. Model Creation

The approach leverages metadata from the existing environment to support migration planning and strategy for BigQuery. By analyzing schema information and usage metrics, the process enables the design of the migration roadmap and validation of migration progress over time. Furthermore, once consolidated in BigQuery, this metadata can be repurposed as a searchable Data Catalog, adding visibility and governance to the Data Lake.

Having said this, I used two functions:

a. ML.GENERATE_TEXT:

The function generates text responses based on a prompt, similar to Generative AI chat. For this use case, I used the Gemini model in the function. It has SQL-based syntax and will be run on BigQuery UI, making it convenient.

CREATE OR REPLACE MODEL <project id>.<data set>.<model name> REMOTE WITH CONNECTION DEFAULT OPTIONS (ENDPOINT = 'gemini-2.0-flash');

b. ML.GENERATE_EMBEDDING:

The function generates text embeddings. I have used Gemini; however, other available models are Mistral, Clave, Lama, etc

CREATE OR REPLACE MODEL <project id>.<data set>.<model name> REMOTE WITH CONNECTION DEFAULT OPTIONS (ENDPOINT = 'gemini-embedding-001');

4. Generate Embeddings

Using Models from Point 3, I created metadata embedding. In the first attempt, I selected all columns and then considered only the columns needed as per my use case. I concatenated these columns for embedding, and the result is also promising.

The mandatory requirement is to have one string column. After executing this SQL, new columns appear with embeddings in integer format.

CREATE OR REPLACE TABLE <project id>.<dataset id>.<Existing Table Name_embedding> AS SELECT * FROM ML.GENERATE_EMBEDDING( MODEL <From point 3>, (SELECT *,'SNOWFLAKE' AS content FROM <project id>.<dataset id>.<Existing Table> ) ) WHERE LENGTH(ml_generate_embedding_status) = 0;`

The SELECT clause {\*,DATABASE_NAME||SCHEMA_NAME||TABLE_NAME } gave me the expected result.

5. Create Vector Index

Like a Table index, a vector index on an embedding column helps vector search. Here, the technique used is nearest neighbor. It improves vector search performance and returns more approximate results. There are multiple parameter that helps in search performance:

a. index_type - IVF: Specifying IVF builds the vector index as an inverted file index (IVF). An IVF uses a k-means algorithm to cluster the vector data, and then partitions the vector data based on those clusters (Reference). b. distance_type has three options: euclidean, cosine, and dot_product. c. ivf_options "The only supported option is num_lists. Specify an INT64 less than or equal to 5,000 that determines how many lists the IVF algorithm creates. During indexing, vectors are assigned to the list corresponding to their nearest cluster centroid. num_lists controls query tuning granularity. (Reference)

Here is the SQL for creating a Vector Index.

CREATE OR REPLACE VECTOR INDEX <> ON <embedding table from point 4>(ml_generate_embedding_result) OPTIONS(index_type = 'IVF', distance_type = 'COSINE', ivf_options = '{"num_lists":50}');

6. Leverage RAG for Contextual Search

The vector embeddings help find the relevant context from the data store, enabling the LLM to provide accurate results with a given prompt. In the two queries below and their results, I am showing with the given text (aliased as content) whether the vector search returns the expected result. My data store pulls data from Adobe Campaign, a marketing platform. So when the text was ‘Data of ADOBE CAMPAIGN', the vector search returned text. There was no result text when the text was Data

Additionally, the WHERE clause uses “1 - COSINE_DISTANCE(base.ml_generate_embedding_result, query.ml_generate_embedding_result) > 0.70.” This is another functionality that helps identify the relevant text. In my case, the value of “0.70” worked. It is basically a cosine similarity measure of two vectors.

Query 1:

SELECT query.query, base.database_name, base.schema_name, base.table_name FROM VECTOR_SEARCH( TABLE <point 4>, 'ml_generate_embedding_result',(SELECT ml_generate_embedding_result, content AS query FROM ML.GENERATE_EMBEDDING(MODEL < point 3>, (SELECT 'Data of ADOBE CAMPAIGN' AS content))),top_k => 1, options => '{"fraction_lists_to_search": 0.01}') WHERE 1 - COSINE_DISTANCE(base.ml_generate_embedding_result, query.ml_generate_embedding_result) > 0.70;

Result:

Query 2:

SELECT query.query, base.database_name, base.schema_name, base.table_name FROM VECTOR_SEARCH(TABLE <point 4>, 'ml_generate_embedding_result',(SELECT ml_generate_embedding_result, content AS query FROM ML.GENERATE_EMBEDDING(MODEL < point 3>,(SELECT 'Data of ADOBE RESEARCH' AS content))),top_k => 1, options => ‘{"fraction_lists_to_search": 0.01}') WHERE 1 - COSINE_DISTANCE(base.ml_generate_embedding_result, query.ml_generate_embedding_result) > 0.70;

Result:

7. Generate Contextual Responses

I am fulfilling two use cases with this RAG solution.

a. metadata from existing DataLake helps organize and plan the migration to a new cloud-based Data warehouse solution. Taking a small step. As part of the scope determination, there are questions. How many tables are there in a schema? What is the size of each table in that schema? Let’s try to get this answer.

User Question 1: How many tables are there in a schema?

SELECT ml_generate_text_llm_result AS generated, prompt FROM ML.GENERATE_TEXT( MODEL <point 3 text model>,(SELECT CONCAT('You are a data analyst that focuses on understanding existing Data Lake and plan the migration strategy to another efficient and cost effective platform, organize the task based on volumne, priority. With access to a vector index built from SaaS Cloud database"s information schema columns metadata. When a user asks how many tables are in any schema answer it based on the context retrieved from vector search. If you get list of tables then answer the cound and name of the tables. If the question is find unique users on this tables in last 30 days then answer based on the column value.', 'Instructions: If the object name mentioned in the user"s question exactly matches in the retrieved metadata content, respond with the context retrieved" If the object name is not found in the retrieved content, respond with: "No, the dataset is yet to be pulled into the data lake." Do not speculate or provide partial matches. Only respond based on exact or clearly matching object names in the retrieved metadata. Example Question:"Is the object customer_orders_2023 available in the ata lake?','Based on above context can you tell how many tables are in schema ADOBE_CAMPAIGN ',STRING_AGG( FORMAT("Database Name: %s, Database Schema: %s, Table Name: %s, Unique Visitor 30 days: %d", base.database_name, base.schema_name, base.table_name, base.unique_users_30days), ',\n')) AS prompt,FROM VECTOR_SEARCH(TABLE <point 4 embedding table>, 'ml_generate_embedding_result',(SELECT ml_generate_embedding_result,content AS query FROM ML.GENERATE_EMBEDDING( MODEL <point 3 embedding model>, (SELECT 'schema: ADOBE_CAMPAIGN' AS content))), top_k => 100, options => '{"fraction_lists_to_search": 0.01}') ),STRUCT(600 AS max_output_tokens, TRUE AS flatten_json_output));`

Result:

User Question 2: What is the size of each table in that schema?

SELECT ml_generate_text_llm_result AS generated, prompt FROM ML.GENERATE_TEXT( MODEL <point 3 text model>, (SELECT CONCAT('You are a data analyst that focuses on understanding existing Data Lake and plan the migration strategy to another efficient and cost effective platform, organize the task based on volumne, priority. With access to a vector index built from SaaS Cloud database"s information schema columns metadata. When a user asks how many tables are in any schema answer it based on the context retrieved from vector search. If you get list of tables then answer the cound and name of the tables. If the question is find unique users on this tables in last 30 days then answer based on the column value.', 'Instructions: If the object name mentioned in the user"s question exactly matches in the retrieved metadata content, respond with the context retrieved" If the object name is not found in the retrieved content, respond with: "No, the dataset is yet to be pulled into the data lake." Do not speculate or provide partial matches. Only respond based on exact or clearly matching object names in the retrieved metadata. Example Question:"Is the object customer_orders_2023 available in the ata lake?','Based on above context can you tell how many unique visitors of each table in schema ADOBE_CAMPAIGN in last 30 days',STRING_AGG(FORMAT("Database Name: %s, Database Schema: %s, Table Name: %s, Unique Visitor 30 days: %d", base.database_name, base.schema_name, base.table_name, base.unique_users_30days),',\n')) AS prompt, FROM VECTOR_SEARCH( TABLE <point 4 embedding table>, 'ml_generate_embedding_result',(SELECT ml_generate_embedding_result, content AS query FROM ML.GENERATE_EMBEDDING( MODEL <point 3 embedding model>, (SELECT 'schema: ADOBE_CAMPAIGN' AS content))), top_k => 100, options => '{"fraction_lists_to_search": 0.01}')),STRUCT(600 AS max_output_tokens, TRUE AS flatten_json_output));

Result:

b. Data Catalog: Once migration is complete, the vector store of metadata will be re-purposed for Data Catalogging. Continuing the vector creation of new and modified data will lead to a valuable application. Again, this is a small step. As part of cataloging, there are questions. Do we have data from Adobe Financials? Let’s try to get this answer.

User Question: Do we have data from Adobe Financials?

SELECT ml_generate_text_llm_result AS generated, prompt FROM ml.generate_text(model <point 3 text model>, (SELECT concat( 'You are a data assistant with access to a vector index built from SaaS cloud data lake"s information schema columns metadata. When a user asks whether a specific database object (e.g., table or view) is available in the data lake, retrieve the most relevant metadata entries using semantic search.', 'Instructions: If the object name mentioned in the user"s question appears in the retrieved metadata content, respond with: "Yes, the object is available in the data lake." If the object name is not found in the retrieved content, respond with: "No, the dataset is yet to be pulled into the data lake." Do not speculate or provide partial matches. Only respond based on exact or clearly matching object names in the retrieved metadata. Example Question:"Is the object customer_orders_2023 available in the ata lake?','Based on above context can you tell if we have ADOBE FINANCIALS data? ',string_agg( format("Database Object: %s", query.query), ',\n')) AS prompt, FROM vector_search( TABLE <point 4 embedding TABLE>, 'ml_generate_embedding_result', ( SELECT ml_generate_embedding_result, content AS query FROM ml.generate_embedding( model <point 3 embedding model>, ( SELECT 'Data from ADOBE FINANCIALS' AS content) ) ), top_k => 100, options => '{"fraction_lists_to_search": 0.01}') ), struct(600 AS max_output_tokens, true AS flatten_json_output));

Result:

Conclusion

To conclude the evaluation of BigQuery's AI capability, it is convenient to use because of its availability in SQL syntax. There is flexibility in modifying the result accuracy using factors during embedding, creating a vector index, and doing vector search. Multiple LLM options will address a variety of use cases.

Google Cloud data lake migration is more than a technical exercise—it is a strategic investment in future-ready data platforms. By leveraging the AI-powered capabilities of BigQuery, the static metadata will be transformed into a dynamic knowledge layer. The approach accelerates migration planning and monitoring and lays the foundation for an intelligent metadata catalog that evolves with the organization’s data ecosystem. By combining ML-generated embeddings, semantic search, and RAG-driven contextual responses, teams (Engineering or Business) can access metadata in a human-like, intuitive way, reducing friction in decision-making and unlocking higher productivity.

In essence, leveraging BigQuery’s native AI features enables organizations to build Data-as-a-Service capabilities where metadata becomes actionable intelligence. It empowers data engineers, analysts, and business stakeholders to not only migrate confidently but also to innovate quickly and enable advanced analytics and AI-driven insights.

References

pic :<https://cloud.google.com/bigquery/docs/introduction>

<https://cloud.google.com/bigquery/docs/generate-text-embedding#console_1>

<https://cloud.google.com/bigquery/docs/generate-text-embedding#console_1>

<https://cloud.google.com/bigquery/docs/vector-index-text-search-tutorial>

<https://cloud.google.com/bigquery/docs/reference/standard-sql/data-definition-language#create_vector_index_statement>