The Starting Point

I recently read this article: "Reasoning Models Generate Societies of Thought". Its central idea is that enhanced reasoning comes not from raw computing power alone but from an internal dialogue between multiple voices, or, as the authors put it, "diversification and debate among internal cognitive perspectives". This gave me a simple idea: if a model performs better by simulating multiple perspectives internally, why not externalize this pattern with a second model?

The approach is not particularly novel. AI pipelines, for example, often include a code-review agent that checks code changes. In this case, however, I decided to focus on plan mode in Claude Code. When I work with Claude Code (Opus 4.5, and now 4.6), I always begin in plan mode, and before implementing anything I typically do 2-3 iterations on the plan with Claude. What if an external reviewer could save me one of those iterations?

I wanted a model from a different family, since the goal was diversity and debate: a model of the same family would likely share the same biases, making the "discussion" less enriching. I decided to test Kimi K2.5 from Moonshot AI. The model posts strong results on coding and reasoning benchmarks, has a thinking mode, and is rather cheap ($0.60 per million input tokens, $3 per million output tokens). That said, any OpenAI-compatible endpoint should work.
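Because the script only needs an OpenAI-compatible endpoint, a request can be built with nothing but the Python standard library. The sketch below builds (without sending) a chat-completions request. The base URL and model ID are assumptions to check against Moonshot's documentation, and the function name is hypothetical, not taken from the repo:

```python
import json
import urllib.request

# Assumed values: verify the real base URL and model ID in the provider's docs.
DEFAULT_BASE_URL = "https://api.moonshot.ai/v1"
DEFAULT_MODEL = "kimi-k2.5"


def build_chat_request(prompt: str, api_key: str,
                       base_url: str = DEFAULT_BASE_URL,
                       model: str = DEFAULT_MODEL) -> urllib.request.Request:
    """Build (but do not send) a POST to an OpenAI-compatible /chat/completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Sending it is then a matter of `urllib.request.urlopen(req)`; as with any OpenAI-compatible API, the answer sits in `choices[0].message.content` of the JSON response. Switching providers only means changing `base_url` and `model`.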

With Kimi, a plan review is cheap: somewhere between 2 and 4 cents.
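That figure follows directly from the token prices above. As a worked example with illustrative (not measured) token counts, a review with 20,000 input tokens and 5,000 output tokens costs 20,000 × 0.60 / 10^6 + 5,000 × 3 / 10^6 = $0.012 + $0.015 = $0.027, or about 2.7 cents. The same arithmetic as a small helper (hypothetical name):

```python
# Prices quoted above, in USD per million tokens.
INPUT_PRICE_PER_M = 0.60
OUTPUT_PRICE_PER_M = 3.00


def review_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Cost of one request. If thinking tokens are billed as output
    (common for reasoning models, but check the provider), count them
    in output_tokens."""
    return (input_tokens * INPUT_PRICE_PER_M
            + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000
```

Output tokens cost five times as much as input tokens here, so a long plan sent for review is cheap, while a verbose critique (or a long thinking trace) drives most of the bill.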

The Script: kimi-advisor

I wrote a short Python CLI with three commands:

- ask: ask a question, get advice
- review: critique a plan
- decompose: break down a task into subtasks
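The three commands map naturally onto argparse sub-commands. This is a hypothetical skeleton, not the actual kimi-advisor source: the system prompts, the `text` argument and the function names are placeholders chosen for illustration.

```python
import argparse

# Hypothetical system prompts, one per sub-command; the real tool's wording may differ.
SYSTEM_PROMPTS = {
    "ask": "You are a senior engineer. Answer the question with concrete advice.",
    "review": "You are a critical reviewer. List risks, gaps and better alternatives in this plan.",
    "decompose": "Break the task down into small, ordered, testable subtasks.",
}


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(prog="kimi-advisor")
    sub = parser.add_subparsers(dest="command", required=True)
    for name, help_text in (("ask", "ask a question, get advice"),
                            ("review", "critique a plan"),
                            ("decompose", "break down a task into subtasks")):
        p = sub.add_parser(name, help=help_text)
        # All context must travel in this text: the remote model cannot see the codebase.
        p.add_argument("text", help="question, plan or task description")
    return parser


def to_messages(args: argparse.Namespace) -> list[dict]:
    """Turn parsed arguments into an OpenAI-style message list."""
    return [
        {"role": "system", "content": SYSTEM_PROMPTS[args.command]},
        {"role": "user", "content": args.text},
    ]
```

For example, `kimi-advisor review "1. Add a -f option ..."` parses to `command="review"`, and `to_messages` pairs the plan with the reviewer prompt.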

Kimi has no access to the codebase, so all relevant context must be included in the prompt. The repo also includes a few suggested lines to add to your CLAUDE.md.

Making the Review Mandatory

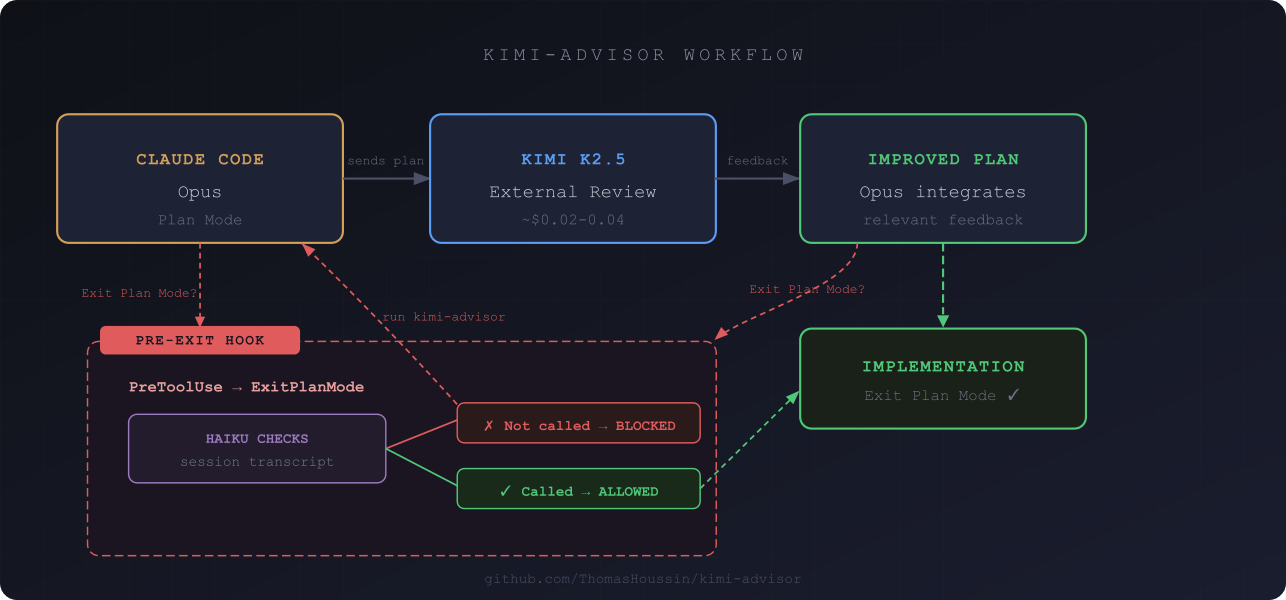

I noticed that Claude did not call the advisor as often as I wanted, even with the instruction in CLAUDE.md. So I made the review mandatory before a plan is presented, using a Claude Code hook: a Node.js script (for portability) that blocks ExitPlanMode if kimi-advisor hasn't been executed in the session. The script delegates the check to Haiku (called with claude -p --model haiku).

Configuration in .claude/settings.json:

{
  "hooks": {
    "PreToolUse": [
      {
        "matcher": "ExitPlanMode",
        "hooks": [
          {
            "type": "command",
            "command": "node .claude/hooks/pre-exit-plan-mode.mjs"
          }
        ]
      }
    ]
  }
}

The hook reads the session transcript, uses Claude Haiku to verify if kimi-advisor was actually called (not just mentioned in a reminder), and blocks if it wasn't:

const PROMPT = `Analyze this Claude Code session transcript (JSONL format).

Question: Has "kimi-advisor" been executed (via a Bash tool call) to review or challenge the current plan?

[...]

Respond with ONLY a JSON object, no other text:

{"executed": true} or {"executed": false}`;
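The prompt asks Haiku for a bare JSON object, but models occasionally wrap the answer in a code fence or add a sentence around it. A defensive parser for that reply could look like this Python sketch. The function name is hypothetical, and treating an unparseable reply as "not executed" (fail closed) is a design choice of the sketch, not necessarily what the actual hook does:

```python
import json
import re


def parse_executed(reply: str) -> bool:
    """Extract {"executed": true|false} from a model reply.

    Tolerates surrounding text or code fences. Anything unparseable counts
    as "not executed", so the hook errs on the side of blocking.
    """
    match = re.search(r"\{[^{}]*\}", reply)  # first flat JSON object
    if not match:
        return False
    try:
        data = json.loads(match.group(0))
    except json.JSONDecodeError:
        return False
    # Only a real JSON boolean true counts; "true" as a string does not.
    return data.get("executed") is True
```

Failing closed can make Claude run the advisor one extra time; failing open would silently skip the review, which defeats the purpose of the hook.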

If Kimi wasn't called, the hook refuses to exit plan mode and returns an explicit message. Claude then runs the script, improves the plan if needed, and calls ExitPlanMode again.
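For comparison, here is a Python sketch of the same hook that swaps the Haiku call for a deterministic scan of the transcript. It is not the author's Node.js script. It assumes, from my reading of the Claude Code hooks documentation, that the hook receives a JSON object on stdin with a `transcript_path` field, and that exit code 2 blocks the tool call and feeds stderr back to Claude. It also assumes transcript lines contain `tool_use` blocks with `name` and `input.command`, so it searches for them at any nesting depth. Function names are hypothetical.

```python
import json
import sys


def _iter_tool_uses(node):
    """Yield every dict that looks like a tool_use block, at any nesting depth."""
    if isinstance(node, dict):
        if node.get("type") == "tool_use":
            yield node
        for value in node.values():
            yield from _iter_tool_uses(value)
    elif isinstance(node, list):
        for item in node:
            yield from _iter_tool_uses(item)


def advisor_was_run(transcript_lines) -> bool:
    """True if any Bash tool call in the JSONL transcript ran kimi-advisor."""
    for line in transcript_lines:
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip blank or truncated lines
        for block in _iter_tool_uses(entry):
            tool_input = block.get("input")
            command = tool_input.get("command") if isinstance(tool_input, dict) else None
            # A mere mention in a message or reminder is not a tool_use, so it is ignored.
            if block.get("name") == "Bash" and isinstance(command, str) and "kimi-advisor" in command:
                return True
    return False


def main(stdin=sys.stdin, stderr=sys.stderr) -> int:
    event = json.load(stdin)  # hook input sent by Claude Code
    with open(event["transcript_path"], encoding="utf-8") as transcript:
        if advisor_was_run(transcript):
            return 0  # allow ExitPlanMode
    print("Plan not reviewed yet: run kimi-advisor review on the plan, "
          "update the plan if needed, then call ExitPlanMode again.", file=stderr)
    return 2  # exit code 2 blocks the tool call; stderr is shown to Claude
```

To use it as a hook, end the file with `if __name__ == "__main__": sys.exit(main())` and point the hook's `command` at it (for example `python3 .claude/hooks/pre_exit_plan_mode.py`, a hypothetical path). The trade-off is that the string scan is free and predictable, but unlike the Haiku prompt it cannot judge whether the review concerned the *current* plan.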

The code for both scripts is here: github.com/ThomasHoussin/kimi-advisor

Real-World Testing

Case 1: Adding File Support

The first version didn't support file attachments; I wanted to add a -f option to attach files to requests. Opus drafted the plan, then passed it to Kimi for review. Kimi found several real issues:

TOCTOU Race Condition: The plan called for checking path.exists() then reading the file. Kimi pointed out the vulnerability:

"Checking path.exists() and path.is_file() before opening creates a time-of-check to time-of-use vulnerability. File could be deleted/modified between check and read. Recommendation: Remove pre-checks; use try/except around file operations."

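Kimi's recommendation is the Python "easier to ask forgiveness than permission" style: open the file and handle the failure instead of checking first. A minimal sketch, with a hypothetical function and exception name, not the code from the repo:

```python
from pathlib import Path


class AttachmentError(Exception):
    """Raised when a file passed with -f cannot be read (hypothetical name)."""


def read_text_attachment(path: str) -> str:
    # No exists()/is_file() pre-check: opening the file is both the check and
    # the use, so there is no earlier check whose result can go stale.
    try:
        return Path(path).read_text(encoding="utf-8")
    except FileNotFoundError:
        raise AttachmentError(f"file not found: {path}") from None
    except IsADirectoryError:
        raise AttachmentError(f"not a regular file: {path}") from None
    except UnicodeDecodeError:
        raise AttachmentError(f"not UTF-8 text: {path}") from None
    except OSError as exc:
        # Permission errors and other I/O failures (on Windows, opening a
        # directory typically lands here as PermissionError).
        raise AttachmentError(f"cannot read {path}: {exc}") from None
```

The CLI can catch `AttachmentError` once at the top level and print a clean error, instead of scattering checks before every read.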
Suboptimal Message Ordering: The plan put text files before the question:

"Placing text files before the user question forces the model to read potentially large code blocks before knowing what the question is. Recommendation: User question first, then text file contexts with clear headers, then images."

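The recommended order (question, then text files under clear headers, then images) can be expressed as OpenAI-style content parts. This sketch assumes the endpoint accepts the common `image_url` data-URL format for images; the function name and header format are hypothetical:

```python
import base64


def build_user_content(question: str,
                       text_files: list[tuple[str, str]],
                       images: list[tuple[str, str, bytes]]) -> list[dict]:
    """Assemble message parts: question first, then text files, then images.

    text_files: (display name, file contents)
    images:     (display name, MIME type, raw bytes)
    """
    parts = [{"type": "text", "text": question}]
    for name, body in text_files:
        # A clear header tells the model where each file starts and what it is.
        parts.append({"type": "text", "text": f"--- File: {name} ---\n{body}"})
    for name, mime, data in images:
        encoded = base64.b64encode(data).decode("ascii")
        parts.append({"type": "text", "text": f"--- Image: {name} ---"})
        parts.append({"type": "image_url",
                      "image_url": {"url": f"data:{mime};base64,{encoded}"}})
    return parts
```

The returned list becomes the `content` of a single `{"role": "user", ...}` message, so the model reads the question before any large code block.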
SVG Misclassified: The plan listed SVG in image extensions:

"SVG is listed in IMAGE_EXTENSIONS but is XML text. Kimi K2.5 vision API likely expects raster images. Recommendation: Treat SVG as text."

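Routing SVG to the text path comes down to which extensions count as raster images. A sketch; the extension set and function name are assumptions, not the tool's actual list:

```python
from pathlib import Path

# Raster formats sent as images. Everything else, including .svg (which is
# XML text), goes through the text path. This set is an assumption.
IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg", ".gif", ".webp"}


def classify_attachment(path: str) -> str:
    """Return "image" for raster formats, "text" for anything else."""
    return "image" if Path(path).suffix.lower() in IMAGE_EXTENSIONS else "text"
```

Defaulting to "text" means an unknown extension is read as text (and fails cleanly if it isn't UTF-8) rather than being sent to a vision API that may reject it.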
Opus integrated this feedback into the implementation. The current code uses try/except instead of pre-checks, puts the question first, and treats SVG as text.

Case 2: Rust Lambda

On another project, where I was adding a Rust Lambda, Kimi suggested switching to ARM64 (Graviton) for cost and cold-start improvements. It also mentioned setting MSYS_NO_PATHCONV, which stops Git Bash on Windows from rewriting Unix-style path arguments. Opus integrated both.

Case 3: DynamoDB Storage

On a plan that stored rawResponse in DynamoDB, Kimi flagged the 400 KB item size limit and suggested S3 for large responses. Opus re-examined the actual need and decided not to store this data at all, since it wasn't necessary.
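The limit applies to the whole item: DynamoDB counts the UTF-8 length of every attribute name plus every attribute value against the 400 KB cap. A rough pre-write check for string-only items, as a sketch (the 90% safety margin and the function names are hypothetical, and 400 KB is taken here as 400 × 1024 bytes):

```python
# DynamoDB item size cap, assumed to mean 400 * 1024 bytes.
DYNAMODB_ITEM_LIMIT_BYTES = 400 * 1024


def item_size_estimate(item: dict[str, str]) -> int:
    """Rough size of a string-only item: UTF-8 bytes of each name plus each value."""
    return sum(len(k.encode("utf-8")) + len(v.encode("utf-8"))
               for k, v in item.items())


def needs_offload(item: dict[str, str], margin: float = 0.9) -> bool:
    """True if the item is too close to the limit and the payload should go to S3."""
    return item_size_estimate(item) > DYNAMODB_ITEM_LIMIT_BYTES * margin
```

In Kimi's suggested design, an oversized payload would be written to S3 and only its object key kept in the item. Here Opus sidestepped the question entirely by dropping the field.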

The Limits

Of course, these examples highlight the wins; the advisor isn't always right. Sometimes its suggestions are over-engineered, sometimes they don't fit the context. But the pattern works: Opus sorts through the feedback and integrates the most relevant points.

Conclusion

It's a small tool, and its overhead (a few cents per review and a 1 to 2 minute delay) is modest. It often pays for itself in avoided bugs.

The repo: github.com/ThomasHoussin/kimi-advisor