This is a Plain English summary of the research paper, LongCat-Flash-Thinking-2601 Technical Report.

Overview

- LongCat-Flash-Thinking-2601 develops a reasoning model that balances computational efficiency with strong performance on complex tasks

- The model uses a combination of pre-training strategies and reinforcement learning to improve how it thinks through problems

- Key approaches include text-driven synthesis, environment-grounded synthesis, and planning-oriented data augmentation

- The work scales reinforcement learning to enhance the model's reasoning capabilities across diverse problem types

Plain English Explanation

Think of reasoning models like students preparing for an exam. Some students dive straight into practice problems without understanding the fundamentals, while others study methodically, building knowledge step by step. LongCat-Flash-Thinking-2601 takes a structured approach to training—it doesn't just throw data at the model and hope it learns to think better.

The model starts with a strong foundation through pre-training, where it learns from different types of training data. Text-driven synthesis teaches it from pure language examples. Environment-grounded synthesis connects its knowledge to actual situations and contexts. Planning-oriented data augmentation creates additional training examples specifically designed to teach planning and reasoning.

After this foundation is built, the researchers apply reinforcement learning—a technique where the model learns by trying tasks and receiving feedback on whether it did well. This is like a student getting test scores and adjusting their study habits accordingly. The model learns which approaches work and gradually improves at solving harder problems.

The motivation behind this work addresses a real challenge in AI: models that think too much waste computational resources, while models that think too little make mistakes. LongCat-Flash-Thinking-2601 aims to find the sweet spot where the model reasons appropriately for each problem.

Key Findings

The paper demonstrates that combining multiple pre-training strategies with scaled reinforcement learning produces models that handle complex reasoning tasks effectively. The use of environment-grounded data helps the model understand real-world context, not just abstract language patterns. Planning-oriented data augmentation specifically boosts performance on tasks requiring step-by-step reasoning.

The scaling of reinforcement learning shows that models benefit from structured feedback during training, improving their ability to solve novel problems they haven't seen before. The research validates that combining these techniques creates measurable improvements in reasoning capability.

Technical Explanation

The pre-training phase operates on three distinct channels. Text-driven synthesis uses standard language modeling data to build linguistic foundations. Environment-grounded synthesis incorporates contextual information, helping the model understand how language relates to specific scenarios and constraints. Planning-oriented data augmentation generates synthetic examples that require sequential decision-making, forcing the model to develop planning skills.

The reinforcement learning stage involves training the model to maximize a reward signal that reflects solution quality. Rather than using fixed datasets, the model learns through interaction with tasks, receiving feedback on whether its reasoning paths lead to correct answers. This approach scales to handle diverse problem types because the reward signal can be adapted to different domains.

The architecture supports both efficiency and capability by allowing the model to allocate computational resources based on problem difficulty. Some problems may require more reasoning steps while others resolve quickly, and the system learns to adapt accordingly. This adaptive reasoning approach mirrors how humans spend more mental effort on harder problems and less on straightforward ones.

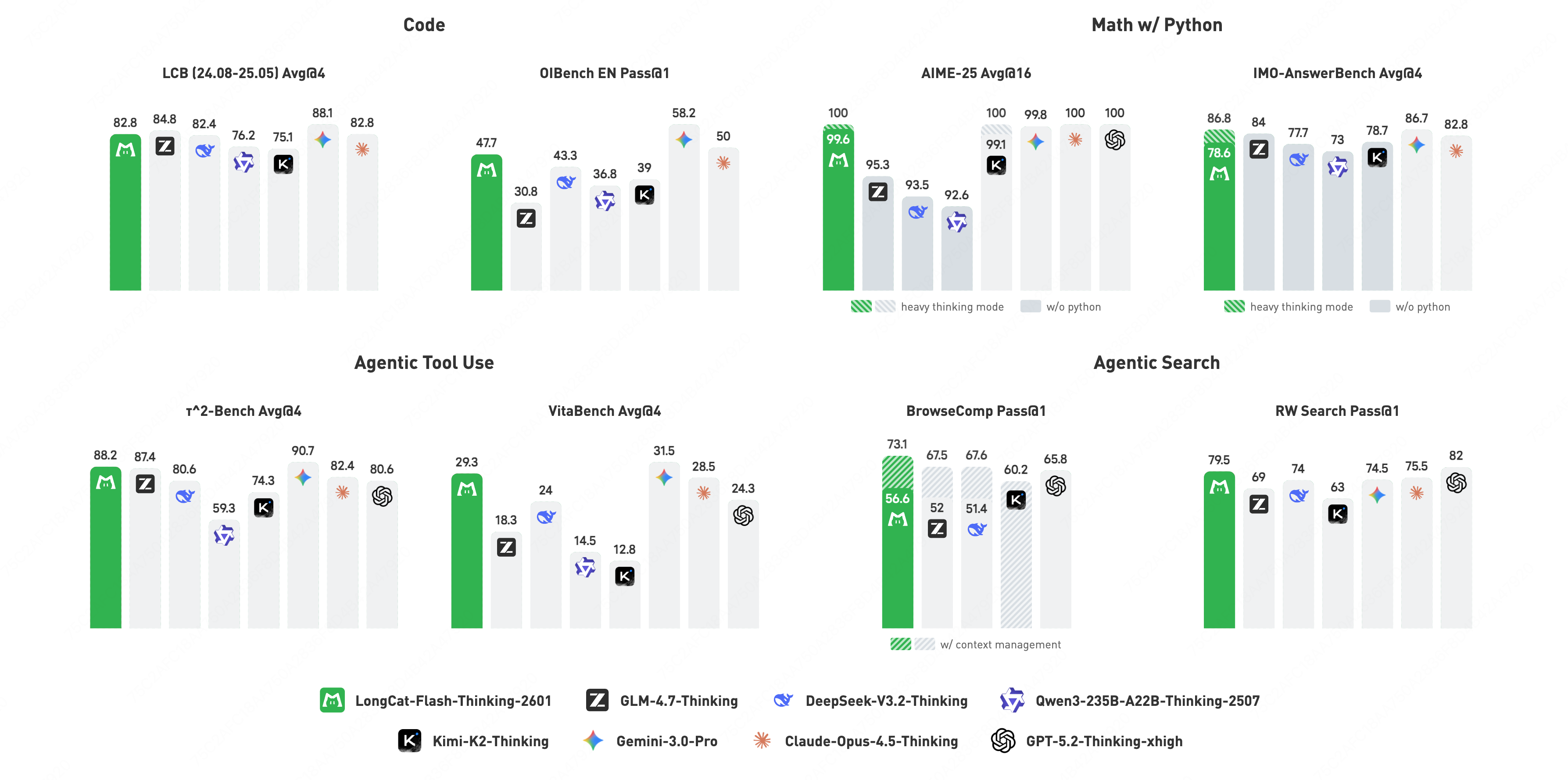

The researchers measure performance across multiple benchmarks that test reasoning, mathematics, and problem-solving. The combination of pre-training strategies and reinforcement learning produces consistent improvements, suggesting the approach generalizes beyond any single task type.

Critical Analysis

The paper's strength lies in combining multiple training approaches, but questions remain about which components contribute most to the improvements. The relative importance of text-driven synthesis versus environment-grounded synthesis versus planning-oriented augmentation deserves clearer analysis.

Reinforcement learning introduces its own challenges. The method depends on having good reward signals, and designing appropriate rewards for complex reasoning remains difficult. If the reward function is poorly designed, the model may learn shortcuts rather than genuine reasoning. The paper would benefit from discussing how sensitive the approach is to reward specification.

Computational costs during training are not thoroughly addressed. While the final model aims for efficiency, the reinforcement learning stage itself may be expensive, and the paper doesn't provide clear metrics on training costs versus performance gains. This matters for practitioners considering whether to use this approach.

The generalization claims would be stronger with evaluation on truly novel problem types not represented in training. Testing on multi-agent and collaborative reasoning tasks or domains substantially different from training data would provide confidence that the learned reasoning approaches are robust.

Additionally, the paper could better address failure modes. When and why does the model still fail on problems it should theoretically handle? Understanding failure patterns would help future researchers refine these methods.

Conclusion

LongCat-Flash-Thinking-2601 represents progress in building reasoning models that balance capability with computational efficiency. By combining pre-training strategies that teach different aspects of reasoning—from language fundamentals to real-world grounding to explicit planning—the work provides a more comprehensive training approach than standard methods. The subsequent application of reinforcement learning refines these capabilities through structured feedback.

The implications extend to making advanced reasoning models more practical. As models become more capable, training costs and inference costs matter for real-world deployment. A model that reasons appropriately rather than wastefully is valuable both for cost reduction and for enabling deployment in resource-constrained settings.

The research opens paths for future work in designing better training data and rewards specification, understanding which reasoning strategies generalize across domains, and testing whether these approaches scale to even more complex reasoning tasks. The core insight—that reasoning models benefit from diverse pre-training channels combined with structured learning feedback—likely extends beyond this specific implementation.

If you like these kinds of analyses, join AIModels.fyi or follow on Twitter.