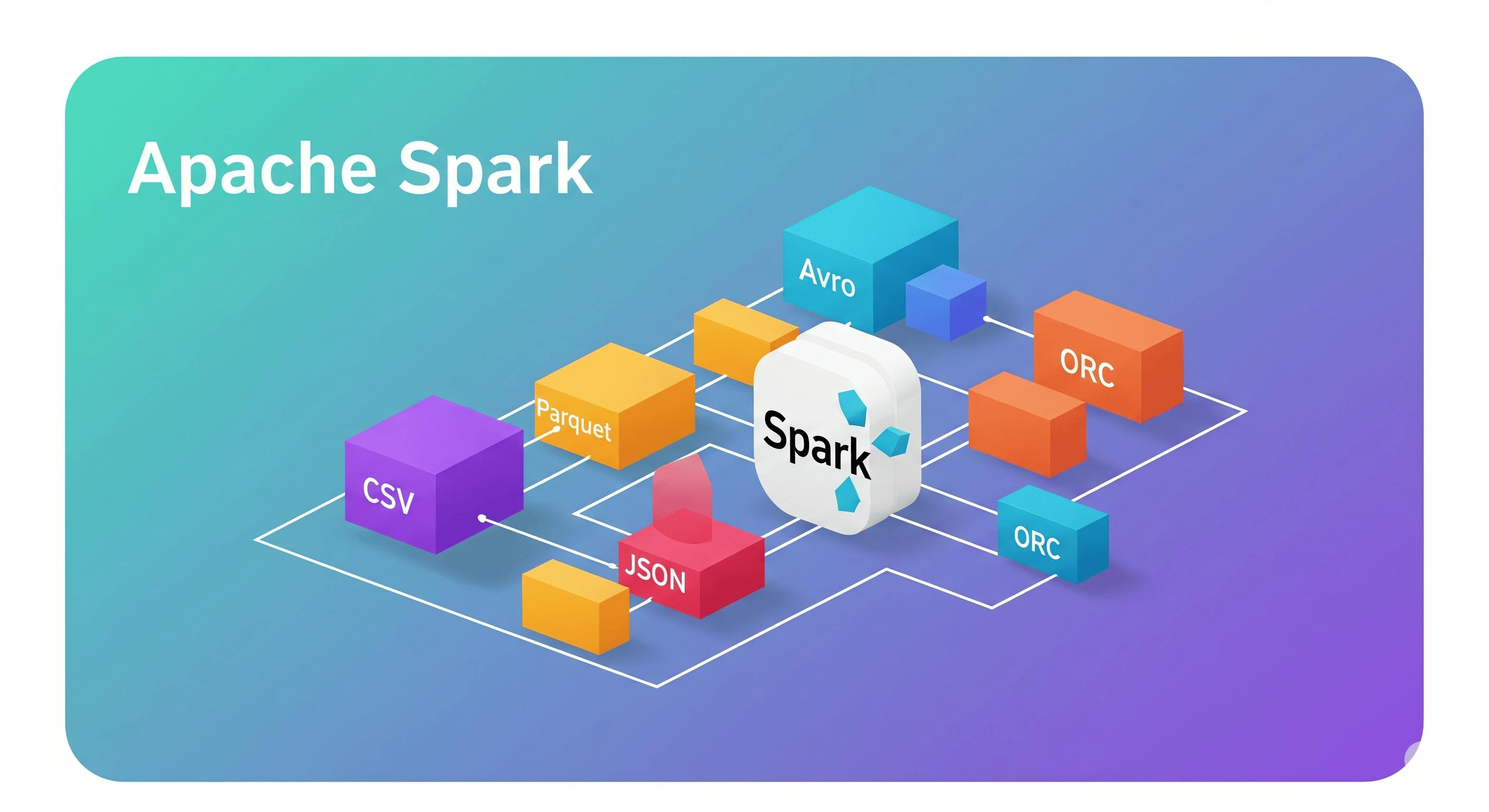

One of the most important decisions in your Apache Spark pipeline is how you store your data. The data format you choose can dramatically affect performance, storage costs, and query speed. Let's explore the most common file formats supported by Apache Spark and the cases where each one fits best.

Different file formats

The data formats commonly used in data processing, especially with tools like Apache Spark, can be grouped into categories based on their structure and use case:

Row-Based File Formats

Data is stored row by row, which makes it easy to write and to process sequentially, but less efficient for analytical queries that need only a few columns, because entire rows must still be read.
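A minimal sketch of the write-side advantage, in plain Python rather than Spark and with hypothetical rows: a row-based writer can append each record as soon as it arrives, while a columnar writer (modeled here as a simple transpose, not any real format's writer) has to collect rows before it can lay out any column.

```python
import csv
import io

# Hypothetical rows arriving one at a time, e.g. from a log or event stream.
incoming = [("1", "alice", "34"), ("2", "bob", "27"), ("3", "carol", "45")]

# Row-based: each record is appended to the output the moment it arrives.
out = io.StringIO()
writer = csv.writer(out, lineterminator="\n")
for record in incoming:
    writer.writerow(record)  # one sequential append per record, nothing buffered

# Columnar (toy model): a column block is only complete once its rows are known,
# so rows are buffered and transposed before any column can be written.
buffered = list(incoming)
column_blocks = [list(col) for col in zip(*buffered)]

print(out.getvalue().splitlines()[-1])  # 3,carol,45
print(column_blocks[1])                 # ['alice', 'bob', 'carol']
```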

CSV (Comma-Separated Values)

- CSV is a plain-text, row-based format in which columns are separated by commas. It is easy to work with but not efficient for big data.
- Pros: CSV is human-readable, simple to write and read, and supported almost everywhere.
- Cons: CSV carries no data types, so Spark either reads every column as a string (the default) or, with the `inferSchema` option enabled, makes an extra pass over the data to guess the types, which adds work and may still guess wrong. CSV also compresses poorly and struggles to represent nested or complex data.
- Use cases: Legacy systems, small data exports, debugging, and working with spreadsheets.
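The type-inference problem is easy to reproduce outside Spark. The sketch below uses plain Python, hypothetical data, and a deliberately naive `infer_type` helper (not Spark's algorithm) to show two failure modes: a guess based on a sample can be contradicted later in the file, and a numeric-looking column such as a ZIP code loses its leading zeros when cast to an integer. In Spark, supplying an explicit schema with `DataFrameReader.schema()` avoids the guesswork.

```python
import csv
import io

# Hypothetical CSV file: every value arrives as untyped text.
data = "zip,qty\n02134,5\n10001,7\n94105,N/A\n"


def infer_type(values):
    """Naive inference (not Spark's algorithm): "int" if every value parses as int."""
    for v in values:
        try:
            int(v)
        except ValueError:
            return "string"
    return "int"


rows = list(csv.DictReader(io.StringIO(data)))

# A guess based on a sample of the first two rows says "qty" is an integer column...
qty_from_sample = infer_type(r["qty"] for r in rows[:2])
# ...but the full file contains "N/A", so that guess is wrong.
qty_from_full_scan = infer_type(r["qty"] for r in rows)

# "zip" looks numeric, but casting it to int silently drops the leading zero.
zip_type = infer_type(r["zip"] for r in rows)
first_zip_as_int = int(rows[0]["zip"])

print(qty_from_sample, qty_from_full_scan)  # int string
print(zip_type, first_zip_as_int)           # int 2134
```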

Example of reading a CSV file in Apache Spark:

```python
# PySpark example
df = spark.read.options(delimiter=",", header=True).csv(path)
```

```scala
// Scala example
val df = spark.read.option("delimiter", ",").option("header", "true").csv(path)
```

JSON (JavaScript Object Notation)

- JSON is a lightweight, text-based format for exchanging data. It stores and transmits information as human-readable text, but it can be slow to process and does not enforce a schema.
- Pros: JSON is readable, widely supported by many systems, and can store semi-structured data.
- Cons: JSON is slow to parse, and by default Spark expects each line to be a separate, valid JSON object (the JSON Lines format); files in which one object spans several lines require the `multiLine` option. From a storage perspective, JSON produces large files because key names and punctuation are repeated in every record, and there is no schema enforcement.
- Use cases: Use JSON mainly for debugging or exploring data, or to integrate with external systems you don't control that emit JSON, but don't rely on it as your final storage format.
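To illustrate the storage overhead and the one-object-per-line rule, this plain-Python sketch (hypothetical records, standard library only) writes the same data as JSON Lines and as CSV. Every JSON line repeats all key names, so the JSON text is longer, and each line parses on its own, which is what lets a reader split a large JSON Lines file between workers.

```python
import csv
import io
import json

# Hypothetical records; in JSON Lines every record repeats every key name.
records = [
    {"user_id": 1, "country": "US", "active": True},
    {"user_id": 2, "country": "DE", "active": False},
    {"user_id": 3, "country": "FR", "active": True},
]

# JSON Lines: one self-contained JSON object per line.
jsonl_text = "\n".join(json.dumps(r) for r in records)

# The same data as CSV: key names appear only once, in the header.
buf = io.StringIO()
writer = csv.DictWriter(buf, fieldnames=["user_id", "country", "active"])
writer.writeheader()
writer.writerows(records)
csv_text = buf.getvalue()

# Each line is valid JSON on its own, so lines can be parsed independently.
parsed = [json.loads(line) for line in jsonl_text.splitlines()]

print(len(jsonl_text), len(csv_text))  # JSON Lines is the larger of the two
```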

Example of reading a JSON file in Apache Spark:

```python
# PySpark example
df = spark.read.json(path)
```

```scala
// Scala example
val df = spark.read.json(path)
```

Apache Avro

- Apache Avro is a row-based binary format often used in Kafka pipelines and data exchange scenarios. Data is stored with a descriptive schema that can evolve over time, and records serialize compactly.
- Pros: Avro is storage-efficient because it is a binary format, and it has strong schema evolution support.
- Cons: Although Avro is storage-efficient, it is not optimized for columnar queries: reading a few columns still means reading and decoding entire rows.
- Use cases: Avro is mainly used with real-time streaming systems like Kafka because records are easy to serialize and transmit. It also supports schema evolution through a schema registry.
- The spark-avro module is external and is not included in `spark-submit` or `spark-shell` by default, but `spark-avro_VERSION` and its dependencies can be added directly to `spark-submit` using `--packages`:

```shell
./bin/spark-submit --packages org.apache.spark:spark-avro_VERSION ...
./bin/spark-shell --packages org.apache.spark:spark-avro_VERSION
```
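Avro's schema evolution works by resolving the schema the data was written with (the writer schema) against the schema the application expects (the reader schema). The sketch below is a simplified model of two of those rules in plain Python, not the Avro library: a field added to the reader schema is filled from its `default`, and a field that exists only in the writer schema is ignored. The `User` schemas and the `resolve` helper are hypothetical, and real Avro also handles type promotion, aliases, and unions.

```python
# Simplified model of Avro schema resolution (not the avro library):
# data written with an older writer schema is read with a newer reader schema.

WRITER_SCHEMA = {
    "type": "record", "name": "User",
    "fields": [
        {"name": "id", "type": "long"},
        {"name": "email", "type": "string"},
        {"name": "legacy_flag", "type": "boolean"},
    ],
}

READER_SCHEMA = {
    "type": "record", "name": "User",
    "fields": [
        {"name": "id", "type": "long"},
        {"name": "email", "type": "string"},
        {"name": "country", "type": "string", "default": "unknown"},
    ],
}


def resolve(record, writer_schema, reader_schema):
    """Project a record written with writer_schema onto reader_schema."""
    written = {f["name"] for f in writer_schema["fields"]}
    result = {}
    for field in reader_schema["fields"]:
        name = field["name"]
        if name in written:
            result[name] = record[name]      # field exists in both schemas
        elif "default" in field:
            result[name] = field["default"]  # new field: fill in its default
        else:
            raise ValueError(f"no value or default for field {name!r}")
    # Fields present only in the writer schema (legacy_flag) are dropped.
    return result


old_record = {"id": 42, "email": "a@example.com", "legacy_flag": True}
print(resolve(old_record, WRITER_SCHEMA, READER_SCHEMA))
# {'id': 42, 'email': 'a@example.com', 'country': 'unknown'}
```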

Example of reading an Avro file in Apache Spark:

```python
# PySpark example
df = spark.read.format("avro").load(path)
```

```scala
// Scala example
val df = spark.read.format("avro").load(path)
```

Columnar File Formats

Data is stored column by column, which makes these formats ideal for analytics and interactive dashboards that query only a subset of columns.
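A toy model of why this matters, in plain Python with a hypothetical three-column table of 32-bit integers: in the row layout the `age` values are interleaved with the other fields, so a sequential reader decodes every row to collect them, whereas in the columnar layout they form one contiguous block that can be read on its own.

```python
import struct

# Toy table (hypothetical data): each row is (id, age, score), all 32-bit ints.
rows = [(1, 34, 88), (2, 27, 92), (3, 45, 75), (4, 31, 60)]
N_COLS = 3
VALUE_SIZE = struct.calcsize("<i")  # 4 bytes per value
ROW_SIZE = N_COLS * VALUE_SIZE

# Row layout: id0 age0 score0 | id1 age1 score1 | ...
row_file = b"".join(struct.pack("<3i", *row) for row in rows)

# Columnar layout: id0 id1 ... | age0 age1 ... | score0 score1 ...
col_file = b"".join(struct.pack(f"<{len(rows)}i", *col) for col in zip(*rows))


def read_column_row_layout(buf: bytes, col: int) -> tuple[list[int], int]:
    """Scan the file row by row; whole rows are read to extract one field."""
    values, bytes_read = [], 0
    for offset in range(0, len(buf), ROW_SIZE):
        record = buf[offset:offset + ROW_SIZE]
        bytes_read += len(record)
        values.append(struct.unpack("<3i", record)[col])
    return values, bytes_read


def read_column_columnar(buf: bytes, col: int, n_rows: int) -> tuple[list[int], int]:
    """Jump straight to the column's contiguous block and read only that."""
    start = col * n_rows * VALUE_SIZE
    block = buf[start:start + n_rows * VALUE_SIZE]
    return list(struct.unpack(f"<{n_rows}i", block)), len(block)


ages_row, read_row = read_column_row_layout(row_file, col=1)
ages_col, read_col = read_column_columnar(col_file, col=1, n_rows=len(rows))
print(ages_row, read_row)  # [34, 27, 45, 31] 48
print(ages_col, read_col)  # [34, 27, 45, 31] 16
```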

Parquet (The Gold Standard for Analytics)

- Parquet is a columnar binary format optimized for analytical queries. It is Spark's default data source format and the most popular choice for Spark workloads.
- Pros: Parquet is built for efficient reads. Column-level compression and encoding, reading only the requested columns, and predicate push-down make it fast and compact, and it is well supported by Spark, Hive, and Presto.
- Cons: Parquet is slightly slower to write than row-based formats, because rows must be buffered and encoded column by column before they are written.
- Use cases: Parquet is the first choice for Spark analytical queries, data lakes, and cloud storage.
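Predicate push-down in Parquet relies on statistics such as per-column min/max values, which are stored in the file's metadata for each row group. When a filter like `age > 40` is pushed down, row groups whose statistics rule out any match can be skipped without reading their data. The sketch below models this in plain Python; `RowGroup`, `write_row_groups`, and `scan_greater_than` are hypothetical helpers, not the Parquet API. Skipping works best when the data is sorted or clustered on the filtered column, as it is here.

```python
from dataclasses import dataclass


@dataclass
class RowGroup:
    """Toy stand-in for a Parquet row group: one column's values plus its stats."""
    values: list[int]
    min_value: int
    max_value: int


def write_row_groups(values: list[int], group_size: int) -> list[RowGroup]:
    """Split a column into groups, computing min/max once at write time."""
    groups = []
    for start in range(0, len(values), group_size):
        chunk = values[start:start + group_size]
        groups.append(RowGroup(chunk, min(chunk), max(chunk)))
    return groups


def scan_greater_than(groups: list[RowGroup], threshold: int) -> tuple[list[int], int]:
    """Evaluate `value > threshold`, skipping groups the stats rule out."""
    matches, groups_read = [], 0
    for group in groups:
        if group.max_value <= threshold:
            continue  # push-down: no value in this group can match, so skip it
        groups_read += 1
        matches.extend(v for v in group.values if v > threshold)
    return matches, groups_read


# Hypothetical "age" column, already sorted, written in groups of three.
ages = [18, 22, 25, 30, 33, 39, 41, 47, 52]
groups = write_row_groups(ages, group_size=3)
matches, groups_read = scan_greater_than(groups, threshold=40)
print(matches, groups_read)  # [41, 47, 52] 1
```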

Example of reading a Parquet file in Apache Spark:

```python
# PySpark example
df = spark.read.parquet(path)
```

```scala
// Scala example
val df = spark.read.parquet(path)
```

Apache ORC (Optimized Row Columnar)

- ORC is another columnar format, optimized for the Hadoop ecosystem, especially Hive.
- Pros: ORC has a high compression ratio, is optimized for scan-heavy queries, and supports predicate push-down similar to Parquet.
- Cons: ORC has less support outside the Hadoop ecosystem, which can make it harder to integrate with other tools.
- Use cases: Hive-based data warehouses and HDFS-based systems.
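Much of ORC's compression comes from encoding each column separately (ORC uses run-length and dictionary encodings, among others) before a general-purpose codec is applied. The toy sketch below, which is not ORC's actual encoding, applies a simple run-length encoder to hypothetical data: in the row layout the fields alternate and almost never repeat, while in the columnar layout equal values sit next to each other and collapse into a few runs. The same idea applies to Parquet.

```python
from itertools import groupby


def run_length_encode(values):
    """Collapse consecutive repeats into (value, count) pairs."""
    return [(value, sum(1 for _ in run)) for value, run in groupby(values)]


# Hypothetical table of (country, status) rows.
rows = [("US", "active")] * 4 + [("US", "closed")] * 2 + [("DE", "active")] * 3

# Row layout: fields of different columns alternate, so neighbours rarely repeat.
row_stream = [field for row in rows for field in row]
row_runs = run_length_encode(row_stream)

# Columnar layout: each column is stored contiguously, so repeats sit side by side.
country, status = (list(col) for col in zip(*rows))
country_runs = run_length_encode(country)
status_runs = run_length_encode(status)

print(len(row_runs))  # 18
print(country_runs)   # [('US', 6), ('DE', 3)]
print(status_runs)    # [('active', 4), ('closed', 2), ('active', 3)]
```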

Example of reading an ORC file in Apache Spark:

```python
# PySpark example
df = spark.read.format("orc").load(path)
```

```scala
// Scala example
val df = spark.read.format("orc").load(path)
```

Summary table

| Format | Type | Compression | Predicate Push-down | Best Use Case |
|---|---|---|---|---|
| Parquet | Columnar | Excellent | ✅ Yes | Big data, analytics, selective queries |
| ORC | Columnar | Excellent | ✅ Yes | Hive-based data lakes |
| Avro | Row-based | Good | ❌ No (limited) | Kafka pipelines, schema evolution |
| JSON | Row-based | None built in | ❌ No | Debugging, integration |
| CSV | Row-based | None built in | ❌ No | Legacy systems, ingestion, exploration |

Conclusion

Choosing the right file format in Spark is not just a technical decision; it is a strategic one. Parquet and ORC are solid choices for most modern workloads, but your use case, tools, and ecosystem should guide the final choice.