Prompt Rate Limits & Batching: Your LLM API Has a Speed Limit (Even If Your Product Doesn’t)

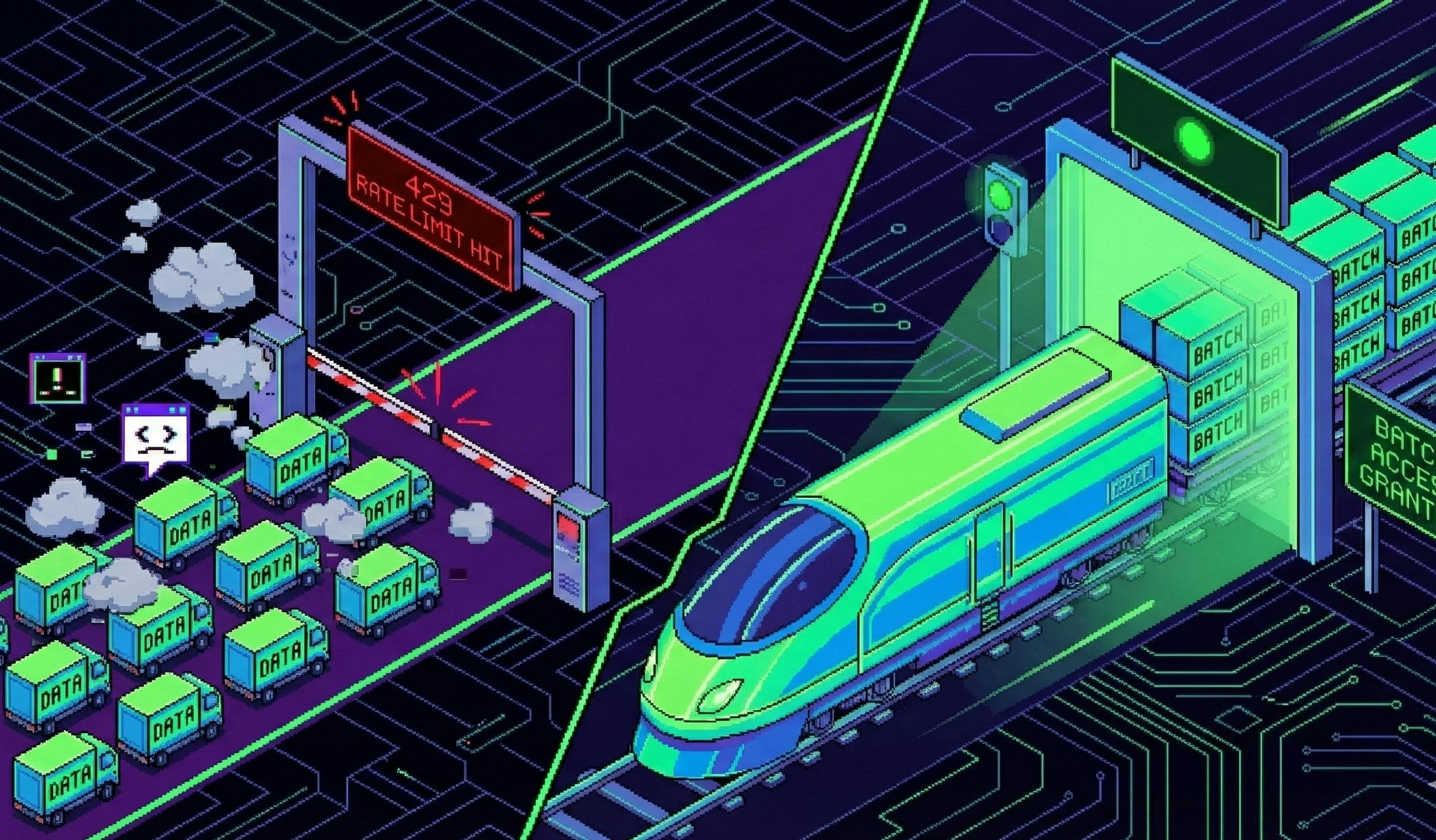

You ship a feature, your traffic spikes, and suddenly your LLM layer starts returning 429s like it’s handing out parking tickets.

The bad news: rate limits are inevitable.

The good news: most LLM “rate limit incidents” are self-inflicted—usually by oversized prompts, bursty traffic, and output formats that are impossible to parse at scale.

This article is a practical playbook for:

- understanding prompt-related throttles,

- avoiding the common failure modes, and

- batching requests without turning your responses into soup.

1) The Three Limits You Actually Hit (And What They Mean)

Different providers name things differently, but the mechanics are consistent:

1.1 Context window (max tokens per request)

If your input tokens plus the output you reserve (the max-tokens setting) exceed the model's context window, the request typically fails immediately, before any generation happens.

Symptoms:

- “Maximum context length exceeded”

- “Your messages resulted in X tokens…”

Fix:

- shorten, summarise, or chunk data.
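A minimal pre-flight sketch, assuming a rough heuristic of about 4 characters per token for English text. That heuristic is an approximation, not a tokenizer, so use your provider's tokenizer or token-counting endpoint when the numbers matter. `estimate_tokens`, `fits_context` and `chunk_text` are hypothetical helper names.

```python
import math
from typing import List

CHARS_PER_TOKEN = 4  # rough heuristic for English text; NOT a real tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate a token count from character length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_context(prompt: str, max_output_tokens: int, context_window: int) -> bool:
    """True if the estimated input plus the reserved output budget fits the window."""
    return estimate_tokens(prompt) + max_output_tokens <= context_window


def chunk_text(text: str, max_tokens_per_chunk: int) -> List[str]:
    """Split on paragraph boundaries so each chunk stays under the estimate.

    A single paragraph longer than the limit is hard-split by characters.
    """
    max_chars = max_tokens_per_chunk * CHARS_PER_TOKEN
    chunks: List[str] = []
    current = ""
    for para in text.split("\n\n"):
        # Hard-split oversized paragraphs so every piece fits on its own.
        pieces = [para[i:i + max_chars] for i in range(0, len(para), max_chars)] or [""]
        for piece in pieces:
            candidate = f"{current}\n\n{piece}" if current else piece
            if len(candidate) <= max_chars:
                current = candidate
            else:
                if current:
                    chunks.append(current)  # close the full chunk
                current = piece
    if current:
        chunks.append(current)
    return chunks
```

Reserving the output budget up front matters: a prompt that only fits when you forget about the answer can still fail or come back truncated.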

1.2 RPM (Requests Per Minute)

You can be under token limits and still get throttled if you burst too many calls. Gemini explicitly documents RPM as a core dimension.

Symptoms:

- “Rate limit reached for requests per minute”

- HTTP 429

Fix:

- client-side pacing, queues, and backoff.
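A minimal sketch of client-side pacing, assuming a single process and a known RPM quota. `RequestPacer` is a hypothetical helper (not a provider API) that spaces request starts `60 / rpm` seconds apart instead of letting them burst. The clock and sleep functions are injectable so it can be tested without real waiting.

```python
import threading
import time
from typing import Callable


class RequestPacer:
    """Spaces request starts evenly so at most `rpm` begin per minute."""

    def __init__(self, rpm: int,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.interval = 60.0 / rpm
        self._clock = clock
        self._sleep = sleep
        self._next_allowed = 0.0
        self._lock = threading.Lock()

    def wait(self) -> float:
        """Block until this caller's slot; return how long it slept."""
        with self._lock:
            now = self._clock()
            delay = max(0.0, self._next_allowed - now)
            # Reserve the slot while holding the lock so threads never share one.
            self._next_allowed = max(now, self._next_allowed) + self.interval
        if delay:
            self._sleep(delay)
        return delay
```

Call `pacer.wait()` immediately before each API request. Because slots are reserved under the lock, ten threads arriving at once get ten staggered start times rather than one burst.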

1.3 TPM / Token throughput limits

Anthropic measures rate limits in RPM + input tokens/minute + output tokens/minute (ITPM/OTPM). Gemini similarly describes token-per-minute as a key dimension.

Symptoms:

- “Rate limit reached for token usage per minute”

- 429 + Retry-After header (Anthropic calls this out)

Fix:

- reduce tokens, batch efficiently, or request higher quota.
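A sketch of a client-side token budget, assuming you can estimate a request's tokens before sending it. `TokenBudget` is a hypothetical helper that keeps a sliding 60-second window of usage; if your provider meters input and output tokens separately (as with ITPM/OTPM), one instance per dimension is a reasonable starting point.

```python
import collections
import time
from typing import Callable, Deque, Tuple


class TokenBudget:
    """Sliding 60-second window of token usage against a per-minute limit."""

    def __init__(self, tpm: int, clock: Callable[[], float] = time.monotonic) -> None:
        self.tpm = tpm
        self._clock = clock
        self._events: Deque[Tuple[float, int]] = collections.deque()
        self._used = 0

    def _expire(self, now: float) -> None:
        # Drop usage recorded 60 or more seconds ago.
        while self._events and now - self._events[0][0] >= 60:
            _, n = self._events.popleft()
            self._used -= n

    def wait_time(self, tokens: int) -> float:
        """Seconds to wait before sending a request of about `tokens` tokens."""
        if tokens > self.tpm:
            raise ValueError("request exceeds the per-minute budget on its own; split it")
        now = self._clock()
        self._expire(now)
        excess = self._used + tokens - self.tpm
        if excess <= 0:
            return 0.0
        # Walk the oldest usage until enough will have expired to make room.
        freed = 0
        for ts, n in self._events:
            freed += n
            if freed >= excess:
                return ts + 60 - now
        return 0.0  # unreachable while tokens <= tpm

    def record(self, tokens: int) -> None:
        """Record usage once the request has been sent."""
        now = self._clock()
        self._expire(now)
        self._events.append((now, tokens))
        self._used += tokens
```

Estimates drift, so recording the actual usage reported in each response (instead of the estimate) keeps the window honest.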

2) The Most Common “Prompt Limit” Failure Patterns

2.1 The “one prompt to rule them all” anti-pattern

You ask for:

- extraction

- classification

- rewriting

- validation

- formatting

- business logic

…in a single request, and then you wonder why token usage spikes.

Split the workflow. If you need multi-step logic, use Prompt Chaining (small prompts with structured intermediate outputs).
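A minimal chaining sketch, assuming `model` is any function that takes a prompt and returns text (a hypothetical stand-in for your provider call). Step 1 only extracts fields as JSON; step 2 only sees that compact JSON, not the raw listing, so each prompt stays small and each intermediate result can be validated before the next call. The category labels are illustrative.

```python
import json
from typing import Callable, Dict

ModelFn = Callable[[str], str]  # hypothetical: prompt in, text out


def extract_fields(model: ModelFn, raw_listing: str) -> Dict[str, str]:
    """Step 1: a small prompt that only extracts structured fields."""
    prompt = (
        "Extract product_name, material, features as a JSON object. "
        "JSON only.\n\nLISTING:\n" + raw_listing
    )
    return json.loads(model(prompt))


def classify_category(model: ModelFn, fields: Dict[str, str]) -> str:
    """Step 2: a small prompt that sees only step 1's structured output."""
    prompt = (
        "Reply with one word from: kitchen, audio, lighting, other.\n"
        + json.dumps(fields, sort_keys=True)
    )
    return model(prompt).strip().lower()


def run_chain(model: ModelFn, raw_listing: str) -> Dict[str, str]:
    fields = extract_fields(model, raw_listing)
    fields["category"] = classify_category(model, fields)
    return fields
```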

2.2 Bursty traffic (the silent RPM killer)

Production traffic is spiky. Cron jobs, retries, user clicks, webhook bursts—everything aligns in the worst possible minute.

If your client sends requests like a machine gun, your provider will respond like a bouncer.

2.3 Unstructured output = expensive parsing

If your output is “kinda JSON-ish”, your parser becomes a full-time therapist.

Make the model output strict JSON or a fixed table. Treat format as a contract.
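One way to enforce that contract, sketched with the standard library only and assuming the `task_id` / `title` / `bullets` item shape used in the batching template later in this article. A schema library such as `jsonschema` or `pydantic` would do the same job; `validate_items` is a hypothetical name.

```python
import json
from typing import Any, Dict, List


def validate_items(text: str) -> List[Dict[str, Any]]:
    """Parse model output and enforce the item contract; raise ValueError on drift."""
    data = json.loads(text)  # json.JSONDecodeError is a ValueError subclass
    if not isinstance(data, list):
        raise ValueError("top level must be a JSON array")
    for i, item in enumerate(data):
        if not isinstance(item, dict):
            raise ValueError(f"item {i} is not an object")
        if set(item) != {"task_id", "title", "bullets"}:
            raise ValueError(f"item {i} has unexpected keys {sorted(item)}")
        # bool is a subclass of int in Python, so exclude it explicitly.
        if not isinstance(item["task_id"], int) or isinstance(item["task_id"], bool):
            raise ValueError(f"item {i}: task_id must be an int")
        if not isinstance(item["title"], str):
            raise ValueError(f"item {i}: title must be a string")
        bullets = item["bullets"]
        if not isinstance(bullets, list) or not all(isinstance(b, str) for b in bullets):
            raise ValueError(f"item {i}: bullets must be a list of strings")
    return data
```

Anything that fails here is a format problem, not a content problem, so it can be logged and repaired rather than silently half-parsed.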

3) Rate Limit Survival Kit (Compliant, Practical, Boring)

3.1 Prompt-side: shrink tokens without losing signal

- Delete marketing fluff (models don’t need your company origin story).

- Convert repeated boilerplate into a short “policy block” and reuse it.

- Prefer fields over prose (“material=316 stainless steel” beats a paragraph).

A tiny prompt rewrite that can often save 30–50%

Before (chatty):

“We’re a smart home brand founded in 2010… please write 3 marketing lines…”

After (dense + precise):

“Write 3 UK e-commerce lines. Product: smart bulb. Material=PC flame-retardant. Feature=3 colour temperatures. Audience=living room.”
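If prompts are generated from product data, the dense style is easy to produce directly. A sketch, where `dense_prompt` is a hypothetical helper: it renders attributes as `Key=value.` pairs and drops empty ones instead of padding the prompt with blanks.

```python
from typing import Dict


def dense_prompt(instruction: str, product: str, attributes: Dict[str, str]) -> str:
    """Build a fields-over-prose prompt; empty attributes are dropped, not padded."""
    attrs = [f"{key}={value}." for key, value in attributes.items() if value]
    return " ".join([instruction, f"Product: {product}.", *attrs])


prompt = dense_prompt(
    "Write 3 UK e-commerce lines.",
    "smart bulb",
    {"Material": "PC flame-retardant", "Feature": "3 colour temperatures", "Audience": "living room"},
)
# prompt == "Write 3 UK e-commerce lines. Product: smart bulb. Material=PC flame-retardant.
#            Feature=3 colour temperatures. Audience=living room."
```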

3.2 Request-side: back off like an adult

If the provider returns Retry-After, respect it. Anthropic explicitly returns Retry-After on 429s.

Use exponential backoff + jitter:

- attempt 1: 1s

- attempt 2: 2–3s

- attempt 3: 4–6s

- then fail gracefully
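The schedule above can be computed rather than hard-coded. A sketch, assuming 1-based attempt numbers and a hypothetical `MAX_ATTEMPTS = 3`: the base delay doubles each attempt, jitter adds up to 50% on top from the second attempt onwards (roughly 1s, 2–3s, 4–6s), a Retry-After value overrides the computed delay, and `None` means stop and fail gracefully.

```python
import random
from typing import Optional

MAX_ATTEMPTS = 3  # hypothetical cap; after this, surface the error


def backoff_delay(attempt: int, retry_after: Optional[float] = None,
                  rng: Optional[random.Random] = None) -> Optional[float]:
    """Seconds to wait before retry number `attempt` (1-based), or None to give up."""
    if attempt > MAX_ATTEMPTS:
        return None  # fail gracefully: let the caller handle the error
    if retry_after is not None:
        return retry_after  # the server's hint wins over our schedule
    rng = rng or random.Random()
    base = 2 ** (attempt - 1)  # 1, 2, 4, ...
    jitter = 0.0 if attempt == 1 else rng.uniform(0, base / 2)
    return base + jitter
```

The jitter is what keeps a fleet of clients that were throttled in the same second from retrying in the same second too.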

3.3 System-side: queue + concurrency caps

If your account supports 10 concurrent requests, do not run 200 coroutines and “hope”.

Use:

- a work queue

- a semaphore for concurrency

- and a rate limiter for RPM/TPM
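A minimal `asyncio` sketch of the queue and the concurrency cap, assuming `call` is your own async wrapper around the provider SDK (hypothetical here). `asyncio.Queue` holds the work, a fixed pool of workers drains it, and `asyncio.Semaphore` is what actually limits in-flight calls, so the cap still holds if other code paths share the same semaphore. An RPM/TPM limiter would sit just before `await call(...)`; it is omitted to keep the sketch short.

```python
import asyncio
from typing import Awaitable, Callable, List, Optional, Tuple

CallFn = Callable[[str], Awaitable[str]]  # hypothetical async provider wrapper


async def run_queue(items: List[str], call: CallFn,
                    max_concurrency: int = 10, num_workers: int = 20) -> List[Optional[str]]:
    """Drain a work queue with a worker pool; a semaphore caps in-flight calls."""
    queue: "asyncio.Queue[Tuple[int, str]]" = asyncio.Queue()
    for index, item in enumerate(items):
        queue.put_nowait((index, item))
    semaphore = asyncio.Semaphore(max_concurrency)
    results: List[Optional[str]] = [None] * len(items)

    async def worker() -> None:
        while True:
            try:
                index, item = queue.get_nowait()
            except asyncio.QueueEmpty:
                return  # queue drained: this worker exits
            async with semaphore:  # at most max_concurrency calls in flight
                results[index] = await call(item)

    await asyncio.gather(*(worker() for _ in range(num_workers)))
    return results
```

Retries and backoff belong inside `call`, so an exception that reaches `gather` means a task genuinely failed.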

4) Batching: The Fastest Way to Cut Calls, Cost, and 429s

Batching means: one API request handles multiple independent tasks.

It works best when tasks are:

- same type (e.g., 20 product blurbs)

- independent (no step depends on another)

- same output schema

Why it helps

- fewer network round-trips

- fewer requests → lower RPM pressure

- more predictable throughput
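A back-of-the-envelope comparison makes the effect concrete. This sketch assumes each request repeats a fixed block of shared instructions and schema (`shared_tokens`) plus per-item data (`per_item_tokens`); the numbers in the example are illustrative, not measurements. Output tokens are left out because every item still needs its own answer either way.

```python
import math
from typing import Dict


def request_plan(num_items: int, batch_size: int,
                 shared_tokens: int, per_item_tokens: int) -> Dict[str, int]:
    """Compare one-call-per-item with batching on request count and input tokens."""
    batches = math.ceil(num_items / batch_size)
    return {
        "requests_unbatched": num_items,
        "requests_batched": batches,
        # Unbatched: the shared instructions are resent with every item.
        "input_tokens_unbatched": num_items * (shared_tokens + per_item_tokens),
        # Batched: the shared instructions are sent once per batch.
        "input_tokens_batched": batches * shared_tokens + num_items * per_item_tokens,
    }


plan = request_plan(num_items=200, batch_size=20, shared_tokens=300, per_item_tokens=60)
# 200 requests -> 10 requests; 72,000 input tokens -> 15,000 input tokens
```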

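Also: OpenAI’s pricing pages include a separate “Batch API” price for several models. That price applies to OpenAI’s asynchronous Batch API (you submit many requests as one job and collect the results later), which is a different mechanism from packing several tasks into one prompt. It doesn’t mean prompt-level batching is free, but it’s a strong hint the ecosystem expects batched workloads.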

5) The Batching Prompt Template That Doesn’t Fall Apart

Here’s a format that stays parseable under pressure.

5.1 Use task blocks + a strict JSON response schema

```text
SYSTEM: You output valid JSON only. No Markdown. No commentary.

USER:
You will process multiple tasks.

Return a JSON array. Each item must be:
{
  "task_id": <int>,
  "title": <string>,
  "bullets": [<string>, <string>, <string>]
}

Rules:
- UK English spelling
- Title ≤ 12 words
- 3 bullets, each ≤ 18 words
- If input is missing: set title="INSUFFICIENT_DATA" and bullets=[]

TASKS:

### TASK 1
product_name: Insulated smart mug
material: 316 stainless steel
features: temperature alert, 7-day battery
audience: commuters

### TASK 2
product_name: Wireless earbuds
material: ABS shock-resistant
features: ANC, 24-hour battery
audience: students
```

That “INSUFFICIENT_DATA” clause is your lifesaver. One broken task shouldn’t poison the whole batch.
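After parsing, it helps to reconcile the output against the task IDs you sent, so one problem task is handled on its own. A sketch, where `reconcile` and `BatchReport` are hypothetical names and the routing policy is an assumption: missing or duplicated IDs are re-run individually, `INSUFFICIENT_DATA` items go back for a data fix (retrying them won't help), and IDs you never sent are ignored.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class BatchReport:
    ok: Dict[int, Dict[str, Any]] = field(default_factory=dict)
    insufficient: List[int] = field(default_factory=list)  # fix the input data
    retry: List[int] = field(default_factory=list)  # missing or ambiguous output


def reconcile(task_ids: List[int], items: List[Dict[str, Any]]) -> BatchReport:
    counts: Dict[Any, int] = {}
    by_id: Dict[Any, Dict[str, Any]] = {}
    for item in items:
        tid = item.get("task_id")
        counts[tid] = counts.get(tid, 0) + 1
        by_id[tid] = item
    report = BatchReport()
    for tid in task_ids:  # only IDs we actually sent; invented IDs are ignored
        item = by_id.get(tid)
        if item is None or counts[tid] > 1:
            report.retry.append(tid)
        elif item.get("title") == "INSUFFICIENT_DATA":
            report.insufficient.append(tid)
        else:
            report.ok[tid] = item
    return report
```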

6) Python Implementation: Batch → Call → Parse (With Guardrails)

Below is a modern-ish pattern you can adapt (provider SDKs vary, so treat it as structure, not a copy‑paste guarantee).

```python
import json
import random
import time
from typing import Any, Dict, List, Optional

MAX_RETRIES = 4


class RateLimitError(Exception):
    """Hypothetical: raise this from call_llm when your SDK reports HTTP 429."""

    def __init__(self, message: str, retry_after: Optional[float] = None) -> None:
        super().__init__(message)
        self.retry_after = retry_after  # seconds from the Retry-After header, if any


def backoff_sleep(attempt: int, retry_after: Optional[float] = None) -> None:
    # The server's Retry-After hint always wins.
    if retry_after is not None:
        time.sleep(retry_after)
        return
    # Exponential backoff (1s, 2s, 4s, ...) plus up to 1s of jitter, capped at 10s.
    base = 2 ** attempt
    jitter = random.random()
    time.sleep(min(10, base + jitter))


def build_batch_prompt(tasks: List[Dict[str, Any]]) -> str:
    header = (
        "You output valid JSON only. No Markdown. No commentary.\n\n"
        "Return a JSON array. Each item must be:\n"
        "{\n  \"task_id\": <int>,\n  \"title\": <string>,\n  \"bullets\": [<string>, <string>, <string>]\n}\n\n"
        "Rules:\n"
        "- UK English spelling\n"
        "- Title ≤ 12 words\n"
        "- 3 bullets, each ≤ 18 words\n"
        "- If input is missing: set title=\"INSUFFICIENT_DATA\" and bullets=[]\n\n"
        "TASKS:\n"
    )
    blocks = []
    for t in tasks:
        blocks.append(
            f"### TASK {t['task_id']}\n"
            f"product_name: {t.get('product_name', '')}\n"
            f"material: {t.get('material', '')}\n"
            f"features: {t.get('features', '')}\n"
            f"audience: {t.get('audience', '')}\n"
        )
    return header + "\n".join(blocks)


def parse_json_strict(text: str) -> List[Dict[str, Any]]:
    # Hard fail if it's not a JSON array. This is intentional.
    data = json.loads(text)
    if not isinstance(data, list):
        raise ValueError("Expected a JSON array")
    return data


def call_llm(prompt: str) -> str:
    """Return the model's text. Replace with your provider call; raise RateLimitError on 429."""
    raise NotImplementedError


def run_batch(tasks: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    prompt = build_batch_prompt(tasks)
    last_error: Optional[Exception] = None
    for attempt in range(MAX_RETRIES):
        try:
            raw_text = call_llm(prompt)
        except RateLimitError as e:
            # Throttled: wait (honouring Retry-After if the SDK exposed it), then retry.
            last_error = e
            backoff_sleep(attempt, e.retry_after)
            continue
        try:
            return parse_json_strict(raw_text)
        except ValueError as e:  # json.JSONDecodeError subclasses ValueError
            # Not a throttle, so no sleep: ask the model to repair the formatting.
            last_error = e
            prompt = (
                "Fix the output into valid JSON only. Preserve meaning.\n\n"
                f"BAD_OUTPUT:\n{raw_text}"
            )
    raise RuntimeError(f"Batch failed after retries: {last_error}")
```

What changed vs “classic” snippets?

- We treat JSON as a hard contract.

- We handle format repair explicitly (and keep it cheap).

- We centralise backoff logic so every call behaves the same way.

7) How to Choose Batch Size (The Rule Everyone Learns the Hard Way)

Batch size is constrained by:

- context window (max tokens per request)

- TPM throughput

- response parsing stability

- your business tolerance for “one batch failed”

A practical heuristic:

- start with 10–20 items per batch

- measure token usage

- increase until you see:

  - output format drift, or

  - timeouts / latency spikes, or

  - context overflow risk

And always keep a max batch token budget.
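A greedy splitter that respects both an item cap and a token budget might look like this. It is a sketch assuming the same rough 4-characters-per-token estimate as a stand-in for a real tokenizer; `make_batches` is a hypothetical name, `header_tokens` covers the shared instructions sent with every batch, and `max_input_tokens` should sit below the context window minus the output you reserve.

```python
import math
from typing import List

CHARS_PER_TOKEN = 4  # rough heuristic, standing in for a real tokenizer


def est_tokens(text: str) -> int:
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def make_batches(task_texts: List[str], header_tokens: int, max_items: int,
                 max_input_tokens: int) -> List[List[int]]:
    """Greedily group task indexes so every batch respects both caps."""
    batches: List[List[int]] = []
    current: List[int] = []
    used = header_tokens
    for i, text in enumerate(task_texts):
        cost = est_tokens(text)
        if header_tokens + cost > max_input_tokens:
            raise ValueError(f"task {i} alone exceeds the batch token budget")
        if current and (len(current) >= max_items or used + cost > max_input_tokens):
            batches.append(current)  # close this batch, start a new one
            current, used = [], header_tokens
        current.append(i)
        used += cost
    if current:
        batches.append(current)
    return batches
```

This is also the "auto-splitting" half of the fix for oversized batches: a batch never grows past the budget, and a single task that cannot fit is reported instead of silently overflowing.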

8) “Cost Math” Without Fantasy Numbers

Pricing changes. Tiers change. Models change.

So instead of hard-coding ancient per-1K token values, calculate cost using the provider’s current pricing page.

OpenAI publishes per‑token pricing on its API pricing pages. Anthropic also publishes pricing and documents rate limit tiers.

A useful cost estimator:

cost ≈ (input_tokens * input_price + output_tokens * output_price) / 1,000,000

where input_price and output_price are the provider's current prices per 1M tokens.
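The same estimator as a function. The prices in the example are made-up placeholders for illustration, not any provider's actual rates; plug in the numbers from the current pricing page.

```python
def estimate_cost(input_tokens: int, output_tokens: int,
                  input_price_per_m: float, output_price_per_m: float) -> float:
    """Estimated cost in the pricing currency; prices are per 1M tokens."""
    return (input_tokens * input_price_per_m
            + output_tokens * output_price_per_m) / 1_000_000


# Placeholder prices: 1.00 per 1M input tokens, 4.00 per 1M output tokens.
cost = estimate_cost(10_000, 2_000, input_price_per_m=1.00, output_price_per_m=4.00)
# cost is about 0.018
```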

Then optimise the variables you control:

- shrink input tokens

- constrain output tokens

- reduce number of calls (batch)

9) Risks of Batching (And How to Not Get Burnt)

Risk 1: one bad item ruins the batch

Fix: “INSUFFICIENT_DATA” fallback per task.

Risk 2: output format drift breaks parsing

Fix: strict JSON, repair step, and logging.

Risk 3: batch too big → context overflow

Fix: token budgeting + auto-splitting.

Risk 4: “creative” attempts to bypass quotas

Fix: don’t. If you need more capacity, request higher limits and follow provider terms.

Final Take

Rate limits aren’t the enemy. They’re your early warning system that:

- prompts are too long,

- traffic is too bursty,

- or your architecture assumes “infinite throughput”.

If you treat prompts like payloads (not prose), add pacing, and batch like a grown-up, you’ll get:

- fewer 429s

- lower cost

- and a system that scales without drama

That’s the whole game.