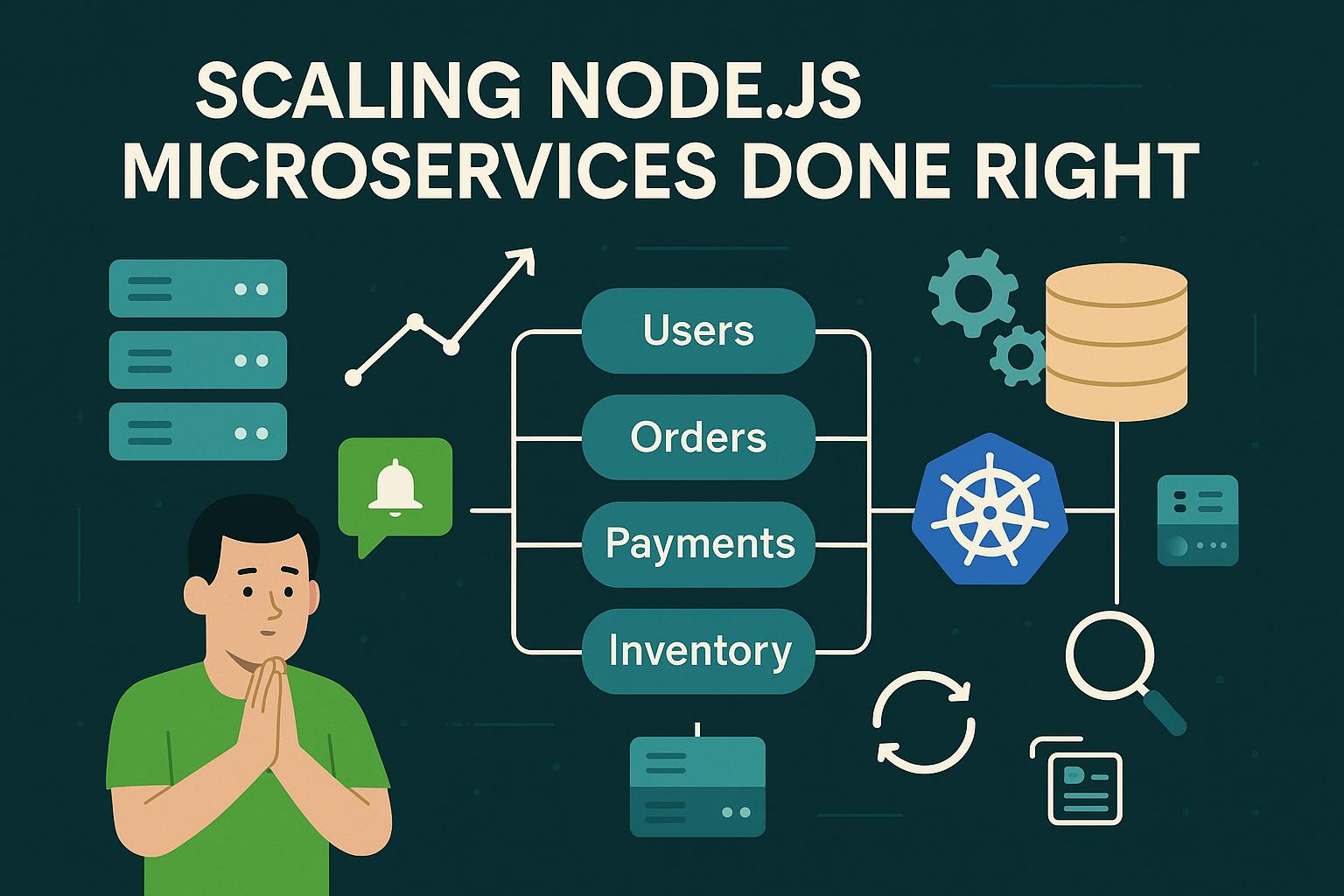

Your Node.js service is buckling under rising RPS (requests per second), and you are praying that PagerDuty doesn’t page you. Microservices can come to the rescue, as long as you don’t fall for the common JavaScript traps. In this guide, I’ll walk through some scalability concepts using actual JS implementations, starting with service decomposition.

1. Service Decomposition: The Art of Breaking Monoliths

The Issue: The “God Service” Trap

Consider an all-encompassing Express app that lumps together users, orders, payments, and inventory. It works... until the payment code throws an unhandled error and crashes the process, taking user logins down with it.

```js
// 🚫 Monolithic disaster (app.js)
const express = require('express');
const app = express();

// User routes
app.post('/users', (req, res) => { /* ... */ });

// Order routes
app.post('/orders', (req, res) => {
  // Checks inventory, processes payment, updates user history...
});

// Payment routes
app.post('/payments', (req, res) => { /* ... */ });

app.listen(3000);
```

The Solution: Domain-Driven Design (DDD) for Express

Divide into multiple services:

- User Service (user-service/index.js):

```js
const express = require('express');
const app = express();

app.post('/users', (req, res) => { /* ... */ });

app.listen(3001);
```

- Order Service (order-service/index.js):

```js
const express = require('express');
const app = express();

app.post('/orders', (req, res) => { /* ... */ });

app.listen(3002);
```

Advantages:

- Isolated Failures: Payment service outage won’t lead to user login failures.

- Independent Scaling: During sales, more pods can be added to the order service.

Disadvantages:

- Network Latency: Services now talk to each other over HTTP, so every call can be slow or time out.

- DevOps Complexity: Instead of one deployment, you now have four to build, deploy, and monitor.

2. Communication: Escape Synchronous Call Hell

The Problem: Timeout After Timeout

Inside the order service, calls to the user service and the payment service are made synchronously, one after the other. A single slow response stalls the entire flow.

```js
// 🚫 Order service (order-service/index.js)
const axios = require('axios');

app.post('/orders', async (req, res) => {
  // Call user service and wait for it (axios puts the response body on .data)
  const { data: user } = await axios.get('http://user-service:3001/users/123');

  // Call payment service and wait again; neither call has a timeout
  const payment = await axios.post('http://payment-service:3003/payments', {
    userId: user.id,
    amount: 100
  });
  // ...
});
```
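One way to keep a slow dependency from stalling the handler above is to put an upper bound on every call. The helper below is a minimal sketch in plain JavaScript; `withTimeout` and its `label` parameter are hypothetical names, not part of axios. It only stops waiting and does not cancel the underlying request (axios also accepts a `timeout` option in its request config, which is usually the simpler choice).

```javascript
// Reject if `promise` has not settled within `ms` milliseconds.
function withTimeout(promise, ms, label = 'operation') {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(
      () => reject(new Error(`${label} timed out after ${ms} ms`)),
      ms
    );
  });
  // Whichever settles first wins; always clear the timer afterwards.
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}

// Hypothetical usage inside the order handler:
// const { data: user } = await withTimeout(
//   axios.get('http://user-service:3001/users/123'), 500, 'user-service');
```

With a bound like this, a hung user service turns into a fast, explicit error that the handler can catch instead of an open-ended wait.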

The Solution: Asynchronous Messaging with RabbitMQ

Use a message broker to decouple the services:

- The Order Service publishes an order.created event.

- The Payment Service consumes the event and processes the user’s payment.

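```js
// Order Service (publish event)
const amqp = require('amqplib');

// Connect once at startup and reuse the channel for every publish
const channelReady = (async () => {
  const conn = await amqp.connect('amqp://localhost');
  const channel = await conn.createChannel();
  await channel.assertExchange('orders', 'topic', { durable: true });
  return channel;
})();

async function publishOrderCreated(order) {
  const channel = await channelReady;
  const body = Buffer.from(JSON.stringify(order));
  // persistent: true asks the broker to store the message on disk
  channel.publish('orders', 'order.created', body, { persistent: true });
}

app.post('/orders', async (req, res) => {
  const order = await createOrder(req.body);
  await publishOrderCreated(order); // Hands the event to the broker; payment happens later
  res.status(202).json({ status: 'processing' });
});
```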

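```js
// Payment Service (consume event)
const amqp = require('amqplib');

async function consumeOrders() {
  const conn = await amqp.connect('amqp://localhost');
  const channel = await conn.createChannel();
  await channel.assertExchange('orders', 'topic', { durable: true });

  // A named, durable queue keeps messages while this service is down
  // (the queue name is illustrative)
  const { queue } = await channel.assertQueue('payment.order-created', { durable: true });
  await channel.bindQueue(queue, 'orders', 'order.created');

  await channel.consume(queue, async (msg) => {
    if (msg === null) return; // Consumer was cancelled by the broker
    try {
      const order = JSON.parse(msg.content.toString());
      await processPayment(order);
      channel.ack(msg); // Acknowledge only after the payment has been processed
    } catch (err) {
      channel.nack(msg, false, true); // Requeue so the message is attempted again
    }
  });
}

consumeOrders().catch(console.error);
```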

Pros:

- Decoupled Services: Payment service is down? Not a problem; messages stack up in the queue and get processed once it is back.

- Faster Responses: The order service returns a 202 right away instead of waiting for the payment to complete.

Cons:

- Added Complexity: There is now a broker to run and monitor, and the order and payment services have to agree on event formats.

- Difficult Debugging: Following a payment failure through the queues can require something like RabbitMQ’s management interface.

- Delayed Failures: Because the order service has already answered with a 202, a failed payment only shows up after the event has been processed.
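One common way to soften the debugging problem noted above is to stamp every event with a correlation ID, so that log lines from different services can be joined later. The sketch below is only an illustration: the envelope fields (`eventId`, `correlationId`, `occurredAt`) and the `payment.failed` event name are assumptions, not something the services above already define.

```javascript
const { randomUUID } = require('node:crypto');

// Wrap a payload in an envelope that carries a correlation ID.
// Field names are illustrative, not a standard.
function buildEvent(type, payload, correlationId = randomUUID()) {
  return {
    eventId: randomUUID(),
    type,
    correlationId,
    occurredAt: new Date().toISOString(),
    payload
  };
}

// A follow-up event reuses the correlation ID of the event that caused it.
function buildFollowUp(cause, type, payload) {
  return buildEvent(type, payload, cause.correlationId);
}

const created = buildEvent('order.created', { orderId: 'o-1', amount: 100 });
const failed = buildFollowUp(created, 'payment.failed', { orderId: 'o-1' });
console.log(created.correlationId === failed.correlationId); // true
```

If each service logs the `correlationId` alongside its own messages, a single search for that ID shows the whole path of one order across the queues.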

3. Data Management: Don’t Share Databases

The Problem: Coupled Database

All microservices read and write one shared PostgreSQL orders table. Although the everything-in-one-place approach seems elegant, a schema change made for the inventory microservice can break the order service.

The Fix: Each Service Owns Its Database + Event Sourcing

- Order Service: Owns the orders table; no other service touches it.

- Inventory Service: Keeps a separate datastore, e.g. Redis for stock counts.

Example: Event Sourcing for Consistency

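```js
// Order Service saves events
const { OrderEvent } = require('./models');

async function createOrder(orderData) {
  // Append the event and return it; events are never updated in place
  return OrderEvent.create({
    type: 'ORDER_CREATED',
    payload: orderData
  });
}

// Materialized view for queries
const { Order } = require('./models');

async function rebuildOrderView() {
  // Load events oldest-first (assumes a Sequelize-style model with createdAt)
  const events = await OrderEvent.findAll({ order: [['createdAt', 'ASC']] });

  // Replay events to build current state, keyed by order id
  // (assumes each payload carries an id)
  const orders = events.reduce((acc, event) => {
    if (event.type === 'ORDER_CREATED') {
      acc[event.payload.id] = { ...event.payload };
    }
    return acc; // The reducer must return the accumulator
  }, {});

  // bulkCreate expects an array of rows, not an object
  await Order.bulkCreate(Object.values(orders));
}
```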
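The replay step is easier to see without a database in the way. The following is a minimal in-memory sketch, assuming each payload carries an `id`; `ORDER_CANCELLED` and the `status` field are hypothetical additions used only to show that later events change the state produced by earlier ones.

```javascript
// Apply one event to the current view and return the new view.
// ORDER_CANCELLED is a hypothetical second event type, added for illustration.
function applyEvent(view, event) {
  switch (event.type) {
    case 'ORDER_CREATED':
      return { ...view, [event.payload.id]: { ...event.payload, status: 'CREATED' } };
    case 'ORDER_CANCELLED': {
      const existing = view[event.payload.id];
      if (!existing) return view; // Ignore cancellations for unknown orders
      return { ...view, [event.payload.id]: { ...existing, status: 'CANCELLED' } };
    }
    default:
      return view; // Unknown event types leave the view untouched
  }
}

// Replaying the full log from an empty view yields the current state.
function replay(events) {
  return events.reduce(applyEvent, {});
}

const log = [
  { type: 'ORDER_CREATED', payload: { id: 'o-1', amount: 100 } },
  { type: 'ORDER_CREATED', payload: { id: 'o-2', amount: 40 } },
  { type: 'ORDER_CANCELLED', payload: { id: 'o-1' } }
];

// o-1 ends up CANCELLED, o-2 stays CREATED
console.log(replay(log));
```

Because `applyEvent` never mutates its input, the same log always produces the same view, which is what makes the view safe to throw away and rebuild.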

Pros:

- Audit Log: Every single change of state is recorded as an event.

- Rebuildable: Views can be reconstructed from the event log when requirements change.

Cons:

- Architectural Complexity: You also need a mechanism for replaying events.

- Increased Storage Cost: The event log only grows, and with millions of events both storage and replay time can become a problem.

4. Deployment: Auto-Scale with Kubernetes

The Problem: Scaling Manually at 3 in the Morning

At peak traffic you are logging in to EC2 instances and scaling payment-service by hand, one instance at a time, with a process manager such as pm2.

The Fix: A Kubernetes Deployment + Horizontal Pod Autoscaler

Define a deployment.yaml for the payment service, with the autoscaler alongside it:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: payment-service
spec:
  replicas: 2
  # apps/v1 requires a selector that matches the pod template labels
  selector:
    matchLabels:
      app: payment-service
  template:
    metadata:
      labels:
        app: payment-service
    spec:
      containers:
        - name: payment
          image: your-registry/payment-service:latest
          ports:
            - containerPort: 3003
          resources:
            requests:
              cpu: "100m" # HPA utilization is measured against this request
            limits:
              cpu: "200m"
---
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: payment-service
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: payment-service
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70
```
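To get a feel for what the autoscaler above will do, it helps to run the numbers. The function below is a simplified sketch of the documented scaling rule, desired = ceil(current × currentMetric / targetMetric), clamped to the min/max replicas; `desiredReplicas` is a hypothetical helper, and the real controller additionally applies a tolerance band and stabilization windows that are left out here.

```javascript
// Simplified HPA rule: desired = ceil(current * currentMetric / targetMetric),
// clamped to [min, max]. Utilization is a percentage of the CPU request.
function desiredReplicas({ current, currentUtilization, targetUtilization, min, max }) {
  const raw = Math.ceil((current * currentUtilization) / targetUtilization);
  return Math.min(max, Math.max(min, raw));
}

const hpa = { targetUtilization: 70, min: 2, max: 10 };

// 2 pods averaging 140% of their CPU request: scale out to 4
console.log(desiredReplicas({ ...hpa, current: 2, currentUtilization: 140 })); // 4

// 4 pods averaging 20%: scale in, but never below minReplicas
console.log(desiredReplicas({ ...hpa, current: 4, currentUtilization: 20 })); // 2

// 8 pods averaging 210%: the raw answer is 24, capped at maxReplicas
console.log(desiredReplicas({ ...hpa, current: 8, currentUtilization: 210 })); // 10
```

Note that with a 100m request, a 70% target means roughly 70m of CPU per pod on average, so the request value directly controls how early scaling kicks in.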

Pros:

- Self-Healing: When containers crash, Kubernetes restarts them automatically.

- Cost Savings: Scale down at night when there is little traffic.

Cons:

- YAML Overload: Configuration becomes the new mess.

- Cold Starts: New pods take a while to initialize, so scaling lags behind a sudden spike.

5. Observability: Logs, Traces, and Metrics

The Problem: “Payment Service Is Slow.”

Without logs, you are left guessing where the failure occurs.

The Fix: Winston + OpenTelemetry

```js
// Logging with Winston (payment-service/logger.js)
const winston = require('winston');

const logger = winston.createLogger({
  level: 'info',
  format: winston.format.json(),
  transports: [
    new winston.transports.File({ filename: 'error.log', level: 'error' }),
    new winston.transports.Console()
  ]
});

// In your route handler
app.post('/payments', async (req, res) => {
  logger.info('Processing payment', { userId: req.body.userId });
  // ...
});
```

Distributed Tracing with OpenTelemetry:

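```js
// Tracing setup; load this before the rest of the app.
// Written against the 1.x SDK API (addSpanProcessor); newer SDK versions
// configure span processors differently, so check the version you install.
const { NodeTracerProvider } = require('@opentelemetry/sdk-trace-node');
const { SimpleSpanProcessor } = require('@opentelemetry/sdk-trace-base');
const { JaegerExporter } = require('@opentelemetry/exporter-jaeger');

const provider = new NodeTracerProvider();

// SimpleSpanProcessor exports each span as soon as it ends
provider.addSpanProcessor(
  new SimpleSpanProcessor(new JaegerExporter({ endpoint: 'http://jaeger:14268/api/traces' }))
);

// Make this provider the global tracer provider
provider.register();
```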

Pros:

- Trace Flows: See how a request moves through the services.

- Error Context: Logs contain user ID, order IDs etc.

Cons:

- Performance Hit: Added overhead from tracing.

- Tool Sprawl: Jaeger, Prometheus, Grafana. So many tools.
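Before reaching for the full toolchain, a complaint like “payment service is slow” can often be narrowed down with plain timing records. The wrapper below is a minimal sketch using only Node.js built-ins; `timed` and its `sink` parameter are hypothetical names, and in the payment service the sink could simply be the Winston logger shown earlier.

```javascript
// Wrap an async function so each call emits one structured timing record.
// `sink` receives the record; by default it is printed as a JSON line.
function timed(name, fn, sink = (rec) => console.log(JSON.stringify(rec))) {
  return async (...args) => {
    const start = process.hrtime.bigint();
    let outcome = 'ok';
    try {
      return await fn(...args);
    } catch (err) {
      outcome = 'error';
      throw err; // Timing must not swallow the failure
    } finally {
      const durationMs = Number(process.hrtime.bigint() - start) / 1e6;
      sink({ name, outcome, durationMs });
    }
  };
}

// Hypothetical usage:
// const chargeCard = timed('payment.charge', rawChargeCard,
//   (rec) => logger.info('timing', rec));
```

Records like these are the raw material for latency metrics: aggregated per `name`, they show which step is actually slow.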

6. Fault Tolerance: Circuit Breakers & Retries

The Problem: Cascading Failures

The user service dies, yet the order service keeps calling it in the hope of eventually succeeding, potentially DoS-ing itself in the process.

The Fix: cockatiel for Circuit Breakers and Retry Policies

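```js
// Uses the cockatiel v3 API
const axios = require('axios');
const {
  circuitBreaker, handleAll, retry, wrap,
  ExponentialBackoff, SamplingBreaker
} = require('cockatiel');

// Circuit breaker: stop calling a failing service
const breaker = circuitBreaker(handleAll, {
  halfOpenAfter: 10_000, // Allow a probe call 10 s after opening
  breaker: new SamplingBreaker({
    threshold: 0.5, // 50% failure rate trips the breaker
    duration: 30_000 // ...measured over a 30 s window
  })
});

// Retry with exponential backoff
const retryPolicy = retry(handleAll, {
  maxAttempts: 3,
  backoff: new ExponentialBackoff()
});

// Retry on the outside, breaker on the inside
const resilient = wrap(retryPolicy, breaker);

// Wrap API calls
app.post('/orders', async (req, res) => {
  try {
    const { data: user } = await resilient.execute(() =>
      axios.get('http://user-service:3001/users/123')
    );
    // ...
  } catch (error) {
    // Fallback logic
  }
});
```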
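To see what a retry policy with exponential backoff boils down to, here is a dependency-free sketch. It is not cockatiel’s implementation; `retryWithBackoff` and its options are hypothetical names, and it deliberately leaves out jitter, which a production policy would normally add.

```javascript
// Retry `fn` up to `attempts` times, doubling the wait after each failure.
// `sleep` is injectable so the delays can be observed without real waiting.
async function retryWithBackoff(fn, {
  attempts = 3,
  baseMs = 100,
  maxMs = 5_000,
  sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms))
} = {}) {
  let lastError;
  for (let attempt = 0; attempt < attempts; attempt++) {
    try {
      return await fn(attempt);
    } catch (err) {
      lastError = err;
      if (attempt === attempts - 1) break; // No wait after the final attempt
      await sleep(Math.min(maxMs, baseMs * 2 ** attempt));
    }
  }
  throw lastError; // Every attempt failed
}
```

With the defaults, a call that keeps failing is attempted three times with waits of 100 ms and 200 ms in between, after which the last error is rethrown for the fallback logic to handle.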

Pros:

- Fail Fast: Stop trying to access a service that is broken.

- Self-Recovery: After some time, the breaker lets a trial call through and resets if it succeeds.

Cons:

- Configuration Hell: You need to fine-tune your retry and breaker thresholds repeatedly.

- Fallback Logic: You still need to handle failed calls gracefully.
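The fail-fast and self-recovery behaviour listed above comes from a small state machine: closed, open, and half-open. The class below is a simplified sketch of that idea, not cockatiel’s implementation. It trips on consecutive failures rather than on a failure rate, `SimpleBreaker` and its options are hypothetical names, and it ignores concurrency (several probe calls could run at once in half-open).

```javascript
// Minimal consecutive-failure circuit breaker with an injectable clock.
class SimpleBreaker {
  constructor({ failureThreshold = 3, halfOpenAfterMs = 10_000, now = Date.now } = {}) {
    this.failureThreshold = failureThreshold;
    this.halfOpenAfterMs = halfOpenAfterMs;
    this.now = now;
    this.state = 'closed';
    this.failures = 0;
    this.openedAt = 0;
  }

  async execute(fn) {
    if (this.state === 'open') {
      if (this.now() - this.openedAt < this.halfOpenAfterMs) {
        // Fail fast: the broken service is not contacted at all
        throw new Error('circuit open: call rejected');
      }
      this.state = 'half-open'; // Cool-down elapsed: allow a probe call
    }
    try {
      const result = await fn();
      this.state = 'closed'; // Any success closes the circuit again
      this.failures = 0;
      return result;
    } catch (err) {
      this.failures += 1;
      // A failed probe, or too many failures in a row, opens the circuit
      if (this.state === 'half-open' || this.failures >= this.failureThreshold) {
        this.state = 'open';
        this.openedAt = this.now();
      }
      throw err;
    }
  }
}
```

While the circuit is open, callers get an immediate error instead of piling more requests onto a service that is already struggling.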

Surviving the Microservices Maze: FAQs

Q: When is it best to break apart my monolith?

A: When you notice any of these:

- Deployments of one part of the app are held up waiting on deployments of another.

- Certain parts of the application need more resources than others (for example: analytics versus payments).

- You keep resolving endless merge conflicts in “package.json.”

Q: REST versus GraphQL versus gRPC: what are the differences?

A: Each fits a different kind of client:

- REST: For public APIs (consumed by mobile applications, for example).

- GraphQL: When clients need to pull dynamic data (example: admin dashboards).

- gRPC: For internal services where performance is critical (protobuf FTW).

Q: What approach would you take to solve distributed transactions?

A: Implement the Saga pattern:

- An order is created by the order service (status: PENDING).

- The payment service attempts to charge the user.

- If the user is not charged successfully, then the order service sets status to FAILED and informs the user.
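The three saga steps above can be sketched in a few lines. This is an in-memory illustration only: `placeOrder` is a hypothetical function, `orders` stands in for the order service’s database, `chargeUser` stands in for the payment service, and the `CONFIRMED` status is an assumption, since the steps only name PENDING and FAILED.

```javascript
// Saga sketch: create the order, attempt the charge, compensate on failure.
async function placeOrder(orders, chargeUser, { id, userId, amount }) {
  // Step 1: create the order in PENDING state
  const order = { id, userId, amount, status: 'PENDING' };
  orders.set(id, order);

  try {
    // Step 2: the payment service attempts to charge the user
    await chargeUser(userId, amount);
    order.status = 'CONFIRMED'; // Hypothetical success status
  } catch (err) {
    // Step 3: compensate by marking the order FAILED; the caller informs the user
    order.status = 'FAILED';
    order.failureReason = err.message;
  }
  return order;
}

// Hypothetical usage:
// const orders = new Map();
// const result = await placeOrder(orders, chargeUser, { id: 'o-1', userId: 'u-1', amount: 100 });
// if (result.status === 'FAILED') { /* notify the user */ }
```

The point of the pattern is that there is no distributed rollback: each step commits locally, and a failure is undone by an explicit compensating step.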

Final Thoughts

Scaling microservices with Node.js is like juggling chainsaws: exhilarating but also very risky. Take it step by step: start with a small solution and separate services only when necessary. Always have contingency plans in place for when things go wrong. Remember: observability isn’t a nice-to-have, it’s a must. You can’t fix what you can’t see.

So go forth and conquer that monolith. Your ops team will be grateful. 🔥