Exploring Emotion, Identity, and Creativity Through a Human-AI Art Experiment with DALL·E 3

TL;DR: As generative AI tools like DALL·E rapidly evolve, they challenge our understanding of art and emotion. Can machines truly make us feel the way human artists do? In this article, we explore that question through a philosophical lens - and a creative experiment.

The Human Pulse in the Age of Algorithms

It’s no surprise that, alongside the rapid rise of generative AI, AI-generated art has surged too - flooding galleries and imaginations.

As both an AI expert and an artist, I find myself asking: What does this mean for the place of the human creator?

This question leads us into philosophical terrain.

Rendering Feeling: Is Emotion Within Reach of AI?

Can AI evoke emotion the same way a human artist can? Or is there something inherently human in the act of making others feel through art?

We often say that art is not a science - and for good reason. The success of a piece is not easily measured. Experts might analyze its composition, symbolism, or technical skill, but for most people, the response is profoundly emotional. A painting can stir joy, grief, awe, or disgust without a clear explanation.

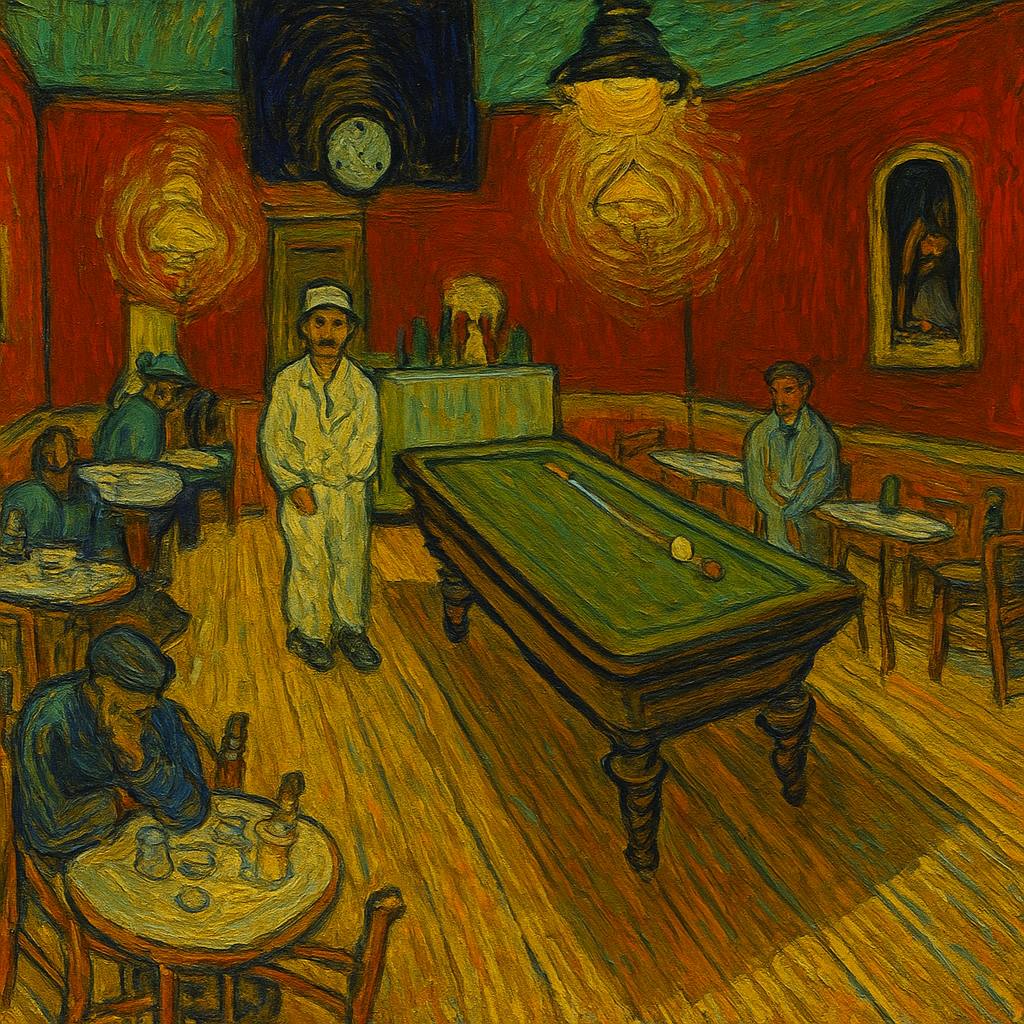

A Case Study in Emotion: Van Gogh’s Night Café

Take, for example, Van Gogh’s Le café de nuit (The Night Café):

A single glance is often enough to evoke unease, isolation, or even dread.

Few would describe it as cheerful or comforting - and this is no accident.

Van Gogh wrote of the painting:

“In my picture of the Night café, I have tried to express the idea that the café is a place where one can destroy oneself, go mad or commit a crime. In short, I have tried … to express the powers of darkness in a common tavern.”

How does he do this? One of his most powerful techniques is his use of color. According to color theory, high-saturation red and green - complementary opposites on the painter's color wheel - can create a jarring visual vibration that feels harsh or disorienting.

As Van Gogh described it to his brother Theo:

“The room is blood red and dark yellow with a green billiard table in the middle … Everywhere there is a clash and contrast of the most alien reds and greens.”
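To make "complementary opposites" concrete, here is a small Python sketch. It assumes the traditional 12-hue red-yellow-blue (RYB) painter's wheel, on which complements sit directly opposite each other; the `RYB_WHEEL` list and the `complement` helper are illustrative names, not part of any library.

```python
# A minimal sketch, assuming the traditional 12-hue red-yellow-blue (RYB)
# painter's wheel, listed in order around the circle.
RYB_WHEEL = [
    "red", "red-orange", "orange", "yellow-orange",
    "yellow", "yellow-green", "green", "blue-green",
    "blue", "blue-violet", "violet", "red-violet",
]


def complement(hue: str) -> str:
    """Return the hue directly opposite `hue` on the 12-step RYB wheel."""
    i = RYB_WHEEL.index(hue)  # raises ValueError for an unknown hue name
    # Opposite means half-way around the circle: 6 steps on a 12-step wheel.
    return RYB_WHEEL[(i + len(RYB_WHEEL) // 2) % len(RYB_WHEEL)]


# The Night Café's dominant clash: red and green sit opposite each other.
print(complement("red"))     # green
print(complement("yellow"))  # violet
```

Note that screen-oriented color models such as RGB/HSV pair hues differently (there, red sits opposite cyan rather than green), which is why painters' color theory and a digital color picker can disagree about what counts as a complement.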

If the Machine Can Move You, Who Needs the Hand?

Now, here’s the pivot: if we can understand this scientifically, then a generative AI model can, in its own way, learn it too, by picking up the visual patterns associated with particular moods from the images it was trained on. Instruct it to produce something “hopeful” or “unsettling”, and it can apply these compositional principles to achieve a targeted emotional effect.

So, if AI can learn to make us feel, why would we still prefer classic art?

And what then becomes of sentiment, originality, or the very concept of uniqueness?

Once, owning a single, handcrafted painting was a mark of wealth and taste. Today, anyone can generate a unique “masterpiece” in seconds. Does this abundance erode value? Or are we witnessing the emergence of a new kind of art - a collaboration between algorithm and artist?

The Dark Enchantress Experiment

To further explore the boundary between human and machine-made art, I embarked on a small creative experiment - one part crafted by hand, the other conjured through code.

Using both hand-drawn digital illustration and DALL·E 3, OpenAI’s text-to-image model, I set out to bring a new character to life.

(For those curious about how DALL·E 3 operates, I delve deeper into its mechanics in my previous article: I Traded My Sketchpad for a Prompt Box—And Art Will Never Be the Same)

The concept I envisioned was a figure named Dark Enchantress. But a name alone wouldn’t suffice - I wanted to build a fuller mythology around her, something rich enough to guide both my imagination and DALL·E’s algorithmic interpretation.

She would be the central character in a fictional video game: half-human, half-vampire, imbued with arcane magical powers and accompanied by a loyal animal sidekick. A heroine born from darkness, shaped by narrative, and rendered through two distinctly different creative processes.

The prompt I provided to DALL·E 3 was intentionally crafted to mirror the character I had imagined:

Generate a picture of a character. The character should be a female. Her name is Dark Enchantress. She is the main character of a computer game. Her story is she has magic powers and she is half vampire half human. Her sidekick is an animal. The picture should be generated in animation 2D style.

This carefully worded instruction aimed to give the model enough narrative and visual cues to guide its generative process - bridging story, style, and structure into a cohesive image.

Below are the results: one illustration generated by DALL·E 3, and the other drawn by me, by hand, on my iPad.

Two Images, One Question: Which Is Human?

To lend more weight to my experiment, I conducted a small informal survey among family and friends. The participants, ranging in age from 10 to 50, were each presented with the same three questions:

Given the backstory of the Dark Enchantress, which image most accurately reflects the character description?

Which illustration resonated with you more aesthetically?

And finally,

Which illustration was created by generative AI?

The results were unexpected.

For the record: Image A was created by DALL·E 3.

A majority felt that the AI-generated image was more accurate and emotionally resonant.

Strikingly, many assumed that my hand-drawn artwork was the one created by the machine.

This raised a curious chicken-and-egg question: did participants believe Image B was AI-generated, and so subconsciously yearn to connect more deeply with what they assumed was human-made, leading them to prefer Image A?

Or did they simply find Image A more compelling, which in turn led them to conclude it must have been the product of human creativity?

Or perhaps there is no direct causality at all - only the deeper revelation that, emotionally, AI-generated art can indeed resonate, and its origin is often indistinguishable to the untrained eye.

Final Reflections: The Signature in the Code

Perhaps the most haunting realization is not that machines can imitate us, but that we may no longer recognize ourselves in what we create.

In the age of generative AI, where an algorithm can render emotion, tension, and beauty, we’re confronted with a question that is no longer hypothetical: if art can move us, does it matter who - or what - made it?

Yet, maybe we’re asking the wrong question.

Art has always been a mirror - of culture, of time, of the self. And now, it becomes a prism: refracting human intention through artificial intelligence, distorting and amplifying it in ways we’re only beginning to grasp. The image produced is no longer solely ours, but also not entirely other.

About me

I am Maria Piterberg - an AI expert leading the Runtime software team at Habana Labs (Intel) and a semi-professional artist working across traditional and digital mediums. I specialize in large-scale AI training systems, including communication libraries (HCCL) and runtime optimization. I hold a Bachelor's degree in Computer Science.

Feature image: DALL·E 3 version of Van Gogh’s Le café de nuit (The Night Café). Prompt used: Generate your own version of Van Gogh’s Le café de nuit (The Night Café).