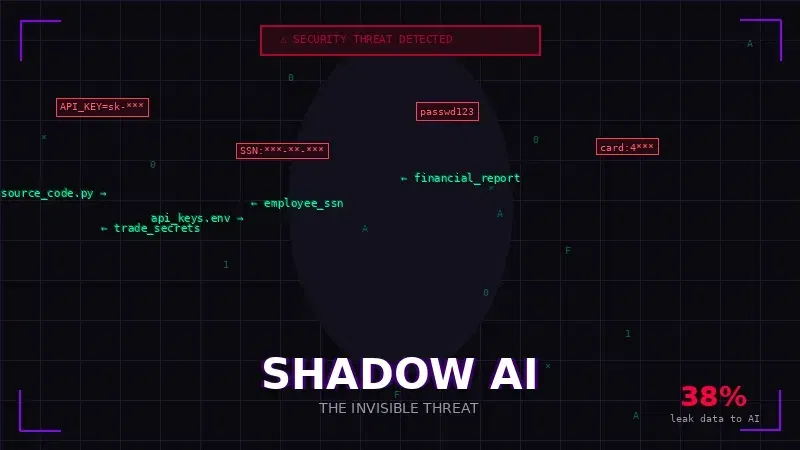

Your Employees Are Feeding Secrets to AI — And You Don't Even Know It

The $670,000 Mistake You're Probably Making Right Now

While you're reading this, someone in your organization is pasting confidential data into an AI tool. They're not malicious. They're not stupid. They're just trying to finish a report 10 minutes faster.

And that's exactly why Shadow AI is your biggest security nightmare in 2025.

Shadow AI — the unauthorized use of AI tools by employees without IT oversight — has graduated from a nuisance to a five-alarm fire. According to IBM's 2025 Cost of a Data Breach analysis, 20% of all data breaches are now linked to Shadow AI, adding an average of $670,000 to remediation costs per incident.

Let that sink in: roughly two-thirds of a million dollars, not because someone hacked your firewall, but because an intern pasted customer emails into a chatbot.

The Samsung Wake-Up Call

The textbook case happened in 2023, and security teams are still having nightmares about it.

Within a 20-day span, three Samsung semiconductor engineers made three catastrophic mistakes:

- The Code Leak: An engineer pasted top-secret source code into a generative AI tool to debug a measurement database

- The Yield Leak: Another uploaded code related to chip defect detection — literally the "secret sauce" of semiconductor manufacturing

- The Strategy Leak: A third employee recorded a confidential meeting, transcribed it, and asked AI to summarize the minutes

Samsung didn't get hacked. They got helped — by well-meaning employees who just wanted to work smarter.

The result? A company-wide ban on generative AI, massive reputational damage, and a case study that every CISO now keeps bookmarked.

Why Shadow AI Is Worse Than Shadow IT

If you survived the Shadow IT era of rogue Dropbox accounts and unauthorized Slack workspaces, you might think you've seen this movie before. You haven't.

Shadow IT was about data storage. Shadow AI is about data processing.

When employees use unauthorized AI tools, they're not just storing data somewhere risky — they're asking these tools to reason about the data. They're feeding it context, trade secrets, strategy documents, and personally identifiable information (PII) to get an output.

And here's the terrifying part: that data doesn't just vanish.

When you paste proprietary code into a public AI service, it lands on third-party servers. Depending on the service's data retention policies, it might enter training datasets. Your competitive advantage could literally be teaching your competitor's AI how to beat you.

The Numbers Don't Lie

The scale of Shadow AI adoption is staggering:

| Statistic | Source |
|---|---|
| 45% of enterprise employees now use generative AI tools | LayerX 2025 Report |
| 77% of AI users copy and paste data into their queries | LayerX 2025 Report |
| 22% of those paste operations include PII or payment card data | LayerX 2025 Report |
| 82% of pastes come from unmanaged personal accounts | LayerX 2025 Report |
| 60% of organizations cannot identify Shadow AI usage | Cisco 2025 |
| 38% of employees admit sharing sensitive data with AI without permission | IBM 2025 |

That last statistic is the one that should keep you up at night. More than one-third of your workforce is actively sharing sensitive information with AI tools — and they're telling you about it.

Imagine how many aren't.

The Shadow AI Landscape: It's Not Just Chatbots

When security teams think about Shadow AI, they often focus on the obvious culprits — consumer chatbots and writing assistants. But the threat landscape is far broader:

Generative AI Chatbots: Claude, Gemini, Copilot, Perplexity, and dozens of others. Employees use these for everything from drafting emails to analyzing contracts.

AI-Powered Browser Extensions: Over 40 popular "productivity booster" extensions were compromised in early 2025, silently scraping data from active browser tabs — including sensitive corporate sessions.

Embedded AI Features: Your CRM has AI. Your email client has AI. Your note-taking app has AI. Many of these features are enabled by default, processing data without explicit user awareness.

Code Assistants: GitHub Copilot, Amazon CodeWhisperer (now part of Amazon Q Developer), Tabnine, and others are embedded directly into development environments, with access to proprietary codebases.

AI-Powered Meeting Tools: Transcription services, note-takers, and summarizers that join calls and process everything discussed — including confidential business strategy.

Custom and Open-Source Models: Employees downloading and running local models like Llama, Mistral, or DeepSeek on corporate hardware, often without any oversight.

The average mid-sized company uses approximately 150 SaaS tools, and roughly 35% of them now feature AI technology. That's potentially 50+ AI applications running in your environment without any governance.

Why Your Current Security Stack Won't Save You

If your strategy is "block the popular AI websites at the firewall," you've already lost.

The productivity gains from AI are simply too high. Employees will find workarounds: personal phones, home networks, VPN bypasses, or simply switching to one of the hundreds of AI-powered tools that aren't on your blocklist yet.

Traditional security tools fail against Shadow AI for several reasons:

1. The Tool Explosion

New AI tools launch daily. By the time you've added one to your blocklist, ten more have emerged. And increasingly, AI isn't a separate tool — it's a feature embedded in software your employees already use and trust.

2. The Visibility Gap

Most organizations have little or no visibility into what data is flowing to external AI providers. Your DLP might catch someone emailing a spreadsheet of customer data, but it probably won't flag someone pasting that same data into an AI prompt.
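To make the gap concrete, here is a minimal sketch of a check that inspects text before it reaches an AI prompt. It is an illustration only, not how any particular DLP product works: the function names (`scan_prompt`, `luhn_valid`) and the two regex patterns are assumptions made for this example, and a real detector would cover far more data types.

```python
import re

# Illustrative patterns only (assumptions for this sketch); real DLP
# engines use much richer detectors and context.
EMAIL_RE = re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b")
CARD_RE = re.compile(r"\b(?:\d[ -]?){13,19}\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum used by payment cards."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def scan_prompt(text: str) -> dict[str, list[str]]:
    """Find likely email addresses and card numbers in text bound for an AI prompt."""
    findings = {"email": EMAIL_RE.findall(text), "card": []}
    for match in CARD_RE.finditer(text):
        digits = re.sub(r"\D", "", match.group())
        # The Luhn check filters out most random digit runs (order IDs, phone numbers).
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            findings["card"].append(digits)
    return findings
```

A browser extension or proxy hook could call `scan_prompt` on a paste and warn the user when either list is non-empty. Where that hook lives is a deployment decision this sketch does not cover.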

3. The Speed Problem

Every prompt, upload, or query is a potential breach, and each one takes seconds, with no approval step in between. A marketing intern who pastes customer emails into an AI tool today has already handed that PII to a third party. Depending on the provider's retention and training policies, it could later surface in a model that your competitors use too.

4. The BYOD Blindspot

Even if you lock down corporate devices, employees have phones in their pockets. They can photograph a whiteboard of strategy notes, upload it to an AI vision model, and ask for analysis — completely bypassing your network security.

The Compliance Time Bomb

Here's where it gets really ugly.

Regulations and compliance standards like GDPR, HIPAA, SOC 2, PCI DSS, and CCPA weren't built for AI. Shadow AI doesn't just sidestep your internal policies; it makes compliance nearly impossible.

GDPR requires a legal basis for processing personal data and the ability to erase it. How do you erase data that's been ingested into an AI model you don't control?

HIPAA demands strict control over patient health information. When a healthcare worker uses AI to summarize patient notes, that control evaporates.

PCI DSS requires protection of cardholder data. But if 22% of AI paste operations include payment card data, how many compliance violations are happening every hour?

SOC 2 requires you to demonstrate control over your data processing. Shadow AI makes that demonstration impossible.

When Shadow AI leaks EU customer data without consent, GDPR fines can reach up to 4% of global annual revenue or €20 million, whichever is higher. That's not a typo. Four percent. Of everything.

The Zero Trust Approach to Shadow AI

Blocking doesn't work. But blind acceptance isn't an option either.

The answer is controlled capitulation — give employees the AI tools they need, but with guardrails. Here's how:

1. Deploy Sanctioned AI Environments

Provide enterprise-grade AI tools that offer:

- Data excluded from model training

- Enterprise-grade encryption

- Audit logging and compliance controls

- SSO integration for identity management

- Data residency controls for regulatory compliance

When employees have a secure, approved option that's just as easy to use as the unauthorized alternative, Shadow AI adoption drops dramatically.

2. Apply Zero Trust Principles

Treat all AI as risky until verified. This means:

- Require authentication for any AI tool access

- Monitor and log all AI interactions

- Classify AI tools into Approved, Limited-Use, and Prohibited categories

- Implement real-time DLP that understands AI workflows

- Verify AI tool security posture before approval
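One way to picture the three-tier classification is as a default-deny lookup. The sketch below is a simplified illustration under stated assumptions: the hostnames are placeholders, the data classes (`public`, `internal`, `confidential`) are hypothetical labels, and the names `classify` and `is_allowed` are invented for this example.

```python
from enum import Enum


class Tier(Enum):
    APPROVED = "approved"
    LIMITED_USE = "limited-use"
    PROHIBITED = "prohibited"


# Hypothetical registry; the hostnames are placeholders, not recommendations.
TOOL_REGISTRY = {
    "ai.internal.example.com": Tier.APPROVED,
    "assistant.vendor.example": Tier.LIMITED_USE,
}

# Hypothetical data classes permitted for each tier.
ALLOWED_DATA = {
    Tier.APPROVED: {"public", "internal", "confidential"},
    Tier.LIMITED_USE: {"public"},
    Tier.PROHIBITED: set(),
}


def classify(host: str) -> Tier:
    """Default-deny: a tool nobody has vetted is treated as prohibited."""
    return TOOL_REGISTRY.get(host.lower(), Tier.PROHIBITED)


def is_allowed(host: str, data_class: str, authenticated: bool) -> bool:
    """Allow a request only for an authenticated user, a vetted tool, and a permitted data class."""
    if not authenticated:
        return False
    return data_class in ALLOWED_DATA[classify(host)]
```

The key design choice is the fallback in `classify`: an unknown tool lands in the Prohibited tier until someone verifies it, which is the "risky until verified" stance in code form.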

3. Create an AI Acceptable Use Policy

Policies alone won't stop Shadow AI, but they're a necessary foundation. Your policy should:

- Define which AI tools are approved for which use cases

- Specify data handling rules (what can and cannot be fed into AI)

- Establish consequences for violations

- Provide clear guidance on how to request approval for new tools

- Address both cloud-based and locally-run AI models

4. Build an Internal AI AppStore

Create a curated allow-list of approved tools, making it easy for employees to find safe alternatives. If someone needs an AI-powered writing assistant, don't just ban the unauthorized one — provide an approved option.

Consider offering:

- Approved chatbot alternatives for general queries

- Sanctioned code assistants for developers

- Vetted transcription tools for meetings

- Cleared summarization tools for document review

5. Monitor, Detect, and Respond

Implement continuous monitoring for:

- Unauthorized AI tool access (network traffic analysis)

- Sensitive data in prompts (AI-aware DLP)

- Unusual AI usage patterns (behavioral analytics)

- Integration of AI with corporate data sources (API monitoring)

- Browser extensions with AI capabilities (endpoint security)

When you detect Shadow AI, respond with education first, enforcement second. The goal is to redirect behavior, not punish productivity.
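As a rough illustration of the network traffic analysis item, the sketch below counts requests to known AI domains that are not on the sanctioned list. Everything specific here is an assumption: the log format (`<timestamp> <user> <host> <bytes_sent>`), the placeholder domains, and the function name `unsanctioned_ai_hits`. Real proxy logs will need their own parser.

```python
from collections import Counter

# Hypothetical placeholder domains; a real deployment maintains its own lists.
KNOWN_AI_DOMAINS = {
    "chat.ai-vendor.example",
    "api.ai-vendor.example",
    "ai.internal.example.com",
}
SANCTIONED_DOMAINS = {"ai.internal.example.com"}


def unsanctioned_ai_hits(log_lines):
    """Count requests per user to known AI domains that are not sanctioned.

    Assumes each log line looks like: "<timestamp> <user> <host> <bytes_sent>".
    """
    hits = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) != 4:
            continue  # skip malformed lines rather than guess at their meaning
        _, user, host, _ = parts
        host = host.lower()
        if host in KNOWN_AI_DOMAINS and host not in SANCTIONED_DOMAINS:
            hits[user] += 1
    return hits
```

In keeping with "education first," a per-user count like this is better suited to triggering a friendly nudge toward the approved tool than to feeding a disciplinary report.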

The Cultural Shift

Technology alone won't solve Shadow AI. You need a cultural shift.

Employees use unauthorized AI tools because:

- They face pressure to deliver faster

- Approved alternatives don't exist or are too cumbersome

- They don't understand the risks

- Nobody told them not to

- The tools are genuinely helpful

Address these root causes:

Make approved tools frictionless. If your sanctioned AI requires a 15-step approval process while consumer AI is one click away, guess which one wins.

Educate without terrifying. Help employees understand the risks in concrete terms. "Your bonus might depend on the IP you just leaked" is more effective than "we have a policy."

Celebrate secure AI usage. When teams find innovative ways to use AI safely, highlight those wins. Make security an enabler, not a blocker.

Create feedback loops. When employees request tools that aren't approved, fast-track the evaluation. Show them you're responsive to their needs.

The Bottom Line

Shadow AI isn't going away. The productivity benefits are too significant, the tools are too accessible, and the workforce is too distributed to contain it through prohibition alone.

The organizations that will thrive in the AI era are those that:

- Accept that AI usage is inevitable

- Provide secure, sanctioned alternatives

- Implement zero-trust monitoring and controls

- Build a culture of responsible AI usage

- Stay agile as the AI landscape evolves

The choice isn't between AI and no AI. It's between controlled AI and chaos.

Your employees are already talking to AI. The only question is whether you're part of the conversation.