When I first started working with LLMs, I thought it was all about writing the perfect prompt. Feed it enough context and — boom — it should just work, right?

Not quite.

Early on, I realized I was basically tossing words at a glorified autocomplete. The output looked smart, but it didn’t understand anything. It couldn’t plan, adjust, or reason. One small phrasing tweak, and the whole thing broke.

What I was missing was structure. Intelligence isn’t just about spitting out answers: it’s about how those answers are formed. The process matters.

That’s what led me to agentic AI patterns, design techniques that give LLMs a bit more intention. They let the model plan, reflect, use tools, and even work with other agents. These patterns helped me go from brittle, hit-or-miss prompts to something that actually gets stuff done.

Here are the five patterns that made the biggest difference for me, explained in a way that’s actually usable.

1. Reflection: Teach Your Agent to Check Its Own Work

Ever asked ChatGPT a question, read the answer, and thought, “This sounds good… but something’s off”?

That’s where Reflection comes in. It’s a simple trick: have the model take a second look at its own output before finalizing it.

The basic flow:

- Ask the question.

- Have the model answer.

- Then prompt it again: “Was that complete? Anything missing? How could it be better?”

- Let it revise itself.
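To make the flow concrete, here is a minimal Python sketch of the four steps above. It assumes you wrap your model behind a plain `llm(prompt) -> str` function (hypothetical; swap in whatever client you use). The prompt wording and the `DONE` stop signal are my own illustrative choices, not a standard.

```python
from typing import Callable

# Assumed interface: any function that sends a prompt to your model and returns its text.
LLM = Callable[[str], str]

CRITIQUE_PROMPT = (
    "Question: {question}\nDraft answer: {draft}\n"
    "Was that complete? Anything missing? How could it be better?\n"
    "If nothing needs to change, reply with just DONE."
)

REVISE_PROMPT = (
    "Question: {question}\nDraft answer: {draft}\nCritique: {critique}\n"
    "Rewrite the draft so it addresses the critique."
)


def reflect(llm: LLM, question: str, max_rounds: int = 2) -> str:
    """Answer, self-critique, and revise, for at most max_rounds critique passes."""
    draft = llm(question)  # ask the question and take the first answer
    for _ in range(max_rounds):
        critique = llm(CRITIQUE_PROMPT.format(question=question, draft=draft))
        if critique.strip().upper() == "DONE":
            break  # the model found nothing to fix
        # let the model revise its own draft using its own critique
        draft = llm(REVISE_PROMPT.format(question=question, draft=draft, critique=critique))
    return draft


if __name__ == "__main__":
    # Scripted replies stand in for a real model so the loop runs offline.
    replies = iter([
        "Paris",
        "Mention that Paris is the capital and largest city.",
        "Paris is the capital and largest city of France.",
        "DONE",
    ])
    print(reflect(lambda prompt: next(replies), "What is the capital of France?"))
```

Capping the rounds matters: without `max_rounds`, a model that always finds something to nitpick would loop forever.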

You’re not stacking models or adding complexity. You’re just making it double-check its work. And honestly, that alone cuts down on a ton of sloppy mistakes, especially for code, summaries, or anything detail-heavy.

Think of it like giving your model a pause button and a mirror.

2. Tool Use: Don’t Expect the Model to Know Everything

Your LLM doesn’t know what’s in your database. Or your files. Or today’s headlines. And that’s okay — because you can let it fetch that stuff.

The Tool Use pattern connects the model to real-world tools. Instead of hallucinating, it can query a vector DB, run code in a REPL, or call external APIs like Stripe, WolframAlpha, or your internal endpoints.

This setup does require some plumbing (function calling, routing, maybe a framework like LangChain or Semantic Kernel), but it pays off. Your agent stops guessing and starts pulling real data.
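Here is roughly what that plumbing looks like without a framework. It is a sketch under assumptions: the model is prompted to reply with a JSON object like `{"tool": "add", "args": {...}}`, and `get_invoice_total` and `add` are hypothetical stand-ins for your real database or API calls. Hosted function-calling APIs and frameworks such as LangChain use their own formats, so treat this as the idea, not any library's interface.

```python
import json
from typing import Any, Callable, Dict

# Registry of tools the model is allowed to call (names here are hypothetical).
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Decorator that registers a function under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_invoice_total(customer_id: str) -> float:
    # Placeholder data; a real version would query your database.
    fake_db = {"c42": 129.50, "c7": 18.00}
    return fake_db.get(customer_id, 0.0)


@tool
def add(a: float, b: float) -> float:
    return a + b


def dispatch(model_output: str) -> str:
    """Route a JSON tool call emitted by the model to the matching Python function.

    Anything that is not a valid call comes back as an error message the model
    can read and correct on its next turn.
    """
    try:
        call = json.loads(model_output)
        fn = TOOLS[call["tool"]]
        result = fn(**call.get("args", {}))
    except (json.JSONDecodeError, KeyError, TypeError) as exc:
        return f"tool error: {exc!r}"
    return json.dumps({"tool": call["tool"], "result": result})


if __name__ == "__main__":
    # Pretend the model replied with this tool call.
    print(dispatch('{"tool": "get_invoice_total", "args": {"customer_id": "c42"}}'))
```

Whatever `dispatch` returns, including errors, goes back into the model's next prompt, so it can use the result or fix a malformed call.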

People assume LLMs should be smart out of the box. They’re not. But they get a lot smarter when they’re allowed to reach for the right tools.

3. ReAct: Let the Model Think While It Acts

Reflection’s good. Tools are good. But when you let your agent think and act in loops, it gets even better.

That’s what the ReAct pattern is all about: Reasoning + Acting.

Instead of answering everything in one go, the model reasons step-by-step and adjusts its actions as it learns more.

Example:

- Goal: “Find the user’s recent invoices.”

- Step 1: “Query payments database.”

- Step 2: “Hmm, results are outdated. Better ask the user to confirm.”

- Step 3: Adjust query, repeat.

It’s not just responding — it’s navigating.

To make ReAct work, you’ll need three things:

- Tools (for taking action)

- Memory (for keeping context)

- A reasoning loop (to track progress)
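A bare-bones version of those three pieces might look like this. Assumptions: the model is prompted to write `Thought:` lines followed by either `Action: tool_name[input]` or `Final Answer: ...`; the transcript list doubles as memory; and `query_payments` is a hypothetical tool mirroring the invoice example.

```python
import re
from typing import Callable, Dict, List

LLM = Callable[[str], str]

# Matches a line such as: Action: query_payments[user_17]
ACTION_RE = re.compile(r"^Action:\s*(\w+)\[(.*)\]\s*$", re.MULTILINE)


def react(llm: LLM, tools: Dict[str, Callable[[str], str]], goal: str, max_steps: int = 5) -> str:
    """Minimal ReAct loop: reason, act with a tool, observe the result, repeat."""
    memory: List[str] = [f"Goal: {goal}"]  # running transcript = the agent's memory
    for _ in range(max_steps):
        step = llm("\n".join(memory))  # the model sees everything so far
        memory.append(step)
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(step)
        if match is None:
            memory.append("Observation: no valid Action found; use Action: tool[input].")
            continue
        name, arg = match.groups()
        observation = tools[name](arg) if name in tools else f"unknown tool {name!r}"
        memory.append(f"Observation: {observation}")
    return "Stopped: step limit reached without a final answer."


if __name__ == "__main__":
    tools = {"query_payments": lambda user: f"2 invoices for {user}, newest from 2021"}
    script = iter([
        "Thought: I need the payments.\nAction: query_payments[user_17]",
        "Thought: These look outdated.\nFinal Answer: Found 2 invoices, newest from 2021. Please confirm the account.",
    ])
    print(react(lambda prompt: next(script), tools, "Find the user's recent invoices."))
```

The `max_steps` cap is the loop's safety net: an agent that keeps acting without converging stops instead of burning tokens.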

ReAct makes your agents flexible. Instead of sticking to a rigid script, they think through each step, adapt in real-time, and course-correct as new information comes in.

If you want to build anything beyond a quick one-off answer, this is the pattern you need.

4. Planning: Teach Your Agent to Think Ahead

LLMs are pretty good at quick answers. But for anything involving multiple steps? They often fall flat.

Planning helps with that.

Instead of answering everything in one shot, the model breaks the goal into smaller, more manageable tasks.

Let’s say someone asks, “Help me launch a product.” The agent might respond with:

- Define the audience

- Design a landing page

- Set up email campaigns

- Draft announcement copy

Then it tackles each part, one step at a time.

You can bake this into your prompt or have the model come up with the plan itself. Bonus points if you store the plan somewhere so the agent can pick up where it left off later.
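One way to combine both ideas (a model-generated plan, stored so the agent can resume) is sketched below. The `llm` callable, the prompt text, and the JSON state-file layout are all assumptions for illustration.

```python
import json
import re
from pathlib import Path
from typing import Callable, List

LLM = Callable[[str], str]

PLAN_PROMPT = "Break this goal into a short numbered list of steps, one per line:\n{goal}"
STEP_RE = re.compile(r"^[ \t]*\d+[.)][ \t]+(.+)$", re.MULTILINE)


def make_plan(llm: LLM, goal: str) -> List[str]:
    """Ask the model for a plan and parse lines like '1. Define the audience'."""
    text = llm(PLAN_PROMPT.format(goal=goal))
    return [step.strip() for step in STEP_RE.findall(text)]


def run_plan(llm: LLM, goal: str, state_file: Path) -> List[str]:
    """Execute a plan step by step, saving progress so a later run can resume."""
    if state_file.exists():
        state = json.loads(state_file.read_text())  # pick up where we left off
    else:
        state = {"goal": goal, "steps": make_plan(llm, goal), "done": []}
    # Only the steps not yet recorded as done are executed.
    for step in state["steps"][len(state["done"]):]:
        result = llm(f"Goal: {goal}\nCurrent step: {step}\nDo this step.")
        state["done"].append({"step": step, "result": result})
        state_file.write_text(json.dumps(state, indent=2))  # checkpoint after each step
    return [entry["result"] for entry in state["done"]]
```

Calling `run_plan(llm, goal, Path("plan.json"))` again with the same file skips the steps already recorded under `"done"`, so an interrupted run continues at the first unfinished step.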

Planning turns your agent from a reactive helper into a proactive one.

This is the pattern to use for workflows and any task that needs multiple steps.

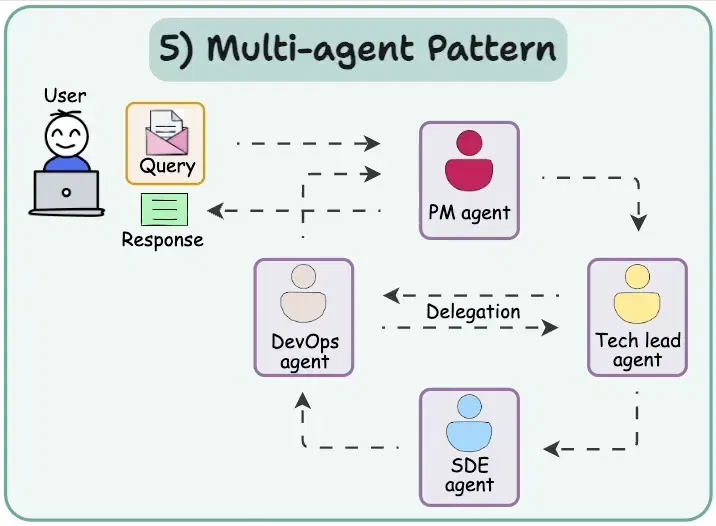

5. Multi-Agent: Get a Team Working Together

Why rely on one agent when you can have a whole team working together?

Multi-agent setups assign different roles to different agents, each handling a piece of the puzzle. They collaborate — sometimes even argue — to come up with better solutions.

Typical setup:

- Researcher gathers info

- Planner outlines steps

- Coder writes the code

- Reviewer double-checks everything

- PM keeps it all moving

It doesn’t have to be fancy. Even basic coordination works:

- Give each agent a name and job.

- Let them message each other through a controller.

- Watch as they iterate, critique, and refine.
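The “name, job, controller” recipe fits in a few dozen lines. This sketch assumes a round-robin controller, a shared transcript every agent can read, and a reviewer that signals it is satisfied by replying `APPROVED`; all of those are illustrative choices, and the scripted `fake_llm` only stands in for a real model.

```python
from dataclasses import dataclass
from typing import Callable, List

LLM = Callable[[str], str]


@dataclass
class Agent:
    name: str
    job: str  # role description placed at the top of every prompt this agent sends

    def respond(self, llm: LLM, transcript: List[str]) -> str:
        prompt = f"You are {self.name}. Your job: {self.job}\n\n" + "\n".join(transcript)
        return llm(prompt)


def run_team(llm: LLM, agents: List[Agent], task: str, max_rounds: int = 3) -> List[str]:
    """Controller: agents post to a shared transcript in turn. Stops when the
    last agent in the list (the reviewer here) says APPROVED, or after max_rounds."""
    transcript = [f"Task: {task}"]
    for _ in range(max_rounds):
        for agent in agents:
            message = agent.respond(llm, transcript)
            transcript.append(f"{agent.name}: {message}")
        if "APPROVED" in transcript[-1]:
            break
    return transcript


if __name__ == "__main__":
    # Scripted replies per agent so the loop runs without a model.
    scripts = {
        "Coder": iter(["def add(a, b): return a - b", "def add(a, b): return a + b"]),
        "Reviewer": iter(["Bug: add subtracts.", "APPROVED"]),
    }

    def fake_llm(prompt: str) -> str:
        name = prompt.split(".")[0][len("You are "):]  # recover the speaking agent
        return next(scripts[name])

    team = [
        Agent("Coder", "Write the code."),
        Agent("Reviewer", "Critique the code; reply APPROVED when it is correct."),
    ]
    for line in run_team(fake_llm, team, "Write add(a, b)."):
        print(line)
```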

The magic happens when they disagree. That’s when you get sharper insights and deeper thinking.

Want to Try This? Here’s a Simple Starting Point

Let’s say you’re building a research assistant. Here’s a no-nonsense setup that puts these patterns into play:

- Start with Planning

Prompt: “Break this research task into clear steps before answering.”

Example: “1. Define keywords, 2. Search recent papers, 3. Summarize findings.”

- Add Tool Use

Hook it up to a search API or a vector DB so it’s pulling real facts — not making stuff up.

- Add Reflection

After each answer, prompt: “What’s missing? What could be clearer?” Then regenerate.

- Wrap it in ReAct

Let the agent think between steps. “Results look shallow — retrying with new terms.” Then act again.

- Expand to Multi-Agent (optional)

One agent writes. Another critiques.

They talk. They argue. The output gets better.
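For reference, the first four steps might be glued together like this. It is a sketch, not a finished assistant: `llm` and `search` are hypothetical callables you would back with your model client and a search API or vector DB, the prompts echo the ones in the steps above, and the optional multi-agent step is left out.

```python
import re
from typing import Callable, List

LLM = Callable[[str], str]
Search = Callable[[str], List[str]]  # hypothetical: query -> list of text snippets

STEP_RE = re.compile(r"^[ \t]*\d+[.)][ \t]+(.+)$", re.MULTILINE)


def research(llm: LLM, search: Search, question: str, max_retries: int = 1) -> str:
    # 1. Planning: get numbered steps first (fall back to the raw question).
    plan = llm(f"Break this research task into clear steps before answering:\n{question}")
    steps = [s.strip() for s in STEP_RE.findall(plan)] or [question]

    notes: List[str] = []
    for step in steps:
        query = step
        # 2 + 4. Tool use inside a small ReAct-style loop: search, judge, maybe retry.
        for _ in range(max_retries + 1):
            snippets = search(query)
            verdict = llm(
                f"Step: {step}\nResults: {snippets}\n"
                "Reply OK, or RETRY: <new query> if the results look shallow."
            ).strip()
            if not verdict.startswith("RETRY:"):
                break
            query = verdict[len("RETRY:"):].strip()
        notes.append(f"{step}: {snippets}")  # keep the last search results per step

    # 3. Reflection: draft, ask what's missing, then regenerate once.
    draft = llm("Write an answer from these notes:\n" + "\n".join(notes))
    critique = llm(f"Draft:\n{draft}\nWhat's missing? What could be clearer?")
    return llm(f"Draft:\n{draft}\nCritique:\n{critique}\nRewrite the draft to address the critique.")
```

Each stage is a plain function call, which keeps it easy to log, test with scripted fakes, and swap for a framework later if you outgrow it.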

That’s it. You’ve got a working MVP. No fancy frameworks required, just smart prompts, basic glue code, and clear roles. You’ll be surprised how much more confident you’ll feel working with LLMs.

Wrapping Up

Agentic design isn’t about making the model smarter. It’s about designing better systems. Systems that manage complexity, adapt mid-flight, and don’t fall apart at the first unexpected input.

These patterns helped me stop thinking of LLMs as magic boxes and start thinking of them as messy components in a bigger process. They’re not perfect. But they’re powerful — if you give them structure.

Because the real intelligence? It’s in the scaffolding you build around the model. Not just in the model itself.

And that’s both frustrating and freeing.
