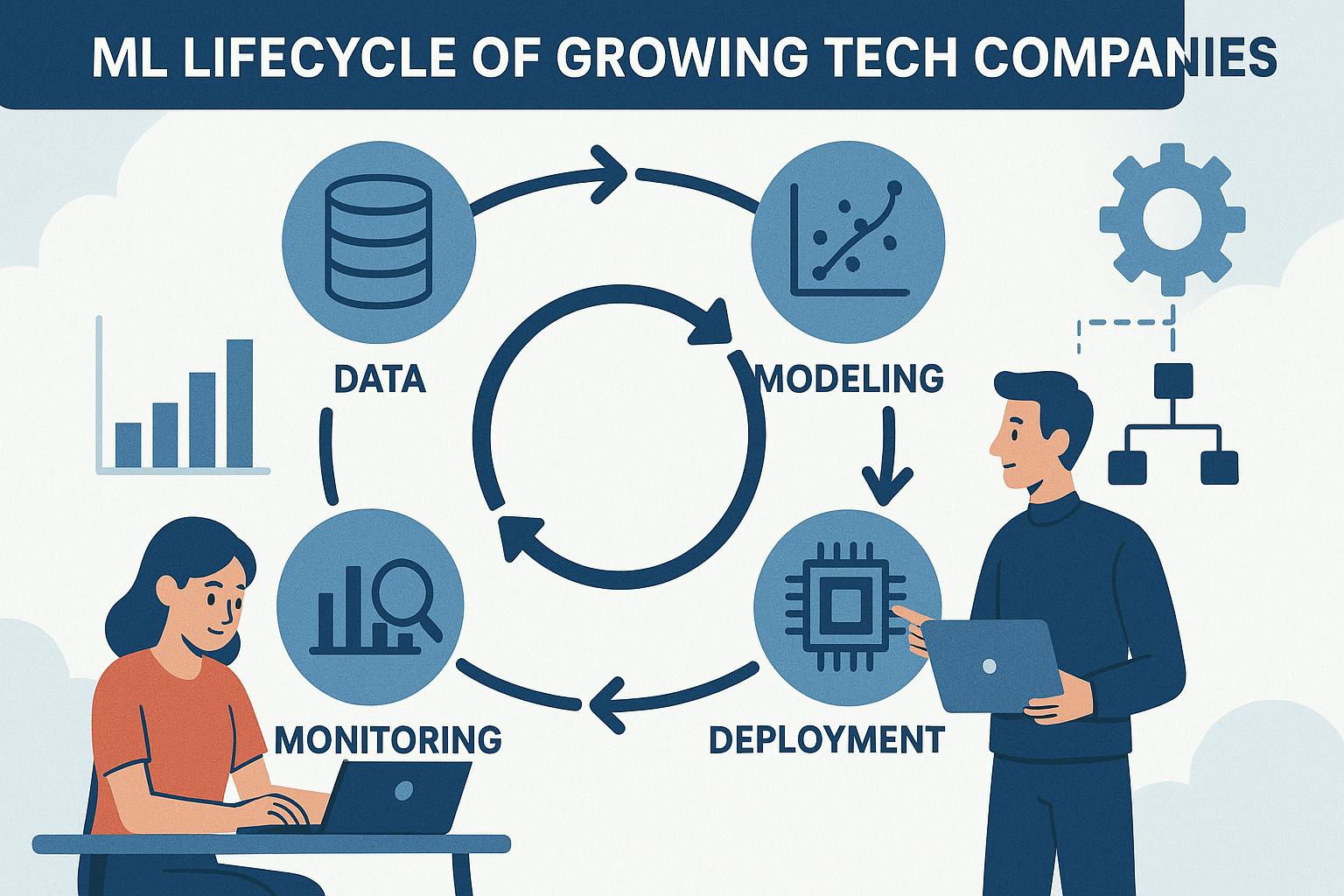

In this article, I will go over how MLOps evolves in tech companies and how things scale as the business grows. I will give a high-level overview of that evolution, the common pitfalls, and general guidance for teams that rely on ML deployment and serving.

When we say ML development, most folks think we just get some datasets, tune and build a model, check the scores, and publish it. While this is true for a POC (proof of concept), it might not work well once we have to maintain the model. Imagine retraining a model by hand every morning when you start working: it becomes manual and monotonous, and the tribal knowledge that builds up turns into backlog and risk for the team and the company. For startups, though, this will usually be the case. They don't want to spend a lot of time on infrastructure and CI/CD when the priority is getting the business running. At the beginning this works out just fine even though it is manual; a crontab entry, a scheduled AWS job (EventBridge), or a shell script on a laptop will do for some time.

This stage is called MLOps stage 0, where things are mostly manual. You can read more about this in the Google MLOps document.

As things progress, your team will slowly realize the need for MLOps: folks go on vacation, shell scripts fail, machines reboot and jobs don't run, and upgrades change the outputs of your model. You will realize it's time to buckle up and improve model deployment. You build your first CI/CD pipeline, and then the next challenges arrive. You need to maintain SLAs with your upstream teams, model outputs need to be validated, data columns must not change their definitions, the science team wants to add more features, and more folks want to work on things in parallel. This is where things get crazy, and it means you are heading in the right direction.
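To make the "data columns should not change their definitions" and "model outputs to be validated" points concrete, here is a minimal sketch of a contract check that could run before training and after prediction. Everything in it is an assumption for illustration: the column names, the `ColumnSpec` class, and the `[0, 1]` score range are hypothetical, and a real team would more likely use a dedicated validation library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ColumnSpec:
    """One column of the (hypothetical) contract agreed with the upstream team."""
    name: str
    dtype: type
    nullable: bool = False


# Hypothetical schema; the column names are made up for this example.
EXPECTED_SCHEMA = [
    ColumnSpec("user_id", int),
    ColumnSpec("country", str),
    ColumnSpec("spend_usd", float, nullable=True),
]


def validate_rows(rows, schema=EXPECTED_SCHEMA):
    """Return a list of violations; an empty list means the batch passed."""
    errors = []
    for i, row in enumerate(rows):
        for col in schema:
            if col.name not in row:
                errors.append(f"row {i}: missing column '{col.name}'")
                continue
            value = row[col.name]
            if value is None:
                if not col.nullable:
                    errors.append(f"row {i}: '{col.name}' is null")
            elif not isinstance(value, col.dtype):
                errors.append(
                    f"row {i}: '{col.name}' expected {col.dtype.__name__}, "
                    f"got {type(value).__name__}"
                )
    return errors


def validate_scores(scores, low=0.0, high=1.0):
    """Flag model outputs outside the expected range (NaN is flagged too)."""
    return [
        f"score {i} out of range: {s}"
        for i, s in enumerate(scores)
        if not (low <= s <= high)
    ]
```

A pipeline could fail fast when either function returns a non-empty list, so that a silent upstream change shows up as a broken build instead of a drifting model. Note that this sketch ignores extra columns; whether those should count as violations is a team decision.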

In this stage, you invest in building tooling to make life easier. We can call this MLOps stage 1, ML automation. Most companies consider this a stable state and live with this setup. It has its own pros and cons, but further SDE investment is time consuming, and most companies with limited bandwidth will not go beyond it.

In stage 1, you have a team of 4-10 SDEs or research scientists and you want things to move quickly; the traditional approach of building the model by hand every day won't work for your team. You start building pipelines that automate most of the work for you: data validation, prep work, model training, and model validation. A prediction pipeline serves the model. You might rely on orchestrators like Airflow or AWS SWF, on Docker for packages and dependencies, and on git for CI/CD. Everything has structured data, and you now define something called an SLA (service level agreement).

Things go smoothly for a couple of years, the system evolves, and you now have a team of 10 SDEs and 10 research scientists (RS). Every RS wants to build a model and deploy it quickly, and business needs are piling up. SDEs cannot hand-hold each pipeline. You end up with too many dependencies, the base package becomes bloated, the prediction service needs hand-holding for every change, and the on-call may have to coordinate deployments to make sure things don't break. We now need to evolve to stage 2, CI/CD pipeline automation.
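The pipeline steps described above (data validation, prep work, training, model validation) can be sketched in plain Python as an ordered list of functions that pass a shared context along. This is only an illustration under stated assumptions: the step names, the `ctx` dictionary, the `max_mae` threshold, and the "predict the training mean" model are all hypothetical stand-ins, and in practice each step would typically be a task in an orchestrator such as Airflow.

```python
from statistics import mean


def validate(ctx):
    """Data validation: refuse to train on an empty or unlabeled batch."""
    rows = ctx["rows"]
    if len(rows) < 2:
        raise ValueError("need at least 2 rows to train and evaluate")
    if any(r.get("y") is None for r in rows):
        raise ValueError("found a row with a missing label 'y'")
    return ctx


def prepare(ctx):
    """Prep work: deterministic split, last ~20% (at least one row) held out."""
    rows = ctx["rows"]
    n_holdout = max(1, len(rows) // 5)
    ctx["train"], ctx["holdout"] = rows[:-n_holdout], rows[-n_holdout:]
    return ctx


def train(ctx):
    """Training: a stand-in model that always predicts the training mean."""
    ctx["model"] = mean(r["y"] for r in ctx["train"])
    return ctx


def evaluate(ctx):
    """Model validation: block the release if holdout error is too high."""
    ctx["mae"] = mean(abs(r["y"] - ctx["model"]) for r in ctx["holdout"])
    if ctx["mae"] > ctx["max_mae"]:
        raise RuntimeError(f"MAE {ctx['mae']:.3f} exceeds gate {ctx['max_mae']}")
    return ctx


# The order of the steps is the pipeline definition.
STEPS = [validate, prepare, train, evaluate]


def run_pipeline(rows, max_mae):
    ctx = {"rows": rows, "max_mae": max_mae}
    for step in STEPS:
        ctx = step(ctx)
    return ctx
```

The point of the shape is that a failure in any step stops the run before a bad model reaches the prediction pipeline, which is the part that used to depend on someone noticing by hand.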

In stage 2, we need to take a step back, understand how the pipelines are built, and abstract data dependencies while still relying on the existing infrastructure. This is probably a work in progress in most companies and needs innovation. You need to be able to move from experiments to production without major code rewrites, which means building a platform that abstracts the low-level details. Once this is complete, science teams and SDEs can move quicker. But there is a common problem: because we built multiple abstractions, everyone has to learn your team's specific tech stack before they can move quickly. This leads to a steep learning curve, and teams need to keep the learning docs up to date, otherwise this will not work.
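One way to picture "moving from experiments to production without major code rewrites" is a small platform layer where model code only talks to an abstract data source and is looked up by name. The sketch below is an assumption about what such a layer could look like, not a description of any specific platform: `DataSource`, `InMemorySource`, `CsvSource`, `MODEL_REGISTRY`, `register`, and the `avg_spend` job are all hypothetical names.

```python
import csv
import io
from typing import Callable, Dict, Iterable, Protocol


class DataSource(Protocol):
    """The only data interface model code is allowed to depend on."""

    def read(self) -> Iterable[dict]: ...


class InMemorySource:
    """Experiment-time source, e.g. rows built by hand in a notebook."""

    def __init__(self, rows):
        self.rows = list(rows)

    def read(self):
        return iter(self.rows)


class CsvSource:
    """Stand-in for a production table; reads any text file-like object."""

    def __init__(self, handle):
        self.handle = handle

    def read(self):
        return csv.DictReader(self.handle)


# The platform keeps a registry so deployments refer to jobs by name.
MODEL_REGISTRY: Dict[str, Callable[[DataSource], float]] = {}


def register(name):
    def wrap(fn):
        MODEL_REGISTRY[name] = fn
        return fn
    return wrap


@register("avg_spend")
def avg_spend(source: DataSource) -> float:
    # Same code path for experiments and production; only the source differs.
    values = [float(r["spend"]) for r in source.read()]
    return sum(values) / len(values)


def run(name, source):
    return MODEL_REGISTRY[name](source)
```

For example, `run("avg_spend", InMemorySource([{"spend": 1}, {"spend": 3}]))` and `run("avg_spend", CsvSource(io.StringIO("spend\n2\n4\n")))` execute the same function against different backends. The trade-off is the one described above: every abstraction like `register` is one more team-specific thing a new joiner has to learn, so it only pays off if it is documented.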

In future articles, we will discuss foundation model pipelines and how they are trained and maintained. We will also go over each component in more technical detail, along with the existing tech stack you can use for each use case. These stories and opinions come from my personal experience and could be different for other SDEs. Thanks for reading, and thanks to the Google MLOps articles for the inspiration.