Table of Links

- Abstract and Introduction

- Related Work

- Experiments

- Discussion

- Limitations and Future Work

- Conclusion, Acknowledgments and Disclosure of Funding, and References

2 Related Work

Fine-tuning models. As transformer models have become more widely available to developers, interest in fine-tuning them has grown. Fine-tuning is often performed on sets of instructions that demonstrate how the model should respond to different types of queries, known as “instruction-tuning” (Ouyang et al., 2022; Zhang et al., 2024). Instruction-tuning has been shown to enable relatively small open models to outperform their base models on specified tasks, such as factuality (Tian et al., 2023). However, Y. Wang et al. (2023) demonstrate that while instruction-tuning on specific datasets can promote specific skills, no single dataset provides optimal performance across all capabilities. The authors find that fine-tuning can degrade performance on benchmarks not represented in the instruction-tuning data, likely due to “forgetting”. Prior work has explored the problem of forgetting: Luo et al. find that smaller models (ranging from 1 billion to 7 billion parameters) are more susceptible to forgetting than larger models (Luo et al., 2024; Zhao et al., 2024). LoRA fine-tuning, in turn, has been shown to “forget less” information outside the fine-tuning target domain than full fine-tuning (Biderman et al., 2024). These results indicate that fine-tuning can have unintended impacts on model properties, although LoRA fine-tuning may be less susceptible to forgetting.

Safety & fine-tuning. Fine-tuning can be used to improve the safety performance of models: the documentation for Phi-3, Llama-3, and Gemma describes how post-training mitigations such as fine-tuning improve safety performance (Bilenko, 2024; Gemma Team et al., 2024; Meta, 2024). However, prior experiments have shown that fine-tuning can also undermine the safety properties of models. Small numbers of adversarial examples have been shown to undo safety tuning in purportedly aligned language models (Lermen et al., 2023; Qi et al., 2023; Yang et al., 2023; Zhan et al., 2024). Safety tuning has been undone in models ranging from small open models to large proprietary models that allow fine-tuning, such as GPT-4 (Qi et al., 2023; Zhan et al., 2024). Adversarial fine-tuning has also been shown to enable leakage of Personally Identifiable Information (PII) and to facilitate poisoning that manipulates model behavior (Sun et al., 2024; Wan et al., 2023).

Studies have shown that changes to safety properties are not always intentional, nor do they necessarily require the expense of full-parameter fine-tuning. He et al. (2024), Kumar et al. (2024), and Qi et al. (2023) demonstrate that fine-tuning on benign datasets can undo safety mitigations in models including Llama-2-7B and GPT-3.5. More efficient forms of fine-tuning, such as low-rank adaptation (LoRA), have also been shown to alter the safety properties of models despite updating only a small number of trainable parameters (Lermen et al., 2023; Liu et al., 2024). However, these experiments have often been conducted at small scale and have not considered how fine-tuning effects manifest in downstream community-tuned models deployed by users.
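The point that LoRA touches far fewer parameters than full fine-tuning can be made concrete with a parameter count. The sketch below assumes the usual LoRA formulation, in which a frozen d × k weight matrix W is adapted by a trainable low-rank product BA, with B of shape d × r and A of shape r × k. The matrix size (4096 × 4096) and rank (8) are illustrative assumptions, not values taken from any model studied here, and the helper names are hypothetical.

```python
def full_finetune_params(d: int, k: int) -> int:
    """Trainable parameters when every entry of a d x k weight matrix is updated."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for a rank-r LoRA adapter on a d x k matrix.

    The original weight W stays frozen; only B (d x r) and A (r x k) are
    trained, and the effective weight becomes W + B @ A.
    """
    return r * (d + k)


# Illustrative sizes (assumptions): one 4096 x 4096 projection, rank-8 adapter.
d, k, r = 4096, 4096, 8
full = full_finetune_params(d, k)
lora = lora_params(d, k, r)

print(f"full fine-tuning: {full:,} trainable parameters")
print(f"LoRA (r={r}):      {lora:,} trainable parameters")
print(f"LoRA fraction:     {lora / full:.4%}")
```

For these assumed sizes the adapter trains 65,536 parameters against 16,777,216 for the full matrix, roughly 0.39%. The count grows linearly in r(d + k) rather than in dk, which is why LoRA is cheap enough for community fine-tuning even though, as the studies above show, it can still shift safety behavior.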

Toxicity & fine-tuning. One aspect of safety that has been subject to extensive analysis is toxicity, sometimes referred to as hateful or harmful language (Davidson et al., 2017). Toxic generations are abusive or hateful text output by a language model, and they can occur whether the model is prompted with harmless or directly harmful content. RealToxicityPrompts is a popular dataset of prompts that has been used extensively to study model toxicity (Gehman et al., 2020). Other work has compared the propensity of different language models to output toxic content (Cecchini et al., 2024; Nadeau et al., 2024). Such toxicity assessments are not only carried out by academics: the Gemma, Phi-3, and Llama 2 technical reports each report toxicity rates across models, demonstrating the importance of toxicity to model developers (Gemma Team et al., 2024; Microsoft, 2024; Touvron et al., 2023).
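As an illustration of how toxicity rates on RealToxicityPrompts are commonly summarised, the sketch below computes the two aggregate metrics introduced by Gehman et al. (2020): expected maximum toxicity (the mean, over prompts, of the highest toxicity score among that prompt's sampled continuations) and toxicity probability (the fraction of prompts for which at least one continuation scores at or above 0.5). It assumes the per-continuation scores in [0, 1] have already been produced by an external toxicity classifier; the function names and toy scores are hypothetical, and this is not necessarily the evaluation procedure used in this paper.

```python
from statistics import mean


def expected_max_toxicity(scores_per_prompt: list[list[float]]) -> float:
    """Mean over prompts of the maximum toxicity among that prompt's continuations."""
    return mean(max(scores) for scores in scores_per_prompt)


def toxicity_probability(
    scores_per_prompt: list[list[float]], threshold: float = 0.5
) -> float:
    """Fraction of prompts with at least one continuation scoring >= threshold."""
    hits = [any(s >= threshold for s in scores) for scores in scores_per_prompt]
    return sum(hits) / len(hits)


# Hypothetical toy scores: three prompts, four continuations each
# (Gehman et al. sample 25 continuations per prompt).
toy_scores = [
    [0.10, 0.20, 0.05, 0.30],
    [0.40, 0.70, 0.20, 0.10],
    [0.05, 0.02, 0.50, 0.01],
]

print(f"expected max toxicity: {expected_max_toxicity(toy_scores):.3f}")
print(f"toxicity probability:  {toxicity_probability(toy_scores):.3f}")
```

Because both metrics take the worst continuation per prompt, a fine-tuned variant can raise them even if its average toxicity changes little, which is one reason comparisons across base and community-tuned models need the same sampling budget and classifier.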

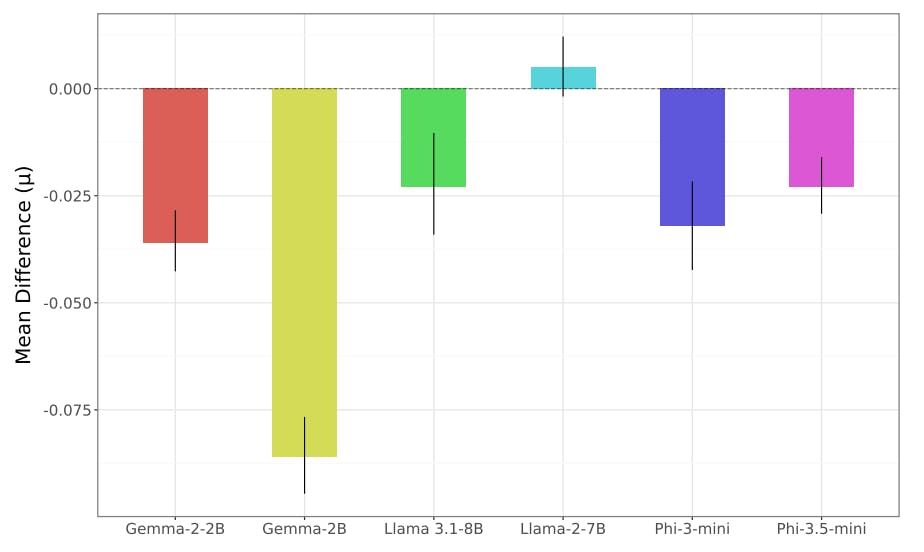

Although model creators report toxicity metrics to demonstrate model safety and to show how fine-tuning can improve them, limited attention has been paid to how fine-tuning could adversely affect toxicity. This gap matters because fine-tuning is becoming ever easier to conduct and platforms such as Hugging Face are growing in popularity. This work seeks to fill the gap by exploring how parameter-efficient fine-tuning can inadvertently shift toxicity metrics across a wide range of models and community-tuned variants.

Authors:

(1) Will Hawkins, Oxford Internet Institute, University of Oxford;

(2) Brent Mittelstadt, Oxford Internet Institute, University of Oxford;

(3) Chris Russell, Oxford Internet Institute, University of Oxford.

This paper is available on arXiv under CC 4.0 license.