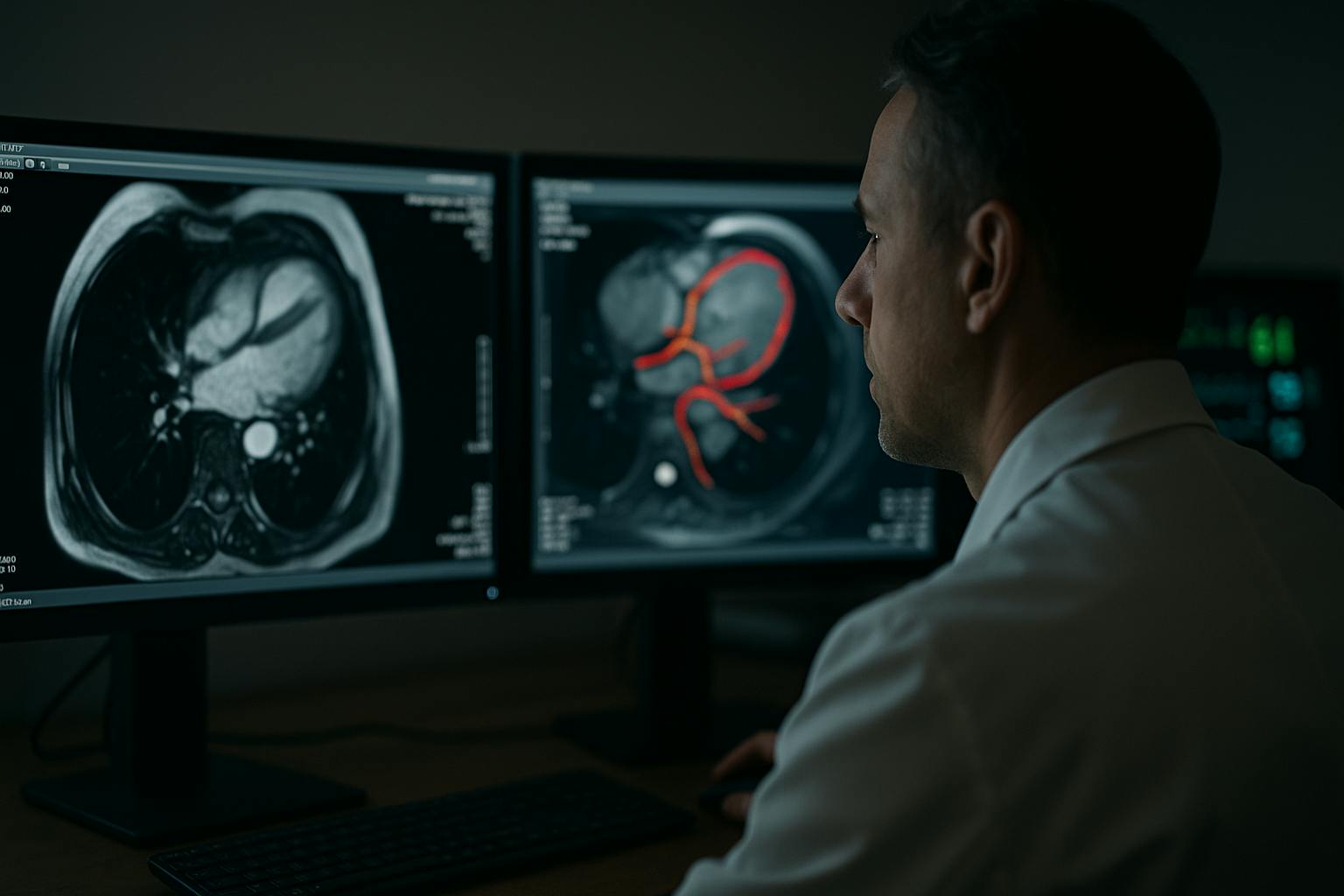

Cardiac imaging has always been about pattern recognition under pressure. Radiologists are tasked with scanning CTs, MRIs, and echocardiograms for faint, early warnings of disease, often while managing a crushing workload. It is no surprise that subtleties like minor changes in wall strain or early valve thickening are easily missed. Artificial intelligence has been brought into this space not as a novelty but as a necessity. What it reveals is not only how medicine is changing, but how the entire data annotation industry must evolve to remain relevant.

A 2025 study in Radiology: Cardiothoracic Imaging found that AI-assisted CT reviews reduced interpretation times by 42 percent and boosted detection rates for asymptomatic coronary artery disease. On paper, the study was about efficiency. In practice, it was a demonstration: when trained on specialized datasets, AI systems can help readers spot what often goes unnoticed.

This is not about “AI versus radiologists.” It is about what kind of annotation infrastructure makes such tools possible—and why the era of bulk, generic labeling is dead.

From Commodity Data to Clinical Judgment

The AI models reshaping cardiology are not trained on the equivalent of crowd-labeled cats and dogs. They are trained on dynamic imaging data labeled frame by frame, with annotations marking subtle cardiac events invisible to the untrained eye. The complexity is staggering: ejection fractions measured across time series, perfusion shifts tracked in moving scans, valve motion irregularities logged in context.

Annotation here is no longer a commodity service. It is clinical work by another name. Snorkel, once confident in the sufficiency of programmatic labeling, now brings physicians in to review its data pipelines. Surge has repositioned itself toward recruiting annotators with domain expertise for exactly these kinds of high-stakes cases.

What unites them is the admission that the “bulk food” model of annotation (cheap labor applied at scale) is not just inadequate for healthcare. It is dangerous.

Secondary Readers, Not Silent Replacements

In practice, hospitals are deploying AI as a second reader. The systems measure, compare, and highlight. Radiologists remain in charge, but they are now working with a digital assistant capable of screening thousands of slices without fatigue. The role of AI is not to diagnose, but to catch what overworked specialists may miss, and to force a second look when the cost of error is measured in lives.

The lesson for the broader industry is clear: AI earns trust in medicine not by replacing expertise but by aligning tightly with it. Annotation providers that fail to mirror this philosophy by embedding expertise into every stage of their pipelines will not clear the clinical threshold.

Early Warnings as the New Currency

The value of these systems lies in the early warnings they surface.

In a 2024 NHS pilot, AI-assisted MRI reviews increased detection of myocarditis by 18 percent compared to radiologist-only reads. Importantly, the systems did not replace clinicians; instead, they re-screened cases already cleared on first read. In other words, AI acted as a failsafe. That failsafe was possible only because the models were trained on rigorously annotated, clinically verified datasets.

The Trust Bottleneck

Trust is now the bottleneck for adoption. Clinicians are not asking whether AI can process images. They are asking whether it has been trained on data that reflects their patients. They want to know who annotated the scans, what standards were used, and whether biases lurk in the dataset.

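This is forcing annotation providers to open up their processes: who labeled the data, against what standards, and with what safeguards against bias.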

The Obsolescence of Generic Annotation

Cardiac imaging makes the verdict explicit. The age of annotation at scale, done cheaply and generically, is over. Healthcare cannot afford brittle models trained on ambiguous data. The firms that are adapting are reshaping themselves around this reality. Those clinging to the commodity model are watching their relevance evaporate.

The transformation playing out in cardiology is a preview of what awaits every other high-stakes domain, from oncology to pharmacovigilance. AI will not replace human judgment in medicine. But it will replace annotation pipelines that cannot encode human judgment into their data.

The industry’s future will not be written by the companies with the largest labeling armies. It will be written by those capable of embedding domain intelligence into every annotation, building datasets that reflect not just images but the clinical reasoning those images demand.

Cardiac imaging has drawn the line. Generic annotation is dead.