This is a simplified guide to an AI model called bisenet-faces maintained by fermatresearch.

Model overview

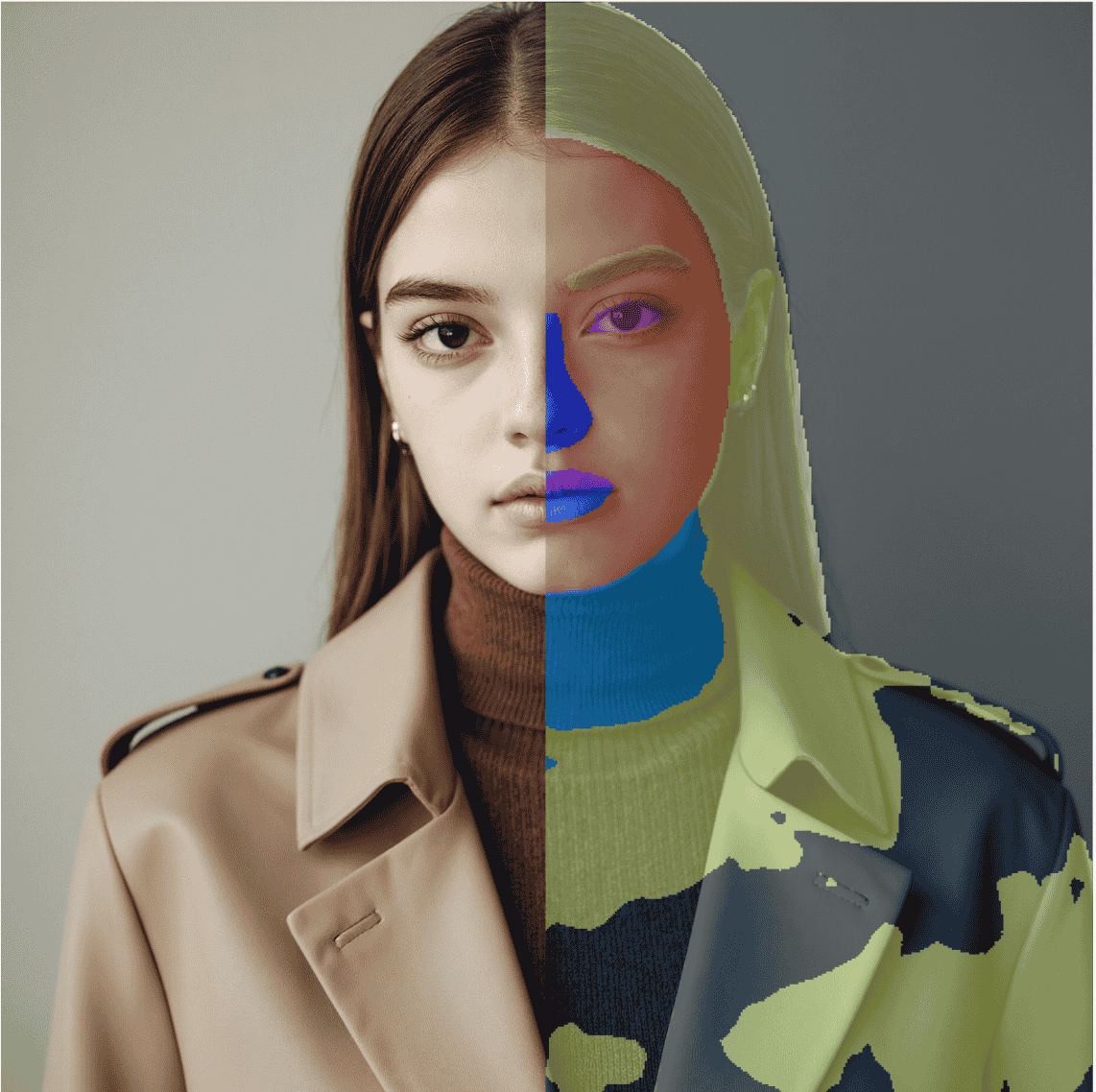

bisenet-faces implements BiSeNet, a bilateral segmentation network designed for real-time semantic segmentation, applied here to face parsing. Created by fermatresearch, the model segments a facial image into distinct regions such as skin, hair, and eyes. The bilateral design pairs a path that preserves detailed spatial information with a path that captures wider context, which keeps processing fast while limiting the loss of accuracy. Unlike general-purpose segmentation models, this implementation targets facial structure specifically, with efficiency suited to real-time applications.

Model inputs and outputs

bisenet-faces takes an image URL plus a few configuration options and returns segmentation masks that label facial regions pixel by pixel. Input resolution can be adjusted to trade speed against detail, and the backbone architecture can be switched to trade speed against accuracy.

Inputs

- Image: Input image URL containing a face to parse

- Backbone: Choice between ResNet18 or ResNet34 architectures (default: ResNet18)

- Input Size: Square resolution for processing, ranging from 128 to 1024 pixels (default: 512)

- Use Half: Optional FP16 precision on CUDA devices for faster processing with lower memory usage

Outputs

- Segmentation Masks: Array of segmented image URIs showing parsed facial regions
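The inputs above map naturally onto a small request payload. The sketch below validates the documented ranges before a call is made. The key names (`image`, `backbone`, `input_size`, `use_half`) and the lowercase backbone strings are assumptions for illustration, not the model's confirmed schema, so check them against the hosted model's API page before relying on them.

```python
from urllib.parse import urlparse

# Assumed identifiers; the guide only names the architectures as ResNet18 and ResNet34.
ALLOWED_BACKBONES = ("resnet18", "resnet34")
MIN_SIZE, MAX_SIZE = 128, 1024  # square resolution range stated in the guide


def build_input(image_url, backbone="resnet18", input_size=512, use_half=False):
    """Return a dict of model inputs, raising ValueError on invalid values."""
    parsed = urlparse(image_url)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"image must be an http(s) URL, got {image_url!r}")
    if backbone not in ALLOWED_BACKBONES:
        raise ValueError(f"backbone must be one of {ALLOWED_BACKBONES}")
    if not MIN_SIZE <= input_size <= MAX_SIZE:
        raise ValueError(f"input_size must be in [{MIN_SIZE}, {MAX_SIZE}]")
    return {
        "image": image_url,
        "backbone": backbone,
        "input_size": int(input_size),
        "use_half": bool(use_half),
    }


# Example: a higher-resolution request with the larger backbone.
payload = build_input("https://example.com/face.jpg", backbone="resnet34", input_size=768)
print(payload)
```

Validating locally keeps obviously bad requests, such as a 2048-pixel input size, from reaching the model at all.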

Capabilities

The model identifies and separates facial components including face boundaries, skin regions, hair, eyebrows, eyes, nose, lips, and neck areas. It processes faces at multiple resolutions and can run efficiently on both CPU and GPU hardware. The ResNet18 backbone provides a lightweight option for resource-constrained environments, while ResNet34 offers improved accuracy for higher-quality segmentation. The model handles various image qualities and lighting conditions typical in real-world face parsing scenarios.
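One way to work with the parsed regions is to measure how much of the image each one covers. The sketch below assumes a mask has already been decoded into a 2D grid of integer class ids. The id-to-name table is hypothetical, since this guide does not list the model's label indices.

```python
from collections import Counter

# Hypothetical label ids; the real index order depends on the model's label set.
LABELS = {0: "background", 1: "skin", 2: "hair", 3: "lips"}


def region_fractions(label_map):
    """Return {region name: fraction of pixels} for a 2D grid of class ids."""
    counts = Counter(label for row in label_map for label in row)
    total = sum(counts.values())
    if total == 0:
        return {}  # empty mask: nothing to measure
    return {LABELS.get(k, f"class_{k}"): n / total for k, n in counts.items()}


# Tiny 2x4 stand-in for a decoded mask.
demo = [
    [0, 2, 2, 0],
    [1, 1, 3, 1],
]
print(region_fractions(demo))
```

Fractions like these can serve as a quick sanity check, for example flagging images where almost no skin pixels were found.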

What can I use it for?

Face parsing supports applications in beauty technology, virtual try-on systems, facial animation, and product recommendation engines. Developers can integrate this model into applications that need to know where each facial region is, such as makeup simulation, hairstyle visualization, or skin analysis tools. The real-time performance makes it suitable for streaming applications and interactive experiences where latency matters. Companies in the beauty, gaming, and virtual reality sectors can use it to add facial region detection and processing to their products.
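As a rough illustration of makeup simulation, the sketch below blends a tint color into pixels whose class id marks the lips. It assumes the image is a grid of RGB tuples and the mask is a same-sized grid of class ids. `LIPS_ID` is a hypothetical value, not the model's documented label index.

```python
LIPS_ID = 3  # hypothetical class id for lips


def tint_region(image, label_map, target_id, color, alpha=0.5):
    """Alpha-blend `color` into every pixel whose label equals `target_id`."""
    out = []
    for img_row, lab_row in zip(image, label_map):
        new_row = []
        for pixel, label in zip(img_row, lab_row):
            if label == target_id:
                # Per-channel linear blend between the original pixel and the tint.
                pixel = tuple(
                    round((1 - alpha) * p + alpha * c) for p, c in zip(pixel, color)
                )
            new_row.append(pixel)
        out.append(new_row)
    return out


# One-row image: a skin pixel (left untouched) and a lip pixel (tinted).
image = [[(200, 150, 140), (180, 90, 100)]]
labels = [[1, LIPS_ID]]
print(tint_region(image, labels, LIPS_ID, color=(200, 0, 50), alpha=0.5))
```

A production pipeline would more likely do this with array operations and soften the mask edges, but the per-pixel logic is the same.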

Things to try

Experiment with both backbones to find the balance between speed and segmentation quality that suits your use case. Test several input resolutions to see how pixel density affects parsing accuracy for different facial features: small faces may need higher resolutions, while large faces often parse well at lower settings. If you run on CUDA hardware, try enabling FP16 precision to improve throughput, and check whether the reduced precision degrades the masks you need. Process face images that vary in ethnicity, age, and lighting to evaluate robustness across demographics and environments.
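The experiments above amount to a grid sweep over backbone, input size, and precision. The sketch below times an arbitrary callable across that grid. `run_model` is a placeholder for however you invoke the model, the backbone strings are assumed identifiers, and the sizes are illustrative picks from the documented 128 to 1024 range.

```python
import itertools
import time


def sweep(run_model, backbones=("resnet18", "resnet34"),
          sizes=(256, 512, 1024), half_options=(False, True)):
    """Time `run_model(backbone, size, use_half)` for every combination."""
    results = []
    for backbone, size, use_half in itertools.product(backbones, sizes, half_options):
        start = time.perf_counter()
        run_model(backbone, size, use_half)
        elapsed = time.perf_counter() - start
        results.append({"backbone": backbone, "input_size": size,
                        "use_half": use_half, "seconds": elapsed})
    # Fastest configurations first.
    return sorted(results, key=lambda r: r["seconds"])


def fake_model(backbone, size, use_half):
    """Placeholder workload; replace with a real call to the model."""
    sum(range(size))


for row in sweep(fake_model)[:3]:
    print(row)
```

This measures latency only. Segmentation quality still has to be judged separately, for instance by comparing the masks from each configuration on the same set of test images.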